CHAPTER 9 UNCERTAINTY

advertisement

F Petri Microeconomics for the critical mind

CHAPTER 9 UNCERTAINTY

chapter9uncertainty 17/02/2016

p. 1

highly provisional and incomplete

This chapter, like ch. 4 on consumer theory and ch. 5 on production, has a Part I which

presents microeconomic notions that do not necessarily need the neoclassical theory of value and

distribution for their validity (and are used in applications essentially in partial-equilibrium

analyses), and a Part II that illustrates how uncertainty is taken account of in general equilibrium

theory, and sketches the possibility of a different approach.

In Part I the central notion is risk aversion. People try to protect themselves against the

possibility of unfavourable events. Giving precision to this intuitive idea has absorbed considerable

energies of economists, mathematicians and philosophers. It has required formalizing the idea of

uncertainty, and the idea of preferences among choices whose outcomes are uncertain to some

degree. I will initially follow the prevalent approach, that assumes that the possible uncertain

outcomes of each choice can be listed exhaustively and that the likelihood of each of the outcomes

is taken as given by the decision maker and measured by a probability of occurrence that satisfies

the usual axioms of probability theory. I will illustrate the most commonly assumed type of utility

function for uncertain outcomes: Von Neumann-Morgenstern expected utility, with decreasing

marginal utility of income. I will use risk aversion, defined on the basis of that utility function, to

explain insurance, risk sharing, diversification, portfolio selection. I will end Part I with some

observations on subjective expected utility, and on current research directions that question the use

of VNM utility functions.

Part II illustrates how uncertainty is dealt with in modern general equilibrium theory. It

covers the notion of Arrow-Debreu general equilibrium with contingent commodities, its

equivalence with a Radner equilibrium, and the issue of incomplete markets. These are briefly

contrasted with the treatment of uncertainty in long-period analyses, both classical and neoclassical.

EXPECTED UTILITY

A prospect is a list of outcomes, one for each possible state of the world. An individual may

have to choose among different actions which entail different prospects. For example, to insure or

not to insure against a house fire; to bet 100 dollars at roulette on black, or on a single number; to

go on a dangerous adventure trip or not; to choose among possible moves at poker.

It is possible to discuss consumer choice among prospects without using probability,

postulating simply a preference ordering among them; I say something on this later. Now I consider

choice among different prospects that consist of different bundles of goods, associated with

different known probabilities of occurrence. Risk, and not uncertainty, in the terminology of Frank

Knight.

In order to analyze risk taking the predominant assumption in the last decades has been that

preferences over risky events can be described through utility functions with a very specific

property, the expected utility form. This is as follows:

Suppose that one must choose among lotteries or gambles that give different known

probabilities of obtaining different outcomes. Outcomes can be vectors of consumption goods, or

sums of money, or happenings (e.g. that someone gets married; or an accident), anything really. The

outcomes can always be reinterpreted as events (sets of states of the world, having some relevant

characteristic in common and differing only in elements irrelevant for the preferences of the

chooser). Example of an event: all possible states of the world in which when to-night at 10:00 pm I

will gamble at roulette the ball will stop on number 22; the relevant common element is the roulette

number; many authors would describe the outcome simply as number 22, but it is always possible

to reinterpret it as an event. But the word outcome clarifies that we are considering events that come

out of a choice or decide whether a gamble was successful.

1

F Petri Microeconomics for the critical mind

chapter9uncertainty 17/02/2016

p. 2

Let u(x) be the utility of outcome x for certain;

let px be the probability of event x;

let there be n possible events (a finite number);

a lottery or gamble L over n events (or outcomes or prizes) assigns a probability of

occurrence to each of them, the sum of their probabilities being 1. It can be represented in different

ways: one is as a double vector, that lists first all the prizes x1,...,xn and then, in the same order, their

probabilities of occurrence p1, p2, ... , pn, that sum to 1. If the possible events are known and ordered

in an unambiguous way, the vector of probabilities alone is sufficient to represent a lottery. Another

representation, adopted e.g. by Varian, and often useful to avoid ambiguities, is

p1◦x1p2◦x2...pn◦xn .

Among the outcomes of a lottery there can be other lotteries, e.g. one can have, with A, B, C

three outcomes or events:

L = p1◦Ap2(p3◦Bp4◦C) where p1+p2=1, p3+p4=1.

A lottery with lotteries among the prizes is said compound, otherwise simple. The reduced

lottery corresponding to compound lottery L is defined as the simple lottery L’ stating the final

probabilities of occurrence of each event:

L’=p1◦Ap2p3◦Bp2p4◦C.

If it had been A=C, the reduced lottery would have been L”=(p1+p2p4)◦Ap2p3◦B. Adopting

the vector representation: if you have n possible ordered events and k simple lotteries over them

L1=(p11, ..., pn1), L2=(p12, ..., pn2), ... , Lk=(p1k,...,pnk), and if a compound lottery CL has these simple

lotteries as outcomes with probabilities (q1,...,qk), the reduced lottery LR(CL,L1,...,Lk) with the n

events as prizes is the vector of probabilities (s1,...,sn) where si=pi1q1+pi2q2+...+pikqk.

In order to minimize ambiguities I use the Varian representation of lotteries. Other treatises

prefer to indicate the lottery pA◦ApB◦B ... pN◦N as pAA+pBB+...+pNN. The justification for

this representation is the following. Remember that, once the outcomes are ordered in an

unambiguous way from first to last, a simple lottery can be specified as simply the vector of

probabilities of the outcomes; now, an outcome can be considered (and we will consider it) identical

to the certainty of that outcome, which in turn can be considered equivalent to a ‘degenerate’ lottery

that assigns probability 1 to that outcome and probability zero to all other possible outcomes[1]. All

outcomes can be considered degenerate lotteries, and simple lotteries can then be seen as in fact

compound lotteries. Consider a simple lottery pA◦ApB◦BpC◦C, where pA+pB+pC=1, which

implies that A, B and C are the only possible events considered; let the outcomes be listed in the

order A,B,C; the representation of this lottery as the vector of probabilities of the outcomes is (pA,

pB, pC). Now redefine the symbols A, B, C to stand for the vectors that represent the three outcomes

as degenerate lotteries: A≡(1,0,0), B≡(0,1,0), C≡(0,0,1); then (pA, pB, pC) = pAA+pBB+pCC.

Assume that the chooser assigns a definite utility level to each event and to each lottery; if

the xi’s represent consumption vectors or incomes, assume that the utility level of each xi would be

the same if it had occurred in a different state of the world.

Definition: the chooser has utility (over lotteries) of the expected utility form or of Von

Neumann-Morgenstern (VNM) form if the utility of a lottery that assigns probability p1 to event x1,

probability p2 to event x2, ..., probability pn to event xn, can be represented as

[9.1] u(x1,...,xn; p1,p2,...,pn) = p1u(x1)+p2u(x2)+...+pnu(xn).

A utility function (over lotteries) of expected utility form is called expected utility, or Von

Neumann-Morgenstern utility from the name of the originators of the notion, or also Bernoulli

utility function. The expected utility of a lottery is the sum of the expected values (in the

probabilistic/statistical sense) of the utilities of the different events, and a lottery is preferred to

1

The equivalence of an outcome, of the certainty of that outcome, and of its degenerate lottery is

sometimes listed among the axioms of the theory (cf. axiom L1 in Varian 1993); other authors, e.g. Owen,

apper to consider the equivalence a definition, and therefore not needing an explicit axiom.

2

F Petri Microeconomics for the critical mind

chapter9uncertainty 17/02/2016

p. 3

another one if and only if its expected utility is greater. Sometimes it is useful to distinguish the

function u(x1,...,xn; p1,p2,...,pn) from the functions u(xi) by indicating the first one with a different

symbol, e.g. U(·); but in the present case, where all the u(xi)’s are the same function, the use of a

different symbol can be misleading in that, if event xi is a lottery, u(xi) has exactly the same

functional form as U(·). Still, sometimes it is useful to have a name for u(xi) different from the

name of U(·): common terminologies are to call u(xi) the felicity function or the basic utility

function, and to call U(·) the VNM utility or overall utility function.

Of course if the Von Neumann-Morgenstern (VNM) utility function correctly represents

preferences, any increasing monotonic transformation of it correctly represents the same

preferences, but it is convenient to accept only transformations that maintain the expected utility

form (thus maintaining its convenient additive, i.e. strongly separable, form), and therefore only

affine transformations from u(ℒ) to v(ℒ)=au(ℒ)+b with a, b scalars and a>0.

Preferences admit representation via a VNM utility function when they satisfy certain

axioms. The axioms one starts from can be different, and pedagogical proofs can be considerably

simplified by assuming as axioms some propositions that in fact might be derived from the other

axioms. The minimal number of important axioms (that is, besides those establishing that

preferences over lotteries are complete[2], reflexive, and transitive; that a consumer considers the

degenerate lottery that assigns probability 1 to one outcome as the same as getting that outcome for

certain; that the consumer doesn’t care about the order in which a lottery is described), is three.

They are:

1. Equivalence axiom

2. Continuity axiom

3. Independence axiom.

Equivalence (of reduced lotteries) axiom: the chooser considers a compound lottery and its

corresponding reduced lottery as the same lottery (or at least she is indifferent among them)[3].

It must be noted that empirical evidence is not always in accord with this axiom; sometimes

people choose differently depending on how a choice among lotteries is presented. We will neglect

this fact, that seems of very limited relevance for normal economic choices.

An implication of this axiom is that one can always replace a simple lottery over more than

two outcomes with a compound lottery over two outcomes, some of them being in turn lotteries.

Thus the lottery pA◦ApB◦B(1–pA–pB)◦C is equivalent to the lottery

pA

pB

A

B ] (1–pA–pB)◦C .

(pA+pB)◦[

p A pB

p A pB

Continuity axiom: The preference relation ≿ on the space of simple lotteries ℒ is

continuous, that is, for any three lotteries A, B, C ℒ the sets {p[0,1]: p◦A(1–p)◦B ≿ C} and

{p[0,1]: C ≿ p◦A(1–p)◦B} are closed[4].

We assume complete comparability i.e. we exclude noncomparability – a rather strong assumption,

stronger than for choices under certainty, since one may be facing rather complex compound lotteries.

3

This appears as assumption L3, p. 173, in Varian 1993, and as 7.2.2 in Owen 1995. It may be

interesting for the student to compare different proofs, and to understand whether the method of proof relies

on a very different approach, or is the same behind apparent differences: the latter is the case for the

differences between the proof here and the one in Varian 1993. This axiom implies that lotteries by

themselves are not objects of preferences, only the events that are their (final) prizes are.

4

This appears as axiom U1, p. 174, in Varian 1993.

2

3

F Petri Microeconomics for the critical mind

chapter9uncertainty 17/02/2016

p. 4

This axiom states that if a lottery p◦A(1–p)◦B is strictly preferred to an outcome (which

can be a lottery), a sufficiently small change in p will not invert the preference order. An example of

practical relevance that illustrates its concrete meaning is the following: if a trip with zero

probability of a serious accident (e.g. death) is strictly preferred to no trip, the same trip with a

positive but sufficiently small probability of a serious accident is still preferred to no trip.

Essentially, the axiom rules out lexicographic preferences. It appears acceptable in the generality of

cases.

The third axiom is more contentious; it is justified as follows: consider a lottery p◦A(1–

p)◦B; whether this lottery is preferred to another lottery should not be affected by replacing A with a

C such that the consumer is indifferent between A and C, because the consumer should find that

p◦A(1–p)◦B ~ p◦C(1–p)◦B: this is because, differently from the case in usual consumer theory,

relationships of substitutability or complementarity between A and B, and between C and B, are

irrelevant here since the consumer is not going to consume A and B or C and B: A and B are

alternative possibilities, and the same for C and B[5].

Independence axiom: The preference relation ≿ on the space of simple lotteries ℒ satisfies

independence, that is, for any lottery A, B, C ℒ and for p(0,1) it is

A≿B if and only if p◦A(1–p)◦C≿ p◦B(1–p)◦C.

This is sometimes called the substitution axiom. It can be shown (cf. Appendix) that from

this axiom one can derive the strong-preference and the indifference versions of the same axiom;

these are sometimes for simplicity directly assumed as axioms in place of the original axiom:

[9.2] A≻B if and only if p◦A(1–p)◦C≻ p◦B(1–p)◦C.

[9.3] A ~ B if and only if p◦A(1–p)◦C ~ p◦B(1–p)◦C[6].

The Independence axiom and its implications [9.2] and [9.3] assume that if we have two

lotteries L and M with L M then for any positive probability p and any third lottery C it is

p◦L(1–p)◦C p◦M(1–p)◦C, and the same preservation of ranking holds if L ~ M. Replacing the

third lottery with any other one, even with L or M, does not alter this preservation of ranking.

From these axioms one derives:

Monotonicity-in-Probabilities (M-in-P)Lemma: if AB and p’> p with p, p’ (0,1), then

(i) A p◦A(1–p)◦B,

(ii) p◦A(1–p)◦B B

(iii) p’◦A(1–p’)◦B p◦A(1–p)◦B, [7]

and conversely if p’>p with p, p’ (0,1) and A p◦A(1–p)◦B or p’◦A(1–p’)◦B p◦A(1–p)◦B,

then A B.

5

However, preferences (being ex ante relative to the resolution of uncertainty) might be influenced

by some psychological connection between outcomes; so it cannot be excluded, it seems, that one may prefer

to be given the possibility of getting A or B, rather than C or B, in spite of the fact that, if one had to choose

between A for certain and C for certain, one would be indifferent.

6

[9.3] appears in a slightly different form (because referred to a best lotter b and a worst lottery w,

whose existence is assumed by Varian but not here) as U2, p. 174, in Varian 1993.

7

This result (referred to lotteries with the best and the worst outcome as prizes) is assumed for

simplicity by Varian 1993 as an axiom, U4 p. 174; Varian says that it can be derived from the earlier axioms

he has listed, but does not give the proof, that would not have been easy because his axioms include neither

the Independence axiom as stated here (i.e. with the weak inequality sign) nor our [9.2], Varian only lists our

[9.3].

4

F Petri Microeconomics for the critical mind

chapter9uncertainty 17/02/2016

p. 5

Proof. The trick is to use B or A in place of C in [9.2]. Inequality (i) derives from the fact that AB

and 9.2 imply A = (1–p)◦Ap◦A (1–p)◦Bp◦A for all p(0,1). Inequality (ii) analogously derives from

p◦A(1–p)◦Bp◦B(1–p)B=B. Now define π = [(p’–p)/(1–p)] (0,1), admissible since p<p’<1; then

p’◦A(1–p’)B=π◦A(1–π) ◦(p◦A(1–p)◦B); now let L stand for the compound lottery p◦A(1–p)◦B which

by (i) we know to be worse than A; then in (ii) we can replace B with L, and p with π, obtaining π◦A(1–π)

◦L L, that is, p’◦A(1–p’)◦B p◦A(1–p)◦B, that proves (iii). Conversely, A=p◦A(1–p)◦Ap◦A(1–

p)◦B implies AB by 9.2, and p’◦A(1–p’)◦B p◦A(1–p)◦B can be re-written π◦A(1–π)◦(p◦A(1–p)◦B)

p◦A(1–p)◦B with π defined as above, or π◦A(1–π)◦LL that implies AL and hence AB. ■

It is furthermore possible to derive from the above axioms the following result, sometimes

directly assumed as an axiom, usually with the name Archimedean axiom:

Archimedean ‘axiom’: Let A, B, C be outcomes such that A≻ C≻ B. Then there exists some

p* (0,1) such that p*◦A(1–p*)◦B ~ C[8].

(I write ‘axiom’ in inverted commas because here it is not an axiom, it is derived from other

axioms). It is in the proof of this result that the Continuity axiom is relevant.

Proof. The two sets {p[0,1]: p◦A(1–p)◦B ≿ C} and {p[0,1]: C ≿ p◦A(1–p)◦B} are closed and

nonempty (each one contains at least 0 or 1), and every point in [0,1] belongs to at least one of the two sets

because of completeness of the preference order. Since the unit interval is connected[9], there must be some p

belonging to both sets, and at that p the lottery p◦A(1–p)◦B must be equipreferred[10] to C since the weak

inequality holds both ways. This result only needs weak inequalities, but if the inequalities are strict, A C

B, then we can add that the common p cannot be 0 or 1 because then the lottery p◦A(1–p)◦B would be

equivalent to A for certain or to B for certain, which – given the assumption that A C B – would render the

equipreference between the lottery and C impossible. ■

We prove now:

Theorem. Uniqueness of the equipreference probability. The p* in the Archimedean

‘axiom’ is unique[11].

Proof. For any number s in [0,1] different from p*, if p*>s then, by result (iii) in the M-in-P Lemma,

C ~ p*◦A(1–p*)◦B s◦A(1–s)◦B ; and if s>p* then s◦A(1–s)◦B C. ■

I also present the proof of this result by Owen (Theory of Games, 1995, p. 153) that does not assume

result (iii) of the M-in-P Lemma and actually proves the latter lemma in a different way. Take any number s

in [0,1] different from p*. We want to show that it cannot be s◦A(1–s)◦B ~ C. We already know that it

cannot be s=0 or s=1 (see the end of the proof of the Archimedean 'axiom') so take s(0,1). Assume s<p*.

Then 0 < p*–s < 1–s and therefore, since (by the Equivalence axiom) we can write B =

8

This corresponds to result (1) p. 175 in Varian 1993.

A set is connected if it is possible to connect any point of the set to any other point of the set with a

continuous curve consisting entirely of points of the set. Each point of a continuous curve is a point of

accumulation along the curve from either direction; hence if a continuous curve in a connected set S goes

from a point of a subset F of S to a point not in F but in another subset H of S , with F and H both closed and

connected and such that FH=S, then any point of the curve not in F is in H, and there must be a point of the

curve which is a frontier point of F and also an accumulation point for H, so by the definition of closed set it

belongs to H and therefore to both subsets.

10

Some economists (e.g. Varian) would write ‘indifferent to C’, using the adjective ‘indifferent’ in

sentences like “x is indifferent to y” to mean “the consumer is indifferent between x and y”. This ugly

deformation of English is avoided by other authors who write in the same sense “x is equivalent to y”; I

prefer to use in the same sense ‘equipreferred’, that clarifies that one is talking about preferences.

11

This corresponds to result (2) p. 175 in Varian 1993.

9

5

F Petri Microeconomics for the critical mind

chapter9uncertainty 17/02/2016

p. 6

1 p *

p * s

B

B and by assumption AB, it follows (by [9.2] with C=B) that

1 s

1 s

1 p *

p * s

A

B B. (This proves (ii) in the M-in-P Lemma.) Then by [9.2]

1 s

1 s

1 p *

p * s

A

B s◦A(1–s)◦B. But the reduced lottery corresponding to the

s◦A(1–s)◦

1 s

1 s

1 p *

p * s

A

B is p*◦A(1–p*)◦B, hence:

compound lottery s◦A(1–s)◦

1 s

1 s

C ~ p*◦A(1–p*)◦B s◦A(1–s)◦B. (This proves (iii) in the M-in-P Lemma.)

The case s>p* works in the same way with the inequalities reversed (and proves (i) in the M-in-P Lemma) ■

Now I build a utility function for preferences satisfying these axioms and show that it has

the expected utility form, following Owen (1995).

Theorem. Existence of expected utility. Under the axioms listed so far there exists a

function u that maps the set of all outcomes into the real numbers, such that for any two outcomes A

and B and any p[0,1]:

[9.4] u(A) > u(B) if and only if AB

[9.5] u(p◦A(1–p)◦B) = pu(A) + (1–p)u(B).

This function is unique up to an affine transformation, i.e. if there exists a second function v that

satisfies 9.4 and 9.5 for the same preferences, then there exist real numbers α>0 and β such that for

all outcomes A

[9.6] v(A) = αu(A) + β .

Proof (partial). The complete proof is long and I shall only give parts of it, sufficient to point out its

basic principle, which is most simply explained by assuming that there exist two outcomes E1 and E0 such[12]

that E1 E0 and initially restricting ourselves to outcomes A (which can be lotteries) such that E1 A E0.

Then by the Archimedean axiom there exists a probability s(0,1) such that s◦E1(1–s)◦E0 ~ A. This

probability s is chosen as the numerical value of u(A) relative to the reference outcomes E1 and E0. If there

exists a best outcome and a worst outcome, it is possible to choose them as reference outcomes, and then all

outcomes will either satisfy E1AE0, or E1~A in which case we put u(A)=u(E1)=1, or E0~A in which case

we put u(A)=u(E0)=0. One can then prove that u thus defined satisfies the conditions of the theorem (see

below). But one need not choose the best and worst outcomes (even when they exist) as reference outcomes,

it is possible to choose any couple of outcomes such that E1E0, then an outcome A can be preferred to E1 or

can be worse than E0, but one can still assign values to u(A) connected with probabilities determined by the

Archimedean axiom, and such that 9.4, 9.5 and 9.6 are satisfied, in the way shown below. This makes it

possible to treat cases in which there is no best or no worst outcome. (The case in which there are no two

outcomes E1 and E0 such that E1E0 is uninteresting, it is the case when for all outcomes A and B it is A~B,

then we can simply assign u(A)=0 for all events, the conditions of the theorem are satisfied.)

The rules to assign u(A) for all the five possible cases are as follows:

(a) AE1. Then there exists q(0,1) such that q◦A(1–q)◦E0 ~ E1. We define u(A)=1/q, which is >1.

(b) A~E1. We define u(A)=u(E1)=1.

(c) E1AE0. Then there exists s(0,1) such that s◦E1(1–s)◦E0 ~ A. We define u(A)=s.

(d) A~E0. We define u(A)=0.

(e) E0A. Then there exists t(0,1) such that t◦A(1–t)◦E1 ~ E0. We define u(A)=

t 1

, which is

t

negative.

12

These symbols E1, E0 should not be confused with the expectation operator.

6

F Petri Microeconomics for the critical mind

chapter9uncertainty 17/02/2016

p. 7

Note that if we are given u(A) we know the case to which A belongs. The proof that u as defined in

cases (a) to (e) for two outcomes A and B satisfies conditions 9.4 and 9.5 is quite lengthy, depending on the

case to which each of the two outcomes belongs: given the irrelevance of the order in which outcomes are

listed, there are 15 possible combinations. Only one combination will be examined here, (c,c). The other

combinations except (e,e) are examined in the Appendix. Case (e,e) is left for the reader to examine as an

Exercise.

Assume then the case (c,c) and that u(A)=sA, u(B)=sB. If sA=sB then A~sA◦E1(1-sA)◦E0~B so A~B.

If sA>sB then sA◦E1(1-sA)◦E0 sB◦E1(1-sB)◦E0 and therefore AB. Analogously if AB it must be sA>sB

where sA◦E1(1-sA)◦E0 ~A and sB◦E1(1-sB)◦E0 ~B, otherwise it could not be sA◦E1(1-sA)◦E0 sB◦E1(1sB)◦E0. Therefore u satisfies 9.4.

To prove 9.5, consider any p(0,1). By the Equivalence Axiom and the definitions of sA, sB:

p◦A(1–p)◦B ~

~ p◦[ sA◦E1(1-sA)◦E0] (1–p)◦[ sB◦E1(1-sB)◦E0] ~

~ (psA+(1–p)sB)◦E1 [p(1–sA)+(1–p)(1–sB)]◦E0 .

Hence the utility u of the lottery p◦A(1–p)◦B is psA+(1–p)sB; since sA=u(A) and sB=u(B):

u(p◦A(1–p)◦B) = pu(A) + (1–p)u(B)

and 9.5 is satisfied.

There remains to prove that u is unique up to an affine transformation with a>0. Let v be any other

function satisfying 9.4 and 9.5. Since E1E0 it must be v(E1)>v(E0). Define β=v(E0), and α=v(E1)–v(E0) (that

satisfies α>0). Consider an outcome A of case (c), that is, such that E1AE0. Let u(A)=s where A ~

s◦E1(1–s)◦E0. Therefore v(·) must assign the same number to A and to s◦E1(1–s)◦E0; which by the

expected utility form implies:

v(A) = v(s◦E1(1–s)◦E0) =

= sv(E1) + (1–s)v(E0) =

= s(α+v(E0)) + (1-s)v(E0) =

= s(α+β) + (1-s)β = sα + β = αu(A) + β.

Hence v(·) satisfies 9.6.

With similar reasonings it can be shown that v(·) satisfies 9.6 also for A falling in the other cases (a),

(b), (d), (e). For example in case (a) we define α and β in the same way, and from E1~ q◦A(1–q)◦E0,

u(A)=1/q, v(E1) = v(q◦A(1–q)◦E0) = qv(A)+(1–q)v(E0) we deduce

α = v(E1)–v(E0) = qv(A)–qv(E0) = qv(A)–qβ,

v(A) = (α+qβ)/q = α

1

+ β = αu(A) + β. ■

q

The above considerations extend straightforwardly to cases in which the outcomes are more

than two but finite in number. If the probability of outcome n is pn, with n=1,..., N, then the

expected utility of this lottery is

N

p u ( n) .

n 1

n

We will not discuss the technical complications connected with continuous probability

distributions. It can be shown that under essentially the same axioms, if a lottery consists of a

continuous probability distribution defined on a continuum of outcomes x, then the expected utility

of this lottery is u(x)p(x)dx . We will not have occasion to deal with continuous probability

distributions in this text.

It is often said that the expected utility form implies that the utility function is cardinal,

rather than simply ordinal; in other words, that it makes sense to say that the amount by which the

utilities of two lotteries differ is greater, or smaller, than the amount by which the utilities of other

two lotteries differ (just like one can say that the difference in temperature between two days is

greater, or smaller, than the difference in temperature between two other days), a statement

generally considered to make no sense for the usual ordinal utilities of consumer choice. However,

7

F Petri Microeconomics for the critical mind

chapter9uncertainty 17/02/2016

p. 8

it should not be forgotten that the Cobb-Douglas utility function, if written in logarithmic form,

u(x1,x2)=α ln x1 + (1–α) ln x2, is as ‘cardinal’ as an expected utility function in terms of a single

scalar, say income; indeed it is often assumed, when considering expected utility of lotteries whose

outcomes are incomes, that the basic utility of income is logarithmic, in which case if one faces a

lottery that, depending on the result (say) of a random extraction, assigns a quantity of income x 1

with probability α and a quantity of income x2 with probability 1– α, then the expected utility

function perfectly coincides with the Cobb-Douglas just written. Just like for the Cobb-Douglas, for

expected utility too some monotonic transformations (e.g. raising it to the power of 3) would cause

one to lose the additive separable form, and one does not adopt them because less convenient. What

the axioms behind the expected utility form imply is only a strongly separable utility function,

linear in the probabilities, but the form of the basic utility function u(·) remains generally nonlinear. An analogous additive separability holds also for the quasilinear utility function used to

formalize the assumption of constant marginal utility of money.

Why this utility function? Its existence relies on axioms which are not, at least at first sight,

wildly implausible. For example, the fundamental Independence Axiom essentially says this:

suppose you face a lottery p◦A(1-p)◦B; since you are going to get A or B, relationships of

complementarity or ‘anticomplementarity’ (having A makes B less agreeable) between the two

prizes, which have an important role in usual consumer theory, are not going to be relevant, so if

there is another outcome C that you find perfectly equipreferable to A, it stands to reason that it

makes no difference to you if C replaces A in the lottery – indeed it might be argued that if it does

make a difference to you then you are not truly indifferent between A and C. And for the same

reason if C is preferable to A, then replacing A with C should make the new lottery preferable to the

old one. However, there is experimental evidence and introspective evidence suggesting that often

people do not respect the axioms behind the expected utility function. Read the excellent chapter on

cognitive limitations and consumer behaviour in Robert Frank's intermediate textbook

Microeconomics and Behaviour (it is ch. 8 of the 6th edition).

So let us briefly point out that one can go some way without assuming such a specific form

of the utility function.

Preferences over goods and lotteries of goods can be represented through a utility function

as long as one makes the same assumptions as for sure goods, i.e. completeness, reflexivity,

transitivity, and continuity.

I show now that one does not need expected utility to define risk aversion.

If offered (for free) a bet that gives a 50% chance of winning 1000 euros and a 50% chance

of losing 1000 euros most people will refuse the bet. It must mean that the utility of the lottery

1/2◦(initial wealth + 1000)1/2◦(initial wealth – 1000) is less than the utility of initial wealth, i.e. is

less than the utility of obtaining the expected value of the lottery for certain.

This empirical fact is not always verified, for example people buy lottery tickets or play

roulette, in spite of knowing that the gambles associated with them are not fair, that is, that the

expected value of the variations in wealth associated with the gambles is negative[13]; but this

aversion to accept fair bets is verified in many cases of economic interest, and is the basis for the

explanation of phenomena like insurance or portfolio diversification. Therefore we proceed to

define it.

RISK AVERSION

Let us formalize uncertainty as ignorance as to which one, of an exhaustive and perfectly

known list of possible future states of the world, will come about. Uncertainty can be about

13

In many state lotteries the amount of money given back to lottery ticket buyers as prizes is a third

or less of the money paid by the buyers.

8

F Petri Microeconomics for the critical mind

chapter9uncertainty 17/02/2016

p. 9

contemporaneous facts, e.g. about whether there is oil under a certain portion of earth surface, but

its resolution will be always associated with some future time and state of the world (e.g. states

distinguished by which information will come out on whether there is oil or not in that place).

States of the world, or simply states for brevity, are distinguished according to the variables

of interest. If several variables are needed to distinguish states, the total number of states increases

rapidly. For example if, in order to forecast the total output of tomatoes in a nation in a certain year,

states of the world are distinguished according to 10 possible climatic states (that describe rainfall,

temperature etc.) in each of 3 regions, one distinguishes 103=1000 states.

Decisions on how to act, e.g. how to spend one’s money, may depend on the likelihood one

assigns to future states. The decision by a small town mayor to buy a snowplough may depend on

how likely and frequent she esteems heavy snowfalls will be.

One can distinguish commodities according to the state with which they are associated. A

standard example is an umbrella when it rains and when it doesn’t; another one is an ice cream

when it is hot and when it is cold; or, a vacation when one is healthy, and when one is ill.

Sometimes it is possible to stipulate contracts for delivery of a good if and only if a certain

state occurs. E.g. if one pays in advance for tow-away assistance insurance, the service will be

provided if and only if the car breaks down. Insurance contracts are all basically of this type: one

pays for delivery of a service or of an amount of money conditional on a certain event having

occurred. A good associated with a specified state of the world (or a specified event, by which we

mean a set of states of the world) that may or may not occur is called a state-indexed commodity or

also a contingent commodity.

Sometimes it is possible to buy a contingent commodity, that is, to buy a promise of

delivery of a commodity conditional on the realization of the state to which the commodity is

associated. But whether this is possible or not, state-indexed commodities are a useful way to

formalize decisions under uncertainty.

Thus assume that one is concerned about the amount of a certain good (e.g. income) that

will be available depending on the state of the world. Assume there is a finite number S of possible

states of the world, in each one of which the amount xs of income can take many values[14]. The

basic assumption is that the chooser has a preference ordering over the vectors x =(x1,...,xs,...,xS) of

amounts of the good available in the several alternative states. If e.g. there are only two states, if the

good is divisible, and if its amounts are nonnegative, the set of vectors on which preferences are

defined is the set of points in R2 . This simple case will often allow us to reach a sufficient grasp of

many issues.

These vectors x =(x1,...,xs,...,xS) are formally totally analogous to usual consumption

vectors[15]. So we can suppose that preferences over these vectors are complete, reflexive,

transitive, continuous and monotonic, in the sense of ch. 4. Then there will be downward-sloping

indifference curves; these may indicate for example that, if the outcome is an amount of a single

good (e.g. income) the chooser is indifferent between the vector (10 units of the good if state 1

occurs, 10 units if state 2 occurs) and the vector (12 units if state 1 occurs, 9 units if state 2 occurs).

Note that to define these preferences and indifference curves we do not need probabilities.

However, if probabilities of occurrence of the states are known, and if preferences can be

represented via a utility function which is state-independent (that is, assigns the same utility to a

certain level of income whatever the state in which that income is obtained) and has the expectedutility form, then we can say something interesting about the slope of the indifference curves when

– restricting ourselves now to the one-good (wealth), two-states case – they cross the 45° line, cf.

Fig. 9.1. The 45° line is called the certainty line because its points represent amounts of wealth that

14

This means that income is not one of the elements that distinguish states. Alternatively, one can

distinguish states according also to income, and then group them into events that collect states sharing

aspects that do not include income: with this convention, the number S will refer to events and not to states.

15

It should be obvious that this remains true if the single good xs is replaced by a vector of goods.

9

F Petri Microeconomics for the critical mind

chapter9uncertainty 17/02/2016

p. 10

coincide across states, will be available independently of which state occurs, and therefore are

certain. The utility of a point w=(w1,w2) on the 45° line (w1=w2=w) is therefore U(w)= pu(w1)+(1–

p)u(w2)=u(w), independent of the probabilities p, 1–p of occurrence of the two states. Now consider

displacements along the indifference curve that passes through w, that take one to (w+x1, w+x2).

Let us determine the slope of the indifference curve i.e. the derivative x2'=dx2/dx1 at the certainty

line.

x2 (income in

state 2)

y▪

■

E(x)

C(x)▪

slope –p/(1–p)

■

x

45°

O

x1 (income in state 1)

Fig. 9.1

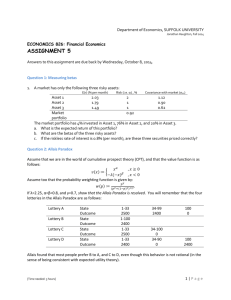

There are two possible states of the world in each one of which the amount of income can

take any of a continuum of values; the Figure shows how, given the probability of the two

states and a vector (a lottery) x, one can derive the lottery’s expected value E(x) and,

from the individual’s indifference curve through x, its certainty equivalent C(x). It also

shows that if the individual starts on the certainty line then with strictly convex

indifference curves he will not accept fair bets (e.g. if he starts at C(x) he will not accept a

bet corresponding to point y).

Differentiate with respect to x1 in x1=x2=0 both sides of

pu(w+x1)+(1-p)u(w+x2(x1)) = u(w).

u ( w x1 )

u ( w x2 ( x1 )) dx2

(1 p)

pu '(1 p)u ' x2 ' 0

We obtain p

x1

x2

dx1

(The left-hand side can be re-written as shown, with the two u' equal, because they are

determined for the same initial value of u, and expected utility implicitly assumes that the consumer

does not care about the state by itself, she cares only about the income she gets.) This implies

x2'(0) = –p/(1–p).

This is true at all points on the certainty line.

Thus in this case the absolute slope of the indifference curve at the certainty line measures

the ratio between the probabilities of the two states. Tracing a line with that slope, all points (w+x1,

w+x2) along the line have the same expected value because x2/x1= –p/(1–p) implies (1–p)x2= –px1

i.e. E(x)=0. If now we intend by x the vector indicating a point in the state plane, and not the vector

of displacements from a point on the certainty line, we can find the expected value E(x) of each

vector x by tracing a line through x with slope –p/(1–p) and finding its intersection with the

certainty line. On the other hand we can find the certainty equivalent C(x) of any vector x as the

certain amount of the good that yields the same utility as x, that is, as the point where the

indifference curve through x crosses the certainty line.

We can then state: if indifference curves are strictly convex, then the expected value of any

vector not on the certainty line is greater than the certainty equivalent of that vector[16]. This form

16

Note that the analysis is not restricted to a single good (e.g. income). Interpret y as a vector of

vectors yω of consumption goods in different states of the world ω, where yjω is the amount of consumption

good j in state of the world ω. Suppose there is a given probability vector p that assigns probability pω to

10

F Petri Microeconomics for the critical mind

chapter9uncertainty 17/02/2016

p. 11

of indifference curves seems to be a good way to explain the situations in which choosers reject an

actuarially fair bet, which is the name given to the payment of an amount of money M to buy the

right to the result of a lottery of prizes whose expected value is M. For example you may bet $10

dollars that the toss of a fair die will come out with a 2, in which case (and only in that case) you

win $60; the probability of a 2 being 1/6, the expected value of $60 is $10; the bet is fair. Suppose

there are two states of the world distinguished by the outcome of a roulette spin (e.g. number 10

comes out, or it doesn’t); the initial situation of the consumer is a quantity x of money for certain,

i.e. the consumer is on the certainty line at some point x=(x1=x, x2=x); the consumer can bet a

certain amount of money on number 10 coming out (state 1) and, if it does, she gets an amount of

money whose expected value equals the bet. A fair bet displaces the consumer to a vector y with the

same expected value as x, and therefore below the indifference curve through x if indifference

curves are strictly convex[17]. This remains true with more than two states and with the vector x

containing more than two commodities. We then define:

Risk aversion. An individual is risk averse (i.e her preference relation is said to exhibit risk

aversion) if indifference curves (or, in the case of many states and goods, indifference

hypersurfaces) relative to state-indexed goods are strictly convex. The definition is local or global

depending on whether the convexity of indifference curves holds locally or globally.

This definition of risk aversion does not need the existence of well-defined probabilities for

the states, nor of state-independent utility, only of indifference curves (or surfaces). If probabilities

exist and utility has the expected utility form then the definition implies E(x)>C(x) for x not on the

certainty line. The converse is not true, one might have indifference curves shaped as in Fig. 9.1bis,

these imply E(x)>C(x) in spite of being convex only in a neighbourhood of the certainty line.

If one is indifferent between a lottery and obtaining its expected value for sure, one is said to

be risk neutral. If one prefers a lottery to its expected value, one is said to be risk loving. If

probabilities are not defined and one only has the map of indifference curves, risk neutrality means

straight indifference curves, and risk love means concave indifference curves (again, locally or

globally).

x2 (income in

state 2)

C(x)▪

■

x

45°

O

x1 (income in state 1)

each state of the world ω, ∑ωpω=1; so the couple (y,p) is a lottery over vectors of consumption goods. The

sure prospect y* corresponding to (y,p) is a vector of consumption goods, the same in all states of the world,

with components yj*=∑ωpωyjω i.e. the amount of each consumption good is the expected value of that

consumption good. We can define the risk premium rate as the number ρ such that the sure prospect (1-ρ)y*

has the same utility as the lottery, u((1-ρ)y*)=u(y,p). Note that this definition of the risk premium rate does

not require that the utility function over lotteries has the VNM form.

17

Cf. y in fig. 9.1. Then does risk aversion not apply to roulette gamblers? It may well apply, and yet

be compensated by the pleasure itself of gambling (the adrenaline, the decor of the casino, etc.). We are

implicitly assuming that it is only the results of the lotteries that affect preferences, but this is often untrue.

Another possibility is that the gambler believes she knows a way of beating the house.

11

F Petri Microeconomics for the critical mind

chapter9uncertainty 17/02/2016

p. 12

Fig. 9.1bis

Now let us discuss risk aversion relative to income levels, assuming utility has the expected

utility form.

Assume a single good, income, perfectly divisible. Assume a utility of income u(x); the

utility of any income lottery ℒ=p◦x1(1–p)◦x2 is u(ℒ)=pu(x1)+(1–p)u(x2). Risk aversion is defined

as: obtaining for sure the expected value of the lottery is preferred to the lottery:

u(E(ℒ))>u(ℒ) for x1≠x2.

This must mean that u(x) is (strictly) concave, that is, the marginal utility of income is

decreasing[18]; thus when income is the payoff, risk aversion and decreasing marginal utility of

income are equivalent ways to characterize the situation.

graph

u(x*)=u(E(ℒ))

u(ℒ)

A

u(x)

u^(x)

x*=p(x*+a)+(1-p)(x*-b)=x*+pa-(1-p)b,

pa-(1-p)b=0 , b/a=p/(1-p)

B

x*

x2 C(ℒ)

x1

Fig. 9.2

Given a lottery with prizes corresponding to points A and B, its mean x* depends on (and therefore

reveals graphically) the probability p, and once determined it allows describing the prizes as x*+a and x*-b.

The certainty equivalent C(ℒ) is the quantity of wealth yielding the same utility as ℒ .

This has the following interesting interpretation. Consider a consumer who faces a lottery ℒ

yielding wealth x1 with probability p and wealth x2 with probability 1-p. Represent utility as a

concave function of wealth x, and indicate as A and B the points on this function corresponding to

x1 and x2. The expected value E(ℒ)= x* = px1+(1-p)x2 is a point on the abscissa between x1 and x2,

whose position depends on p, moving toward x1 as p increases. (If we indicate x1=x*+a, x2=x*-b,

The meaning of ‘decreasing marginal utility of income’ may appear obscure to people accustomed

to the usual utility function of consumer theory only defined up to an increasing monotonic transformation,

so that marginal utilities can be decreasing or increasing depending on the transformation one applies. But

we are dealing here with preferences representable through an expected utility function, which was shown

earlier to be unique up to an affine transformation. Now if v(x)=αu(x)+β, the sign of the second derivative is

not affected by the affine transformation; thus, to say that u’(x) is decreasing in x means that all

transformations of u(x) that maintain the expected utility form will have the same property. However, since

other transformations, that still describe the same preferences but without maintaining the expected utility

form, may not have this property, one can still ask for the concrete meaning of the restriction. This is that if

18

one faces a lottery L with different levels of income as prizes, then u(E(ℒ))>u(C(ℒ)): it is only relative to the

straight line connecting two different income-utility points (and representing therefore different expectedincome–expected-utility points depending on probabilities) in Fig. 9.2 that the concavity or not of u(x) makes

a difference. If utility depends on a vector of consumption goods, and their prices are given, the utility of

income can be taken to be the indirect utility function (uncertainty about prices can then be treated through

different prices distinguishing different states of the world), and a different meaning of decreasing marginal

utility of income can be stipulated as a decreasing (absolute) rate of substitution between income and some

assigned bundle of goods as income increases; but this has no necessary connection with a decreasing

marginal utility of income in the expected utility function.

12

F Petri Microeconomics for the critical mind

chapter9uncertainty 17/02/2016

p. 13

we can also interpret the lottery as resulting from initial wealth w=x* and acceptance of a fair

gamble offering a win a with probability p, or a loss b with probability 1-p.) The expected utility of

the lottery is u(ℒ)= pu(x1)+(1-p)u(x2) and is determined graphically by the point corresponding to

x* on the segment joining points A and B. On the contrary the utility of wealth x* for sure is

determined, graphically, by the point on the curve u(x) corresponding to x*; u(x*) = u(E(ℒ)) is the

utility of the expected value of the lottery, and it is greater than u(ℒ) since u(x) is strictly concave,

cf. Fig. 9.2 where p=1/2. The certainty equivalent of ℒ, C(ℒ), is the amount of sure wealth yielding

the same utility as ℒ. The difference x*–C(ℒ) is called the risk avoidance price, or risk premium, or

willingness to pay to avoid risk.

We can reinterpret the situation to explain the existence of insurance, applying it to a case of

insurance against, say, house fire. Let x1 be income if no fire happens (i.e. initial income), and x2 be

income if a fire happens (causing a loss of income x1-x2); the probability of the fire is known to be

1–p. Then

x*=px1+(1-p)x2

is the expected value of the gamble the consumer faces without insurance[19], while C(ℒ) is the

certainty equivalent of the gamble, i.e. the sure amount of income that the consumer finds

equipreferred to the gamble, u(C(ℒ))=u(ℒ). As Figure 9.2 shows, owing to risk aversion it is

C(ℒ)<x*, so if someone offers the consumer an insurance contract that assures the consumer a

certain income x^ greater than C(ℒ) in exchange for the payment of (x1 – x^) as ‘premium’ or

insurance price, the consumer accepts. The maximum difference between x* and x^ the consumer is

ready to accept is x*–C(ℒ), which is accordingly called the risk premium[20] or risk avoidance price.

The risk premium is the maximum amount a risk-averse individual would be ready to pay to avoid a

lottery whose expected value were her initial sure wealth, or to avoid the additional riskiness

relative to obtaining for sure the expected value of a lottery.

Suppose now for simplicity that all consumers and all fire insurance contracts are identical.

The insurance company, by insuring a high number of consumers with independent risks, owing to

the law of large numbers can be certain to pay damages to a percentage 1–p of insured customers on

average, so it has little reason to be risk averse; if it had no expenses it would be capable of

guaranteeing its customers a certain income x* by asking for x1–x* as premium; by offering only

x^<x* (and asking for x1–x^ as premium) the insurance company obtains a difference between

earnings and payments with which it can cover administrative expenses and earn a rate of profit on

its capital. Thus one can say, as a first approximation, that it is risk aversion that makes the

existence of insurance companies possible. If the company were a monopolist, it would offer an x^

for sure only infinitesimally greater than C(ℒ); with free entry and competition, x^ will tend to be

the highest one allowing insurance companies to cover administrative expenses and to earn the

normal rate of return on their capital.

Figure 9.2 also illustrates the effect of a mean-preserving spread of the alternative

outcomes: assume that, with p constant, the distance of the possible outcomes of the lottery from its

unchanged mean value increases, that is, a and b increase while x* remains unchanged. This means

that points A and B move respectively to the right and to the left on the u(x) curve, hence the line

connecting them shifts downwards (cf. the dotted straight line in Fig. 9.2), so u(ℒ) and C(ℒ)

decrease: the risk premium increases. It is not difficult to prove that the same behaviour of u(ℒ) and

C(ℒ) is caused by a mean-preserving spread that consists of replacing an outcome, say x-b, with two

19

One can also see x* as initial wealth x1 minus the expected value of the damage x1-x2, i.e. x*=x1(1-p)(x1-x2). In an example below the expected value of the damage is indicated as d.

20

Unfortunately the term ‘premium’ is used in commercial language both to indicate what the

customer pays for the insurance coverage, and in the locution ‘risk premium’ with the meaning explained in

the text (which is why other terms are sometimes preferred for this second usage, such as risk avoidance

price). The term ‘risk premium’ is also used with a still different meaning in other contexts.

13

F Petri Microeconomics for the critical mind

chapter9uncertainty 17/02/2016

p. 14

outcomes, one greater and one smaller, such that their joint probability is equal to the probability of

the replaced outcome and their total expected value is equal to the replaced outcome[21]: because of

risk aversion the sub-lottery with the two new outcomes as prizes has lower utility than the replaced

outcome, so the new overall lottery has lower expected utility because it has, in place of x-b, the

certainty equivalent of the sub-lottery that replaces it (Exercise: show it graphically).

In Fig. 9.2 an alternative u^(x) curve (the dotted curve) has also been drawn, more concave

than the first one, but such that u^(x*)=u(x*) so as to favour comparison. The greater concavity

causes the A-B line to be lower than with u(x), so the risk premium is greater. One then says that

u^(x) shows greater risk aversion than u(x); a more precise measure will be indicated shortly.

In Figure 9.2 the assumption that utility has the expected utility form is what allows one to

find u(ℒ) on the point of the AB segment vertically above x*. The intuition for the effect of a meanpreserving spread can also be obtained from Fig. 9.1, where a movement away from the certainty

line from any vector x to another vector x’ with the same expected value means a passage to a lower

indifference curve. The risk premium too can be derived from this graphical representation. Under

our assumption of state-independent utility, it can be measured indifferently on the state-1 axis or

on the state-2 axis, and it is indicated by the difference between the amount of, say, x1

corresponding to E(x) and the amount corresponding to C(x).

Is risk aversion a universal phenomenon? Clearly not, because it does not explain the

acceptance of gambles known to be unfair, like buying tickets of lotteries known to give back in

prizes much less than – even less than a third of – the total revenue from the sale of tickets[22], nor

does it explain the fact that people play gambles known to be unfair, like casino roulette (one of the

less unfair gambles, but still unfair, paying only 36/37 in Europe, and 36/38 in USA, on average, of

what is gambled). Decisions suggesting risk preference can be explained as due to the excitement of

gambling (i.e. one derives utility not only from the prizes but also from the activity itself of

gambling); as due to the attraction of the possibility of becoming very rich, for which one is ready

to give up small sums without worrying much about the fairness of the bet[23]; as due to

desperation, if the prize is the only way to get out of a situation which is already a disaster so that

losing the bet is not going to make things much worse (this last case explains gambles with a

negative expected value by heavily indebted individuals or by stock market traders who try to

recuperate the loss of a speculation turned sour). The same individual can be risk averse or not

depending on the gamble, e.g. most purchasers of lottery tickets also buy insurance policies.

The St. Petersburg Paradox

Still, in most cases risk aversion seems to hold. For example, decreasing marginal utility of

income appears to be the explanation of the famous St. Petersburg Paradox.

The following gamble is proposed: a fair coin is flipped until heads appears; if heads

appears at the n-th flip, a prize of 2n dollars is paid. How much would you be ready to pay for the

right to this uncertain prize? Consider the following table which illustrates the probability of the

prizes and their expected values:

21

For example in the case of Fig. 9.2 outcome x-b might be replaced by the lottery with prizes x-b+ε

and x-b-ε, both with probability 1/2. Exercise: show that the mean of the overall lottery is not affected by

such a replacement.

22

Exercise: prove formally that if a lottery with a single prize pays back to the winner the total

amount earned by selling tickets then the lottery is actuarially fair, and therefore a risk averse individual

should not buy a ticket.

23

There is reason to suspect that people tend not to calculate expected values when the gamble

involves a very small loss versus a very large (although highly improbable) gain; most people have only the

vaguest idea of the probability of winning the big prizes of important lotteries. The anticipation of a regret if

one completely gives up the possibility, however improbable, of becoming very rich is probably an important

reason why one buys lottery tickets.

14

F Petri Microeconomics for the critical mind

chapter9uncertainty 17/02/2016

flip

prize if heads first comes out at this flip

probability of heads first coming out then

expected value of prize

1

2

1/2

1

2

4

1/4

1

3

8

1/8

1

4

5 ...

16

32

1/16 1/32

1

1

p. 15

n

2n

1/2n

1

The expected value of the gamble is the sum of the expected values of the possible prizes,

hence it is 1 + 1 + 1 + ... = +∞. If one were risk neutral one should be ready to pay any sum, even an

enormous one, for the right to a single play of this gamble! Experimental evidence shows on the

contrary that on average people are ready to pay between 3 and 4 dollars. Bernoulli, one of the

founders of probability theory, proposed to explain this paradox by assuming expected utility with

the basic utility of money m given by ln m. Let mn be the prize if heads comes out at the n-th flip,

and pn the probability of this prize. Then the expected utility of the gamble is

1

1

n

u(ℒ) = pnu (mn ) n ln 2 n n n ln 2 ln 2 n = 2 ln 2 = ln 22 = ln 4 . [24]

n 1 2

n 1

n 1 2

n 1 2

This implies C(ℒ)=4, that may explain the empirical evidence as due to risk aversion being a

bit greater than if the utility of money or wealth were the natural logarithm of wealth. Also, there is

a constraint to what one can pay for such a gamble, deriving from the maximum wealth one can

dispose of; and also probably there is a minimum subsistence wealth one is not ready to risk. (This

minimum subsistence may be the level that explains why, when one’s initial expected wealth level

is below it, one becomes risk loving.)

Actuarially fair insurance

Imagine an individual facing the possibility of a loss of money owing to some event, and

considering whether to insure against this loss. Let us indicate (with the same symbols as Varian):

W the given initial wealth,

L the loss of wealth, or damage, (a single value, for simplicity) caused by the event

occurring, measured in money,

p the probability of L occurring, independent of the individual’s actions[25],

q the ‘insurance coverage’ i.e. the amount of the conditional commodity ‘one dollar the

insurance company will pay in case L occurs’,

π the given premium to be paid per dollar of coverage.

If the individual insures for an insurance coverage q, he pays πq for sure and obtains wealth

W–πq if L does not occur, W–L–πq+q if L occurs. The individual chooses q so as to maximize

utility (1–p)u(W–πq)+pu(W–L–πq+q). The first-order condition is

–(1–p)πu’(W–πq)+p(1–π)u’(W–L–πq+q) = 0 ,

that is,

u' (W - D - q q)

(1 p)

.

u' (W - q)

(1 )

p

The result requires finding the value to which the series ∑nxn converges, and then setting x=1/2 in

it. Consider the geometric series 1+x+x2+...+xn+... = 1/(1–x) for 0<x<1; differentiate both sides to obtain

0+1+2x+3x2+4x3+...+nxn–1+... = 1/(1–x)2; rewrite the left-hand side as 1+x+x+2x2+x2+3x3+x3+... =

(1+x+x2+...+xn+...)+∑nxn , hence ∑nxn = 1/(1–x)2 – 1/(1–x) = x/(1–x)2; this becomes 2 if x=1/2.

25

In many cases the probability of the damage depends on the individual’s actions: e.g. a house fire,

or falling ill and in need of medical assistance, can depend on how careless one is. This gives rise to the

problem of moral hazard, which for the moment we do not want to introduce.

24

15

F Petri Microeconomics for the critical mind

chapter9uncertainty 17/02/2016

p. 16

Now suppose the insurance company offers an actuarially fair contract, that is, p=π, for

example because competition with entry reduces expected pure profit to zero, and the insurance

company – we may assume for simplicity – has no costs other than the payment of coverages, in

which case expected profit is, on each insurance contract, p(πq–q)+(1–p)πq = (p–π)q, implying p=π

if expected profit is zero. An actuarially fair gamble or bet is, as already indicated, one that offers a

win whose expected value equals what one pays for the gamble; if the customer pays πq and

receives q with probability p=π the expected value of receiving q is pq=πq. If p=π then the righthand side of the above first-order condition equals 1, implying equality of the marginal utilities on

the left-hand side and therefore, since u is strictly concave, a marginal utility determined in the

same point at the numerator and at the denominator i.e. (W–L–πq+q)=(W–πq) i.e. L=q. The

individual chooses complete insurance: he pays πq and is left with W–πq, and in case L happens he

receives q=L and finds himself again with W–πq. So he obtains W–πq for sure, and since we have

shown that the insurance contract is fair, this means W–πq equals the expected value of the lottery

E(ℒ) = p◦(W–L)(1–p)◦W, corresponding to income x* in Fig. 9.2.

x2 (income in

state 2)

■

E(x)

C(x)

▪

■

x

45°

O

α β

Fig. 9.3

x1 (income in state 1)

The Figure reproduces Fig. 9.1 with the indication of the risk premium, represented by

segment αβ; it also shows that a more convex indifference curve through x (the dotted

one) would cause the risk premium to be greater because it causes the certainty equivalent

to be smaller.

A clear intuition for this result can be obtained from Fig. 9.1 or 9.3. Let x represent the

lottery payoffs without insurance, with state 2 indicating income in case the damage occurs (x1=W,

x2=W–L) so now it is x2 that has probability p and the constant-expected-value lines have slope –

(1–p)/p; the expected value of this lottery is p(W–L)+(1–p)W. If the customer buys q dollars of

coverage, paying πq for it, the point representing the lottery moves to x’=(W–πq, W–πq–L+q), that

is, as q increases x’ moves North-West along a straight line of slope –(1–π)/π. If insurance is

actuarially fair, p=π, then this ‘insurance line’ coincides with the constant-expected-value line, so

for any q the expected value of the lottery with insurance is the same as without insurance, π(W–L–

πq+q)+(1–p)(W–πq) = p(W–L)+p(1–π)q+(1–p)W–(1–π)πq = p(W–L)+(1–p)W. Then the customer

finds it optimal to reach the point E(x) on the certainty line, which owing to the convexity of

indifference curves is the point with the highest utility on the constant-expected-value line. In other

words, by insuring completely the individual reaches the utility of the expected value of the lottery

for sure, and therefore avoids the loss in utility associated with risk aversion.

Example. (Here Fig. 9.2 is more appropriate.) A family has wealth m whose utility is given

by u(m)=m1/2 and has VonNeumann-Morgenstern utility relative to choices under uncertainty. The

family's initial wealth is m*=100 and it esteems there is a 50% probability of a damage of 64, which

would reduce wealth to 36.

a) Determine the expected value E(m) of the family's wealth.

16

F Petri Microeconomics for the critical mind

chapter9uncertainty 17/02/2016

p. 17

b) Determine the expected utility of the 'lottery' describing the two possible wealths of the

family if no insurance is purchased.

c) Determine the maximum price P=d+R (where d is the expected value of the damage

reimbursement) the family is ready to pay for complete insurance, and the associated risk avoidance

price R i.e. benefit from elimination of risk.

Solution. Expected value of wealth E(m)=100/2 + 36/2 = 68 (this is the x* in Fig. 9.2).

Utility of 'lottery' L=[100, 36; p=1/2] is u(ℒ)=10/2 + 6/2 = 8. Certainty equivalent of the 'lottery' is

C(ℒ)=64, the certain wealth with utility equal to 8. Maximum P payable for complete insurance is

the one that reduces initial wealth 100 to the level yielding the same utility as without insurance i.e.

u=8, therefore P=36 that reduces wealth to C(ℒ)=64. R=P–d where d=expected value of the

reimbursement i.e. of the damage, that is, d=64/2=32; hence R=4, also obtainable as R=E(m)–C(ℒ)

because E(m)=m*–d, C(ℒ)=m*–P.

Unfair insurance

In order to cover administrative costs and, possibly, some risk aversion of the owners,

insurance companies do not offer actuarially fair insurance contracts. We can understand the effect

in terms of the two-states diagram (Fig. 9.4). Now π>p, so the ‘insurance line’ is less steep than the

constant-expected-value line. If the consumer can choose the coverage q at the fixed premium π,

and if the absolute slope of the ‘insurance line’ is greater than –dx2/dx1 at x, she maximizes utility at

the point y of tangency between the ‘insurance line’ and indifference curve, so she insures only

partially[26].

x2 (income in

state 2, with loss)

■

C(x)▪

E(x)

■y

slope –(1–p)/p

■

slope –(1–π)/π

x

45°

O

x1 (income in state 1, no loss)

Fig. 9.4

The analysis of the same case through the graphical representation of Fig. 9.2 is as follows.

With fair insurance (π=p) we have seen that the consumer insures completely, that is, buys a

coverage q=L, pays πq, and is left with x*=W–πq=W–πL for sure, which is the expected value of

the original lottery. To understand the effect of π>p let us first re-examine the case π=p, but now

with incomplete insurance. For q<L if p=π the expected value of the lottery ℒ’=p◦(W-L-πq+q)(1p)◦(W-πq) remains equal to x*=W–pL, and u(ℒ’) can be found vertically above x* on the straight

line connecting the points A’ and B’ corresponding to x=W-πq and x=W-L-πq+q on the curve u(x),

cf. Fig. 9.5; u(ℒ) rises with q but remains less than u(x*) as long as q<L, which is why it is best for

the consumer to go all the way to q=L which causes A’ and B’ to coincide.

If on the contrary π>p, the expected value of the same lottery ℒ’ equals W–pL–(π–p)q<x*,

so it decreases as q increases; there are therefore two effects on u(ℒ’) as q increases from zero, a

positive effect deriving from the A’-B’ line moving upwards, and a negative one deriving from the

26

If the insurance company is risk neutral and can decide both q and P, what is the choice that

maximizes its profit?

17

F Petri Microeconomics for the critical mind

chapter9uncertainty 17/02/2016

p. 18

leftward movement of the point E(ℒ’) on the abscissa, which (going up from it vertically) gives us

the point on the A’-B’ line that indicates u(ℒ’). The first-order condition tells us that now it must be

u’(B’)/u’(A’)>1 for an optimum, which means that, at the optimum, B' is to the left of A' on the

utility curve: evidently as q increases the negative effect becomes stronger than the positive one

before A’ and B’ come to coincide, that is, before q=L. Therefore the optimum q for the consumer

is less than L, which means the consumer does not insure completely.

A’ A

graph

u(W)

u(ℒ’)

u(ℒ)

B’

B

W-L

x*

W

Fig. 9.5

u(ℒ’) when q<L but p=π and therefore the expected value of ℒ’ remains equal to x*.

Measuring risk aversion

Fig. 9.3 shows that the risk premium is the greater, the greater the convexity of indifference

curves; Fig. 9.2 shows that the risk premium is the greater, the greater the concavity of the expected

utility u(x). In applications, the approach illustrated in Fig. 9.2 is the more frequent one; then a

measure of the concavity of u(x) can be useful; it must measure how fast the slope of u(x) decreases

if x increases. The most widely used measure is

u" ( x)

,

u ' ( x)

the absolute value of the ratio between second and first derivative of u(x). This is called the ArrowPratt measure of absolute risk aversion. When applied to the expected utility of wealth, it indicates

how fast its slope decreases as wealth increases: the second derivative alone does not suffice,

because it is altered by affine transformations of the expected utility function, which we know do

not alter preferences and maintain the expected utility form; division by u' makes the measure

independent of such transformations. Its appropriateness as a measure of risk aversion can be shown

in two ways. The dotted utility function in Fig. 9.2 has the same slope as the other one at x* and is

more concave, thus its Arrow-Pratt measure is greater, and indeed it causes a greater risk avoidance

price (a smaller certainty equivalent) for the same lottery, indicating a greater loss of utility due to

risk aversion. The Arrow-Pratt measure also indicates how convex the indifference curves of Fig.

9.1 are at the certainty line. Consider the indifference curve through the initial, sure wealth w of a

consumer who is offered gambles consisting of a win x1 with probability p, and a loss x2 (a negative

number) with probability 1-p; if the consumer accepts one such gamble, her wealth becomes a

lottery p◦(w+x1)(1-p)(w+x2). The set of gambles the consumer will accept is the set of points on

or above the indifference curve through w; this is called her acceptance set. The acceptance set will

be the smaller, the more convex the indifference curve through w, indicating that fewer gambles

will be accepted. We can say then that risk aversion is (at least locally) the greater for small

gambles from a certain initial wealth, the more convex the indifference curves at the certaintly line.

We have already seen that at the certainty line all indifference curves have slope x2'(x1)= -p/(1-p) at

x1=0. Let us differentiate agan with respect to x1 in x1=x2=0 both sides of the equality from which

this result was derived:

18

F Petri Microeconomics for the critical mind

chapter9uncertainty 17/02/2016

p. 19

u ( w x1 )

u ( w x2 ( x1 )) dx2

(1 p)

pu '(1 p )u ' x2 ' (0) 0 .

x1

x2

dx1

We obtain again ∂2u/∂x12=∂2u/∂x22=u"(w) because both calculated in the same point, hence:

pu"(w) + (1-p)[u"(w)x2'(0)x2'(0) + u'(w)x2"(0)]=0

from which, using the fact that x2'(x1)= -p/(1-p) at x1=0, one obtains

p u" ( w)

.

x2"(0) =

(1 p) 2 u ' ( w)

Thus the convexity of the indifference curve is the greater, the greater is the Arrow-Pratt

measure of risk aversion; a consumer with a smaller Arrow-Pratt measure will accept all the

gambles accepted by a consumer with a greater Arrow-Pratt measure, plus some more, indicating

less risk aversion.

A property of this measure is that for small bets it is approximately proportional to the risk

premium or risk avoidance price. Let us prove it.

Let the payoffs of a fair bet on wealth be a random variable h, with E(h)=0. Indicate the risk

premium as R and the initial (sure) wealth as W, with the possible values of h small relative to W.

By the definitions of certainty equivalent and of risk premium it is

E[u(W+h)] = u(W–R).

On the left-hand side we have the expected utility of the random income W+h; on the right-hand

side we have the utility of the certainty equivalent W–R. Let us expand both sides in Taylor’s

series. For the right-hand side one may stop at the second term since R is a fixed amount:

u(W–R) = u(W) – Ru’(W) + higher-order terms.

For the left-hand side it is better to include the third term to allow for the variability of h:

h2

E[u(W+h)] = E[u(W)+hu’(W)+ u”(W)+ higher-order terms] =

2

2

= u(W) + E(h)u’(W) + ½E(h )u”(W) + higher-order terms.

Remembering E(h)=0, noting that E(h2) is the variance of h, and neglecting higher-order terms, we

obtain ½E(h2)u”(W) – Ru’(W), i.e.

u" (W ) Var (h)

(*) R –

.

u ' (W ) 2

The risk premium is approximately equal to the Arrow-Pratt measure of absolute risk

aversion multiplied by half the variance of h.

The above was calculated assuming that the initial wealth was precisely the expected value

of the lottery (W+h). But the reasoning still holds when that is not the case, by replacing W with the

expected value x* of the random variable ‘wealth’. The Arrow-Pratt measure must then be

calculated at x*.

We can illustrate with a numerical example that will give us some feeling for the magnitude

of the risk premium, at the same time checking the goodness of the approximation. Take the kind of

situation considered earlier that may induce one to buy insurance against a possible house fire

causing a damage L. Assume initial wealth is W*=625; u(W)=W1/2; L=225 with a probability 10%

of happening. The lottery the uninsured individual faces is ℒ=.1◦400.9◦625; the expected value of

wealth it implies is x*=E(W)=602.5. The expected utility of the lottery is u(ℒ) = .1 · 4001/2 + .9 ·

6251/2 = 24.5. The certainty equivalent is C(ℒ) = [u(ℒ)]2 = 600.25. Therefore the risk premium is

R=E(W)–C(ℒ)=2.25 (did you expect it to be so small?). Now let us see what measure of the risk

premium is obtained from the (*) approximation. With this utility function, u”(x)/u’(x)= –1/(2x);

the Arrow-Pratt measure must be calculated at x* and therefore is 1/(2x*) = 1/1205. The variance is

E((x–x*)2) = .9·(625–602.5)2 + .1·(602.5–400)2 = 4556.25.

Half this variance times the Arrow-Pratt measure yields 1.89. The true risk premium, 2.25, is 19%

greater than the (*) approximation 1.89. The order of magnitude is not widely off the mark but the

p

19

F Petri Microeconomics for the critical mind

chapter9uncertainty 17/02/2016

p. 20

percentage of error is rather big, which shows that the (*) approximation is good only for random

variables with very small deviations from the mean.

Absolute risk aversion. A question of practical interest is whether increases in wealth induce

or not a greater readiness to accept bets. This is connected with the behaviour of the risk premium,

because if one starts with a sure wealth W and is offered a bet h that turns W into the random

variable W+h, the individual will accept the bet if

E[u(W+h)]>u(W),

which is equivalent to (indicating the lottery with the random variable):

C(W+h)>W

i.e. to

E(W+h)–R(W,h)>W.

If the bet remains unchanged in payoffs and in probabilities as W changes, it is

E(W+h)=W+E(h)

so the bet is accepted if

E(h)>R(W,h).

One speaks of decreasing absolute risk aversion if as wealth increases one comes to accept

bets that at a lower wealth level one would not accept, that is, if for any given h the risk premium R

is a decreasing function of W. It seems intuitive that especially when comparing lower with higher

levels of income and considering a bet that, even though with a positive expected value, at the lower

income level would risk reducing the individual’s income below subsistence, the individual will be

more disposed to accept the bet if rich. Indeed there is ample evidence supporting decreasing

absolute risk aversion (except, of course, in the cases of desperation mentioned earlier). (Buying

lottery tickets with improbable big prizes may appear less attractive to very rich people than to poor

people because of the smaller impact of a win on their life condition; but in this case there isn’t risk

aversion to start with, at least for the poor people.)

In some analyses the assumption is made of constant absolute risk aversion, abbreviated as

CARA, that is, that for a given bet represented by the random variable h with expected value E(h)