SA02-02-bubblenets - alumni

advertisement

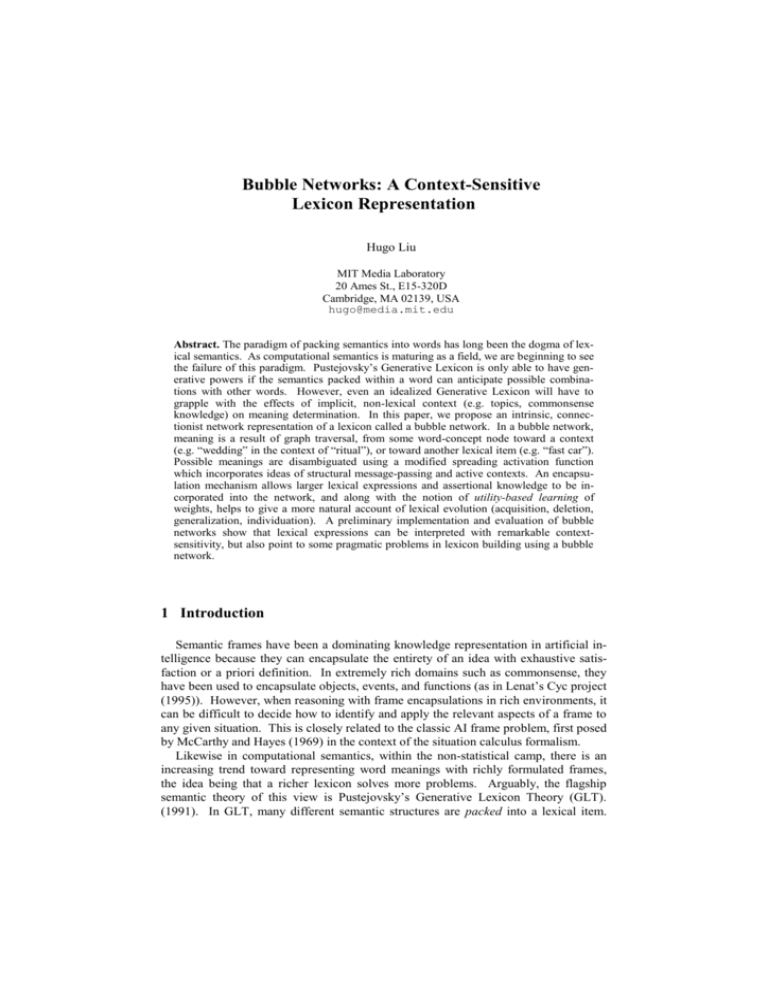

Bubble Networks: A Context-Sensitive Lexicon Representation Hugo Liu MIT Media Laboratory 20 Ames St., E15-320D Cambridge, MA 02139, USA hugo@media.mit.edu Abstract. The paradigm of packing semantics into words has long been the dogma of lexical semantics. As computational semantics is maturing as a field, we are beginning to see the failure of this paradigm. Pustejovsky’s Generative Lexicon is only able to have generative powers if the semantics packed within a word can anticipate possible combinations with other words. However, even an idealized Generative Lexicon will have to grapple with the effects of implicit, non-lexical context (e.g. topics, commonsense knowledge) on meaning determination. In this paper, we propose an intrinsic, connectionist network representation of a lexicon called a bubble network. In a bubble network, meaning is a result of graph traversal, from some word-concept node toward a context (e.g. “wedding” in the context of “ritual”), or toward another lexical item (e.g. “fast car”). Possible meanings are disambiguated using a modified spreading activation function which incorporates ideas of structural message-passing and active contexts. An encapsulation mechanism allows larger lexical expressions and assertional knowledge to be incorporated into the network, and along with the notion of utility-based learning of weights, helps to give a more natural account of lexical evolution (acquisition, deletion, generalization, individuation). A preliminary implementation and evaluation of bubble networks show that lexical expressions can be interpreted with remarkable contextsensitivity, but also point to some pragmatic problems in lexicon building using a bubble network. 1 Introduction Semantic frames have been a dominating knowledge representation in artificial intelligence because they can encapsulate the entirety of an idea with exhaustive satisfaction or a priori definition. In extremely rich domains such as commonsense, they have been used to encapsulate objects, events, and functions (as in Lenat’s Cyc project (1995)). However, when reasoning with frame encapsulations in rich environments, it can be difficult to decide how to identify and apply the relevant aspects of a frame to any given situation. This is closely related to the classic AI frame problem, first posed by McCarthy and Hayes (1969) in the context of the situation calculus formalism. Likewise in computational semantics, within the non-statistical camp, there is an increasing trend toward representing word meanings with richly formulated frames, the idea being that a richer lexicon solves more problems. Arguably, the flagship semantic theory of this view is Pustejovsky’s Generative Lexicon Theory (GLT). (1991). In GLT, many different semantic structures are packed into a lexical item. These linguistic representations include logical argument structure, event structure, qualia structure, and lexical inheritance structure. Packing this practical hodgepodge of semantics into each lexical item, GLT postulates that lexical items, when combined, will interact with and coerce one another with enough power, to generate larger lexical expressions with highly nuanced meanings. We would certainly agree that such a lexicon, being richer, would exhibit more “generative” behavior than most lexicons that have been developed in the past, such as Fellbaum et. al's WordNet project (1998). Nonetheless, we see several problems with the GLT approach that prevent it from better reflecting how human minds handle context and semantic interpretation in a more flexible way. 1. Packing semantics into lexical items is a difficult proposition, because it’s hard to know when to stop. In WordNet, formal definitions and examples are packed into words, organized into senses. In GLT, the qualia structure of a word tries to account for its constitution (what it is made of), formal definition, telic role (its function), and its agentive role (how it came into being). But how, for example, is one to decide what uses of an object should be included in the telic role, and which uses should be left out? 2. Not all relevant semantics is naturally lexical in nature. A lot of knowledge arises out of larger contexts, such as world semantics, or commonsense. For example, the proposition, “cheap apartments are rare” can only be considered true under a set of default assumptions, and if true, has a set of entailments (its interpretive context) which must also hold, such as, “they are rare because cheap apartments are in demand” and “these rare cheap apartments are in low supply.” Although it is non-obvious how such non-lexical entailments are important to the semantic interpretation of the proposition, we will show later, that these contextual factors heavily nuance the interpretation of, inter alia, the word, “rare.” The implication to GLT is that not all practically relevant meaning can be generated from lexical combination alone. In contrast, one inference we might make from the design of GLT is that the context in a semantic description must arise solely as a result of the combination and intersection of explicit words used to form the lexical expression. 3. In the GLT account, the lexicon is rather static, which we know not to be true of human minds. Namely, GLT does not give a compelling account of the lexicon’s own evolution. How can new concepts arise in the lexicon (lexical acquisition)? How can existing concepts be revised? How can concepts be combined (generalization), or split apart (individuation)? How can unnamed concepts, kinds, or immediate or transitory concepts interact with the lexicon (conceptual unification)? 4. The lexicon should reflect an underlying intrinsic (within-system semantics) conceptual system. However, whereas the conceptual system of human minds has connectionist properties (conceptual recall time lapse reflects mental distances between concepts, semantic priming (Becker et al., 1997), GLT does not exhibit any such properties. Indeed, connectionist properties are arguably the key to success in forming contexts. Contexts can be formed quite dynamically by spreading activation over a conceptual web. The intention of scrutinizing GLT in a critical way is not to discount the practical engineering value of building elaborate lexicons, or to single out GLT from our lexicon-building efforts, and in fact, much of this criticism applies to all traditional lexicons and frame systems. In this paper, we examine the weaknesses of frames and traditional lexicons, especially on the points of context and flexibility. We then use this critical evaluation to motivate a proposal for a novel knowledge representation called a bubble network. A bubble network is a connectionist network (also called semantic networks and conceptual webs, in the literature), specially purposed to represent a lexicon. With a small set of novel properties and processes, we describe how a bubble network lexicon representation handles some classically challenging semantic tasks with ease. We show how bubble networks can be thought of to subsume the representational power of frames, first-order predicate logic, inferential reasoning (deductive, inductive, and abductive), and defeasibility (default) reasoning. The network also produces some nice generative properties, subsuming the generative capabilities of GLT. However, the most desirable property of the bubble network is in its seamless handling of context, in all its different forms. Lexical polysemy (nuances in word meaning) is handled naturally (compare this to the awkwardness of WordNet senses), as are the tasks of lexical evolution (acquisition, generalization, individuation), conceptual analogy, and defining the interpretive context of a lexical expression. The power of a bubble network derives from its duality as a connectionist system with intrinsic semantics, and a symbolic reasoning system with some (albeit primitive) inherent notion of syntax. Many hybrid connectionist/symbolic systems have been proposed in the literatures of cognitive science and artificial intelligence to tackle cognitive representations. Such systems have tackled, inter alia, belief encoding (Wedelken & Shastri, 2002), concept encoding (Schank, 1972), and knowledge representation unification (Gallant, 1993). Our attempt is motivated by a desire to design a lexicon that is flexible and naturally apt with context. Our goal is not to solve the connectionist/symbolic hybridization problem; it is to demonstrate a new perspective on the relationship between lexicon and context: how an augmented connectionist network representation of a “lexicon” can naturally address the context problem for lexical expressions. We hope that our presentation will motivate further re-evaluation of the establishment dogma of lexicons as semantically packed words, and inspire more work on reinventing the lexicon to be more pliable to contextual phenomena. Before we present the bubble network, we first revisit connectionist networks and identify those properties which inspired our representation. 2 Connectionist Networks The term connectionist network is used broadly to refer to many, more specific knowledge representations, such as neural networks, the multilayer perceptron (Rosenblatt, 1958; Minsky & Papert, 1969), spreading activation networks (Collins & Loftus, 1975), semantic networks, and conceptual webs (Quine & Ullian, 1970). The common property shared by these systems is that a network consists of nodes, which are connected to each other through links representing correlation. For purposes of situating our bubble network in the literature, we characterize and discuss three desirable properties of connectionist networks that are useful to our venture: learning, representing dependency relations, and simulation. 2.1 Learning Adaptive weights. The tradition of learning networks most prominently features neural networks, which arguably best emphasizes the principles of connectionism. In neural networks, typically a multilayer perceptron (MLP), nodes (or perceptrons) are de-emphasized, in favor of weighted edges connecting nodes, which give the network a notion of memory, and intrinsic (within system) semantics. Such a network is fed a set of features to the input layer of nodes, passes through any number of hidden layers, and outputs a set of concepts. Various methods of back propagation adjust the weights along the edges in order to produce more accurate outputs. Because connecting edges carry a trainable numerical weight, neural nets are very apt for learning. The idea of learning via adapting weights is also found in belief networks (Pearl, 1988), where weights represent confidence values, causal networks (Rieger 1976), where weights represent the strength of implications, Bayesian networks, whose weights are conditional probabilities, and spreading activation networks, where weights are passed from node to node as decaying activation energies. We are interested in applying the idea of changeable weights to lexical semantics because they provide an intrinsic, learnable feature for defining semantic context, by spreading activation; they also support lexical evolution tasks such as acquisition, generalization, and individuation. De-emphasizing nodes. As in the MLP representation, the de-emphasis of nodes themselves in favor of their connections to other nodes also supports lexical evolution, because nodes can be indexical features corresponding to named lexical items, or kinds, or to unnamed features, or to contexts (to be explicated later). In fact, the purpose of node may simply be to provide a useful point of reference and to bind together a set of descriptive features which describe the referent (see Jackendoff, 2002) for a more refined discussion of indexical features and reference). One potentially important role played by nodes is to provide points for conceptual alignment across individuals. Laakso and Cottrell (2000) and Goldstone and Rogosky (2002) have demonstrated that it is possible to find conceptual correspondences across two individuals using inter-conceptual similarities between individualized conceptual web representations, without the need for total conceptual identity. This suggests that such a perspective on a lexical item as a node that is not precisely and formally defined (even though they are called by the same “word”), or extrinsically defined, still supports the faculties of language as a communication means. The lexicon as a conceptual network distinct to each individual does not take away from language’s communication efficacy. However, this is not to say that formal lexical definition plays no role. There is an acknowledged external component to lexical meaning, whether coming from social contexts (Wittgenstein, 1968), the community (Putnam, 1975), or formalized as being free from personal associations (Frege, 1892). Such external meaning, can be learned by and incorporated into an individual’s conceptual network through conceptual alignment and reinforcement. Despite the proposition that each individual maintains his/her own conceptual network that is not identical across individuals, it is still possible, and indeed, a worthwhile venture to create a common conceptual network lexicon that can be assumed to be more or less very similar to the conceptual network possessed by any reasonable person within some culture and population (since meaning converges in language and culture). Similarly, the conceptual networks within some culture and population can possess non-lexical knowledge that constitutes “common sense.” The intent of our paper is to demonstrate how a common lexicon can be represented using a conceptual network, and also how non-lexical commonsense knowledge integrates tightly with lexical knowledge. Generalization. Having only discussed adaptive weights as a learning method for networks, we should add that two other methods important to our venture are rote memory (knowledge is accumulated by directly adding it to the network) and restructuring (generalization). Case-based reasoning (Kolodner, 1993; Schank & Riesbeck 1994) exemplifies rote memory learning. Cases are first added to the knowledge base, and later, generalizations are produced based on a notion of case similarity. In the case of our conceptual network, we will introduce graph traversal as a notion of similarity. One issue of relevance not well covered in the literature is negative learning over graphs, or, learning how to inhibit concepts, and prune contexts. The most relevant work is Winston’s (1975) “near-miss” arch learning. His program learned to generalize a relational graph given positive, negative, and near-miss examples. In our bubble network, conceptual generalization is a consequence of a tendency toward activation economy, the minimization of activation energies needed in graph traversals. 2.2 Representing dependency relations Conceptual Dependency. Connnectionist networks have also been used extensively as a platform for symbolic reasoning. Frege’s (1879) tree notation for firstorder predicate-logic (FOPL), and Peirce’s (1885) existential graphs, were early attempts to represent dependency relations between concepts, but were more graphical drawings than machine-readable representations. In existential graphs, arbitrary predicates, which were concept nodes, were instead used as relations, labeling edges. We do need to label the edges between nodes with dependency relations for reasoning. The key is to decide on a set of edge labels that is not too small or too big and is most appropriate. Arbitrary or object-specific predicate labels might be appropriate for closed domains, such as the case of Brachman’s (1979) description logics system, Knowledge Language One (KL-ONE), but if we are to represent something as rich and diverse as a lexicon, arbitrary dependency labels making generalized reasoning very difficult. Some have tried to enumerate a set of conceptually prime relations, including Silvio Ceccato’s (1961) correlational nets with 56 different relations, and Margaret Masterman's (1961) semantic network based on 100 primitive concept types, with some success. While such creations are no doubt richly expressive, there is little agreement on these primitive types, and motivating a lexicon with an a priori and elaborate set of primitives lends only inflexibility to the system. On the opposite end of the spectrum, overly impoverished relation types, such as the nymic subtyping relations of WordNet, severely curtail the expressive power of the network. Schank’s system of Conceptual Dependency (Schank & Tesler 1969) took primitive representations to a new level of sophistication. His theory mapped words into 12 conceptual primitive acts and 4 primitive conceptualizations. Though our goal is not to try to decompose concepts and words into primitive acts, we recognize this as an important work because Schank’s graphs had an inherent notion of syntax. Edge labels included an agent-verb relation and a verb-object relation, and the three primitive acts of a-, p- and m-trans incorporated a notion of causality. While we will not use primitive acts, we do try to incorporate the casual transfer relation as a type of node in our system. We also incorporate the idea of an agent, verb and modifier into a small ontology of inter-node relations, though in a slightly different formulation. 2.3 Simulation Semantic networks are often treated as static structures that can be read off by a computer program. However, some procedural semantic networks have executable mechanisms contained in the net itself. Quillian’s spreading activation is one that we have already mentioned. We quite happily incorporate the idea of spreading activation when trying to represent a lexicon because spreading activation over intrinsic edge weights is a natural way to define interpretative context. In Petri’s (1962) Petri nets, the idea of messaging passing from Quillian’s networks is combined with a dataflow model which simulates an event. In our model of a lexicon we opt to keep the nodes as free from internal state and content as possible (see the above discussion on de-emphasizing nodes); however, one concession had to be made toward procedural semantics. In order to represent the action of verbs, we introduce a differentiated node for verbs based on Schankian transfer, called a transnode. This node has two binding sites, a before and an after, thus, its activation simulates some procedural change of state of the network. Simulation is a core theme of our bubble networks. Going beyond spreading activation, which can be seen as rather undirected inference away from some origin node, bubble networks engage in directed search. A search path represents a semantic interpretation, and spreading activation away from a search path generates an interpretive context. So it can be said to be true of bubble networks that meaning is a result of simulation. We hope to make this more clear in the next section. 3 Bubble Networks Synthesizing together the desirable properties of connectionist networks described in the previous section, some of which were motivated by connectionism, and others by symbol processing, we present a hybrid symbolic/connectionist system apt for repre- senting a rich lexicon, compound meanings, and interpretive contexts. In this section we first state the tenets, assumptions, and goals of this representation. Second, we describe the types of nodes, edges, and operators available and processes. Third, we perform some interesting linguistic tasks used bubble networks (BN). The rest of the paper will discuss an implementation of bubble networks, followed by evaluation and discussion. formal auto context drive -------- speed limit function param param param property car property property road road material property property paint property value top speed param value value xor value location speed tires pavement dirt value property property param rating wash ---------- value fast value drying time ability ability person ability time xor property walk --------- Fig. 1. A static view of a bubble network. We selectively depict some nodes and edges relevant to the lexical items “car”, “road”, and “fast”. Edge weights are not shown. Nodes cleaved in half by a straight line are transnodes, and have some notion of syntax. The black node is a context-activation node. 3.1 Goals, Tenets and Assumptions Goals. The goals of the bubble network lexicon representation can be stated as follows: 1. Lexicon building should not involve arbitrary decisions regarding how much semantic structure to pack into a word. Any semantic structure possibly relevant in explaining a lexical item should be accessible to it. 2. Lexical items should interact with each other to produce compounds and still larger expressions, with rich generative meaning. 3. 4. 5. 6. 7. The lexical representation should provide a natural and systematic account of polysemy (ambiguous meaning). The lexical representation should provide a natural account of how context affects the semantic interpretation of any lexical expression, and explain how non-lexical knowledge (including commonsense knowledge) is a part of this context. The lexicon representation should provide an account of lexical evolution – how concepts may be acquired, generalized, individuated, and deleted. The ontology of relations between nodes should not be completely conceptspecific as in description logics, as the network should be easily machinetraversable without too much knowledge in the traversal mechanism. We want to be able to embed and make use of frame-like knowledge. Tenets. Graphically Figure 1 resembles any plain semantic network, but the differences will soon be evident. Here, we begin by explaining some principled differences. 1. No coherent meaning without simulation. From a static view of the bubble network, lexical items are merely a collection of relations to a large number of other nodes, reflecting the many useful perspectives of a word, conflated onto a single network; therefore, lexical items hardly have any coherent meaning in a static view. When human minds think about what a word or phrase means, meaning is always evaluated in some context. Similarly, a word only becomes coherently meaningful in a bubble network as a result of simulation (graph traversal): spreading activation (edges are weighted, though figure 1 does not show the weights) from the origin node, toward some destination. The destination may be a context node, or if a larger lexical expression is being assembled, toward other lexical nodes. The destination node, no matter if it is a word or a context, can be seen as a semantic primer. It provides a context which helps us hammer down a more coherent and succinct meaning. 2. Activated nodes in the context biases interpretation. The meaning of a word, therefore, is the collection of nodes and relations it has traversed in moving toward its context destination. The meaning of a compound, such as an adjective-noun pair, is the collection of paths from the root noun to the adjectival attribute. One path corresponds to one “word sense” (rather unambiguous meaning). The viability of a word sense path depends upon any context biases nearby the path which may boost the activation energy of that path. In this way, semantic interpretation is very naturally influenced by context, as context prefers one interpretation by lending activation energy to its neighbors. Assumptions. We make some reasonable assumptions about our representation. 1. Nodes are word-concepts. Although determiners, pronouns, and prepositions can be represented as nodes, we will not show these because they are connected to too many nodes. 2. 3. 4. 5. Nodes may also be larger lexical expressions, such as “fast car,” constructed through the process of encapsulation (explanation to follow), or they may be unnamed, intermediate concepts, though those are usually not shown. In our examples, we always show selected nodes and edges, although the success of such a lexicon design thrives on the network actually being very well-connected and dense. Obviously different word senses (e.g. fast: not eat, vs. quick) are represented by different nodes. However, more nuanced polysemy is handled by the same node. We assume we can cleanly distinguish between these two classes of word senses. Compare to WordNet, in which a lot of nuanced polysemy is cleaved into different word senses. Though not always shown, relations are always numerically weighted between 0.0 and 1.0, in addition to the predicate label, and nodes also have a stable activation energy (cf. spreading activation literature). 3.2 Ontology of Nodes, Relations, and Operators As shown in Figure 2, there are three types of nodes. Normal nodes may be wordconcepts, or larger encapsulated lexical expressions. However, some kinds of meaning i.e. actions, beliefs, implications cannot be represented using static nodes and relations. Whereas most semantic networks have overcome this problem by introducing a causal relation (Rieger, 1976; Pearl, 1988), we opted for a causal node called a TransNode because it offers a more precise account of situation change by providing two binding sites representing a before and after state. TransNodes are based on the Schankian notion of transfer, though we use them more generally for any action wordconcept whose nature is causal. TransNodes are divided and have a top and bottom binding site, in addition to the periphery (because it can also behave like a normal node). The top and bottom binding sites refer to a before and after situation, much like Marvin Minsky’s transframe (1986). TransNode introduces the notion of causality as being inherent in some word-concepts, like actions. We feel that this is a more faithful representation than putting the causality in links. With TransNodes, causal effects on the semantic network are only observable by simulation. The next section will offer a more detailed account of how this node is used. Types of Nodes ----Normal Types of Relations function x.y() param x(y,) Types of Operators NOT OR XOR TransNode ability x.y() isA x:y (subtype) AND ContextNode TransientNode property x.y assoc x,y 1 2 3 value x=y (ORDERING) Fig. 2. Ontology of node, relation, and operator types. ContextNodes are also interesting. They use the assoc (generic association) relation, along with operators, to cause the network to be in some state when they are activated. In Figure 1, the “formal auto context” ContextNode is meant to represent a person’s stereotyped notion of what constitutes the domain of automotives. When it is activated, it loosely activates a set of other nodes. The next section will explain their activation. Of course, context nodes, because they are rather vague, will not be identical across people; however, we posit that because knowledge of domains is a part of commonsense knowledge, these nodes will be conceptually similar across people. Because ContextNodes help to group and organize nodes, they are useful in producing abstractions, just as a semantic frame might. For example, let’s consider again, the example of a car, as depicted in Figure 1. A car can be thought of as an assembly of its individual parts, or it can be thought of functionally as something that is a type of transportation that people use to drive from point A to point B. We need a mechanism to distinguish these two abstractions of a car. We could, of course, depend purely on lexical context from other parts of the larger lexical expression to help us pick the right abstractions; however, it is occasionally desirable to enforce more absolutely the abstraction boundaries. Of course, we can view an abstraction, as a type of packaged context. Along these lines, we can introduce two ContextNodes to define the appropriate abstractions. So far we have only talked about nodes that are stable word-concepts and stable contexts in the lexicon. These can be thought of as being stable in memory, and very slowly changing. However, it is also desirable to represent more temporary concepts, such as those used in thought. For example, to reason about “fast cars”, one might encapsulate one particular sense of fast car into a TransientNode. Or if one wanted to instantiate a car into a particular one and attribute some strange fantastical features to it, one can also accomplish this with a TransientNode. If there is little reason for it to persist, a TransientNode can slowly die out as its connections fade over time. However, if it becomes a useful concept, it might persist and be strengthened, evolving into a normal node. Although not shown in Figure 2, any of the three stable nodes can be transient. TransientNodes explain how fleeting concepts in thought can be reconciled with the lexicon, which contains more stable elements. We present a small ontology of relations to represent fairly generic relations between concepts. These are quite consistent, though not identical with network relations found in the literature []. The reason why relations are also expressed in objectoriented programming notation is because the process of search in the network engages in marker passing of relations. Oriented-oriented programming notation is a useful shorthand and is better than stating sequences of binary relations because it already has a notion of symbol binding. It also important to be reminded at this point, that each edge carries not only a named relation, but also a numerical weight, indicating the strength of a relation. Numerical weights are critically important in all processes of bubble networks, especially spreading activation and learning. Operators put certain conditions on relations. In Figure 1, road material may only take on the value of pavement or dirt, and not both at once. Some operators will only hold in a certain instantiation or a certain context, so operators can be conditionally activated by a context or node. For example, a person can drive and walk, but under the time context, a person can only drive XOR walk. 3.3 Processes Having described the types of nodes, relations, and operators, we now explain the processes that are core themes of bubble networks. top speed a.b car a.b() drive ------- a.b(c) speed a.b(c=d) fast car a.b tires (a.b).c rating ((a.b).c)=d fast car a.b paint (a.b).c drying time ((a.b).c)=d fast car b.a() wash ------- b.a(c) speed b.a(c=d) fast car a.b() drive ------- a.b(c) road c.d & a.b(c) speed limit c.d=e & a.b(c) car a.b() drive ------- a.b(c) road c.d & a.b(c) road material c.d=e & a.b(c) (a.b)=c fast (a) The car whose top speed is fast. car (b) The car that can be driven at a speed that is fast. (c) The car whose tires have a rating that is fast. (d) The car whose paint has a drying time that is fast. (e) The car that can be washed at a speed that is fast. fast pavement (f) The car that can be driven on a road whose speed limit is fast. e.f & c.d = e a.b(c) drying time e.f=g & c.d = e a.b(c) fast (g) The car that can be driven on a road whose road material is pavement, whose drying time is fast. Fig. 3. Different meanings of “fast car,” resulting from network traversal. Numerical weights and other context nodes are not shown. Edges are labeled with message passing, in OOP notation. The ith letter corresponds to the ith node in a traversal. Meaning Determination. One of the important tenets of the lexicon’s representation in bubble networks is that coherent meaning can only arise out of simulation. That is to say, out-of-context, word-concepts have so many possible meanings associated with each of them that we can only hope to make sense of a word by putting it into some context, be it, a topic area (e.g. traversing from “car” toward the ContextNode of “transportation”), or lexical context (e.g. traversing from “car” toward the normal node of “fast”). We motivate this meaning as simulation idea with the example of attribute attachment for “fast car”, as depicted in Figure 1. Figure 3 shows some of the different interpretations of “fast car”. As illustrated in Figure 3, “fast car” produces many different interpretations given no other context. Novel to bubble networks, not only are numerical weights passed (cf. Quillian’s spreading activation network), OOP-style messages are also passed. Therefore, “drying time” will not always relate to “fast” in the same sense. It depends on whether or not pavement is drying or a washed car is drying. Therefore, the history of traversal functions to nuance the meaning of the current node. Although traversal produces many meanings for “fast car,” most of the senses will not be very energetic, that is to say, they are not very plausible out of context. The senses given in Figure 3 are ordered by plausibility. Plausibility is determined by the activation energy of the traversal path. Spreading activation across a traversal path is different than spreading activation in literature. active j contexts j ijx n 1, n n n i (1) ij x ni M n1,n n cn n , n 1 (2) c Equation (1) shows how a typical activation energy for the xth path between nodes i and j is calculated in classical spreading activation systems (see Salton & Buckley, 1988). It is the summation over all nodes in the path, of the product of the activation energy of each node n along the path, times the magnitude of the edge weight leading into node n. However, in a bubble network, we would like to make use of extra information to arrive at a more precise evaluation of a path’s activation energy, especially against all other paths between i and j. This can be thought of as meaning disambiguation, because in the end, we inhibit the incorrect paths which represent incorrect interpretations. To perform this disambiguation, the influence of external contexts that are active (i.e. other parts of the lexical expression, relevant and active non-lexical knowledge, discourse context, and topic ContextNodes), and the plausibility of the OOP-message being passed are factored in. If we are evaluating a traversal path in a larger context, such as a part of a sentence or larger discourse structure, or some topic is active, then there will likely be a set of word-concept nodes and ContextNodes which have remained active. These contexts are factored into our spreading activation evaluation function (2) as the summation over all active contexts c of all paths from c to n. The plausibility of the OOP-message being passed M is also important. Adn ,n1 mittedly, for different linguistic assembly tasks, different heuristics will be needed. In attribute attachment (e.g. adj-noun compounds), the heuristic is fairly straightforward. The case in which the attribute characterizes the noun-concept directly is preferred, followed by the adjective characterizing the noun-concept’s ability or use (e.g. Fig. 3(b)) or subpart (e.g. Fig. 3(a,c,d)), followed by the adjective characterizing some external manipulation of the noun-concept (e.g. Fig. 3(e)). What is not preferred is when the adjective characterizes another noun-concept that is a sister concept (e.g. Fig. 3(f,g)). Our spreading activation function (2) incorporates classic spreading activation considerations of node activation energy and edge weight, with context influence on every node in the path, and OOP-message plausibility. Recall that the plausibility ordering given in Figure 3 assumed no major active contexts. However, let’s consider how the interpretation might change had the discourse context been a conversation at a car wash. In such a case, “car wash” might be an active ContextNode. So the meaning depicted in Fig. 3(e) would experience increased activation energy from the context term, "car wash", wash . This boost would likely make (e) a plausible, if not the preferred, interpretation. Encapsulation. Once a specific meaning is determined for a lexical compound, it may be desirable to refer to it, so, we can assign to it a new indexical feature. This happens by the process of encapsulation, in which a specific traversal of the network is captured into a new TransientNode. (Of course, if the node is used enough, over time, if may become a stable node). The new node inherits just the specific relations present in the nodes along the traversal path. Figure 4 illustrates sense (b) of “fast car”. fast car isA assoc car function AND assoc drive ----- param assoc speed value fast Fig. 4. Encapsulation. One meaning of “fast car” is encapsulated into a TransientNode, making it easy to reference and overload. More than just lexical compounds can be encapsulated. For example, groupings of concepts (such as a group of specific cars) can be encapsulated, along with objects that share a set of properties or descriptive features (Jackendoff calls these kinds), and even assertions and whole lines of reasoning can be encapsulated (with the help of the Boolean and ordering operators). And encapsulation is more than just a useful way of abstraction-making. Once a concept has been encapsulated, its meaning can be overloaded, evolving away from the original meaning. For example, we might instantiate “car” into “Mary’s car,” and then add a set of properties specific to Mary’s car. We believe encapsulation, along with classical weight learning, supports accounts of lexical evolution, namely, it helps to explain how new concepts may be acquired, concepts may be generalized (concept intersection), or individuated (concept overloading). Lexical Evolution. Along with context-sensitivity, lexical evolution is another way that the bubble network distinguishes itself from other lexicon representations. Already mentioned, encapsulation offers a mechanism by which concept combinations can be captured and overloaded. Variations on this idea allow for concept acquisition, deletion, generalization, and individuation. The primary drive behind evolution is utility. As the bubble network is invoked and used over time, concepts and relations which are more often traveled will have their weights promoted. The utility of a concept increases its stable activation energy. If it is useful to have two specific versions of a concept rather than a single concept, then individuation is likely. Similarly, if a general concept covers all the ground of individual examples, then, that general concept might become more stable over time and the individual examples may die off. Contrast utility-driven learning with rote memory learning, as in the case-based learning scheme of Schank & Riesbeck (1994). In their scheme, generalization is motivated by case similarity, so very similar cases might be merged, and not-sosimilar cases will not be merged. Our scheme makes a different prediction. Very similar cases will not be generalized if the differences between them are important enough to justify both cases existing. Not-so-similar cases might be merged if the cases are obscure enough to not be invoked very often. Utility learning is a benefit derived from maintaining intrinsic weights, as is done in most neural networks. Frame Learning. On a more practical note, one question which may be looming in the reader’s mind is how a bubble network might practically be constructed. Because of lexical evolution, it is theoretically possible to start with very few concepts and to slowly grow the network. However, a more practical solution would be to bootstrap the network by learning frame knowledge from existing lexicons, such as GLT, or even Cyc. Taking the example of Cyc, we might map Cyc containers into nodes, Cyc predicates into a combination of TransNodes and bubble network relations, and map micro-theories (Cyc’s version of contexts) into ContextNodes which activate concepts within each micro-theory. Assertional knowledge can be encapsulated into new nodes. Cyc suffers from the problem of rigidity, especially contextual rigidity, as exhibited by microtheories which predefine context boundaries. (Figure 5). However, we believe that once frames are imported into a bubble network, the notion of context will become much more flexible and dynamic, through the process of meaning determination. Contexts will also evolve, based on the notion of utility, not just predefinition. Fig. 5. Cyc’s notion of context via Microtheories, versus real contexts. 4 Implementation and Evaluation To test the ideas put forth in this paper, we implemented bubble networks over a subset of the Open Mind Commonsense Semantic Network (OMCSNet) (Liu & Singh, 2002) based on the Open Mind Commonsense knowledge base (Singh, 2002), and using the adaptive weight training algorithm developed for ARIA (Liu & Lieberman, 2002). Edge weights were assigned an a priori fixed value, based on the type of relation. The spreading activation evaluation function described in equation (2) was implemented. We planted 4 general ContextNodes through OMCSNet, based on proximity to the hasCollocate relation, which was translated into the assoc relation in the bubble network. An experiment was run over four lexical compounds, alternatingly turning on each of the ContextNodes plus the null ContextNode. ContextNode activations were set to a very high value to elicit a context-sensitive meaning. Table 1 summarizes the results. Table 1. Results of an experiment run to determine the effects of active context on attribute attachment in compounds. Compound (context) Fast horse ( ) Fast horse (money) Fast horse (culture) Fast horse (transportation) Cheap apartment ( ) Cheap apartment (money) Cheap apartment (culture) Cheap apartment (transportation) Love tree ( ) Love tree (money) Love tree (culture) Love tree (transportation) Talk music ( ) Talk music (money) Talk music (culture) Talk music (transportation) Top Interpretation ( ij x score in %) Horse that is fast. (30%) Horse that races, which wins money, is fast. (60%) Horse that is fast (30%) Horse is used to ride, which can be fast. (55%) Apartment that has a cost which can be expensive. (22%) Apartment that has a cost which can be expensive. (80%) Apartment is used for living, which is cheap in New York. (60%) Apartment that is near work; Gas money to work can be cheap (20%) Tree is a part of nature, which can be loved (15%) Buying a tree costs money; money is loved. (25%) People who are in love kiss under a tree. (25%) Tree is a part of nature, which can be loved (20%) Music is a language which has use to talk. (30%) Music is used for advertisement, which is an ability of talk radio. (22%) Music that is classical is talked about by people. (30%) Music is used in elevators where people talk. (30%) One difference between the attribute attachment example given earlier in the paper and the results of the evaluation is that assertional knowledge (e.g. “Gas money to work can be cheap”) is an allowable part of the traversal path. Assertional knowledge is encapsulated as a node. As the results show, meaning interpretation can be very context-sensitive. However, with it comes several consequences. First, meaning interpretation is very sensitive to the sorts of concepts/relations/knowledge present in the lexicon. For example, in the last example in Table 1, “talk music” in the transportation context was interpreted as “music is used in elevators, where people talk.” This interpretation came about, even though music is played in buses, cars, planes, and everywhere else in transportation. This has to do with the sparsity of relations in the test bubble network. Although those other transportation concepts were present, they were not properly connected to “music”. What this suggests is that meaning is not only influenced by what exists in the network, it is also heavily influenced by what is absent from the network, such as the absence of a relation that should exist. Generally though, this preliminary evaluation of bubble networks for meaning determination is promising, as it shows how the meaning of lexical expressions can be made very sensitive to context. Compare this with traditional notions of polysemy as a phenomena which is best handled through a fixed and predefined set of senses. But senses do not produce the generative behavior witnessed in real life, in which meaning is naturally nuanced by context. 5 Conclusion In this paper we presented a novel lexicon representation called the bubble network. Bubble networks hybridize a connectionist network with some symbol manipulation properties, resulting in an intrinsic lexical representation with the power to encode frame-based lexical semantics found in GLT. Nodes in a bubble network are indexical features for word-concepts, which then connect to other word-concepts through a small set of OOP relations. Contrasting the traditional approach taken to lexicons, in which semantics are packed into a word, the bubble networks approach de-emphasizes word-concept nodes, instead, positing that a word-concept is just the set of descriptive features it binds together. An implication of this is that words only have meaning when we traverse the network toward some context. For example, the meaning of “wedding” in the context of “event” may be that it has a ceremony, followed by a reception, and that invitations, gifts, and proper dress are required. But in the context of “ritual”, a wedding might more aptly mean an exchange of vows between a bride and a groom, accompanied by bridesmaids and a best man and flower girls. Or in a lexical expression, meaning will consist of the path which connects two lexical items, such as in “fast car.” Meaning is completely context-sensitive, and this notion is more aptly captured in a bubble network than in a traditional lexicon in which word and context boundaries are enforced. Because bubble networks have adaptive edge weights in addition to named relations, we can begin to provide an account for lexical evolution (word-concept acquisition, deletion, generalization, individuation) based on the notion of network utility. The availability of ContextNodes and the role of active contexts in a revised spreading activation function for bubble networks makes explicit the notion that meaning determination is a context-sensitive process. The mechanisms of encapsulation and overloading provide an account for lexical evolution, and also for temporary lexicon manipulations, such as assembling a temporary lexical expression. A cursory implementation and evaluation of bubble networks over OMCSNet yielded some interesting preliminary findings. The semantic interpretation of lexical compounds under different contexts is remarkably sensitive. However, these preliminary findings also tell a cautionary tale. The accuracy of a semantic interpretation is heavily reliant on the concepts in the network being well-connected. This makes the task of importing traditional lexicons into bubble networks all the more challenging, because traditional lexicons are typically not conceptually well-connected. References 1. Becker, S., Moscovitch, M., Behrmann, M., & Joordens, S. (1997). Long-term semantic priming: A computational account and empirical evidence. Journal of Experimental Psychology: Learning, Memory, & Cognition, 23(5), 1059-1082. 2. Brachman, Ronald J. (1979) "On the epistemological status of semantic networks," in Findler (1979) 3-50. 3. Ceccato, Silvio (1961) Linguistic Analysis and Programming for Mechanical Translation, Gordon and Breach, New York. 4. Collins, A. M., & Loftus, E. F. (1975). A spreading-activation theory of semantic processing. Psychological Review, 82, 407--428. 5. Fellbaum, C. (Ed.). (1998). WordNet: An electronic lexical database. Cambridge, MA: MIT Press. 6. G. Frege. On sense and reference. In P. Geach and M. Black, editors, Translations from the Philosophical Writings of Gottlob Frege, pages 56--78. Blackwell, Oxford, 1970. Originally published in 1892. 7. Frege, Gottlob (1879) Begriffsschrift, English translation in J. van Heijenoort, ed. (1967) From Frege to Gödel, Harvard University Press, Cambridge, MA, pp. 1-82. 8. Gallant, S., editor (1993). Neural Network Learning and Expert Systems. MIT Press, Cambridge, Massachusetts. 9. Goldstone, R. L., Rogosky, B. J. (2002). Using relations within conceptual systems to translate across conceptual systems. Cognition 84, 295-320 10. Jackendoff, R. (2002). Reference and Truth. In, Jackendoff, R., Foundations of Language. Oxford University Press, 2002. 11. Kolodner, Janet L. (1993) Case-Based Reasoning, Morgan Kaufmann Publishers, San Mateo, CA. 12. Laakso, A. & Cottrell, G. (2000). Content and cluster analysis: assessing representational similarity in neural systems, Philosophical Psychology, 13, 47-76 13. Lenat, D. B. (1995). CYC: A large-scale investment in knowledge infrastructure. Communications of the ACM, 38(11). 14. Liu, Hugo and Lieberman, Henry (2002). Robust photo retrieval using world semantics. In Proceedings of the 3rd LREC Workshop: Creating and Using Semantics for Information Retrieval and Filtering: State-of-the-art and Future Research (LREC2002), pp. 15-20, Las Palmas, Canary Islands. 15. Liu, Hugo and Singh, Push (2002). OMCSNet: A commonsense semantic network. MIT Media Laboratory Society of Mind Group Technical Report 02-1. 16. Masterman, Margaret (1961) "Semantic message detection for machine translation, using an interlingua," in NPL (1961) pp. 438-475. 17. J. McCarthy and P. J. Hayes. Some philosophical problems from the standpoint of Artificial Intelligence. In B. Meltzer and D. Michie, editors, Machine Intelligence 4, pages 463 -502. Edinburgh University Press, 1969. 18. Minsky, M. (1986). Society of Mind. Simon and Schuster, New York. 19. M. Minsky and S. Papert. Perceptrons: An Introduction to Computational Geometry. The MIT Press, 1969. 20. Pearl, J. (1988). Probabilistic Reasoning in Intelligent Systems: Networks of Plausible Inference. Morgan Kaufmann, San Mateo, CA. 21. Peirce, Charles Sanders (1885) "On the algebra of logic," American Journal of Mathematics 7, 180-202. 22. Petri, C.A., "Kommunikation mit Automaten" (in German), Schriften des IIM Nr. 2, Institut fur Instrumentelle Mathematik, Bonn, 1962. 23. Pustejovsky, J. (1991) `The generative lexicon', Computational Linguistics, 17(4), 409-441. 24. Putnam, H., (1975), The meaning of 'meaning', in Mind, language and reality, Cambridge University Press, New York, NY 25. Quine, W. V. and S. Ullian, Web of Belief, Random House, 1970. 26. Rieger, Chuck (1976) "An organization of knowledge for problem solving and language comprehension," Artificial Intelligence 7:2, 89-127. 27. Rosenblatt, F. (1958). The Perceptron: A Probabilistic Model for Information Storage and Organization in the Brain. Psychological Review, 65, 386--408. 28. Salton, G., and Buckley, C., (1988). On the Use of Spreading Activation Methods in Automatic Information Retrieval. Proceedings of the 11th Ann. Int. ACM SIGIR Conf. on R&D in Information Retrieval (ACM), 147-160.Schank, Roger (1972) "Conceptual Dependency: A Theory of Natural Language Understanding, " Cognitive Psychology 3, 552--631. 29. Schank, Roger C., Alex Kass, & Christopher K. Riesbeck (1994) Inside Case-Based Explanation, Lawrence Erlbaum Associates, Hillsdale, NJ. 30. Schank, Roger C., & Larry G. Tesler (1969) "A conceptual parser for natural language," Proc. IJCAI-69, 569-578. 31. Singh, Push, Lin, Thomas, Mueller, Erik T., Lim, Grace, Perkins, Travell, & Zhu, Wan Li (2002). Open Mind Common Sense: Knowledge acquisition from the general public. In Proceedings of the First International Conference on Ontologies, Databases, and Applications of Semantics for Large Scale Information Systems. Lecture Notes in Computer Science. Heidelberg: Springer-Verlag. 32. C. Wendelken and L. Shastri. (2002). Combining belief and utility in a structured connectionist agent architecture., Proceedings of Cognitive Science 2002, Fairfax, VA, August 2002. 33. Winston, Patrick Henry, (1975) "Learning structural descriptions from examples," in P. H. Winston, ed., The Psychology of Computer Vision, McGraw-Hill, New York, 157-209 34. Wittgenstein, L.: 1968, Philosophical Investigations, the English Text of the Third Edition. Translated by G. E. M. Anscombe. Basil Blackwell.