Advanced Computational Structural Genomics

advertisement

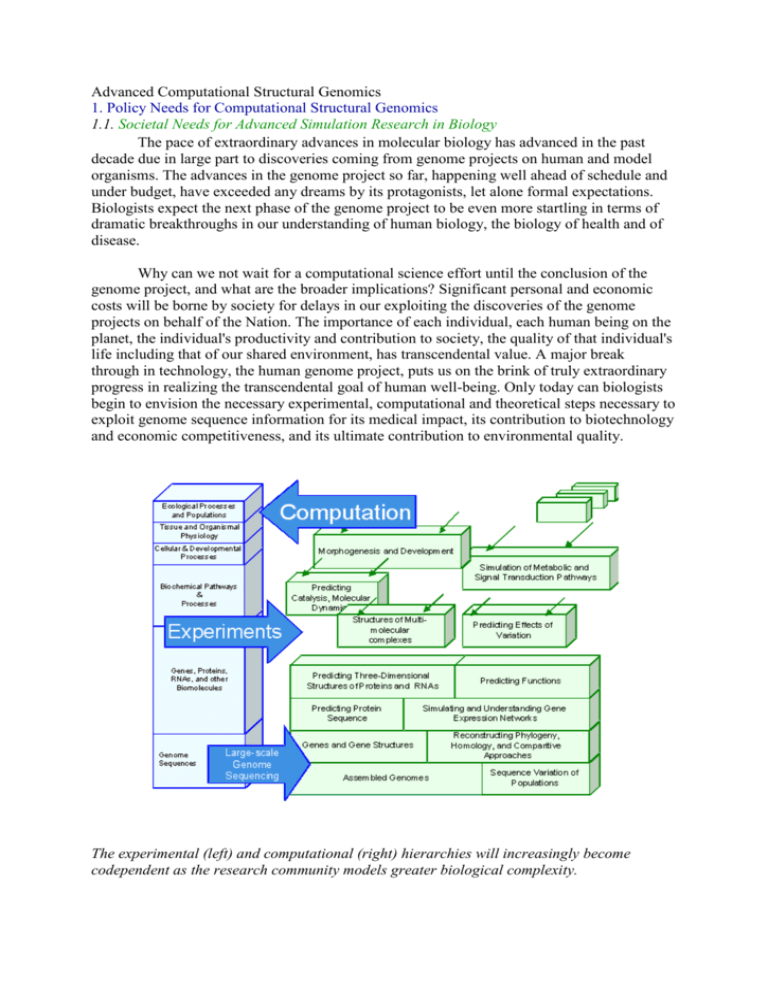

Advanced Computational Structural Genomics 1. Policy Needs for Computational Structural Genomics 1.1. Societal Needs for Advanced Simulation Research in Biology The pace of extraordinary advances in molecular biology has advanced in the past decade due in large part to discoveries coming from genome projects on human and model organisms. The advances in the genome project so far, happening well ahead of schedule and under budget, have exceeded any dreams by its protagonists, let alone formal expectations. Biologists expect the next phase of the genome project to be even more startling in terms of dramatic breakthroughs in our understanding of human biology, the biology of health and of disease. Why can we not wait for a computational science effort until the conclusion of the genome project, and what are the broader implications? Significant personal and economic costs will be borne by society for delays in our exploiting the discoveries of the genome projects on behalf of the Nation. The importance of each individual, each human being on the planet, the individual's productivity and contribution to society, the quality of that individual's life including that of our shared environment, has transcendental value. A major break through in technology, the human genome project, puts us on the brink of truly extraordinary progress in realizing the transcendental goal of human well-being. Only today can biologists begin to envision the necessary experimental, computational and theoretical steps necessary to exploit genome sequence information for its medical impact, its contribution to biotechnology and economic competitiveness, and its ultimate contribution to environmental quality. The experimental (left) and computational (right) hierarchies will increasingly become codependent as the research community models greater biological complexity. Individualized medicine- the recognition of individual differences in drug and treatment response, in disease development and progression, in the appearance and thus diagnosis of disease- justifies a much more aggressive approach to utilizing DNA sequence information and the introduction of simulation capabilities to extract the implicit information contained in the human genome. Each year thousands die and a hundred times more people suffer adverse reactions of various extents to drugs that are applied in perfectly correct usage, dose and disease specificity. Cancer in particular stands out as a disease of the genes. Two patients with what appear to be identical cancers (based on cellular pathology) at the same stage of malignancy, growth and dissemination will have very different responses to the same therapy regime; for example, one could rapidly fail to respond to treatment, become terminal in weeks, and the other could respond immediately and ultimately recover fully. Re-engineering microbes for bioremediation will depend directly on the understanding derived from the simulation studies proposed here. The design of new macromolecules and the redesign of microbe metabolism based on simulation studies, truly grand challenges for applied biology, will contribute to a wide range of environmental missions for the Department, including the environmental bioremediation of the Nation's most contaminated sites, often mixed waste for which no economically feasible cleanup technology currently exists. Many aspects of sustainable development, changing the nature of industrial processes to use environmentally friendly processes, depend on the same kinds of advances. Similarly, in the longer term the understanding of living systems will also contribute to environmental research on carbon management. To exploit the inherent genome information derived from knowing the DNA sequences, computational advances, along with related experimental biotechnology, are essential. Knowing the sequence of the DNA does not tell us about the function of the genes, specifically the actions of their protein products - where, when, why, how the proteins act is the essence of biological knowledge required. Encoded in the DNA sequence is a protein's three-dimensional topography, which in turn determines function; uncovering this sequencestructure-function relationship is the core goal of modern structural biology today. The goal of the Advanced computational structural genomics initiative is to link sequence, structure and function, and to move from analysis of individual macromolecules to macromolecular assemblies and complex oligomeric interactions that make up the complex processes within the cell. 1.2 Elucidating the Path from Protein Structure, to Function, to Disease A particular sequence derived from the genome encodes in a character string the threedimensional structure of a protein that performs a specific function in the cell. The primary outcome of the Argonne Workshop on Structural Genomics emphasized the experimental goal of determining the relationship between sequence and structure at a level unattainable before the coordinated effort to map and sequence the genomes of many organisms. It is in fact a logical extension of the genome effort to systematically elaborate DNA sequences into full three dimensional structures and functional analysis; this new effort will require the same level of cooperation and collaboration among scientists as was necessary in the original genome project. The Structural Genomics initiative is gaining momentum and will primarily focus initially on the infrastructure and support necessary to realize high throughput experimental structures by x-ray crystallography and high field NMR. Success of this effort is in some sense guaranteed, and the computational challenges that are posed when a structural genomics initiative of this scale is contemplated is already clear cut. It is the outgrowth from this direction, a computational genomics initiative- that will capture everything from sequence, structure and functional genomics to genetic networks and metabolic engineering to forward folding of macromolecules and kinetics to cellular level interactions. This approach makes all the modeling and simulation of macromolecules fair game and moves toward the complex systems approach, the complicated level of real living systems rather than individual macromolecules. The sequencing of microbial genomes containing several thousand genes and the eventual completion of human and other model organism genomes is the underlying driving force for understanding biological systems at a whole new level of complexity. There is for the first time the potential to understand living organisms as whole, complex dynamic systems and to use large-scale computation to simulate their behavior. Modeling all levels of biological complexity is well beyond even the next generation of teraFLOP computers, but each increment in the computing infrastructure makes it possible to move up the biological complexity ladder and solve previously unsolvable classes of problems. 2. Scientific Scope of Computational Structural Genomics 2.1 The First Step Beyond the Genome Project: High-Throughput Genome Assembly, Modeling, and Annotation The first step in the biological hierarchy is a comprehensive genome-based analysis of the rapidly emerging genomic data. With changes in sequencing technology and methods, the rate of acquisition of human and other genome data over the next few years will be ~100 times higher than originally anticipated. Assembling and interpreting these data will require new and emerging levels of coordination and collaboration in the genome research community to develop the necessary computing algorithms, data management and visualization system. Annotation the elucidation and description of biologically relevant features in the sequence is essential in order for genome data to be useful. The quality with which annotation is done will have direct impact on the value of the sequence. At a minimum, the data must be annotated to indicate the existence of gene coding regions and control regions. Further annotation activities that add value to a genome include finding simple and complex repeats, characterizing the organization of promoters and gene families, the distribution of G+C content, and tying together evidence for functional motifs and homologs. Table I shows many high-priority computational challenges associated with the analysis, modeling and annotation of genome data. The first is the basic assembly and interpretation of the sequence data itself (analysis and annotation) as it is produced at increasing rates over the next five years. There will be a never-ending race to keep up with this flow, estimated at about 200 million base pairs per day by some time in 1999, and to execute the required computational codes to locate and understand the meaning of genes, motifs, proteins, and genomes as a whole. Cataloging the flood of genes and proteins and understanding their relationship to one another, their variation between individuals and organisms, and evolution represents a number of very complex computational tasks. Many calculations such as multiple sequence alignments, phylogenetic tree generation, and pedigree analysis are NP-hard problems and cannot be currently done at the scale needed to understand the body of data being produced, unless a variety of shortcuts is introduced. Related to this is the need to compare whole genomes to each other on many levels both in terms of nucleic acid and proteins and on different spatial scales. Large-scale genome comparisons will also permit biological inference of structure and function. Table I. Current and Expected Sustained Capability Requirements for Major Community Genomics Codes Problem Class Sequence assembly Binary sequence comparison Multiple sequence comparison Gene modeling Phylogeny trees Protein family classification Sustained Capability 1999 >1012 flops 1012 flops Sustained Capability 2000 1014 flops >1014 flops 1012 flops >1014 flops >1015 flops 1011 flops >1010 flops 1017 flops 1013 flops 1012 flops Sequence Comparisons Against Protein Families for Understanding Human Pathology Searching a protein sequence database for homologues is a powerful tool for discovering the structure and function of a sequence. Amongst the algorithms and tools available for this task, Hidden Markov model (HMM)-based search methods improve both the sensitivity and selectivity of database searches by employing position-dependent scores to characterize and build a model for an entire family of sequences. HMMs have been used to analyze proteins using two complementary strategies. In the first, a sequence is used to a search a collection of protein families, such as Pfam, to find which of the families it matches. In the second approach an HMM for a family is used to search a primary sequence database to identify additional members of the family. The latter approach has yielded insights into protein involved in both normal and abnormal human pathology such as Fanconi Anaemia A, Gaucher disease, Krabbe disease, polymyositis scleroderma and disaccharide intolerance II. HMM-based analysis of the Werner Syndrome protein sequence (WRN) suggested it possessed exonuclease activity, and subsequent experiments confirmed the prediction [1]. Like WRN, mutation of the protein encoded by the Klotho gene lead to a syndrome with features resembling ageing. However, Klotho is predicted to be a member of the family 1 glycosidase (see Figure). Eventually, large-scale sequence comparisons against HMM models for protein families will require enormous computational resources to find these sequencefunction correlations over genome-scale databases. The similarities and differences between two plant and archeal members of a family of glycosidases that includes a protein implicated in ageing. Ribbons correspond to the betastrands and alpha-helices of the underlying TIM barrel (red) and family 1 glycosidase domain (cyan). Amino acid side chains drawn in magenta, yellow and green are important for structure and/or function. The loop in yellow denotes a region proposed to be important for substrate recognition. The 2-deoxy-2-fluorglucosyl substrate bound at the active site of one of the enzymes is shown with carbon atoms in grey, oxygen in red and fluorine in green. 2.2 From Genome Annotation to Protein Folds: Comparative Modeling and Fold Assignment The key to understanding the inner workings of cells is to learn the three-dimensional atomic structures of the some 100,000 proteins that form their architecture and carry out their metabolism. These three-dimensional (3D) structures are encoded in the blueprint of the DNA genome. Within cells, the DNA blueprint is translated into protein structures through exquisitely complex machinery- itself composed of proteins. The experimental process of deciphering the atomic structures of the majority of cellular proteins is expected to take a century at the present rate of work. New developments in comparative modeling and fold recognition will short circuit this process, that is we can learn to translate the DNA message by computer. Protein fold assignment. A genome-encoded amino acid sequence (center) is tested for compatibility with a library of known 3D protein folds[2]. An actual library would contain of the order of 1000 folds; the one shown here illustrates the most common protein folding motifs. There are two possible outcomes of the compatibility test: that the sequence is most compatible with one of the known folds or that the sequence is not compatible with any known fold. The second outcome may mean either that the sequence belongs to one of the folds not yet discovered, or that the compatibility measures are not fully developed to detect the distant relationship of sequence to its structure. The goal of fold assignment and comparative modeling is to assign each new genome sequence to the known protein fold or structure that it most closely resembles, using computational methods. Fold assignment and comparative modeling techniques can then be helpful in proposing and testing hypotheses in molecular biology, such as in inferring biological function, predicting the location and properties of ligand binding sites, in designing drugs, testing remote protein-protein relationships. It can also provide starting models in Xray crystallography and NMR spectroscopy. The success of these methods rests on a fundamental experimental discovery of structural biology: the 3D structures of proteins have been better conserved during evolution than their genome sequences. When the similarity of a target sequence to another sequence with known structure is above a certain threshold, comparative modeling methods can often provide quantitatively accurate protein structure predictions, since a small change in the protein sequence usually results in a small change in its 3D structure. Even when the percentage identity of a target sequence falls below this level, then at least qualitative information about the overall fold topology can often be predicted. Several fundamental issues remain to amplify the effectiveness of fold assignment and comparative modeling. A primary issue in fold assignment is the determination of better multipositional compatibility functions which will extend fold assignment into the "twilight zone" of sequence homology. In both fold assignment and comparative modeling, better alignment algorithms that deal with multipositional compatibility functions are needed. A move toward detailed empirical energy functions and increasingly sophisticated optimization approaches in comparative modeling will occur in the future. The availability of tera-scale computational power will further extend fold assignment in the following ways: the alignment by dynamic programming is performed in order L2 time, where L is the length of the sequence/structure. For 100,000's of sequences and 10,000's of structures (each of order 102 amino acids long), an all to all comparison of sequence to structure using dynamic programming would require on order of 1013 operations. The use of multipositional functions that will scale as L3 or L4 depending on the complexity of the compatibility function, and may require 1015 to 1017 FLOPS, and will likely need an effective search strategy in addition. The next generation of 100 teraFLOP computers addresses both fundamental issues in reliable fold assignment. First, an increase in complexity in alignment algorithms for multipositional functions that scale beyond L2, and secondly the development of new multipositional compatibility functions whose parameters are derived by training on large databases with multiple iterations, resulting in an increase in sensitivity and specificity of these new models. Availability of tera-flop computing will greatly benefit comparative protein structure modeling of both single proteins and the whole human genome. Once an alignment is determined, comparative modeling is formally a problem in molecular structure optimization. The objective function is similar in complexity to typical empirical protein force fields used in ab initio global optimization prediction and protein folding, and scales as M2, where M is the number of atoms in the model. A typical calculation for a medium sized protein takes in the order of 1012 Flops. More specifically, the increased computing power is likely to improve the accuracy of comparative models by alleviating the alignment problem and the loop modeling problem, at the very least. It is probable that the effect of alignment errors will decrease by performing independent comparative modeling calculations for many different alignments. This would in essence correspond to a conformational search with soft constraints imposed by the alignment procedure. Such a procedure would increase the computational cost to 1015 Flops for a single protein, and to 1020 Flops for the human genome. Specialized loop modeling procedures can be used after the initial comparative models are obtained by standard techniques, typically needing about 5000 energy function evaluations to obtain one loop conformation. It is standard to calculate an "ensemble" of 25-400 loop conformations for each loop sequence. Thus, the total number of function evaluations is on the order of 106 for prediction of one loop sequence. Applying these procedures to the whole human genome would take on the order of 1018 Flops. Large-Scale Comparative Modeling of Protein Structures of the Yeast Genome Recently, a large-scale comparative protein structure modeling of the yeast genome was performed[3]. Fold assignment, comparative protein structure modeling, and model evaluation were completely automated. As an illustration, the method was applied to the proteins in the Saccharomyces cerevisiae (baker's yeast) genome. It resulted in all-atom 3D models for substantial segments of 1071 (17%) of the yeast proteins, only 40 of which have had their 3D structure determined experimentally. Of the 1071 modeled yeast proteins, 236 were related clearly to a protein of known structure for the first time; 41 of these have not been previously characterized at all. Many of the models are sufficiently accurate to facilitate interpretation of the existing functional data as well as to aid in the construction of mutants and chimeric proteins for testing new functional hypotheses. This study shows that comparative modeling efficiently increases the value of sequence information from the genome projects, although it is not yet possible to model all proteins with useful accuracy. The main bottlenecks are the absence of structurally defined members in many protein families and the difficulties in detection of weak similarities, both for fold recognition and sequence-structure alignment. However, while only 400 out of a few thousand domain folds are known, the structure of most globular folds is likely to be determined in less than ten years. Thus, comparative modeling will conceivably be applicable to most of the globular protein domains close to the completion of the human genome project. Large-scale protein structure modeling. A small sample of the 1,100 comparative models calculated for the proteins in the yeast genome is displayed over an image of a yeast cell. 1.3 Low Resolution Folds to Structures with Biochemical Relevance: Toward Accurate Structure, Dynamics, and Thermodynamics As we move to an era of genetic information at the level of complete genomes, classifying the fold topology of each sequence in the genome is a vital first step toward understanding gene function. However, the ultimate limitation in fold recognition is that these algorithms only provide "low-resolution" structures. It is crucial to enhance and develop methods that permit a quantitative description of protein structure, dynamics and thermodynamics, in order relate specific sequence changes to structural changes, and structural changes to associated functional/phenotypic change. These more accurate approaches will greatly improve our ability to modify proteins for novel uses such as to change the catalytic specificity of enzymes and have them degrade harmful waste products. While often tertiary fold of proteins involved in disease change dramatically on mutation, those whose fold remains invariant, may have quantitative differences in structure that can have important macroscopic effects on function that can be manifested as disease. More accurate screening for new drug targets that bind tightly to specific protein receptors for inhibition will require quantitative modeling of protein/drug interactions. Therefore the next step is the quantitative determination of protein structure starting from the fold prediction, and ultimately directly from sequence. Estimate of the number of updates of the molecular dynamics algorithmic kernal that can be accomplished for a 100 amino acid protein and 3000 water molecules with 100 tflop resources. The effort possible from a Scientific Simulation Initiative of 100 terascale means that time and size scales become accessible for the first time for predicting and folding protein structure, and simulating protein function and thermodynamics. The simulation of a protein folding trajectory of a 100 amino acid protein and 3000 water molecules would require 10121015 updates of an empirical energy function (and its derivatives) that scales as N2, where N will range from 10,000 to 100,000. Many folding trajectories will likely be needed to understand the process on how proteins self-assemble. Optimization approaches for generating a diversity in biochemically relevant structures and misfolded conformers will take on the order of 108-1010 updates depending on the number of conformations and improvements in algorithms. The simulations of relative free energies important for determining drug binding affinities can take on the order of 106 steps, and the thermodynamic derivatives >106 updates. Simulation of the Molecular Mechanism of Membrane Binding of Viral Envelope Proteins The first step of infection by enveloped virus proteins involves the attachment of a viral envelope glycoprotein to the membrane of the host cell. This attachment leads to fusion of viral and host cell membranes, and subsequent deposition of viral genetic material into the cell. It is known that the envelope protein, hemagglutinin, exists under normal conditions in a "retracted" state where the peptide that actually binds to the sialylated cell surface receptor is buried some 100Å from the distal tip of the protein, and is therefore not capable of binding to the cell membrane. However, at low pH a major conformational change occurs whereby the fusion peptide is delivered (~100Å !) via a "spring-loaded mechanism" to the distal tip where it is available for binding to the cell membrane. While the crystal structures of the inactive (neutral pH) and active (low pH) forms are known, the molecular details of the large-scale conformational change that produces the activated hemagglutinin, and of the binding of the fusion peptide to the cell surface receptors, are not known. As many aspects of this process are likely prototypical of a class of enveloped viruses including HIV, a detailed understanding of the molecular mechanism would be beneficial in guiding efforts to intervene before the viral infection is completed. Atomistic simulations of the process of viral binding to cell membranes are extremely demanding computationally [4]. Taken together, the hemagglutinin, lipid membrane, and sufficient water molecules to solvate the system in a periodically replicable box adds up to more than 100,000 atoms, an order of magnitude larger than what is presently considered a large biomolecular system. Furthermore, the large scale conformational changes in the protein, and displacements of lipids as the protein binds the membrane involve severe time scale bottlenecks as well. X-ray crystal structure of the fusion-activated (low pH) form of the influenza virus hemagglutinin poised above a small (256 lipids) patch of a lipid membrane from an MD simulation. The N-terminal peptides that actually bind to the membrane are not present in this hemagglutinin structure. An all-atom model of this complex plus solvent in a periodically replicated box would contain >100,000 atoms, and long time scale motions would need to be simulated to understand this step in the mechanism of viral infection. 2.4 Advancing Biotechnology Research: In Silico Drug Design The robust prediction of protein structure ultimately ties directly into our ability to rationally develop highly specific therapeutic drugs and to understand enzymatic mechanisms. Calculations of affinities with which drug molecules bind to proteins that are important in metabolic reactions and molecular signaling processes, and the mechanisms of catalytic function of certain key enzymes, can be obtained with computational approaches described here. However, there are many challenges to the simulations of these biological processes that arise from the large molecular sizes and long time scales relevant for biological function, or the subtle energetics and complex milieu of biochemical reactions. Some of these same computational methods and bottlenecks arise in the evaluation of the binding affinity of drugs to specific targets, but the task of the drug designer is further complicated by the need to identify a small group of compounds out of a virtually limitless universe of combinatorial possibilities. Only a small percentage of a pool of viable drug candidates actually lead to the identification of a clinically useful compound, with typically over $200 million spent in research costs to successfully bring it to market. On average, a period of 12 years elapses between the identification and FDA approval of a successful drug, with the major bottleneck being the generation of novel, high-quality drug candidates. While rational, computer-based methods represent a quantum leap forward for identifying drug candidates, substantial increases in compute power are needed to allow for both greater selection sensitivity and genome-scale modeling of future drugs. Table 2 below gives an estimate of the method and current computational requirements to complete a binding affinity calculation for a given drug library size. Going down the table for a given model complexity is a level of computational accuracy. The benefits of improved model accuracy must be offset against the cost of evaluating a model over the ever increasing size of the drug compound library brought about by combinatorial synthesis, and further exacerbated by the high throughput efforts of the genome project and structural annotation of new protein targets. Table 2. Estimates of current computational requirements to complete a binding affinity calculation for a given drug library size. Modeling complexity Method Size of library Required computing time Molecular Mechanics SPECITOPE 140,000 ~1 hour Rigid ligand/target LUDI 30,000 1-4 hours CLIX 30,000 33 hours Molecular Mechanics Hammerhead 80,000 3-4 days Partially flexible ligand DOCK 17,000 3-4 days Rigid target DOCK 53,000 14 days 50,000 21 days 1 ~several days 1 >several weeks Molecular Mechanics ICM Fully flexible ligand, Rigid target Molecular Mechanics AMBER Free energy perturbation CHARMM QM Active site and Gaussian, MM protein Q-Chem State-of-the-art quantum chemistry algorithms can expand the applicability of QM/MM methods to simulate a greatly expanded QM subsystem for enzymatic studies, or even estimation of drug binding affinities. An estimate of the cost of using QM methods for evaluating a single energy and force evaluation system of 104 heavy atoms, would require resources that can handle ~1016 FLOPS; on a 100 teraFLOP machine this would require five minutes. Parallel versions of these methods have been implemented and are available at a number of universities and DOE laboratories. On current generation teraFLOP platforms, QM calculations of unprecedented size are now possible, allowing HF optimizations on systems with over 1000 atoms and MP2 energies on hundreds of atoms. The presently available traditional-scaling (~N3) first principles molecular dynamics code is running efficiently on serial platforms, including high-end workstations and vector supercomputers. The ultimate goal is to develop linear scaling quantum molecular dynamics code. It will be important to adapt these codes to the parallel architectures, requiring rewriting parts of existing programs, and developing linear scaling algorithms 2.5 Linking Structural Genomics to Systems Modeling: Modeling the Cellular Program The cellular program that governs the growth, development, environmental response, and evolutionary context of an organism does so robustly in the face of a fluctuating environment and energy sources. It integrates numerous signals about events the cell must track in order to determine which reactions to turn on, off, or slow down and speed up. These signals, which are derived both from internal processes, other cells, and changes in the extracellular medium, arrive asynchronously, and are multivalued in meaning. The cellular program also has memory of its own particular history as written in the complement and concentrations of chemicals contained in the cell at any instant. The circuitry that implements the working of a cell and/or collection of cells is a network of interconnected biochemical, genetic reactions, and other reaction types. The experimental task of mapping genetic regulatory networks using genetic footprinting and two-hybrid techniques is well underway, and the kinetics of these networks is being generated at an astounding rate. Technology derivatives of genome data such as gene expression microarrays and in vivo fluorescent tagging of proteins through genetic fusion with the GFP protein can be used as a probe for network interaction and dynamics. If the promise of the genome projects and the structural genomics effort is to be fully realized, then predictive simulation methods must be developed to make sense of this emerging experimental data. First is the problem of modeling the network structure, i.e. the nodes and connectivity defined by sets of reactions among proteins, small molecules and DNA. Second is the functional analysis of a network using simulation models built up from "functional units" describing the kinetics of the interactions. Prediction of networks from genomic data can be approached from several directions. If the function of a gene can be predicted from homology, then prior knowledge of the pathways in which that function is found in other organisms can be used to predict the possible biochemical networks in which the protein participates. Homology approaches based on protein structural data or functional data for a protein previously characterized can be used to predict the type of kinetic behavior of a new enzyme. Thus structural prediction methods that can predict the fold of the protein product of a given gene are fundamental to the deduction of the network structure. The non-linearity of the biochemical and genetic reactions, along with the high degree of connection (sharing of substrates, products and effectors) among these reactions, make the qualitative analysis of their behavior as a network difficult. Furthermore, the small numbers of molecules involved in biochemical reactions (typical concentrations of 100 molecules/cell) ensure that thermal fluctuations in reaction rates are expected to become significant compared to the average behavior at such low concentrations. Since genetic control generally involves only one or two copies of the relevant promoters and genes per cell, this noise is expected to be even worse for genetic reactions. The inherent randomness and discreteness of these reactions can have significant macroscopic consequences such as that common inside living cells. There are three bottlenecks in the numerical analysis of biochemical reaction networks. The first is the multiple time scales involved. Since the time between biochemical reactions decreases exponentially with the total probability of a reaction per unit time, the number of computational steps to simulate a unit of biological time increases roughly exponentially as reactions are added to the system or rate constants are increased. The second bottleneck derives from the necessity to collect sufficient statistics from many runs of the Monte-Carlo simulation to predict the phenomenon of interest. The third bottleneck is a practical one of model building and testing: hypothesis exploration, sensitivity analyses, and back calculations, will also be computationally intensive. Modeling the Reaction Pathway for the Glycolytic Biochemical System A novel gene expression time series analysis algorithm known as the Correlation Metric Construction uses a time lagged correlation metric as a measure of distance between reacting species. The constructed matrix R is then converted to a Euclidean distance matrix D, and multidimensional scaling, MDS, is used to allow the visualization of the configuration of points in high dimensional space as a two dimensional stick and ball diagram. The goal of this algorithm is to deduce the reaction pathway underlying the response dynamics, and was used on the first few steps of the glycolytic pathway determined by experiment[5]. The reconstituted reaction system of the glycolytic pathway, containing eight enzymes and 14 metabolic intermediates, was kept away from equilibrium in a continuous-flow, stirred-tank reactor. Input concentrations of adenosine monophosphate and citrate were externally varied over time, and their concentrations in the reactor and the response of eight other species were measured. The CMC algorithm showed a good prediction of the reaction pathway from the measurements in this much-studied biochemical system. Both the MDS diagram itself and the predicted reaction pathway resemble the classically determined reaction pathway. In addition, CMC measurements yield information about the underlying kinetics of the network. For example, species connected by small numbers of fast reactions were predicted to have smaller distances between them than species connected by a slow reaction. Plot of the time-lagged correlation function of G6P with all other species. The experimentally determined lagged correlation functions. The graph clarifies the temporal ordering data inherent in the correlation functions. (B) The 2D projection of the MDS diagram. Each point represents the calculated time series of a given species. The closer two points are, the higher the correlation between the respective time series. Black (gray) lines indicate negative (positive) correlation between the respective species. (C) Predicted reaction pathway derived from the CMC diagram. Its correspondence to the known mechanism is high. 3. Implicit Collaborations Across the DOE Mission Sciences Computational modeling plays a key role in all scientific understanding. Therefore it is probably not surprising that disparate scientific disciplines share common modeling strategies, algorithmic bottlenecks, and information technology issues, and use computer scientists and applied mathematicians as a common resource. In this Section we emphasize what are the cross-cutting computational problems whose solutions could benefit a large number of scientific applications, or where technologies should be transferred between the computational biology domain and other disciplines. 3.1 Computer Hardware The advanced computational biology simulations described in this white paper will require computer performance well beyond what is currently available, but computational speed alone will not ensure that the computer is useful for any specific simulation method. Several other factors are critical including the size of primary system memory (RAM), the size and speed of secondary storage, and the overall architecture of the computer. This will undoubtedly be true for global climate, combustion, and other basis energy science areas. Many parallel processing computer architectures have been developed over the years, but the dominant parallel architecture that has emerged is the distributed memory, multiple instruction-multiple data architecture (MIMD), which consists of a set of independent processors with their own local RAM memory interconnected by some sort of communication channel. Such an architecture is characterized by the topology and speed of the interconnection network, and by the speed and memory size of the individual processors. All of the current generation of teraFLOP-class computers, including ASCI Red and Blue are of this design. Just as with the computer hardware, there have been a large number of software programming paradigms developed for parallel computers. A great deal of research has gone into developing software tools to assist in parallel programming or even to automatically parallelize existing single-processor software. Selected parallelization and debugging tools can assist and new programming models such as Object-Oriented programming (using C++ or FORTRAN90) can help hide the details of the underlying computer architecture. At the current time, however, efficiently using massively parallel computers primarily involves redesigning and rewriting software by hand. This is complicated by the facts that the best serial (single processor) algorithms are often not the best suited for parallel computers and the optimal choice of algorithm often depends on the details of the computer hardware. The different simulation methods presently used have different requirements of parallel computer hardware. Simulation methods which involve calculating averaged properties from a large number of smaller calculations that can be individually run on gigaFLOP class processors are most ideally suited for parallelism. These methods include classical and quantum Monte Carlo. In these simulations a minimal amount of initial data can be sent to each processor which then independently calculates a result that is communicated back to a single processor. By choosing an appropriate size of problem for each single processor (problem granularity), these algorithms will work efficiently on virtually any MIMD computer, including separate computer workstations linked by local-area networks. The quantum chemical and molecular dynamics methods, or certain optimization algorithms, in which all processors are applied to the calculation of a single chemical wave function or trajectory, involve much greater challenges to parallelization and involve greater constraints on the parallel computer architecture. Since all processors are involved in a single computation, interprocessor communication must occur. It is the rate of this communication, characterized in term of raw speed (bandwidth) and initialization time (latency) that usually limits the efficient use of parallel computers. The minimal necessary communication rate depends exquisitely on the simulation type, choice of algorithm and problem size. Generally, it is essential software design criteria that as the problem scales to larger size, the ratio of computational operations per communication decrease (or at least remain constant), so that for some problem size, the communication rate will not constitute a bottleneck. Moreover, it is important that the work per processor, or "load balance", scale evenly so that no processors end up with much larger computational loads and become bottlenecks. In a broad sense, the nature of computational biology simulations–in particular the physical principle that interactions attenuate with distance–will ensure that scalable parallel algorithms can be developed, albeit at some effort. Even given the very broad range of simulation methods required by computational biology, it is possible to provide some guidelines for the most efficient computer architectures. Regarding the size of primary memory, it is usually most efficient if a copy of the (6xN) set of coordinates describing a time step of a molecular dynamics simulation or the (NxN) matrices describing the quantum chemical wavefunction, can be stored on each processing element. For the biological systems of the sort described in this white paper, this corresponds to a minimum of several hundred megabytes of RAM per processor. Moreover, since many of the simulation methods involve the repeated calculation of quantities that could be stored and reused (e.g. two electron integrals in quantum chemistry or interaction lists in molecular dynamics), memory can often be traded for computer operations so that larger memory size will permit even larger simulations. Similarly, general estimates can be made for the minimal interprocessor communication rates. Since the goal of parallel processing is to distribute the effort of a calculation, for tightly coupled methods such as quantum chemical simulations, it is essential that the time to communicating a partial result be less than the time to simply recalculate it. For example, the quantum chemical two-electron integrals require 10-100 floating point operations to calculate, so that they can be usefully sent to other processors only if that requires less than ~100 cycles to communicate to send the 8 or 16 byte result. Assuming gigaFLOP speeds for individual processing elements in the parallel computers, this translates roughly to gigabyte/sec interprocessor communication speeds. (Note that many partial results involve vastly more operations, so that they place a much weaker constraint on the communication rate. 3.2 Information Technologies and Database Management "Biology is an Information Science" [6] and the field is poised to put into practice new information science and data management technologies directly. Two major conferences are emerging within the field of computational biology (ISMB - Intelligent Systems for Molecular Biology; and RECOMB - Research in Computational Biology). Each year, associated workshops focus on how to push new techniques from computer science into use in computational biology. For example, at ISMB-94 a workshop focused on problems involved in integrating biological databases; follow-up workshops in 1995 and 1996 explored CORBA and java as methods toward integration solutions. In 1997, a post-conference workshop focused on issues in accurate, usable annotations of genomes. This year, a pre-conference workshop will explore how to use text-processing and machine-translation methods for building ontologies to support cross-linking between databases about organisms. All of these criteria require ultra-high-speed networks to interconnect students, experimental biologists, and computational biologists and publicly funded data repositories. This community will, for example, benefit directly from every new distributed networking data exchange tool that develops as a result of Internet-II and the high-speed Energy Sciences network. Data warehousing addresses a fundamental data management issue: the need to transparently query and analyze data from multiple heterogeneous sources distributed across an enterprise or a scientific community. Warehousing techniques have been successfully applied to a multitude of business applications in the commercial world. Although the need for this capability is as vital in the sciences as in business, functional warehouses tailored for specific scientific needs are few and far between. A key technical reason for this discrepancy is that our understanding of the concepts being explored in an evolving scientific domain change constantly, leading to rapid changes in data representation. When the format of source data changes, the warehouse must be updated to read that source or it will not function properly. The bulk of these modifications involve extremely tedious, low-level translation and integration tasks which typically require the full attention of both database and domain experts. Given the lack of the ability to automate this work, warehouse maintenance costs are prohibitive, and warehouse "up-times" severely restricted. This is the major roadblock to a successful warehouse to for scientific data domains. Regardless of whether the scientific domain is genome, combustion, high energy physics, or climate modeling, the underlying challenges for data management are similar, and present in varying degrees for any warehouse. We need to move towards the automation of these scientific tasks. Research will play a vital role in achieving that goal and in scaling warehousing approaches to dynamic scientific domains. Warehouse implementations have an equally important role; they allow one to exercise design decisions, and provide a test-bed that stimulates the research to follow more functional and robust paths. 3.3 Ensuring Scalability on Parallel Architectures In all of the research areas, demonstrably successful parallel implementations must be able to exploit each new generation of computer architectures that will rely on increased number of processors to realize multiple teraFLOP computing. We see the use of the paradigm/software support tools as an important component of developing effective parallelization strategies that can fully exploit the increased number of processors that will comprise a 100 teraFLOP computing resource. However, these paradigm/software support libraries have largely been developed on model problems at a finer level of granularity than "real life" computational problems. But it is the "real life" problems that involve complexity in length scales, time scales, and severe scalings of algorithmic kernals that are in need of the next and future generations of multiple teraFLOP computing. The problems described in this initiative provide for a more realistic level of granularity to investigate the improved use of software support tools for parallel implementations, and may improve the use of high computing resources by the other scientific disciplines, such as climate, combustion, and materials, that need scalable algorithms. Even when kernals can be identified and parallel algorithms can be designed to solve them, often the implementation does not scale well with the number of processors. Since 100 teraFLOP computing will only be possible on parallel architectures, problems in scalability is a severe limitation in realizing the goals of Advanced Computational Structural Genomics. Lack of scalability often arises from straightforward parallel implementations where the algorithm is controlled by a central scheduler and for which communication among processors is wired to be synchronous. In the computer science community, various paradigms and software library support modules exist for exploring better parallel implementations. These can decompose the requirements of a problem domain into high-level modules, each of which is efficiently and portably supported in a software library that can address issues involving communication, embedding, mapping, etc for a scientific application of interest. These tools allow different decompositions of a parallel implementation to be rapidly explored, by handling all of the low-level communication for a given platform. However, these paradigms and their supporting software libraries usually are developed in the context of model mathematical applications, which are at a finer level of granularity than "real life" computational science problems. A unique opportunity exists to use some of the computational science problems described in the previous chapters to refine or redefine the current parallel paradigms currently in use. The outcome of this direction could be broader than the particular scientific application, and may provide insight in how to improve the use of parallel computing resources in general. 3.4 Meta Problem Solving Environments In order to take advantage of teraFLOP computing power, biological software design must move towards an efficient geographically distributed software paradigm. One novel paradigm is the "Meta Problem Solving Environment", a software system based on the "plug and play" paradigm. Algorithms are encapsulated into specially designed software modules called components which the user can link together produce a simulation. Software components can exist anywhere on a large computing network and yet can be employed to perform a specified simulation on any geographically distributed parallel computing environment to which the user has access. In order to satisfy this design requirement, software components must run as separate threads, respond to signals of other components and, in general, behave quite unlike a standard subroutine library. Therefore, the software architecture must specify the rules for a component to identify standard communication interfaces as well the rules that govern how a component responds to signals. Also, components must be permitted to specify resources and obtain communication lines. Although the component based "Meta Problem Solving Environment" software paradigm is far more complex than any current software system employed by the biological community today, it has many advantages. By coming together to produce uniform component design protocols, computational biologists could quickly apply the latest algorithmic advances to novel new systems. Also, simulations could be constructed using the best available components. For example, real space force component from one researcher can be combined with a reciprocal space component from another and an integration component from another group, and could significantly increase computational efficiency compared to any single biological computing package. In addition, with good design protocols, all the components employed in a given simulation need not be built by computational biologists. For instance, parallel FFT component software already exists that could be used to improve significantly SPME performance. Finally, the biological community will not be acting alone in adopting a component based approach. The DOE ASCI project is currently using using tools such as CORBA (Common Object Request Broker Architecture), and Los Alamos National Laboratory's PAWS (Parallel Application Workspace) to produce component based software products. Therefore, with appropriate communication within the community and with others, biological computational scientists can begin to produce a new software standard that will function well in large geographically distributed environment and very quickly lead to teraFLOP computing capability. 3.5 Usability Evaluation and Benchmarking In the last five years, there has been major progress in benchmarking massively and highly parallel machines for scientific applications. For example, the NAS Parallel Benchmarks (NPB) were a major step toward assessing the computational performance of early MPPs, and served well to compare MPPs with parallel vector machines. Based on numerical algorithms derived from CFD and aerospace applications, the NPB soon were widely adopted as an intercomparison metric of parallel machine performance. Subsequently the NPB were included in the international benchmarking effort, PARKBENCH (PARallel Kernels and BENCHmarks). In 1996, portable HPF and MPI versions of the NPB were made available. With increasing industry emphasis on parallelism, a number of other organizations, as part of their procurement process, are designing benchmark suites to evaluate the computational performance of highly parallel systems. The Advanced Computational Structural Genomics program will involve both research and development activities and implementation of comprehensive biological modeling simulations. Therefore, its success will depend not only on raw computational performance but also on an effective environment for code development and analysis of model results. These issues suggest that a new benchmarking approach is needed, one that evaluates not only raw computational performance but also system usability. In addition, there should be formal tracking of benchmark results throughout the course of the program to provide an objective measure of progress. To meet these objectives, the Advanced Computational Structural Genomics will support benchmarking and performance evaluation in four areas: Identify or create scalable, computationally intensive benchmarks that reflect the production computing needs of the particular scientific community or common algorithm, and evaluate the scalability and capability of high performance systems. Establish system performance metrics appropriate for scientific modeling. Establish usability metrics for assessing the entire research/ development/ analysis environment. Establish a repository of benchmark results for measuring progress in the initiative and for international comparison. Identify or create scalable, computationally intensive benchmarks that reflect the needs of the scientific community. The purpose of this activity is to develop benchmarks that can be shared by the community to gain insight into the computational performance of machines that will be proposed by vendors in response to the Scientific Simulation Initiative. It is well known that the GFlops performance level achieved on an application is not a reliable measure of scientific throughput. For example, an algorithm may achieve a very high floating point rate on a certain computer architecture, but may be inefficient at actually solving the problem. Therefore, accurate and useful benchmarks must be based on a variety of numerical methods that have proven themselves efficient for various modeling problems. Benchmarks for scientific computing applications in general have traditionally used either a functional approach or a performance-oriented approach. In the functional approach, a benchmark represents a workload well if it performs the function as the workload, e.g., a modeling workload is represented by a subset of computational biology codes. In the performanceoriented approach, a benchmark represents a workload well if it exhibits the same performance characteristics as the workload, e.g., a structural genomics modeling workload would be represented by a set of kernels, code fragments, and a communication and I/O test. The assumption is that the system performance can be predicted from an aggregate model of the performance kernels. We propose that Advanced Computational Structural Genomics use mainly a functional approach and identify or develop a set of benchmark models. The benchmark suite also must include a range of simulation models typically found in the computational biological community. These benchmarks should be developed in the spirit of the NAS Parallel Benchmarks (the CFD applications benchmarks): the models should exhibit all the important floating point, memory access, communication, and I/O characteristics of a realistic model, yet the code should be scalable, portable, compact, and relatively easy to implement. As mentioned above, this is a substantial effort, because many extant benchmark codes for some scientific modeling are not readily portable, or are not currently well suited or even capable of running on more than a few tens of processors. Establish system performance metrics appropriate for scientific modeling. We propose that Advanced Computational Structural Genomics select a few full-scale scientific applications, and intercompare parallel systems using the wall clock seconds per model application. Establish usability metrics and system attributes for assessing the entire research/development/analysis environment. The benchmarks in (1) focus on PE performance alone and do not measure any of the important system features that contribute to the overall utility of a parallel system. These features include code development tools, batch and interactive management capabilities, I/O performance, performance of the memory hierarchy, compiler speed and availability, operating system speed and stability, networking interface performance, mean time between failures or interrupts, etc. Many of these features have been quite weak on current highly parallel computers as compared to vector supercomputers. We propose that the Computational Structural Genomics area develop a new set of utility benchmarks and attributes for highly and massively parallel machines as well as clusters of SMPs. The goal of these benchmarks is to derive a set of metrics that measure the overall utility of machines and systems for a productive scientific modeling environment. Such an environment would emphasize model flexibility, rapid job turnaround, and effective capabilities for analysis and data access. Also, the system attributes will specify a set of desired capabilities necessary to administer and manage the system and its resources. At this time there is no industry standard benchmark which addresses these issues. All scientific disciplines coupled to the SSI can drive the state of the art in performance evaluation by initiating such an effort. Establish a repository of benchmark results for measuring progress in the initiative and for international comparison. Benchmarking codes, metrics, and the detailed benchmark results from the activities in (1), (2), and (3) must be available to the public and the high performance computing community through frequent publication of reports, Web pages, etc., with the goal of influencing the computer industry. The Basic will immediately benefit from using these benchmarks for the next-generation procurement of production machines. will create a central repository of benchmark codes and performance data. It will also publish annual reports about the measured performance gains and accomplishments of the initiative, as well as providing international comparison data. We expect that the rapid development of new high-performance computing technology will continue, with rapidly changing architectures and new software and tools becoming available. Hence a benchmark must be designed to be able to evaluate systems across several hardware generations, diverse architectures, and changing software environments. In return, we believe that a well-designed benchmark can drive the HPC industry and create a better focus in the industry on high-end computing. Acknowledgements A web-based version of this document, and the more comprehensive white paper on computational biology can be found at: http://cbcg.lbl.gov/ssi-csb/ We would like to thank the following individuals who contributed to the intellectual content of this document: 2.1. The First Step Beyond the Genome Project: High-Throughput Genome Assembly, Modeling, and Annotation Phil LaCascio, Oakridge National Laboratory Saira Mian, Lawrence Berkeley National Laboratory Richard Mural, Oakridge National Laboratory Frank Olken, Lawrence Berkeley National Laboratory Jay Snoddy, Oakridge National Laboratory Sylvia Spengler, Lawrence Berkeley National Laboratory David States, Washington University, Institute for Biomedical Computing Ed Uberbacher, Oakridge National Laboratory Manfred Zorn , Lawrence Berkeley National Laboratory 2.2. From Genome Annotation to Protein Folds: Comparative Modeling and Fold Assignment David Eisenberg, University of California, Los Angeles Barry Honig, Columbia University Alan Lapedes, Los Alamos National Laboratory Andrej Sali, Rockefeller University 2.3. Low Resolution Folds to Protein Structures with Biochemical Relevance: Toward Quantitative Structure, Dynamics, and Thermodynamics Charles Brooks, Scripps Research Institute Yong Duan, University of California, San Francisco Teresa Head-Gordon, Lawrence Berkeley National Laboratory Volkhard Helms, University of California, San Diego Peter Kollman, University of California, San Francisco Glenn Martyna, Indiana University Andy McCammon, University of California, San Diego Jeff Skolnick, Scripps Research Institute Doug Tobias, University of California, Irvine 2.4. Biotechnology Advances from Computational Structural Genomics: In Silico Drug Design Ruben Abagyan, NYU, Skirball Institute Jeff Blaney, Metaphorics, Inc. Fred Cohen, University of California, San Francisco Tack Kuntz, University of California, San Francisco 2.5. Linking Structural Genomics to Systems Modeling: Modeling the Cellular Program Adam Arkin, Lawrence Berkeley National Laboratory Denise Wolf, Lawrence Berkeley National Laboratory Peter Karp, Pangea 3.1. Computer Hardware Mike Colvin, Lawrence Livermore National Laboratory 3.2. Information Technologies and Database Management Terry Gaasterland, Argonne National Laboratory Charles Musick, Lawrence Livermore National Laboratory 3.3. Ensuring Scalability on Parallel Architectures. Silvia Crivelli, Lawrence Berkeley National Laboratory Teresa Head-Gordon, Lawrence Berkeley National Laboratory 3.4. Meta Problem Solving Environments Glenn Martyna, Indiana University 3.5. Usability Evaluation and Benchmarking Horst Simon, Lawrence Berkeley National Laboratory References Huang, S. R., Li, B. M.; Gray, M. D.; Oshima, J.; et al. (1998). The Premature Ageing Syndrome Protein, Wrn, Is A 3'->5' Exonuclease. Nature Genetics 20, 114-116. Fischer, D.; Rice, D.; Bowie, J. U.; Eisenberg, D. (1996). Assigning Amino Acid Sequences To 3-Dimensional Protein Folds. Faseb Journal 10, 126-136. Sanchez, R.; Sali, A. (1998). Large-Scale Protein Structure Modeling Of The Saccharomyces Cerevisiae Genome. Proceedings Of The National Academy Of Sciences USA 95, 1359713602. Tobias, D. J.; Tu, K. C.; Klein, M. L. (1997). Atomic-Scale Molecular Dynamics Simulations Of Lipid Membranes. Current Opinion In Colloid & Interface Science 2, 15-26. Arkin, A; Shen, P. D.; Ross, J. (1997). A Test Case Of Correlation Metric Construction Of A Reaction Pathway From Measurements. Science 277, 1275-1279 Gaasterland, T. (1998). Structural Genomics: Bioinformatics In The Driver's Seat. Nature Biotechnology 16, 625-627. Appendix: 1. Glossary Aggregation information: The information obtained from applying (a set of) operators to the initial data. For example, monthly sales or expenses, average length of a phone call, or maximum number of calls during a given time. Amino acid: Any of a class of 20 molecules that are combined to form proteins in living organisms. The sequence of amino acids in a protein and hence protein function are determined by the genetic code. API (application program interface): An API is a collection of methods that are used by external programs to access and interact with a class or library. Their main function is to provide a consistent interface to a library, isolating programs from changes in the library implementation or functionality. Automatic Schema Integration: Determination of identical objects represented in the schemata of different databases by programs using a set of built-in rules, without user intervention. Base pair: A unit of information carried by the DNA molecule. Chemically these are purine and pyrimidine complementary bases connected by weak bonds. Two strands of DNA are held together in a shape of a double helix by the bonds between paired bases. Bioinformatics: Field of study dealing with management of data in biological sciences. Change detection: The process of identifying when a data source has changed and how. The types of changes detected include: new data, modifications to existing data, and schema changes. Chromosome: A self-replicating genetic structure in the cells, containing the cellular DNA that bears in its nucleotide sequence the linear array of genes. Conflicts: When the same concept is represented in different databases, its representation may be semantically and syntactically different. For example, a length may be defined in different units, or contain a different set of attributes. These differences are called conflicts, and must be resolved in order to integrate the data from the different sources. Data cleaning: Removal and/or correction of erroneous data introduced by data entry errors, expired validity of data, or by some other means. Data ingest: Loading of data into the warehouse. Data/schema integration: Merging of data/schema from different sources into a single repository, after resolving incompatibilities among the sources. Data mining: Analysis of raw data to find interesting facts about the data and for purposes of knowledge discovery. Data warehouse: An integrated repository of data from multiple, possibly heterogeneous data sources, presented with consistent and coherent semantics. Warehouses usually contain summary information represented on a centralized storage facility. Distributed database: A set of geographically distributed data connected by a computer network and controlled by a single DBMS running at all the sites. DNA (deoxyribonucleic acid): The molecule that encodes genetic information, a double stranded heteropolymer composed of four types of nucleotides, each containing a different base. See base pair. Federated databases: An integrated repository data from of multiple, possibly heterogeneous, data sources presented with consistent and coherent semantics. They do not usually contain any summary data, and all of the data resides only at the data source (i.e. no local storage). Gene: A fundamental physical and functional unit of heredity. A gene is an ordered sequence of nucleotides located in a particular position on a particular chromosome that encodes a specific functional product (i.e., a protein or RNA molecule). See gene expression. Gene expression: The process by which a gene's coded information is converted into the structures present and operating in the cell. Expressed genes include those that are transcribed into mRNA and then translated into protein and those that are transcribed into RNA molecules only. Genetic code: The sequence of nucleotides, coded in triplets along the mRNA, that determines the sequence of amino acids in protein synthesis. The DNA sequence of a gene can be used to specify the mRNA sequence, and the genetic code can in turn be used to specify the amino acid sequence. Genetic map (linkage map): A map of relative positions of genes or other chromosome markers, determined on the basis of how often they are inherited together. See physical map. Genome: All the genetic material in the chromosomes of a particular organism; its size is generally given as its total number of base pairs. Genetic sequencing: The process of identifying the ordered list of amino or nucleic acids that form a particular gene or protein. Global schema: A schema, or a map of the data content of a data warehouse that integrates the schemata from several source repositories. It is "global", because it is presented to warehouse users as the schema that they can query against to find and relate information from any of the sources, or from the aggregate information in the warehouse. HGP (human genome project): The U.S. Human Genome Project is a 15-year effort coordinated by the U.S. Department of Energy and the National Institutes of Health to identify all the estimated 100,000 genes in human DNA, determine the sequence of the 3 billion chemical bases that make up human DNA, store this information in databases, and develop tools for data analysis. To help achieve these goals, a study of the genetic makeup of several non-human organisms, including the common human gut bacterium Escherichia coli, the fruit fly, and the laboratory mouse is also underway. Homology: Here, a relationship of having evolved from the same ancestral gene. Two nucleic acids are said to be homologous if their nucleotide sequences are identical or closely related. Similarly, two proteins are homologous if their amino acid sequences are related. Homology can also be inferred from structural similarity. Intelligent search: Procedures which use additional knowledge to eliminate a majority of space to be searched in order to retrieve a set of data items which satisfy a given set of properties. JGI (joint genome institute): A massive DOE sponsored project that will integrate human genome research based in three of its national laboratories in California and New Mexico. The Joint Genome Institute is a "virtual laboratory" that will sequence approximately 40 percent of the total human DNA by 2005 and share information through public databases. Knowledge discovery: The process of identifying and understanding hidden information and patterns in raw data which are of interest to the organization and can be used to improve the business procedures. Data mining is a single, but very important, step in this process. Metadata: Description of the data. Metadata is used to explicitly associate information with (and knowledge about) data in a repository. This information may range from the simple (it is always an integer) to arbitrarily complex (it is a floating point value representing the temperature of an object in degrees Celsius, and is accurate to 5 significant digits). Mediated data warehouse architecture: A data warehouse architecture providing access to data from various sources, where not all the data is stored is cached at the warehouse. Mediators or software agents determine the best source of data to satisfy user requests and fetch data from the selected sites in real-time. Micro-theories: A micro-theory is an ontology about a specific domain, that fits within, and for the most part is consistent with, an ontology with a broader scope. For example, structural biology fits within the larger context of biology. Structural biology will have its own terminology and specific algorithms that apply within the specific domain, but may not be useful or identical to, for example, the genome community. mRNA (messenger RNA): RNA that serves as a template for protein synthesis. See genetic code. multi-databases: A repository of data from several, possibly heterogeneous data sources. They do not provide a consistent view of the data contained within the data sources, do not provide summary information, and rarely have a local data store. nucleic acid: A large molecule composed of nucleotide subunits. Nucleotide: A subunit of DNA or RNA chemically consisting of a purine or pyrimidine base, a phosphodiester, and a sugar. object identification: The process of determining data items representing the same real-world concepts in data sources, and merging the information to a single consistent format. This will allow users to obtain accurate and unique answers to information requests. object model: A data model for representation real-world entities using the concept of objects which can belong to one of several classes. The classes have a specialization-generalization relationship and contain both data and methods. Objects themselves can be comprised of other objects. OLAP (on-line analytical processing): Analysis of data along various dimensions (such as time periods, sales areas, and distributors) so as to obtain summary information as well as very specific targeted information to predict business performance based on various parameters. ontology framework: A framework for data management and manipulation based on a descriptions of inter-relationships of the data items in a specific domain. This allows a more targeted search of the data items using a semantic understanding in addition to using the syntactic values. PDB (protein data bank): The Protein Data Bank (PDB) is an archive of experimentally determined three-dimensional structures of biological macromolecules, serving a global community of researchers, educators, and students. The archives contain atomic coordinates, bibliographic citations, primary and secondary structure information, as well as crystallographic structure factors and NMR experimental data. partially materialized views: In a mediated warehouse, a portion of the data represented in the global schema may not be represented in the warehouse, but is only accessible through the mediator. The portion of the data represented locally is said to be materialized. Thus, if there is both local and non-local data represented in the schema, it is said to be partially materialized. physical map: A map of the locations of identifiable markers on DNA, regardless of inheritance. For the human genome, the lowest-resolution physical map is the banding patterns on the 24 different chromosomes; the highest-resolution map would be the complete nucleotide sequence of the chromosomes. See genetic (linkage) map. Protein: A large molecule composed of one or more chains of amino acids in a specific order; the order is determined by the sequence of nucleotides in the gene coding for the protein. Proteins are required for the structure, function, and regulation of the body's cells, tissues, and organs, and each protein has unique functions. protein sequence: The ordered list of amino acids that make up the protein. protein taxonomy: Proteins can be grouped according to several criteria including their sequence or structural similarity with other proteins. These groupings are often referred to as taxonomies. RNA (ribonucleic acid): A molecule chemically similar to DNA, involved in the readout of information stored in DNA. schema (pl. schemata): A description of the data represented within a database. The format of the description varies but includes a table layout for a relational database or an entityrelationship diagram. SCOP: A database of distinct protein structures and their structural classifications, along with detailed information about the close relatives of any particular protein. Sequencing: Determination of the order of nucleotides in a DNA or RNA molecule, or the order of amino acids in a protein molecule. SWISS-PROT: is a protein sequence database with high level of annotations (such as the description of the function of a protein, its domains structure, post-translational modifications, variants, etc.), and a minimal level of redundancy. terabyte: A measurement of the amount of computer storage. Equivalent to 1,000 gigabytes or 1,000,000 megabytes of information. unstructured data: Refers to data whose structure is not well defined or strictly enforced. For example, web pages and flat files are unstructured data while a relational database is structure. http://cbcg.lbl.gov/ssi-csb/Meso.html#anchor584580