Visible Human, Construct Thyself

advertisement

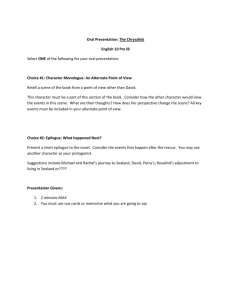

Visible Human, Construct Thyself: The Digital Anatomist Dynamic Scene Generator James F. Brinkley, Evan M. Albright, Sara Kim, Jose L.V. Mejino, Linda G. Shapiro, Cornelius Rosse Structural Informatics Group, Department of Biological Structure, University of Washington, Seattle, WA brinkley@u.washington.edu Availability of the Visible Human dataset has led to many interesting applications and research projects in imaging and graphics, as evidenced by the Visible Human web site and by papers from the previous conference. However, the project has not yet achieved the long-term goal stated in the Visible Human Fact sheet, to “…transparently link the print library of functional-physiological knowledge with the image library of structural-anatomical knowledge into one unified resource of health information.”. We believe that the critical missing pieces necessary to achieve this goal are 1) a comprehensive symbolic knowledge base of anatomical terms and relationships that gives meaning to the images, 2) a fully segmented dataset that is widely available and that associates each voxel or extracted structure with a name from the knowledge base, and 3) methods for combining these resources in intelligent ways. In a companion paper, we propose that the Digital Anatomist Foundational Model (FM) has the potential to become the required symbolic knowledge base[Rosse2000]. We are also aware of many efforts to segment the VH data, although none of these efforts has yet resulted in widely available segmented data. In this paper we assume that the required resources are or will become available. Instead we concentrate on the third critical piece, namely, methods for combining the resources in intelligent ways. We describe the Digital Anatomist Dynamic Scene Generator, a Web-based program that uses the FM to intelligently combine individual 3-D mesh “primitives”, representing parts of organs, into 3-D anatomical scenes. The scenes are rendered on a fast server, the rendered images are then sent to a web browser where the user can change the scene or navigate through it. The Dynamic Scene Generator. The scene generator is composed of several modules from our Digital Anatomist Information System (AIS), a distributed, network-based system for anatomy information [Brinkley1999]. Web Web Browser Browser CGI CGI Script Script FM FM Server Server Graphics Graphics Server Server “Data “Data Server” Server” FM FM 3-D 3-D Primitives Primitives Correspondences Correspondences Figure 1. The Digital Anatomist Dynamic Scene Generator modules. The building blocks of a scene are shown in the bottom row, and consist of terms and relationships from the FM, 3-D mesh primitives, and a list of correspondences between the primitives and names in the FM. These resources are made available by means of the FM Server and the Data Server (which for now is simulated by a function that accesses a flat file). Scenes are generated and rendered by the graphics server, currently running on an Intel Quad Processor. The graphics server accepts commands from perl CGI scripts that implement three different user interfaces: an authoring interface for creating new scenes, a scene manager for saving and retrieving scenes, and a scene explorer for end users. These interfaces generate web forms which capture user commands to add structures to a scene, to save a scene, to rotate a scene, etc. These commands are in turn passed to the graphics server, which performs the action, renders the scene, and returns an image snapshot to the web browser, along with forms allowing further interaction. Screenshots of the three interfaces are shown below: Figure 2. The authoring interface, displays a frame for navigating through the FM, and for selecting structures to add to the scene. It also allows queries of the FM so that entire subtrees (e.g. all parts of the Descending thoracic aorta) can be added, highlighted or removed. Figure 3. The scene manager interface allows the author to create scene groups, consisting of initial scenes and “add-ons”, which are subscenes that can be added to an initial scene in the end-user interface. Once a scene group is created, it is immediately available to the scene explorer. Figure 4. The end user scene explorer presents a list of structures in the scene that can be selected, then removed or highlighted. It also shows the available add-ons as small icons that can be added by the user. As on the other interfaces, camera controls allow the scene to be rotated or zoomed. Plans and Discussion. We are currently performing a preliminary evaluation of this system, to see how the scene generator can be used as an educational tool for anatomy teachers and students. Feedback from this and other evaluations will help us combine 3-D scenes with 2-D annotated images to create a distance learning module in anatomy. In the longer term, the resulting information system, when filled out with segmented models from the entire Visible Human, has the potential for many other Web-based applications, including structure-based visual access to non-image based biomedical information, thereby bringing us a step closer to the long term goals of the Visible Human Project. Acknowledgements. This work was supported by NLM Grants LM06316 and LM06822. References [Rosse2000] Rosse, C., Mejino, J. L., Shapiro, L. G. and Brinkley, J. F., "Visible Human, Know Thyself: The Digital Anatomist Structural Abstraction," in Visible Human Project Conference 2000. Bethesda, Maryland: National Library of Medicine, 2000 Submitted. [Brinkley1999] Brinkley, J. F., Wong, B. A., Hinshaw, K. P. and Rosse, C., "Design of an anatomy information system," Computer Graphics and Applications, vol. 19, pp. 38-48, 1999.