PAC Learning Theory - Computer Science @ UC Davis

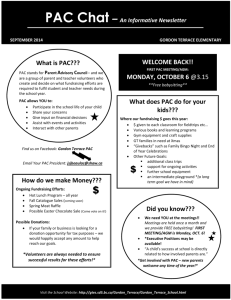

advertisement

Brief Comments on PAC Learning Theory There are two important aspects of complexity in machine learning. First, there is the issue of sample complexity: in many learning problems, training data is expensive and we should hope not to need too much of it. Secondly, there is computational complexity: A neural network, for example, which takes an hour to train may be of no practical use in complex financial prediction problems. It is important that both the amount of training data required for a prescribed level of performance and the running time of the learning algorithm in learning from this data do not increase too dramatically as the `difficulty' of the learning problem increases. Such issues have been formalised and investigated over the past decade within the field of `computational learning theory'. In the class, I tried to describe one popular framework for discussing such problems. This is the probabilistic framework which has become known as the `probably approximately correct', or PAC, model of learning. Of course, there are many other mathematical frameworks in which to discuss learning and generalization and I make no claim that the framework discussed in the class is superior to others discussed elsewhere. We do not have time to survey the whole area of the PAC model and its important variants. I have placed emphasis on those topics that are discussed in your text book and those that I find to be of most interest and, consequently, I mentioned sample complexity and not computational complexity. There are now a number of books dealing with probabilistic models of learning: the interested reader might consult the following books. L. Devroye, L. Gyorfi and G. Lugosi. A Probabilistic Theory of Pattern Recognition. Springer-Verlag, New York, 1996. B. K. Natarajan. Machine Learning: A Theoretical Approach. Morgan Kaufmann, San Mateo, California, 1991. M.J. Kearns and U. Vazirani (1995). Introduction to Computational Learning Theory, MIT Press 1995. V. N. Vapnik. The Nature of Statistical Learning Theory. Springer, New York, 1995. M. Vidyasagar, A Theory of Learning and Generalization: With Applications to Neural Networks and Control Systems, Springer, London, 1997. The basic PAC model as it was discussed in the class is applicable for classification problems. The second part concerns extensions of the basic PAC model, such as those relevant to neural networks with real-valued outputs. The PAC theory is useful for a number of non-neural learning problems, such as the inference of boolean functions. Therefore, while aiming to keep the applications pertinent to neural networks, I have tried also to retain the generality of the theory. What we will do is to finish this topic soon and move on to a couple of other methods so you are equipped with enough ammunition to work on your term papers. If there is time, I plan to revisit this topic toward the end of the quarter and spend some time on theoretical issues. Unless I receive requests otherwise, I plan to move on to NN, Bayesian nets and genetic algorithms in that order.