Homework3

COSC 6335

“Data Mining”

Homework3 Fall 2008

Dr. Eick

Due: Thursday, December 4 in class (only problems 8 and 11)

7) Clustering [8] Ungraded!!

a) DBSCAN and DENCLUE both compute outliers. How do they define outliers? What are the main differences in their procedures to determine outliers? [3]

b) What role does the parameter σ play in DENCLUE’s density estimation approach? If we choose a very small value for σ, what is the impact on the density function? [2]

c) How is subspace clustering different from traditional clustering? [3]

8) Ensemble Methods [7]

a) What is the key idea of ensemble methods? Why have they been successfully used in many applications to obtain classifiers with high accuracies? [2]

b) In which case does AdaBoost increase the weight of an example? Why does the AdaBoost algorithm modify the weights of examples? [3]

c) What role does the parameter αᵢ play in the AdaBoost algorithm? [2]

9) Preprocessing [6] Ungraded!!

a) Assume you have to do feature selection for a classification task. What are the characteristics of features (attributes) you might remove from the dataset prior to learning the classification algorithm? [3]

b) Give an example of a problem that might benefit from feature creation. [3]

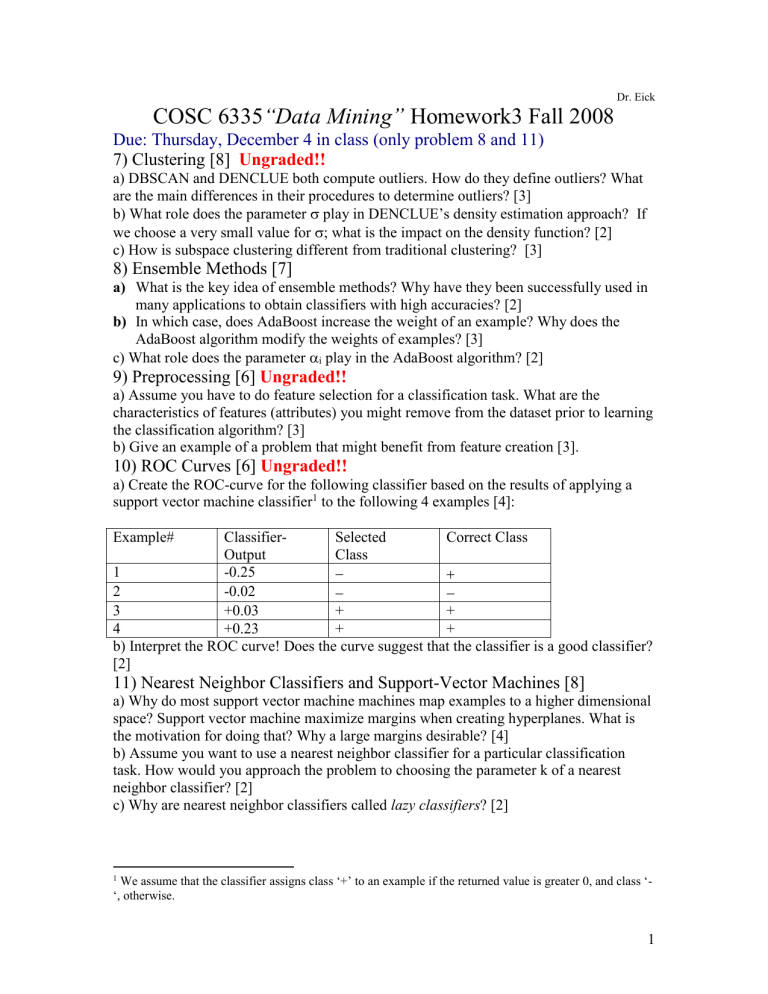

10) ROC Curves [6] Ungraded!!

a) Create the ROC curve for the following classifier based on the results of applying a support vector machine classifier¹ to the following 4 examples [4]:

| Example# | Correct Class | Classifier Output | Selected Class |
|----------|---------------|-------------------|----------------|
| 1        |               | -0.25             | +              |
| 2        |               | -0.02             | +              |
| 3        |               | +0.03             | +              |
| 4        |               | +0.23             | +              |

b) Interpret the ROC curve! Does the curve suggest that the classifier is a good classifier? [2]
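As a reminder of the mechanics, an ROC curve can be traced by sorting examples by decreasing classifier output and lowering the decision threshold one example at a time. The sketch below is only an illustration: the function names `roc_points` and `auc` are made up here, the `labels` list is a hypothetical placeholder and not the correct classes for this problem, and distinct scores (no ties) are assumed.

```python
def roc_points(scores, labels):
    """Return (false positive rate, true positive rate) points of the ROC curve.

    Assumes labels are '+' or '-' and that all scores are distinct.
    """
    pos = sum(1 for y in labels if y == "+")
    neg = len(labels) - pos
    if pos == 0 or neg == 0:
        raise ValueError("need at least one '+' and one '-' example")

    # Rank examples from highest to lowest classifier output.
    ranked = sorted(zip(scores, labels), key=lambda p: p[0], reverse=True)

    points = [(0.0, 0.0)]  # threshold above every score: nothing predicted '+'
    tp = fp = 0
    for _, label in ranked:
        # Lowering the threshold past this example predicts it as '+'.
        if label == "+":
            tp += 1
        else:
            fp += 1
        points.append((fp / neg, tp / pos))
    return points


def auc(points):
    """Area under the piecewise-linear ROC curve (trapezoidal rule)."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


scores = [-0.25, -0.02, 0.03, 0.23]   # classifier outputs from the problem
labels = ["-", "+", "-", "+"]         # HYPOTHETICAL labels, for illustration only
points = roc_points(scores, labels)
print(points)
print(auc(points))
```

A curve that hugs the diagonal from (0, 0) to (1, 1) corresponds to random guessing; the closer the curve gets to the top-left corner, the better the ranking produced by the classifier.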

11) Nearest Neighbor Classifiers and Support-Vector Machines [8]

a) Why do most support vector machines map examples to a higher dimensional space? Support vector machines maximize margins when creating hyperplanes. What is the motivation for doing that? Why are large margins desirable? [4]

b) Assume you want to use a nearest neighbor classifier for a particular classification task. How would you approach the problem of choosing the parameter k of a nearest neighbor classifier? [2]

c) Why are nearest neighbor classifiers called lazy classifiers? [2]

¹ We assume that the classifier assigns class ‘+’ to an example if the returned value is greater than 0, and class ‘-’ otherwise.
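The decision rule in this footnote can be written as a one-line threshold test. The sketch below applies it to the four classifier outputs listed in problem 10; the function name `selected_class` and the `threshold` parameter are illustrative names, not part of the assignment.

```python
def selected_class(output, threshold=0.0):
    """Assign '+' if the classifier output is greater than the threshold, else '-'."""
    return "+" if output > threshold else "-"


outputs = [-0.25, -0.02, 0.03, 0.23]  # classifier outputs from problem 10
selected = [selected_class(v) for v in outputs]
print(selected)  # ['-', '-', '+', '+']
```

Moving `threshold` away from 0 changes which examples are assigned ‘+’, which is the knob that an ROC curve sweeps over.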

