Improving the Integrity of User-Developed Applications

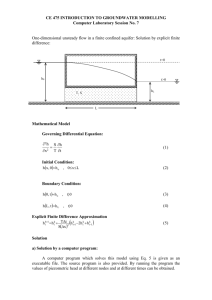

ABSTRACT: In the past two decades, the number of user-developed applications in accounting has grown exponentially. Unfortunately, prior research indicates that many applications contain significant errors. This work presents a framework identifying factors, such as task characteristics and contingency issues, that may improve the integrity of user-developed applications. One goal of accounting information systems research is to explore the implications of technology for professional accounting and business practice. This study examines how to improve the integrity of user-developed applications, a common but often overlooked technology in professional accounting and business practices.

Key Words: user-developed computing applications, systems integrity, error detection, spreadsheet errors, database errors.

Data Availability: Contact the first author.

I. INTRODUCTION

‘Technology is a pervasive and growing component of accounting tasks and has been shown to change work processes’ (Mauldin and Ruchala 1999, 328). This paper examines how to improve the integrity (or accuracy) of a common but often overlooked technology, user-developed applications, defined as ‘software applications developed by individuals who are not trained information systems professionals’ (Kreie et al. 2000, 143). Today, managers in both small and large organizations rely on output (e.g. financial reports, tax schedules, managerial reports, and audit workpapers) from accountant-developed computer applications such as spreadsheets and databases to make major investment, resource allocation, performance evaluation, product pricing, and audit opinion judgments (AICPA 1994; Arens et al. 2003; Goff 2004; Horowitz 2004; Lanza 2004).
In large organizations, internal and external decision makers make performance and investment decisions based upon information downloaded from enterprise resource planning systems and modified with spreadsheet technology (Worthen 2003; Horowitz 2004). In small and large organizations alike, management often relies on financial information stored in accountant-developed databases to support investment, performance, and product pricing decisions (Hall 1996; Klein 1997; Goff 2004). User-developed applications improve work processes within organizations by providing decision makers with timely, custom-formatted information (Klein et al. 1997; McGill et al. 2003). However, the trust placed in information generated by user-developed applications may not always be warranted (Ross 1996; Galletta et al. 1996-97; Klein 1997; Panko 1998).

Spreadsheets and databases often contain unintentional errors. A spreadsheet error can be propagated repeatedly through cutting, copying, and pasting, or by clicking, dragging, and dropping, and a database error can be queried and copied just as quickly. Spreadsheet and database errors are not rare. Previous research examining operational spreadsheets, as well as those developed in laboratory experiments, indicates that from 20 percent to 80 percent of all spreadsheets contain errors (Hall 1996; Panko 1998, 2000; Kruck and Sheetz 2001; Morrison et al. 2002; PricewaterhouseCoopers 2004). Research examining operational databases finds that up to 30 percent of user-developed databases contain errors (Fox et al. 1994; Klein 1998).

Although the existence of errors in user-developed applications is no secret, organizations have not developed policies and procedures for application development with the same rigor and discipline used for other software development (Panko 1998; Teo and Tan 1999). In the short term, therefore, individual developers are responsible for application integrity, and they are challenged to find ways to increase the integrity of their work.
In this paper, we refer to integrity as accuracy, or the degree to which applications are error-free (AICPA 2003). We draw upon Mauldin and Ruchala's (1999) meta-theory of accounting information systems (AIS) to identify factors that may improve the integrity of user-developed applications. This work is important because it explores the integrity of user-developed applications, a common but often overlooked technology. Our study extends prior user-developed application research by examining (1) how task characteristics influence accountants' decisions to create their own applications and (2) how these task characteristics interact with contingency factors (i.e. cognitive, technological, and organizational) to affect the integrity of user-developed applications and, ultimately, task performance. We integrate research on two familiar applications, spreadsheets and databases. The modified framework applies both to these familiar applications and to newer, growing applications such as (1) managerial accounting information displays using intranet technology and (2) financial information graphs for internal or external reporting (Arunachalam et al. 2002; Powell and Moore 2002; Downey 2004). In addition, our work contributes to accounting information systems research by providing evidence that Mauldin and Ruchala's meta-theory is extendable to one specific type of accounting information system, namely user-developed applications.

The framework is important for organizations wanting to increase the quality of the financial information they use to monitor performance, attract investment capital, and meet regulatory reporting requirements. As user-developed application usage grows in environments with little developer control and/or training, the likelihood of relying on and reporting financial information that could harm firm reputation and long-term viability increases significantly.

This paper proceeds as follows. Section II discusses current user-developed application usage and error concerns, Section III develops the research framework, and Section IV provides a discussion and summary.

II. USER-DEVELOPED APPLICATION USAGE AND ERRORS

Accountants have steadily increased their use of user-developed applications to support business decisions. A ten-year study of spreadsheet use by accountants in New Zealand reveals that, by 1996, three-quarters of accountants used spreadsheets, compared to about one-third in 1986 (Coy and Buchanan 1997). Even with the widespread growth of enterprise resource planning systems, most accountants still rely on spreadsheets for planning and budgeting decisions (Worthen 2003). Similarly, the number of accountants using databases has grown rapidly since 1995 (Klein 2001). Users often cite timeliness and accuracy as the two dominant benefits of developing their own computer applications (Hall 1996). Unfortunately, the perception of user-developed application accuracy does not match reality.

The prevalence of errors in operational applications is cause for concern because the developer as well as other individuals (i.e. non-developer users) may rely on the information presented in the application. This reliance can lead to embarrassing, or even disastrous, situations if the errors go undetected and decisions are made based on inaccurate information. Given that even one error can subvert all the controls in the systems feeding into an application, the risk of inappropriate business decisions based upon user-developed application output is significant (Klein 1997; Panko 1998; Butler 2001).

Reliance on erroneous information has led to flawed decisions in a number of documented situations. For example, an employee of a mutual fund management firm failed to put a minus sign in a $1.2 billion entry. As a result, shareholders invested in this fund expecting a capital gains distribution based on a calculated $2.3 billion gain.
When the error was corrected, resulting in a $0.1 billion loss, no distributions were made (Savitz 1994; Godfrey 1995). More recently, a cut-and-paste error cost a utility firm $24 million when it used information from spreadsheets containing mismatched bids to invest in power transmission hedging contracts (Cullen 2003; French 2003). The firm recorded a $0.08 per share loss due to the error; its prior period earnings per share were $0.13. Finally, a construction firm in Florida underbid a project by a quarter of a million dollars when costs added to a spreadsheet were not included in a range sum (Gilman and Bulkeley 1986; Ditlea 1987; Hayen and Peters 1989).

The errors made by the mutual fund management, utility, and construction firms are not isolated incidents. Errors have been documented in (1) spreadsheets prepared for a variety of financial decision-making purposes, including sales forecasts, market projections, purchases, tax estimates, and investment evaluation (Business Week 1984; Ditlea 1987; Dhebar 1993; Godfrey 1995; Horowitz 2004), (2) databases used for recording sales and inventory (Klein 1997), and (3) database queries created by both developers and non-developer users (Borthick et al. 2001a, 2001b; Bowen and Rohde 2002).

The lack of formal training in developing applications may contribute to the number of errors. Only 40 percent of spreadsheet developers have any formal training (Hall 1996). Hall (1996) finds that independent learning and learning from coworkers are the two predominant methods for acquiring spreadsheet skills. Similarly, Klein (1997) indicates that many database developers acquire and improve their skills through discussion with colleagues.

In many organizations, application development is not controlled to the same extent as programming. Hall (1996) finds that only 10 percent of developers are aware of design controls for user-developed applications. Among those aware of a policy on application development, only a third have a copy of the policy. In addition, these policies are often difficult to enforce (Galletta and Hufnagel 1992; Hall 1996; Guimaraes et al. 1999).

III. RESEARCH FRAMEWORK

We present a research framework to identify factors that contribute to the integrity of user-developed applications. Our framework is based upon the meta-theory of AIS developed by Mauldin and Ruchala (1999). The original meta-theory framework suggests that (1) AIS research should be task-based, (2) task characteristics establish the system design characteristics needed, (3) the effects of AIS on task performance must be examined within the context of contingency factors, and (4) the outcome of AIS is task performance (Mauldin and Ruchala 1999).

Following Mauldin and Ruchala (1999), the framework for user-developed applications, shown in Figure 1, has a task focus. Accountants develop their own computer applications to meet the needs of both internal and external decision makers (Teo and Lee-Partridge 2001). Task characteristics and contingency factors drawn from the cognitive psychology, computer science, and organizational behavior literatures affect the integrity of these applications. Decision makers use output from these applications to complete tasks effectively and efficiently. For example, construction firms use information summarized by spreadsheets to estimate project costs, and mutual fund investors use capital gains distribution information calculated by spreadsheets to make investment decisions (Savitz 1994; Godfrey 1995). Unreliable user-developed applications negatively affect task performance. To illustrate, consider the cut-and-paste error in the utility firm's spreadsheet discussed earlier. Due to this one error, the firm made a poor investment decision resulting in a $24 million loss (Cullen 2003; French 2003).

<< Insert Figure 1 here >>

We make two modifications to Mauldin and Ruchala's (1999) framework.
First, because we are interested in identifying factors that improve the integrity of user-developed applications (i.e. realized implementations of technology), we examine the relationship between applications and task performance at the instantiation level. Second, to highlight the impact of application integrity on task performance, our framework adds application integrity as an intermediary link to the task performance construct. We suggest that task characteristics and contingency factors affect application integrity, which in turn influences task performance.

Three factors drove our decision to modify Mauldin and Ruchala's (1999) framework rather than rely on an existing user-developed application framework. First, prior frameworks target specific user-developed applications. For instance, the frameworks developed by Galletta et al. (1996-97), Janvrin and Morrison (2000), and Kruck and Sheetz (2001) examine spreadsheet errors, while Klein's (2001) model identifies factors affecting database errors. Second, most prior frameworks were developed by examining existing research rather than by considering the broader contingency factors (i.e. cognitive, technological, and organizational factors) involved in human problem solving and decision making (Galletta et al. 1996-97; Harris 2000; Janvrin and Morrison 2000; Kruck and Sheetz 2001). Finally, our work provides researchers with evidence that Mauldin and Ruchala's meta-theory is extendable to specific accounting information systems applications.

Next, based upon prior research, we describe the task characteristics and contingency factors that influence the integrity of user-developed applications.

Task Characteristics

Task characteristics include mental processes, complexity, task demands, and task frequency.
Accountants use user-developed application output to perform several mental processes, such as information search, knowledge retrieval, hypothesis generation and evaluation, problem-solving design, estimation or prediction, and choice. For example, accountants at the Florida construction firm discussed earlier used output from spreadsheets to perform project cost estimation tasks (Gilman and Bulkeley 1986; Ditlea 1987; Hayen and Peters 1989). Actuaries use information from user-developed databases as input when calculating reserves (Klein 1997). Prior information systems research proposes that matching the mental processes and the information format (see the technological factors discussion below) may improve task performance (Vessey 1991). Whether these results hold for user-developed applications is an empirical question.

The complexity of tasks based upon user-developed application output varies greatly, from simple purchasing decisions to pricing complex hedging contracts (Hall 1996; Cullen 2003; French 2003). Research indicates that task complexity affects application integrity. For example, Borthick et al. (2001a) find that ambiguity in query directions adversely affects the accuracy and efficiency of database queries. Although even spreadsheets and databases used to perform fairly simple tasks are known to contain errors, the opportunity for human error grows as task complexity increases (PricewaterhouseCoopers 2004).

Task demands fall on a continuum with physical labor at one end and symbolic, abstract knowledge at the other (Stone and Gueutal 1985). User-developed applications assist individuals performing tasks across the entire continuum. To illustrate, consider the construction firm discussed earlier: its spreadsheet replaced a manual bid calculation task. At the other end, the utility firm depended upon several spreadsheets when quantifying hedging risks, a highly abstract task. The types of errors found in user-developed applications may vary by task demands. For example, specification errors (i.e. failures of applications to reflect requirements specified by users) are more likely to occur when applications are used to assist individuals in performing abstract knowledge tasks (Chan et al. 1993; Pryor 2003).

Task frequency refers to whether the task will be repeated and, if so, how many times. Task importance (i.e. mission-critical vs. non-mission-critical) can interact with task frequency. To illustrate, some spreadsheets and databases support small-dollar, one-time decisions, while others are used daily by accountants to support critical financial decisions. User-developed applications that facilitate critical business decisions should be designed with the same level of quality assurance as other computer applications (Klein 1997; Teo and Tan 1999).

Cognitive Factors

Cognitive factors, including domain knowledge and application experience, are important characteristics to consider when investigating the integrity of user-developed applications. Domain knowledge, the amount of knowledge the developer possesses about the subject of the application, varies. For a capital budgeting spreadsheet, an accountant would be expected to have more domain knowledge than a journalist. Galletta et al. (1996-97) describe errors that result from the misunderstanding or misapplication of the spreadsheet content area as domain errors. Panko and Halverson (1997) find that accounting and finance majors make fewer spreadsheet errors than other business majors.

Applications may be developed by individuals at all levels of an organization, such as clerical employees, managers, and executives (Chan and Storey 1996; Edberg and Bowman 1996; Hall 1996). Developers' experience creating applications varies from novice to expert. Janvrin and Morrison (2000) find that application experience reduces spreadsheet error rates.
However, application experience does not always improve integrity. Hall (1996) suggests that without training, even experienced developers may create unreliable applications. Additional research examining the impact of application experience on integrity is needed.

Technological Factors

Technological factors refer to the tools and techniques that have been developed specifically to acquire and process information in support of human purposes (March and Smith 1995). We discuss two technological factors: application-based aids and information presentation.

Many application-based aids designed to improve spreadsheet outcomes are currently available. Examples include both data specification and validation functions within popular software packages (e.g. cell labels and data validation functions in Microsoft Excel) and independent spreadsheet audit packages (e.g. Spreadsheet Professional, Spreadsheet Detective, Spreadsheet x1Navigator 2000, Cambridge Spreadsheet Analyst). Whether these add-ons improve application integrity remains an empirical question.

Information presentation is concerned with format issues. Galletta et al. (1996-97) examine whether reviewing spreadsheet output on paper rather than on screen affects error detection and find that the paper format improves error detection. More recently, several researchers have examined the impact of database normalization on query tasks (Borthick et al. 2001b; Bowen and Rohde 2002). Interestingly, they find that higher normalization does not improve database query performance. They conjecture that because higher normalization increases data fragmentation (i.e. a greater number of tables with fewer attributes in each table), query complexity increases as more joins and subqueries are required to extract the needed information (Borthick et al. 2001b; Bowen and Rohde 2002).

Organizational Factors

We discuss four organizational factors.
The first three, application planning and design, review and testing procedures, and developer time constraints, were proposed by Mauldin and Ruchala (1999). The fourth, the presence and enforcement of a user-developed application policy, was identified by examining the user-developed application literature (Cheney et al. 1986; Hall 1996; Panko 1998; Guimaraes et al. 1999).

Planning and design refers to methodologies that improve the layout of the user-developed application. Janvrin and Morrison (2000) show that using a structured design approach rather than an ad hoc approach reduces spreadsheet error rates. Ronen, Palley, and Lucas (1989) similarly suggest that implementing a structured approach to spreadsheet design will improve spreadsheet integrity.

Review and testing procedures refer to activities designed to detect and correct user-developed application errors (Kruck and Sheetz 2001). Several researchers stress the importance of developing procedures to test applications with known values during the development process (Panko 1999; Kruck and Sheetz 2001). Edge and Wilson (1990) suggest that review and testing procedures performed by a third party (i.e. someone other than the application developer) are more effective. Encouragingly, several researchers have recently devoted significant effort to developing new review and testing procedures (Klein 2001; Pierce 2004). The effectiveness of these procedures in improving the integrity of user-developed applications remains an empirical question.

Time constraints during the development process affect the integrity of user-developed applications (Ballou and Pazer 1995; Forster et al. 2003). Hall (1996) reports that 18 percent of developers feel rushed to complete their spreadsheets, which may contribute to more errors. Time pressure may also limit the effort devoted to testing spreadsheets for errors (Galletta et al. 1996-97).
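A minimal sketch may make the idea of testing with known values more concrete. The Python below is a hypothetical illustration, not a procedure taken from Panko (1999) or Kruck and Sheetz (2001): it assumes that a worksheet column can be modeled as a plain list of costs, uses invented function names (range_sum, bid_total_fixed_range, bid_total_whole_column, check_with_known_values), and recreates the construction-bid failure described in Section II, in which costs added to a spreadsheet were not included in a range sum.

```python
"""Hypothetical sketch: testing a spreadsheet-style total against known values.

A worksheet column is modeled as a list of floats. None of these names come
from a real spreadsheet product or from the studies cited in the text.
"""
from collections.abc import Callable


def range_sum(cells: list[float], first: int, last: int) -> float:
    """Mimic a fixed range such as =SUM(B1:B4): rows first..last, 1-based, inclusive."""
    return sum(cells[first - 1:last])


def bid_total_fixed_range(costs: list[float]) -> float:
    # Assumed flaw: the range was written when the sheet had 4 cost rows and
    # was never updated, so rows appended later fall outside the sum.
    return range_sum(costs, 1, 4)


def bid_total_whole_column(costs: list[float]) -> float:
    # Assumed alternative design: always sum every populated cost row.
    return sum(costs)


def check_with_known_values(total_fn: Callable[[list[float]], float]) -> list[str]:
    """Run a total function on inputs whose correct answers are known in advance."""
    cases = [
        ([100.0, 200.0, 300.0, 400.0], 1000.0),         # original four-row layout
        ([100.0, 200.0, 300.0, 400.0, 250.0], 1250.0),  # one cost row appended later
    ]
    failures = []
    for costs, expected in cases:
        actual = total_fn(costs)
        if abs(actual - expected) > 1e-9:
            failures.append(f"{len(costs)} rows: expected {expected}, got {actual}")
    return failures


if __name__ == "__main__":
    print("fixed range:", check_with_known_values(bid_total_fixed_range))
    print("whole column:", check_with_known_values(bid_total_whole_column))
```

With these assumed inputs, the fixed-range total passes the case that matches its original four-row layout and fails only the case with an appended row, while the whole-column total passes both. Because the known-value cases must be rerun every time the sheet changes, the sketch also suggests why time pressure that curtails testing can leave this kind of omission undetected.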
Finally, several firms have implemented application development and usage policies to reduce the risks associated with user-developed applications (Rockart and Flannery 1983; Cheney et al. 1986; Hall 1996; Guimaraes et al. 1999). The effectiveness of user-developed application policies remains an empirical question, particularly since, as noted earlier, these policies are often difficult to enforce (Galletta and Hufnagel 1992; Hall 1996; Guimaraes et al. 1999).

IV. DISCUSSION AND CONCLUSION

One goal of AIS research is to explore the implications of technology for professional accounting and business practice (Stone 2002, 3; Sutton and Arnold 2002, 8). Our research framework examines how to improve the integrity of user-developed applications, a significant but often overlooked technology. Based upon Mauldin and Ruchala's (1999) meta-theory of AIS, the framework predicts that task characteristics and contingency factors (i.e. cognitive, technological, and organizational) will affect the integrity of user-developed applications. In turn, application integrity influences task performance.

This study extends prior user-developed application work by integrating (1) contingency factors from cognitive psychology, computer science, and organizational management and (2) research investigating errors in two common user-developed applications, spreadsheets and databases, into a comprehensive research framework. The modified Mauldin and Ruchala framework can be used to improve the integrity of both familiar user-developed applications (i.e. spreadsheets and databases) and newer, rapidly growing applications (e.g. managerial accounting information displays via intranet technology and financial information graphs).

Identifying factors that increase the integrity of user-developed applications is important. Many accountants and managers rely on output from these applications to make major business decisions (Kruck and Sheetz 2001; Cullen 2003; French 2003; Horowitz 2004; Debreceny and Bowen 2005). Without quality application output, task performance suffers.

Future Research Opportunities and Conclusions

To illustrate our research framework, we present a study examining one technological factor. The Mauldin and Ruchala meta-theory suggests that task characteristics and contingency factors may interact to affect task performance (1999, 319). Future research could identify user-developed application task characteristics and contingency factors that may interact and examine whether these interactions improve the integrity of these applications.

The research framework was developed by synthesizing research on the integrity of two common user-developed applications, spreadsheets and databases. Business leaders now also rely on output from other user-developed applications, including graphical presentations and web pages (Arunachalam et al. 2002; Powell and Moore 2002; Downey 2004). As noted earlier, we believe the modified Mauldin and Ruchala framework can be used to examine the integrity of these newer applications. Further research could test this conjecture.

Our study examines how to improve the integrity of user-developed applications. Information systems researchers and practitioners may argue that reliable systems have other characteristics, such as availability, security, and maintainability (McPhie 2000; Boritz and Hunton 2002; AICPA 2003). Future work could examine factors that influence these characteristics of user-developed applications.

Most user-developed application research implicitly assumes that detected errors are representative of the population of actual errors. However, errors are often difficult to detect (Attwood 1984; Reason 1990; Hendry and Green 1994; Sellen 1994).
Studies in auditing and information systems provide evidence that detected errors may not represent the actual error characteristics of the population, since subjects often cannot find all seeded errors (Caster 1990; Galletta et al. 1993; Galletta et al. 1996-97; Caster et al. 2000; Engel and Hunton 2001). Exploring how various review procedures and decision aids affect the integrity of user-developed applications may help determine whether detected errors are representative of the population of actual errors generated by user-developed applications.

Auditors often examine evidence generated by user-developed applications, and many electronic audit workpapers include user-developed applications. The growing literature exploring the audit review process (e.g. Rich et al. 1997; Sprinkle and Tubbs 1998; Bamber and Ramsey 2000; Brazel et al. 2004) may benefit from understanding whether workpaper preparers and reviewers adjust their evidence evaluation process to reflect the risks of relying on user-developed application output.

There are many benefits to user-developed applications. However, significant research and education need to be conducted before we can be assured that the accounting profession and business practice are aware of the outcomes (both positive and negative) associated with user-developed applications.

References

American Institute of Certified Public Accountants (AICPA). 1994. Auditing With Computers. New York, NY: AICPA.
_______. 2003. Suitable Trust Services Criteria and Illustrations. Available at: http://www.aicpa.org/download/trust_services/final-Trust-Services.pdf.
Arens, A.A., R.J. Elder, and M.S. Beasley. 2003. Auditing and Assurance Services: An Integrated Approach. 9th Edition. Upper Saddle River, NJ: Prentice Hall.
Arunachalam, V., B.K.W. Pei, and P.J. Steinbart. 2002. Impression management with graphs: Effects on choices. Journal of Information Systems 16 (Fall): 183-202.
Attwood, C.M. 1984. Error detection processes in statistical problem solving. Cognitive Science 8: 413-437.
Ballou, D.P., and H.L. Pazer. 1995. Designing information systems to optimize the accuracy-timeliness tradeoff. Information Systems Research 6 (1): 51-72.
Bamber, E.M., and R.J. Ramsey. 2000. The effects of specialization in audit workpaper review on review efficiency and reviewers' confidence. Auditing: A Journal of Practice & Theory 19 (Fall): 147-158.
Boritz, J.E., and J.E. Hunton. 2002. Investigating the impact of auditor-provided systems reliability assurance on potential service recipients. Journal of Information Systems 15 (Supplement): 69-88.
Borthick, A.F., P.L. Bowen, D.R. Jones, and M.H.K. Tse. 2001a. The effects of information request ambiguity and construct incongruence on query development. Decision Support Systems 32 (November): 3-25.
_______, M.R. Liu, P.L. Bowen, and F.H. Rohde. 2001b. The effects of normalization on the ability of business end-users to detect data anomalies: An experimental evaluation. Journal of Research and Practice in Information Technology 33 (August): 239-262.
Bowen, P.L., and F.H. Rohde. 2002. Further evidence on the effects of normalization on end-user query errors: An experimental evaluation. International Journal of Accounting Information Systems 3 (December): 255-290.
Brazel, J.F., C.P. Agoglia, and R.C. Hatfield. 2004. Electronic vs. face-to-face review: The effects of alternative forms of review on audit preparer performance and accountability perceptions. The Accounting Review (October): 949-966.
Business Week. 1984. How personal computers can trip up executives. September 24: 94-102.
Butler, R. 2001. The role of spreadsheets in the Allied Irish Banks / All First currency trading fraud. Proceedings of EuSpRIG 2001. http://www.gre.ac.uk/~cd02/eusprig/2001/AIB_Spreadsheets.htm.
Caster, P. 1990. An empirical study of accounts receivable confirmations as audit evidence. Auditing: A Journal of Practice & Theory 9 (Fall): 75-91.
_______, D. Massey, and A. Wright. 2000. Research on the nature, characteristics, and causes of accounting errors: The need for multi-method approach. Journal of Accounting Literature 19: 60-92.
Chan, H.C., K.K. Wei, and K.L. Siau. 1993. User-database interface: The effect of abstraction levels on query performance. MIS Quarterly 17 (4) (December): 441-464.
Chan, Y.E., and V.C. Storey. 1996. The use of spreadsheets in organizations: Determinants and consequences. Information & Management 31 (December): 119-134.
Cheney, P., R. Mann, and D. Amoroso. 1986. Organizational factors affecting the success of end-user computing. Journal of Management Information Systems 3 (1): 65-80.
Coy, D.V., and J.T. Buchanan. 1997. Spreadsheet use by accountants in New Zealand, 1986-96. Working paper.
Cullen, D. 2003. Excel snafu costs firm $24 m. The Register, June 19. http://thereigister.com/content/67/31298.html.
Debreceny, R.S., and P.L. Bowen. 2005. The effects on end-user query performance of incorporating object-oriented abstractions in database accounting systems. Journal of Information Systems 19 (Spring): 43-74.
Dhebar, A. 1993. Managing the quality of quantitative analysis. Sloan Management Review 34 (Winter): 69-75.
Ditlea, S. 1987. Spreadsheets can be hazardous to your health. Personal Computing 11 (January): 60-69.
Downey, J.P. 2004. Toward a comprehensive framework: EUC research issues and trends (1990-2000). Journal of Organizational and End User Computing 16 (4): 1-16.
Edberg, D.T., and B.J. Bowman. 1996. User-developed applications: An empirical study of application quality and developer productivity. Journal of Management Information Systems 12 (Summer): 167-185.
Edge, W.R., and E.J.G. Wilson. 1990. Avoiding the hazards of microcomputer spreadsheets. Internal Auditor (April): 35-39.
Engel, T.J., and J.E. Hunton. 2001. The effects of small monetary incentives on response quality and rates in the positive confirmation of account receivable balances. Auditing: A Journal of Practice & Theory 20 (March): 157-168.
Forster, J., E.T. Higgins, and A.T. Bianco. 2003. Speed/accuracy decisions in task performance: Built-in trade-off or separate strategic concerns? Organizational Behavior and Human Decision Processes 90 (January): 148-164.
Fox, C., A. Levitin, and T.C. Redman. 1994. The notion of data and its quality dimensions. Information Processing and Management 30 (January): 9-19.
French, C. 2003. TransAlta says clerical snafu costs it $24 million. Globeinvestor, June 19. http://www.globeinvestor.com/servlet/Ariclenews/stroy/RC/20030603/2003-0603F23.html.
Galletta, D.F., and E. Hufnagel. 1992. A model of end-user computing policy: Determinants of organizational compliance. Information and Management 22 (January): 1-18.
_______, D. Abraham, M. El Louadi, W. Lekse, Y. Pollalis, and J. Sampler. 1993. An empirical study of spreadsheet error-finding performance. Accounting, Management, and Information Technologies 3 (February): 79-95.
_______, K.S. Hartzel, S.E. Johnson, J.L. Joseph, and S. Rustagi. 1996-97. Spreadsheet presentation and error detection: An experimental study. Journal of Management Information Systems 13 (Winter): 45-63.
Gilman, H., and W. Bulkeley. 1986. Can software firms be held responsible when a program makes a costly error? Wall Street Journal, August 4: 17.
Godfrey, K. 1995. Computing error at Fidelity's Magellan fund. The Risks Digest 16, January 6.
Goff, J. 2004. In the fast lane. CFO.com, December 7. http://www.cfo.com/search/search.cfm?qr=in+the+fast+lane.
Guimaraes, T., Y.P. Gupta, and R.K. Rainer, Jr. 1999. Empirically testing the relationship between end-user computing problems and information center success factors. Decision Sciences 30 (Spring): 393-413.
Hall, M.J.J. 1996. A risk and control oriented study of the practices of spreadsheet application developers. Proceedings of the Twenty-Ninth Hawaii International Conference on Systems Sciences, Vol. II: 364-373.
Harris, R.W. 2000. Schools of thought in research into end-user computing success. Journal of End User Computing 12 (January-March): 24-35.
Hayen, R.L., and R.M. Peters. 1989. How to ensure spreadsheet integrity. Management Accounting 60 (April): 30-33.
Hendry, D.G., and T.R. Green. 1994. Creating, comprehending, and explaining spreadsheets: A cognitive interpretation of what discretionary users think of the spreadsheet model. International Journal of Human-Computer Studies 40 (June): 1033-1065.
Horowitz, A.S. 2004. Spreadsheet overload? Spreadsheets are growing like weeds, but they may be a liability in the Sarbanes-Oxley era. Computerworld, May 24. http://www.computerworld.com/printthis/2004/0,4814,93292,00.html.
Janvrin, D., and J. Morrison. 2000. Using a structured design approach to reduce risks in end user spreadsheet development. Information & Management 37: 1-12.
Klein, B. 1997. How do actuaries use data containing errors?: Models of error detection and error correction. Information Resources Management Journal 10 (Fall): 27-36.
_______. 1998. Data quality in the practice of consumer product management: Evidence from the field. Data Quality 4 (1) (September): 1-20.
_______. 2001. Detecting errors in data: Clarification of the impact of base rate expectations and incentives. Omega: The International Journal of Management Science 29: 391-404.
_______, D. Goodhue, and G. Davis. 1997. Can humans detect errors in data? Impact of base rates, incentives, and goals. MIS Quarterly (June): 169-194.
Kreie, J., T. Cronan, J. Pendley, and J. Renwick. 2000. Applications development by end-users: Can quality be improved? Decision Support Systems 29: 143-152.
Kruck, S.E., and S.D. Sheetz. 2001. Spreadsheet accuracy theory. Journal of Information Systems Education 12 (2): 93-108.
Lanza, R. Financial-statement analysis is back in style. IT Audit 7: June 1.
Leib, S. 2003. Spreadsheets forever. CFO.com (September 15).
March, S.T., and G.F. Smith. 1995. Design and natural science research on information technology. Decision Support Systems 15: 251-26.
Mauldin, E.G., and L.V. Ruchala. 1999. Towards a meta-theory of accounting information systems. Accounting, Organizations and Society 24 (May): 317-331.
McGill, T., V. Hobbs, and J. Klobas. 2003. User-developed applications and information systems success: A test of DeLone and McLean's model. Information Resources Management Journal 16 (Jan-Mar): 24-45.
McPhie, D. 2000. AICPA/CICA SysTrust™ principles and criteria. Journal of Information Systems 14 (Supplement): 1-8.
Morrison, M., J. Morrison, J. Melrose, and E.V. Wilson. 2002. A visual code inspection approach to reduce spreadsheet linking errors. Journal of End User Computing 14 (July-September): 51-63.
Panko, R.R. 1998. What we know about spreadsheet errors. Journal of End User Computing 10 (Spring): 15-21.
_______. 1999. Applying code inspection to spreadsheet testing. Journal of Management Information Systems 16 (2): 159-176.
_______. 2000. Spreadsheet errors: What we know. What we think we can do. Proceedings of the Spreadsheet Risk Symposium, European Spreadsheet Risks Interest Group (EuSpRIG), Greenwich, England, July 17-18.
_______, and R.P. Halverson, Jr. 1997. Are two heads better than one? (At reducing errors in spreadsheet modeling?) Office Systems Research Journal 15 (Spring): 21-32.
Pierce, E. 2004. Assessing data quality with control matrices. Communications of the ACM 47 (February): 82-86.
Powell, A., and J.E. Moore. 2002. The focus of research in end user computing: Where have we come since the 1980's? Journal of End User Computing 14 (January-March): 3-22.
PricewaterhouseCoopers. 2004. The use of spreadsheets: Considerations for section 404 of the Sarbanes-Oxley Act. Available at: http://www.cfodirect.com/cfopublic.nsf/
Pryor, L. 2003. Abstraction. http://www.louisepryor.com/showTopic.do?topic=5
Reason, J.T. 1990. Human Error. New York: Cambridge University Press.
Rich, J.S., I.
Solomon, and K.T. Trotman. 1997. The audit review process: A characterization from the persuasion perspective. Accounting, Organizations, and Society 22 (July): 481-505. 19 Rockart, J. and L. Flannery. 1983. The management of end user computing. Communications of the ACM 26: 776-784. Ronen, B., M.A. Palley, and H. Lucas. 1989. Spreadsheet analysis and design. Communications of the ACM 32 (1): 84-93. Ross, J. 1996. Spreadsheet risk: How and why to build a better spreadsheet. Harvard Business Review (September-October): 10-12. Savitz, E.J., 1994. Magellan loses its compass. Barron’s 84 (50): December 12: 35. Sellen, A.J., 1994. Detection of everyday errors. Applied Psychology: An International Review 43(4): 475-498. Sprinkle, G.B., and R.M. Tubbs. 1998. The effects of audit risk and information important on auditor memory during working paper review. The Accounting Review 73 (October): 475-502. Stone, D.N. 2002. Researching the revolution: Prospects and possibilities for the journal of information systems. Journal of Information Systems 16 (Spring): 1-6. Stone, E.F. and H.G. Gueutal. 1985. An empirical derivation of the dimensions along which characteristics of jobs are perceived. Academy of Management Journal: 376-393. Sutton, S.G., and V. Arnold. 2002. Foundations and frameworks for AIS research. In Researching Accounting as an Information Systems Discipline, edited by V. Arnold and S. Sutton, 1-10. Sarasota FL: American Accounting Association. Teo, T.S.H., and M. Tan. 1999. Spreadsheet development and “what-if” analysis: Quantitative versus qualitative errors. Journal of Accounting, Management & Information Technology 9: 141-160. _______., and J.H. Lee-Partridge. 2001. Effects of error factors and prior incremental practice on spreadsheet error detection: An experimental study. Omega The International Journal of Management Science 29: 445-456. Vessey, I. 1991. Cognitive fit: A theory-based analysis of the graphs versus tables literature. 
Decision Sciences 22 2 (Spring): 219-241. Worthen, B. 2003. Playing by new rules. CIO Magazine (May 15): http://www.cio.com/archive/051503/rules.html. 20 FIGURE 1 Research Framework for Improving the Integrity of User-Developed Applications (Based on Mauldin and Ruchala Meta-Theory of AIS (1999)) Cognitive Domain Knowledge Application Experience System Design Alternatives Task Performance Task Characteristics Task Technological Application-Based Aids Information Presentation UserUserDeveloped Developed Applications Applications Mental Process Complexity Task Demands Frequency Application Integrity Organizational Planning & Design Testing & Review Procedures Time Constraints User-Developed Application Policy 21 Task Decision Performance