Multiple Regression Models

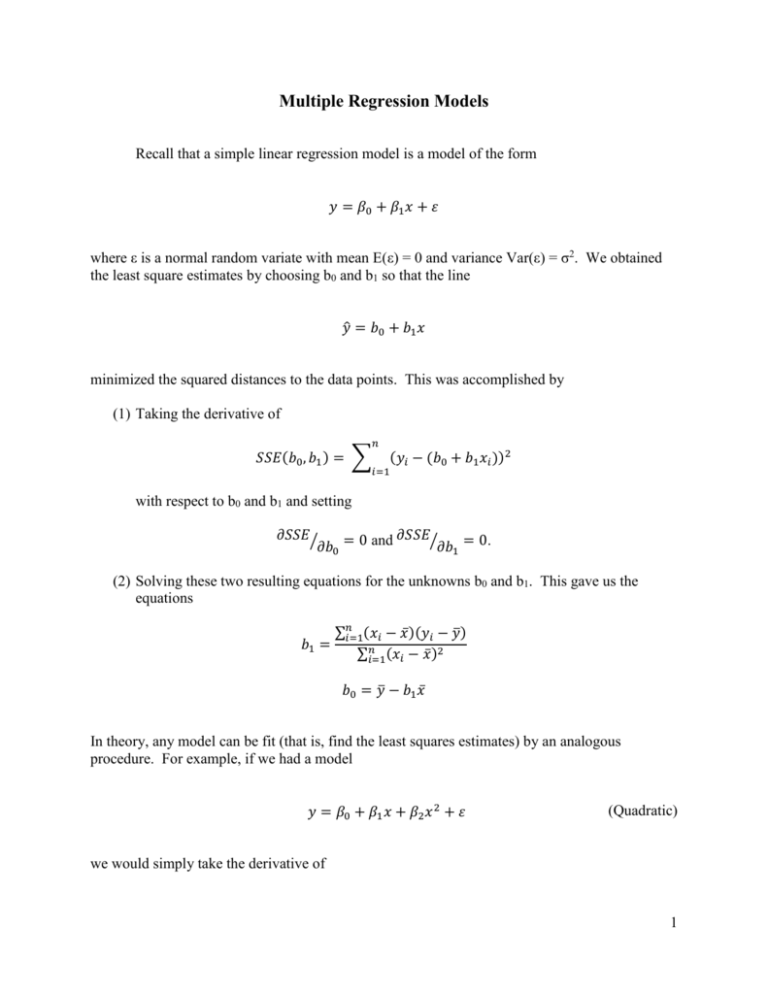

Recall that a simple linear regression model is a model of the form

$$y = \beta_0 + \beta_1 x + \varepsilon$$

where ε is a normal random variate with mean E(ε) = 0 and variance Var(ε) = σ². We obtained the least squares estimates by choosing b0 and b1 so that the line

$$\hat{y} = b_0 + b_1 x$$

minimized the sum of the squared vertical distances to the data points. This was accomplished by

(1) Taking the derivative of

$$SSE(b_0, b_1) = \sum_{i=1}^{n} \left( y_i - (b_0 + b_1 x_i) \right)^2$$

with respect to b0 and b1 and setting $\partial SSE / \partial b_0 = 0$ and $\partial SSE / \partial b_1 = 0$.

(2) Solving these two resulting equations for the unknowns b0 and b1. This gave us the

equations

$$b_1 = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{n} (x_i - \bar{x})^2}$$

$$b_0 = \bar{y} - b_1 \bar{x}$$
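The two closed-form equations above can be sketched in a few lines of Python. This is a minimal illustration only; the function name `fit_simple_linear` and the four data points are made up for the example and do not come from the course workbook.

```python
def fit_simple_linear(x, y):
    """Return (b0, b1) from the closed-form least squares formulas."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    # Numerator and denominator of the formula for b1
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b1 = sxy / sxx
    b0 = y_bar - b1 * x_bar
    return b0, b1


# Made-up illustrative data lying exactly on the line y = 1 + 2x
x = [1.0, 2.0, 3.0, 4.0]
y = [3.0, 5.0, 7.0, 9.0]

b0, b1 = fit_simple_linear(x, y)
print(b0, b1)  # intercept 1.0 and slope 2.0 for this data
```

Because the points were chosen to lie exactly on a line, the estimates recover that line; with real data the fitted line would not pass through every point.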

In theory, the least squares estimates for any such model can be found by an analogous procedure. For example, if we had a model

$$y = \beta_0 + \beta_1 x + \beta_2 x^2 + \varepsilon \qquad \text{(Quadratic)}$$

we would simply take the derivative of

$$SSE(b_0, b_1, b_2) = \sum_{i=1}^{n} \left( y_i - (b_0 + b_1 x_i + b_2 x_i^2) \right)^2$$

with respect to b0, b1, and b2 and set the resulting three equations equal to zero. These three

equations are then solved for the three unknowns, b0, b1, and b2 to give the estimated function

$$\hat{y} = b_0 + b_1 x + b_2 x^2$$

of equation (Quadratic). Equation (Quadratic) is an example of a multiple regression model.
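As a rough sketch of the procedure just described: setting the three partial derivatives of SSE to zero produces a linear system, commonly called the normal equations, which in matrix form reads (X'X)b = X'y, where the columns of X are 1, x, and x². The example below assumes NumPy is available and uses made-up data generated exactly from a known quadratic, so the solution can be checked by eye.

```python
import numpy as np

# Made-up illustrative data generated exactly from y = 2 + 0.5x + 0.25x^2
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = 2.0 + 0.5 * x + 0.25 * x**2

# Design matrix with one column per term in the model: 1, x, x^2
X = np.column_stack([np.ones_like(x), x, x**2])

# Three equations in three unknowns: (X'X) b = X'y
b = np.linalg.solve(X.T @ X, X.T @ y)
print(b)  # approximately [2.0, 0.5, 0.25]
```

The same pattern extends to any model that is linear in its coefficients: add one column to X per term.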

Another example of a multiple regression model is

$$y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \beta_3 x_3 + \varepsilon$$

where x1, x2, and x3 are three independent variables which are being used to describe the

dependent variable y.

How does a multiple regression model compare to a simple regression model?

An analyst typically does not know what the preferred or best regression model is a priori.

Instead, the model is typically identified through careful exploration and assessment.

Unfortunately, the equations for b0 and b1 (estimates of β0 and β1) will not be the same in model (Quadratic) as they were in the simple linear regression model. This implies that every time we select a different model, we have to refit the model from scratch. Fortunately, Excel provides a simple way to get the results for each model.

Example: The amount of electrical power (y) consumed by a manufacturing facility during a

shift is thought to depend upon (1) the number of units produced during the shift (x1), and (2) the

shift involved (first or second). The following model is being investigated.

$$y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \varepsilon \qquad (1)$$

where

$$x_2 = \begin{cases} 0 & \text{if the units are produced during shift 1,} \\ 1 & \text{if the units are produced during shift 2.} \end{cases}$$

Data was collected to fit the model as shown below.

According to the output in Multiple Regression.xlsx, our least squares fit is

$$\hat{y} = 7.82 + 1.47 x_1 + 3.75 x_2$$
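To see what the indicator variable does, the fitted equation can be evaluated for the same production level on each shift. The sketch below uses the coefficients reported above; the function name `predict_power` and the production level of 10 units are hypothetical choices made only for illustration.

```python
def predict_power(units, shift):
    """Predicted power from the fitted equation; shift is 1 or 2."""
    x2 = 0 if shift == 1 else 1  # indicator variable: 1 only for shift 2
    return 7.82 + 1.47 * units + 3.75 * x2


units = 10  # hypothetical production level, chosen only for illustration
shift1 = predict_power(units, 1)
shift2 = predict_power(units, 2)

print(round(shift1, 2))           # 22.52
print(round(shift2, 2))           # 26.27
print(round(shift2 - shift1, 2))  # 3.75, the coefficient on x2
```

For any fixed number of units, the two predictions differ by exactly the coefficient on x2, so the model describes two parallel lines, one per shift.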

Note: To run Data/Data Analysis/Regression, it is necessary to have all of the independent

variables (x’s) in contiguous columns.

Exercise: For the electrical power problem, fit the model

$$y = \beta_0 + \beta_1 x_1 + \beta_3 x_1^2 + \beta_2 x_2 + \varepsilon \qquad (2)$$

Evaluating the Model

(1) F-test: The outputs for models (1) and (2) both provided an F-statistic in the ANOVA table

and t-statistics in the table which described the estimates of the parameters (bottom table). In the

case of a multiple regression model, the F-test is testing to see if any of the independent variable

coefficients in the model are nonzero. For model (1) this becomes:

$$H_0: \beta_1 = \beta_2 = 0$$

And for model (2):

$$H_0: \beta_1 = \beta_2 = \beta_3 = 0$$

versus the alternative that at least one βi ≠ 0, which is to say that at least one of the x terms

belongs in the model. What would we conclude for model (1)? Model (2)?
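For reference, the F-statistic in the ANOVA table is the ratio of the mean square for regression, SSR/k, to the mean square error, SSE/(n − k − 1), where k is the number of independent variables. The sketch below computes it for a model of the same form as model (1). It assumes NumPy is available, and the eight observations are made up for illustration; they are not the data in Multiple Regression.xlsx.

```python
import numpy as np

# Made-up illustrative data (NOT the data in Multiple Regression.xlsx)
x1 = np.array([2.0, 4.0, 5.0, 7.0, 8.0, 10.0, 3.0, 6.0])   # units produced
x2 = np.array([0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 1.0])    # shift indicator
y = np.array([11.0, 13.5, 15.5, 18.0, 23.5, 26.0, 16.0, 20.5])

n, k = len(y), 2
X = np.column_stack([np.ones(n), x1, x2])
b, *_ = np.linalg.lstsq(X, y, rcond=None)   # least squares estimates

y_hat = X @ b
sse = np.sum((y - y_hat) ** 2)              # unexplained variation
ssr = np.sum((y_hat - y.mean()) ** 2)       # explained variation

f_stat = (ssr / k) / (sse / (n - k - 1))
print(f_stat)
```

A large F-statistic is evidence against H0. Excel reports the corresponding p-value in the ANOVA table as "Significance F".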

(2) t-tests: The t-tests are, as before, testing hypotheses about the individual βi:

$$H_0: \beta_i = 0$$

$$H_a: \beta_i \neq 0$$

Consider the term for units produced in model (1). Does it appear that β1 ≠ 0? How about in model (2)?
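Each t-statistic is the estimate divided by its standard error, where the standard errors come from the diagonal of MSE·(X'X)⁻¹. The sketch below computes the t-statistic for units produced under models of the same form as (1) and (2) so the two can be compared side by side. It assumes NumPy is available; the helper `t_statistics` and the data are made up for illustration and are not the workbook's values.

```python
import numpy as np


def t_statistics(X, y):
    """Return least squares estimates and their t-statistics."""
    n, p = X.shape                       # p = k + 1 columns, incl. intercept
    xtx_inv = np.linalg.inv(X.T @ X)
    b = xtx_inv @ X.T @ y
    resid = y - X @ b
    mse = resid @ resid / (n - p)        # SSE / (n - k - 1)
    se = np.sqrt(mse * np.diag(xtx_inv)) # standard error of each estimate
    return b, b / se


# Made-up illustrative data (NOT the data in Multiple Regression.xlsx)
x1 = np.array([2.0, 4.0, 5.0, 7.0, 8.0, 10.0, 3.0, 6.0])
x2 = np.array([0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 1.0])
y = np.array([11.0, 13.5, 15.5, 18.0, 23.5, 26.0, 16.0, 20.5])
ones = np.ones_like(x1)

# Columns ordered to match beta0, beta1, beta2 (and beta3 for model (2))
_, t_model1 = t_statistics(np.column_stack([ones, x1, x2]), y)
_, t_model2 = t_statistics(np.column_stack([ones, x1, x2, x1**2]), y)

print("t for x1 in model (1):", t_model1[1])
print("t for x1 in model (2):", t_model2[1])
```

Comparing the two printed values shows how the evidence for the same variable can change once another term enters the model.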

This is one of the main difficulties with building models using multiple regression. It is possible

(our models are an immediate example) for a variable to appear important or not important

depending on what other variables are included in the model. (Consider the model which only

includes units produced as shown in the sheet Units produced only.)

These independent variables contain information about why the dependent variable y varies from

observation to observation. A problem arises because the independent variables overlap with

regard to this information; in fact, they contain information about each other, referred to as

multicollinearity. The most direct way to see this is through a correlation analysis. Correlation

is a measure of the strength of the linear relationship between two variables. In our model, x1 and x1² happen to be strongly correlated (this is certainly not always the case) because of the

values in the data set—see the sheet entitled Model (3). Multicollinearity confounds our ability

to attribute the independent variables’ influence on the dependent variable.
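A small sketch of the correlation check, using only the standard library. The helper `pearson_r` and both sets of values are made up for illustration. The first set is all positive, as production counts would be; the second is symmetric about zero, which shows why a strong correlation between a variable and its square is not guaranteed.

```python
from math import sqrt


def pearson_r(u, v):
    """Sample correlation coefficient between two equal-length lists."""
    n = len(u)
    u_bar, v_bar = sum(u) / n, sum(v) / n
    suv = sum((a - u_bar) * (b - v_bar) for a, b in zip(u, v))
    suu = sum((a - u_bar) ** 2 for a in u)
    svv = sum((b - v_bar) ** 2 for b in v)
    return suv / sqrt(suu * svv)


# Hypothetical all-positive values, like counts of units produced
units = [2, 3, 4, 5, 6, 7, 8, 10]
r_positive = pearson_r(units, [u ** 2 for u in units])

# Hypothetical values symmetric about zero
centered = [-2, -1, 0, 1, 2]
r_centered = pearson_r(centered, [c ** 2 for c in centered])

print(r_positive)  # close to 1: x and x^2 carry nearly the same information
print(r_centered)  # 0.0: no linear relationship between x and x^2 here
```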

Further analysis and reflection may reconcile the problem. In our case, the units-produced-squared term is not really justified unless there is reason to believe that units produced influence

the power consumption in a non-linear way.

(3) r² and its derivatives: As before, r² is the fraction of variation explained by the independent variables in the model.

$$r^2 = \frac{SSR}{SST}$$

In our case for model (1), r² = 257.71/269.875 = 95.49%, while for model (2), r² = 261.421/269.875 = 96.87%.
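The arithmetic can be checked directly from the sums of squares quoted above. The variable names are chosen for this sketch only.

```python
sst = 269.875          # total sum of squares (same data, so same for both models)
ssr_model1 = 257.71    # regression sum of squares, model (1)
ssr_model2 = 261.421   # regression sum of squares, model (2)

r2_model1 = ssr_model1 / sst
r2_model2 = ssr_model2 / sst

print(f"{r2_model1:.2%}")  # 95.49%
print(f"{r2_model2:.2%}")  # 96.87%
```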

It appears that a little more variation was explained by including the term x1² in our model; however, one needs to be careful in using this measure to compare models. For example, if you decided to include another variable in the model, say x3 = sunspot activity during the shift, then your new model is guaranteed to have an r² at least as high as before (and almost always higher), even though x3 probably does not have an effect on y.
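The sunspot point can be illustrated with simulated data. In the sketch below, which assumes NumPy is available, y depends only on x1, and a column of pure random noise stands in for the irrelevant variable. All values, the seed, and the helper `r_squared` are made up for the example.

```python
import numpy as np


def r_squared(X, y):
    """Fraction of variation in y explained by the columns of X."""
    b, *_ = np.linalg.lstsq(X, y, rcond=None)
    sse = np.sum((y - X @ b) ** 2)
    sst = np.sum((y - y.mean()) ** 2)
    return 1.0 - sse / sst


rng = np.random.default_rng(0)   # fixed seed so the run is repeatable
n = 20
x1 = rng.uniform(0, 10, n)
y = 5.0 + 1.5 * x1 + rng.normal(0, 1, n)   # y truly depends on x1 only
noise = rng.normal(0, 1, n)                # irrelevant "sunspot" stand-in

ones = np.ones(n)
r2_without = r_squared(np.column_stack([ones, x1]), y)
r2_with = r_squared(np.column_stack([ones, x1, noise]), y)

print(r2_without, r2_with)   # the second value is never smaller
```

The increase comes purely from the extra freedom least squares has to reduce SSE, not from any real relationship.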

It is reasonable to use r² to compare our model (1) with another model that also has two independent variables. The model with the higher r² would appear to be explaining a greater proportion of the variability, although other aspects of the models, such as the residuals, should also be examined.

The measure Multiple R is obtained by taking the square root of r². This measures the correlation between the dependent variable y and the set of the independent variables, as if they were a single variable.
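One way to read "as if they were a single variable" is that, for a least squares fit with an intercept, Multiple R equals the ordinary correlation between y and the fitted values ŷ. The sketch below checks this numerically. It assumes NumPy is available and uses made-up data, not the workbook's.

```python
import numpy as np

# Made-up illustrative data (NOT the data in Multiple Regression.xlsx)
x1 = np.array([2.0, 4.0, 5.0, 7.0, 8.0, 10.0, 3.0, 6.0])
x2 = np.array([0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 1.0])
y = np.array([11.0, 13.5, 15.5, 18.0, 23.5, 26.0, 16.0, 20.5])

X = np.column_stack([np.ones(len(y)), x1, x2])
b, *_ = np.linalg.lstsq(X, y, rcond=None)
y_hat = X @ b   # fitted values combine x1 and x2 into one variable

r2 = np.sum((y_hat - y.mean()) ** 2) / np.sum((y - y.mean()) ** 2)
multiple_r = np.sqrt(r2)
corr_y_yhat = np.corrcoef(y, y_hat)[0, 1]

print(multiple_r, corr_y_yhat)   # the two values agree
```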

The measure Adjusted R Square is obtained from the computation

$$\text{Adj } r^2 = 1 - \left[ (1 - r^2) \, \frac{n - 1}{n - k - 1} \right]$$

where k = the number of independent variables in the model. This measure attempts to correct for two issues: (1) the number of independent variables in the model, thus allowing us to better compare this measure among models with different numbers of independent variables, and (2) predicting the actual amount of variation which will be explained if the model is used for future data sets. Adjusted R Square will never be larger than r², and unlike r² it can be negative.
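A short sketch of the computation follows. The function name `adjusted_r2` and the input values are hypothetical, chosen only to show one typical case and one case where the adjustment turns the measure negative.

```python
def adjusted_r2(r2, n, k):
    """Adjusted R Square for n observations and k independent variables."""
    return 1.0 - (1.0 - r2) * (n - 1) / (n - k - 1)


# Hypothetical inputs, for illustration only
typical = adjusted_r2(0.90, n=20, k=2)   # strong fit, few variables
weak = adjusted_r2(0.10, n=10, k=5)      # weak fit, many variables

print(round(typical, 4))  # 0.8882, slightly below r2 = 0.90
print(round(weak, 4))     # -1.025, negative despite r2 = 0.10
```

The penalty grows as k approaches n − 1, which is why a weak model with many variables and few observations can end up with a negative value.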
