Volya Kapatsinski - Linguistics

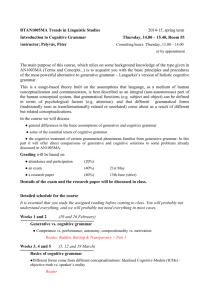

GLOSS and the Department of Linguistics Colloquium

November 1, at 3pm (not 3:30) in 202 Agate Hall

Volya Kapatsinski, Department of Linguistics, University of Oregon

"Some controversial issues in linguistic theory: Redundancy, individual variability, and the need for multimodel inference"

Epigraph: "I am quite unwilling to grant that our brain-storage has any great use for economy; instead, I feel that extravagant redundancy is built in all along the line, and table look-up rather than algorithm is the normal behavior… A linguist who could not devise a better grammar than is present in any speaker’s brain ought to try another trade." Householder (1966:99-100)

Abstract: Cognitive and constructionist approaches to grammar agree that grammar consists primarily (for some, exclusively) of knowledge of form-meaning pairings (a.k.a. constructions). The constructions are language-specific and must be learned from one's experience with language rather than known innately. This much is uncontroversial. Nonetheless, a number of foundational issues remain unsettled.

One such issue is the issue of abstraction during learning: does acquisition of language involve acquisition of grammar, an intricate web of predictive relationships amongst features of the experienced stimuli, or would simple storage of the experienced stimuli coupled with analogical reasoning suffice, as exemplar models suggest (e.g., Skousen 1989)? I argue that pure exemplar models are fatally flawed, and that it is necessary to learn which features are predictive of which other features. Nonetheless, exemplar models work very well in many cases (e.g., Arndt-Lappe 2011, Eddington 2000, Ernestus & Baayen 2003). Why is that? I argue that this is because linguistic units are multidimensional and redundant: every feature is predictable from many other features. This often makes it possible to predict any feature by letting all other features vote, without giving some of them a stronger voice. Yet, recalcitrant exceptions where this strategy fails remain (e.g., Albright & Hayes 2003, Kalyan 2012) and reveal that we actually do learn which features are predictive of others.

Another issue is whether the goal of grammatical description should be description of E-Language (the language out there, observed in a corpus) or I-Language (the system of mental representation generating the language out there within an individual, which is not directly observable; Chomsky 1986). The terms "cognitive grammar" (Langacker 1987) and "cognitive linguistics" (Lakoff 1987) imply the I-Language goal. How can this goal be reconciled with the fact that language conventionalization is at the level of E-Language, the level of observable behavior (Weinreich, Labov & Herzog 1968), and that there is much individual variability in the generalizations that one draws from linguistic experience (e.g., Householder 1966, Barlow 2010, Dabrowska 2012, Misyak & Christiansen 2010, Yu 2010)? Individual variability at the level of mental representation can be tolerated, and is inevitable, in a redundant system where many different grammars will produce the same behavior much of the time (e.g., Ernestus & Baayen 2003).

If we are then to keep to the I-Language goals of cognitive grammar, what we should infer from a corpus is not a single grammar but rather an ensemble of grammars, each grammar weighted by how much it is supported by the corpus. This ensemble can then be used to make predictions about future samples of language drawn from the same population. Fortunately, multimodel inference techniques (Burnham & Anderson 2002, Strobl et al. 2008) are designed to do exactly that. I shall argue that multimodel inference provides a principled solution to the problem of idiolects for the study of I-Language/cognitive grammar.
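The idea of an ensemble of grammars weighted by corpus support can be illustrated with a minimal sketch. It assumes one common weighting scheme from the multimodel inference literature, Akaike weights computed from AIC scores; the abstract does not say which scheme the talk adopts. The three candidate grammars, their AIC values, and their predictions are hypothetical numbers chosen only for illustration.

```python
import math


def akaike_weights(aic_scores):
    """Turn AIC scores (lower is better) into weights that sum to 1."""
    best = min(aic_scores)
    # Relative likelihood of each model given the data: exp(-delta / 2)
    relative = [math.exp(-0.5 * (aic - best)) for aic in aic_scores]
    total = sum(relative)
    return [r / total for r in relative]


def ensemble_prediction(weights, predictions):
    """Model-averaged prediction: each model votes in proportion to its weight."""
    return sum(w * p for w, p in zip(weights, predictions))


# Hypothetical AIC scores for three candidate grammars fit to the same corpus
aic = [100.0, 102.0, 110.0]
weights = akaike_weights(aic)

# Hypothetical probabilities each grammar assigns to some form in a new sample
preds = [0.9, 0.6, 0.2]
averaged = ensemble_prediction(weights, preds)

print(weights)
print(averaged)
```

No grammar is discarded here: a weakly supported grammar still contributes to the averaged prediction, only with a smaller weight.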