Chapter 5 Handout

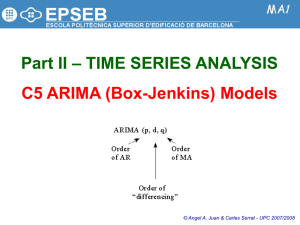

Ch 5. – ARIMA Modeling (Box-Jenkins Models) - Computing in JMP & R

Example 5.1: Loan Applications (pgs. 267 – 271)

To begin, we select Modeling > Time Series, which produces a plot of the time series and computes the ACF and PACF for the series. Notice the note that appears to the right of the Modeling drop-down menu.

We can see that, in addition to the ACF plots, there are options to fit models to the time series and make forecasts from them. ARIMA and Seasonal ARIMA are the models we examine in Chapter 5.

The dialog box to begin modeling the Dow Jones Index is shown below. Notice that the time series data must be evenly spaced, e.g. daily, weekly, quarterly, or annually.

Below is the default output from the Time Series modeling option.

Given that the ACF dies down in an exponential or damped exponential fashion and that the PACF cuts off after lag 2, we might choose to fit an AR(2) model, i.e. a second-order autoregressive model.

Select ARIMA if the time series is not seasonal. If it is nonstationary, you should consider differencing in an attempt to create a stationary time series based on the differences. The order of differencing is specified through the choice of the differencing parameter (d). ARIMA models are denoted generically as ARIMA(p,d,q), where

p = autoregressive order

d = order of differencing needed to create a stationary or weakly stationary time series

q = moving-average order

The dialog box for ARIMA modeling in JMP for stationary or weakly stationary time series is shown below; here we have selected an AR(2) model to be fit to this time series.

The results of the fit are shown below. The parameter estimates for $\mu$, $\phi_1$, and $\phi_2$ are shown below along with an analysis of the residuals from the AR(2) fit.

Parameter estimates

Residual analysis

We can save the Prediction Formula from the Model: AR(2) pull-down menu, which opens a spreadsheet containing the fitted values for the observed data and forecasts for the next 25 time periods.

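
The identification step in this example (ACF dies down, PACF cuts off after lag 2, so try an AR(2)) rests on the sample ACF and PACF that JMP plots. As a rough sketch of what those quantities are, the Python below computes them from scratch, using the standard biased autocorrelation estimator and the Durbin-Levinson recursion for the partial autocorrelations. The function names and the data values are made up for illustration; this is not JMP's implementation and not the loan applications data.

```python
def sample_acf(y, max_lag):
    """Return sample autocorrelations r_0, ..., r_max_lag of the series y."""
    n = len(y)
    mean = sum(y) / n
    dev = [v - mean for v in y]
    c0 = sum(d * d for d in dev)  # n times the lag-0 autocovariance
    return [sum(dev[t] * dev[t - k] for t in range(k, n)) / c0
            for k in range(max_lag + 1)]


def sample_pacf(y, max_lag):
    """Return sample partial autocorrelations at lags 1, ..., max_lag."""
    r = sample_acf(y, max_lag)
    pacf, prev = [], []  # prev holds the coefficients of the order k-1 fit
    for k in range(1, max_lag + 1):
        # Durbin-Levinson: phi_kk from the autocorrelations and the previous fit
        num = r[k] - sum(prev[j] * r[k - 1 - j] for j in range(k - 1))
        den = 1.0 - sum(prev[j] * r[j + 1] for j in range(k - 1))
        phi_kk = num / den
        prev = [prev[j] - phi_kk * prev[k - 2 - j] for j in range(k - 1)] + [phi_kk]
        pacf.append(phi_kk)
    return pacf


# Made-up illustrative values, not the loan applications data.
y = [71.0, 57.0, 62.0, 64.0, 65.0, 67.0, 65.0, 82.0, 70.0, 74.0, 75.0, 81.0]
print([round(v, 3) for v in sample_acf(y, 4)])
print([round(v, 3) for v in sample_pacf(y, 4)])
```

With a real series, a PACF that is large at lags 1 and 2 and near zero afterwards, together with a slowly decaying ACF, is the pattern that points toward an AR(2).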
Example 2: Table E5.1 (pg. 288 text)

An arbitrary time series. Below are plots of this time series and its ACF and PACF.

The ACF plot essentially cuts off after lag 1, but it also appears to decay exponentially to some degree. The PACF has a significant partial autocorrelation at lag 1 as well, and essentially dies down from there. Based on this we could consider two models, an MA(1) or an ARMA(1,1). We will fit both and compare the results.

MA(1) dialog

ARMA(1,1) dialog

MA(1) & ARMA(1,1) fit

Comparing $R^2$ and AIC for both models, we see the AIC is smaller for the MA(1) model but the R-square is larger for the ARMA(1,1). The model summaries for both models are shown below.

Summary of the MA(1) and ARMA(1,1) models; note that the AR(1) term is not significant. So the MA(1) is sufficient, but we should examine the residuals for structure. The residuals from the MA(1) fit are shown below along with the associated ACF and PACF plots.

The residuals from the MA(1) fit show no time-dependent structure, again confirming that the MA(1) model is sufficient for these data.

In cases where the time series is NOT stationary to begin with, we need to create a stationary time series before fitting these models. This is done by differencing the time series to create stationarity and then applying AR and MA models to the differenced series. The differencing is the Integrated part of the ARIMA acronym. For nonseasonal time series, we generally only need to consider first- or second-order differencing. Recall that the first-order difference of a time series is

$$y_t - y_{t-1} = (1 - B)y_t$$

and the second-order difference is the first difference of the first-differenced series, i.e.

$$(1 - B)(1 - B)y_t = (1 - 2B + B^2)y_t = y_t - 2y_{t-1} + y_{t-2}$$

ARIMA models are specified, as seen above, in terms of p, d, and q, where

p = autoregressive order

d = differencing order (1 or 2 typically)

q = moving average order

giving us the ARIMA specification in terms of these orders as ARIMA(p,d,q).

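
To make the differencing formulas concrete, here is a small Python sketch that forms the first and second differences and checks that differencing twice agrees with the expanded form $y_t - 2y_{t-1} + y_{t-2}$. The helper name `difference` and the data values are hypothetical, chosen only so the arithmetic is easy to follow.

```python
def difference(y, lag=1):
    """Return y_t - y_{t-lag}; the result is `lag` observations shorter than y."""
    return [y[t] - y[t - lag] for t in range(lag, len(y))]


# Made-up values with a quadratic trend.
y = [12.0, 15.0, 19.0, 24.0, 30.0, 37.0]

first = difference(y)        # (1 - B) y_t
second = difference(first)   # (1 - B)(1 - B) y_t

# The expanded form y_t - 2*y_{t-1} + y_{t-2} gives the same values.
direct = [y[t] - 2 * y[t - 1] + y[t - 2] for t in range(2, len(y))]

print(first)    # [3.0, 4.0, 5.0, 6.0, 7.0]
print(second)   # [1.0, 1.0, 1.0, 1.0]
print(second == direct)   # True
```

The first difference still trends upward here, while the second difference is constant, which is the kind of behavior that would suggest d = 2 for a series like this one.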
We illustrate this process with Example 3 below.

Example 3: Weekly Toothpaste Sales (Toothpaste Sales.JMP)

Below is a plot of the weekly toothpaste sales.

We can see the PACF cuts off after lag 1; however, the ACF does not decay, due to the obvious nonstationarity of the time series. In order to create a stationary time series we will consider the first difference of the time series. Below is a plot of the first-differenced time series formed using the Dif function in JMP.

The ACF plot now decays and the PACF plot again cuts off after lag 1. Thus an AR(1) model applied to the first differences might be an adequate model for these data. The other thing to note before fitting the ARIMA(1,1,0) model is that the mean of the differenced time series is not close to 0, thus an intercept term should be used in the model. Often a differenced time series will have a mean close to 0, and thus an intercept may not be needed in the ARIMA model.

You fit the ARIMA(1,1,0) model from the plot of the ORIGINAL time series, not the first-differenced one. The order of differencing (d) is specified when fitting the ARIMA model in JMP.

The dialog box for the ARIMA(1,1,0) model for these data is shown below.

The fitted model is summarized below.

The model $R^2 = .9999$, indicating a very good fit. The residuals are shown below.

The residuals look fine, so we could then use this model to obtain forecasts, etc.

Example 4: DVD Sales (DVD Sales.JMP)

The time series is clearly nonstationary, as evidenced by the plot of the time series and the fact that the ACF plot does not decay exponentially, with many significant autocorrelations at higher-order lags. Again we consider differencing the time series to achieve stationarity.

Note: the mean is essentially 0, so we use a no-intercept model!

The first difference of the time series is essentially stationary; however, the lag 1 and lag 6 autocorrelations are significant, and the significance of the lag 6 autocorrelation in particular is interesting to note. The ACF does appear to decay exponentially with the exception of the lag 6 autocorrelation. The PACF shows lags 1 and 2, and possibly 5, as being significant. Thus we might consider the following models based on these plots:

ARIMA(2,1,0), assuming the ACF decays.

ARIMA(0,1,6), assuming the PACF decays and the lag 6 autocorrelation in the ACF plot is meaningful.

ARIMA(2,1,6), assuming the PACF cuts off after lag 2 and the lag 6 autocorrelation in the ACF plot is meaningful.

Fitting all of these models in JMP yields the following summary statistics regarding the model fits.

ARIMA(2,1,0)

ARIMA(0,1,6)

ARIMA(2,1,6)

The best models appear to be the ARIMA(0,1,6) or the ARIMA(2,1,6) models in terms of R-square; however, the ARIMA(0,1,6) has a smaller AIC. The parameter estimates and significance tests for these two models are shown below.

We can see the AR coefficients are not significant, and neither are the MA terms at the lags between 1 and 6 in the MA(6) part. Unfortunately, in JMP you cannot remove the lag 2 – 5 terms, but it appears the AR terms are not needed; thus our tentative model choice for these data is the ARIMA(0,1,6) model or, since the AR terms are not included, the IMA(1,6) model. The residuals from the IMA(1,6) model are shown below.

As these residuals look great in terms of lack of time dependence, we can use this model to make future forecasts of DVD sales. However, we see the prediction intervals get very wide if we look too far into the future.

Seasonal ARIMA - $ARIMA(p,d,q) \times (P,D,Q)$

When a time series exhibits seasonality/cyclical trends we can use Seasonal ARIMA modeling. This again requires differencing the original time series to create a stationary time series, which can then be modeled using AR and MA models.

We need to handle the seasonal part of the time series separately from the nonseasonal trend. This means we need to identify P, D, Q for the seasonal component and p, d, q for the nonseasonal part. Notice that capital letters are used to denote the seasonal autoregressive (P), differencing (D), and moving average (Q) orders. When fitting a seasonal ARIMA model we therefore have six orders to determine by looking at the appropriate ACF and PACF plots. However, there are fairly restrictive rules of thumb for modeling the seasonal level (see notes) that make this process less daunting.

Seven Step Procedure (see notes):

Step 0 – Plot the time series in the original scale and log transform the response if the seasonal variation is increasing with time.

Step 1 – Use differencing to achieve stationarity. At a minimum, for strongly seasonal data the first seasonal difference $(1 - B^L)y_t = y_t - y_{t-L}$ will generally be needed.

Step 2 – Examine ACF and PACF plots to tentatively identify the nonseasonal level model.

Step 3 – Examine ACF and PACF plots to tentatively identify the seasonal level model.

Step 4 – Combine the models from Steps 2 & 3 to arrive at a tentative overall seasonal ARIMA model, i.e. $ARIMA(p,d,q) \times (P,D,Q)$, where $d$ and $D$ are based on the differencing used to achieve stationarity.

Step 5 – Fit the tentative model and look at p-values, performance statistics, and the ACF/PACF of the residuals from the fit.

Step 6 – Explore other models, repeat Step 5, and choose the “best”.

Example 1: Monthly Number of International Airline Passengers (in 1000’s)

This time series is the number of passengers on a monthly basis, in thousands, for an 11-year period. A plot of the time series in the original scale is shown below along with the associated ACF and PACF plots.

Step 0 - Clearly the ACF plot does not decay exponentially, nor does it cut off after a specific lag, and thus the time series is NOT stationary.
Thus differencing should be used to achieve stationarity before attempting to identify appropriate ARIMA models for the nonseasonal and seasonal levels. We also note that the seasonal variation increases with time, thus a logarithmic transformation of the time series should be used. The plot of the time series transformed to log base 10 is shown below.

The seasonal variation now appears to be stable, thus from here on out $\log_{10}(Passengers)$ will be considered to be the time series ($y_t$). In the JMP calculator use the following formula:

Step 1 - We now consider differencing to achieve stationarity, at both the nonseasonal and seasonal levels. We will add three columns to the spreadsheet for the following differences: $(1 - B)y_t$, $(1 - B^{12})y_t$, and $(1 - B)(1 - B^{12})y_t$. The formulae in the JMP calculator for these, respectively, are:

We can then examine ACF and PACF plots of the differenced series to determine which order of differencing will create a stationary time series.

ACF and PACF for $(1 - B)y_t$

ACF and PACF for $(1 - B^{12})y_t$

ACF and PACF for $(1 - B)(1 - B^{12})y_t$

As the ACF plot of $(1 - B)(1 - B^{12})y_t$ cuts off quickly at both the seasonal and nonseasonal levels, we conclude these values are stationary. Therefore, we will use the behavior of the ACF and PACF plots of this time series to tentatively identify models for both the nonseasonal and seasonal levels of the time series. Also realize that at this point we have identified the order of differencing for both the nonseasonal and seasonal components of the seasonal ARIMA model, namely $d = 1$ and $D = 1$.

Step 2 - At the nonseasonal level the ACF has a spike at lag 1 and, with the possible exception of lag 3, cuts off, and the PACF dies down, with the possible exception of lag 1. Thus we might consider an MA(1) model for the nonseasonal level. Other possibilities include MA(3) and ARMA(1,1). Writing $z_t$ for the stationary differenced series, the MA(1) model is

$$z_t = \mu + \varepsilon_t - \theta_1 \varepsilon_{t-1}$$

Step 3 - At the seasonal level the ACF and PACF both have a spike at lag 12, and at lag 24 we see a significant decrease in the autocorrelation and partial autocorrelation. However, the autocorrelation at lag 24 is smaller than the partial autocorrelation at lag 24, thus we will conclude the ACF cuts off after lag 12 and the PACF dies down after lag 12. This implies we would use a seasonal MA(1) model, which is also consistent with the recommendation that if the autocorrelation at the first seasonal lag is negative we should use a moving average (MA) model rather than an autoregressive (AR) model.

$$z_t = \mu + \varepsilon_t - \theta_{1,12} \varepsilon_{t-12}$$

Step 4 – Combining our tentative conclusions from Steps 2 & 3 we will first consider the model $ARIMA(0,1,1) \times (0,1,1)$ for the log-transformed time series. Because the nonseasonal and seasonal models are both MA models, we need a multiplicative term in the final model (which JMP will include automatically).

$$z_t = \mu + \varepsilon_t - \theta_1 \varepsilon_{t-1} - \theta_{1,12} \varepsilon_{t-12} + \theta_1 \theta_{1,12} \varepsilon_{t-13}$$

Step 5 – Fit the model and examine performance. Next specify the parameters for the $ARIMA(0,1,1) \times (0,1,1)$ model. Given the mean and SD of the differenced series $(1 - B)(1 - B^{12})y_t$ we don’t need to include an intercept.

The summary of the fitted model is shown below. This model has a good R-square and small MAPE and MAE.

An examination of the residuals is shown below.

At this point we could consider fitting the other models that were also possibilities from Steps 2 and 3; however, this model does not exhibit any model deficiencies.

Example 2: Monthly Hotel Occupancy - (Hotels.JMP)

Traveler’s Rest, Inc., operates four hotels in a Midwestern city. The analysts in the operating division of the corporation were asked to develop a model that could be used to obtain short-term forecasts (up to one year) of the number of occupied rooms in the hotels.
These forecasts were needed by various personnel to assist in decision making with regard to hiring additional help during the summer months, ordering materials that have long delivery lead times, budgeting local advertising expenditures, and so on. The available historical data consisted of the number of occupied rooms during each day for the previous 15 years, starting on the first day of January. Because monthly forecasts were desired, these data were reduced to monthly averages. The plot of the original time series is shown below.

What interesting features do we see in this time series?

Strong seasonal pattern.

Increased variation in the seasonal fluctuations over time.

Because of the increased variation in the seasonal cycles we should consider transforming the response to the log scale. Below is a plot of the time series in the log10 scale.

The seasonal variation appears to have stabilized after transforming to the log scale, thus we develop a seasonal ARIMA model for these data in the transformed scale (i.e. new $y_t = \log_{10}(y_t)$). Future forecasts will then need to be back-transformed to the original scale to be of use to the personnel who rely on these forecasts for their planning.

The ACF plot clearly shows that the time series in the log scale is NOT stationary. Thus we need to consider differencing the time series to achieve stationarity. To begin we form the first-order nonseasonal difference, the first-order seasonal difference, and the first-order difference of the first seasonal difference, i.e. $(1 - B)y_t$, $(1 - B^{12})y_t$, and $(1 - B)(1 - B^{12})y_t$. The ACF and PACF plots of the first differences $(1 - B)y_t = y_t - y_{t-1}$ are shown below.

The ACF dies down very slowly at both the seasonal and nonseasonal levels, thus the first-order differences are not stationary. The plots of the ACF and PACF of the first seasonal difference $(1 - B^{12})y_t$ are shown below.

After lag 5 the nonseasonal ACF cuts off and decays in a sinusoidal manner.
In terms of seasonal trend, the ACF cuts off after lag 12 and decays rapidly, as the lag 24 autocorrelation is very small. Thus we might consider the seasonally differenced time series stationary for the purposes of developing a seasonal ARIMA model, particularly if we are willing to consider an MA(5) model for the nonseasonal level of the time series. However, we may still want to at least examine the first difference of the first seasonal differences, i.e. $(1 - B)(1 - B^{12})y_t$. The plots of these differences and the associated ACF and PACF are shown below.

The twice-differenced time series appears to be less stationary than the first seasonal difference above. This shows what happens when we over-difference a time series. Taking an additional difference of a stationary or weakly stationary time series will often produce a time series that is less stationary! Thus we will use the first seasonally differenced time series in developing the AR and MA components of the seasonal and nonseasonal levels (i.e. we have chosen the integrated (I) part of our seasonal ARIMA as d = 0 and D = 1). The plots below again show the ACF and the PACF of the first seasonally differenced series $(1 - B^{12})y_t$, and we will use these to identify potential AR and MA components for both the seasonal and nonseasonal levels of this differenced time series.

ACF and PACF of $(1 - B^{12})y_t$

At the seasonal level we see that the ACF cuts off after lag 12 and the PACF dies down, thus an MA(1) for the seasonal component seems appropriate. Also, the fact that the lag 12 autocorrelation is negative suggests an MA(1) rather than an AR(1) would be appropriate. At the nonseasonal level the choice is not entirely clear. One could potentially argue for one of the following options:

the ACF cuts off at lag 5 and the PACF decays: MA(5)

the PACF cuts off at lag 5 and the ACF decays: AR(5)

both the ACF and PACF cut off at lag 5 and decay thereafter: ARMA(5,5).

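
The differencing choices discussed above, $(1 - B^{12})y_t$ versus $(1 - B)(1 - B^{12})y_t$, can be tried outside JMP with a few lines of Python. The sketch below uses a hypothetical monthly series (linear trend plus a fixed 12-month pattern) rather than the hotel data, and then shows one standard symptom of over-differencing on simulated white noise: the variance roughly doubles and a lag 1 autocorrelation near -0.5 is induced. All names and values are illustrative assumptions.

```python
import random


def difference(y, lag=1):
    """Return y_t - y_{t-lag}; the result is `lag` observations shorter than y."""
    return [y[t] - y[t - lag] for t in range(lag, len(y))]


def variance(x):
    """Sample variance with the usual n - 1 divisor."""
    m = sum(x) / len(x)
    return sum((v - m) ** 2 for v in x) / (len(x) - 1)


def lag1_autocorr(x):
    """Sample autocorrelation at lag 1."""
    m = sum(x) / len(x)
    dev = [v - m for v in x]
    return sum(dev[t] * dev[t - 1] for t in range(1, len(x))) / sum(d * d for d in dev)


# Hypothetical monthly series: linear trend plus a fixed 12-month pattern.
pattern = [0, 1, 3, 6, 8, 9, 10, 9, 6, 3, 1, 0]
y = [100 + 0.5 * t + pattern[t % 12] for t in range(48)]

seasonal = difference(y, lag=12)     # (1 - B^12) y_t: constant 6.0 for this series
both = difference(seasonal, lag=1)   # (1 - B)(1 - B^12) y_t: all zeros here

# Over-differencing: the noise is already stationary, so the extra
# difference only inflates the variance and adds negative autocorrelation.
random.seed(1)
noise = [random.gauss(0, 1) for _ in range(5000)]
over = difference(noise)
ratio = variance(over) / variance(noise)   # theory: about 2
r1 = lag1_autocorr(over)                   # theory: about -0.5

print(seasonal[:3], both[:3])
print(round(ratio, 2), round(r1, 2))
```

A large negative lag 1 autocorrelation appearing only after an extra difference is a common hint that the previous level of differencing was already enough.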
We can fit all of these models along with the MA(1) for the seasonal level and use model performance measures and analysis of the residuals to decide which option is “best” for these data. The basic summaries of these three potential models are shown below.

The best model appears to be the $ARIMA(0,0,5) \times (0,1,1)$ model in terms of AIC and $R^2$. The residuals from this model are shown below.

These residuals are consistent with white noise and thus suggest no remaining time-dependent structure. Forecasts can then be saved from the model pull-down menu, as shown below.

The resulting forecasts for the next $\tau = 25$ months are shown below.

The forecasts and prediction intervals are in the log10 scale. Thus we need to back-transform them in the JMP calculator using the exponential function $10^{y_t}$. For example, to obtain the monthly average occupancy in the original scale, use the JMP calculator to form the expression shown below:

The highlighted rows and columns show the predictions and prediction intervals for the next 25 months in the original scale. The Overlay plot below shows these forecasts and forecast intervals graphically.

A better way to judge the predictive or forecast performance of a time series model is to set aside a portion of the observations at the end of the available time series and develop a model using the portion not set aside to predict the response values for the observations that were set aside. The observations that are set aside are referred to as the test cases, and the cases that are used to develop the model are called the training cases. We will demonstrate this process for the hotel time series below. We will first set aside the average occupancy for the last year of the available time series and use the first 14 years of the time series to predict these observations. There are several ways to do this in JMP and I will show a way that makes it clear what we are actually doing.
First highlight just the rows (not the columns) of the spreadsheet that correspond to the last year and select Table > Subset, which will create a new data table of just these highlighted cases.

The new data table containing the last year of the time series is shown below; you can save this data table as Hotel Guests (Test Cases).JMP. We can then delete these rows from the original data set and rename the original spreadsheet as Hotel Guests (Train Cases).JMP.

Using just the training cases we can then fit our $ARIMA(0,0,5) \times (0,1,1)_{12}$ model, specifying Forecast Periods to be 12. We can then select Save Prediction Formula from the $ARIMA(0,0,5) \times (0,1,1)$ model pull-down menu.

The last 12 rows of the Prediction Formula spreadsheet contain the forecasted values for the test cases we removed; thus comparing these predictions to the actual values will give us a means to examine the predictive performance of our seasonal ARIMA model. We may want to delete the remaining rows to focus on these 12 forecast values.

We want these columns to compare to the test cases.

Copying and pasting these columns into the Hotel Guests (Test Cases).JMP file, we can then compute the predictions in the original scale and compare these predictions to the actual values by computing Absolute Percent Errors (APE) and Absolute Errors (AE). We could then repeat this process for other candidate models for these data and choose the model that gives the best forecasts of these test cases.

Here are the MAPE and MAE for these 12 forecasts.

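
The hold-out comparison described above comes down to a short calculation: back-transform the log10 forecasts with $10^{\hat{y}_t}$, then average the absolute errors (MAE) and the absolute percent errors (MAPE) over the test cases. The Python sketch below shows that arithmetic; the function name and the three made-up values are assumptions for illustration, not the hotel results.

```python
import math


def forecast_errors(actual, forecast_log10):
    """Back-transform log10 forecasts and return (MAPE in percent, MAE)."""
    forecast = [10 ** f for f in forecast_log10]           # back to original scale
    abs_err = [abs(a - f) for a, f in zip(actual, forecast)]             # AE
    abs_pct_err = [100 * e / abs(a) for e, a in zip(abs_err, actual)]    # APE
    n = len(actual)
    return sum(abs_pct_err) / n, sum(abs_err) / n


# Made-up test cases: actual values and forecasts on the log10 scale.
actual = [100.0, 200.0, 400.0]
forecast_log10 = [2.0, math.log10(220.0), math.log10(380.0)]

mape, mae = forecast_errors(actual, forecast_log10)
print(round(mape, 2), round(mae, 2))   # 5.0 13.33
```

Running the same function on the forecasts from each candidate model gives a like-for-like way to choose among them, since every model is scored on the same 12 held-out months.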
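Summary of Non-seasonal ARIMA/Box-Jenkins Models

General Non-seasonal Models

| Model | ACF | PACF |
|---|---|---|
| Moving average of order q: $y_t = \mu + \varepsilon_t - \theta_1 \varepsilon_{t-1} - \theta_2 \varepsilon_{t-2} - \cdots - \theta_q \varepsilon_{t-q}$. Notation: MA(q) | Cuts off after lag q | Dies down |
| Autoregressive of order p: $y_t = \mu + \phi_1 y_{t-1} + \phi_2 y_{t-2} + \cdots + \phi_p y_{t-p} + \varepsilon_t$. Notation: AR(p) | Dies down | Cuts off after lag p |
| Mixed autoregressive-moving average of order (p,q): $y_t = \mu + \phi_1 y_{t-1} + \phi_2 y_{t-2} + \cdots + \phi_p y_{t-p} + \varepsilon_t - \theta_1 \varepsilon_{t-1} - \theta_2 \varepsilon_{t-2} - \cdots - \theta_q \varepsilon_{t-q}$. Notation: ARMA(p,q) | Dies down | Dies down |

| Model | ACF | PACF |
|---|---|---|
| First-order moving average: $y_t = \mu + \varepsilon_t - \theta_1 \varepsilon_{t-1}$. Notation: MA(1) | Cuts off after lag 1, specifically $\rho_1 = \dfrac{-\theta_1}{1 + \theta_1^2}$, $\rho_k = 0$ for $k \ge 2$ | Dies down in a fashion dominated by exponential decay |
| Second-order moving average: $y_t = \mu + \varepsilon_t - \theta_1 \varepsilon_{t-1} - \theta_2 \varepsilon_{t-2}$. Notation: MA(2) | Cuts off after lag 2, specifically $\rho_1 = \dfrac{-\theta_1 (1 - \theta_2)}{1 + \theta_1^2 + \theta_2^2}$, $\rho_2 = \dfrac{-\theta_2}{1 + \theta_1^2 + \theta_2^2}$, $\rho_k = 0$ for $k > 2$ | Dies down in a fashion dominated by exponential decay |
| First-order autoregressive: $y_t = \mu + \phi_1 y_{t-1} + \varepsilon_t$. Notation: AR(1) | Dies down in an exponential fashion, specifically $\rho_k = (\phi_1)^k$ for $k \ge 1$ | Cuts off after lag 1 |
| Second-order autoregressive: $y_t = \mu + \phi_1 y_{t-1} + \phi_2 y_{t-2} + \varepsilon_t$. Notation: AR(2) | Dies down according to damped exponentials and/or damped sine waves, specifically $\rho_1 = \dfrac{\phi_1}{1 - \phi_2}$, $\rho_2 = \dfrac{\phi_1^2}{1 - \phi_2} + \phi_2$, $\rho_k = \phi_1 \rho_{k-1} + \phi_2 \rho_{k-2}$ for $k \ge 3$ | Cuts off after lag 2 |
| Mixed autoregressive-moving average of order (1,1): $y_t = \mu + \phi_1 y_{t-1} + \varepsilon_t - \theta_1 \varepsilon_{t-1}$. Notation: ARMA(1,1) | Dies down in a damped exponential fashion, specifically $\rho_1 = \dfrac{(1 - \phi_1 \theta_1)(\phi_1 - \theta_1)}{1 + \theta_1^2 - 2\theta_1 \phi_1}$, $\rho_k = \phi_1 \rho_{k-1}$ for $k \ge 2$ | Dies down in a fashion dominated by exponential decay |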
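
The theoretical autocorrelations in the summary can be evaluated numerically to see the "cuts off" and "dies down" patterns directly. The Python sketch below codes the MA(1), AR(1), AR(2), and ARMA(1,1) formulas using the sign convention of this handout (MA terms enter with a minus sign). The function names and parameter values are hypothetical choices for illustration.

```python
def acf_ma1(theta1, max_lag):
    """MA(1): rho_1 = -theta1 / (1 + theta1^2), rho_k = 0 for k >= 2."""
    rho = [1.0] + [0.0] * max_lag
    if max_lag >= 1:
        rho[1] = -theta1 / (1 + theta1 ** 2)
    return rho


def acf_ar1(phi1, max_lag):
    """AR(1): rho_k = phi1^k."""
    return [phi1 ** k for k in range(max_lag + 1)]


def acf_ar2(phi1, phi2, max_lag):
    """AR(2): rho_1 = phi1 / (1 - phi2), then rho_k = phi1*rho_{k-1} + phi2*rho_{k-2}."""
    rho = [1.0, phi1 / (1 - phi2)]
    for k in range(2, max_lag + 1):
        rho.append(phi1 * rho[k - 1] + phi2 * rho[k - 2])
    return rho[:max_lag + 1]


def acf_arma11(phi1, theta1, max_lag):
    """ARMA(1,1): closed form for rho_1, then rho_k = phi1 * rho_{k-1} for k >= 2."""
    rho1 = (1 - phi1 * theta1) * (phi1 - theta1) / (1 + theta1 ** 2 - 2 * theta1 * phi1)
    rho = [1.0, rho1]
    for k in range(2, max_lag + 1):
        rho.append(phi1 * rho[k - 1])
    return rho[:max_lag + 1]


# Illustrative parameter values only.
print(acf_ma1(0.5, 3))                              # [1.0, -0.4, 0.0, 0.0]
print(acf_ar1(0.5, 3))                              # [1.0, 0.5, 0.25, 0.125]
print([round(r, 4) for r in acf_ar2(0.5, 0.3, 4)])
print([round(r, 4) for r in acf_arma11(0.7, 0.4, 4)])
```

Setting `theta1 = 0` in `acf_arma11` reproduces the AR(1) values and setting `phi1 = 0` reproduces the MA(1) values, which is a quick consistency check on the formulas.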