
Time Series Analysis

Negar Koochakzadeh

Outline

Introduction:

Stationary / Non-stationary TS Data

Existing TSA Models

Example 1: International Airline Passenger

Example 2&3: Energy Load Prediction

Time Series Data Mining

AR (Auto-Regression)

MA (Moving Average)

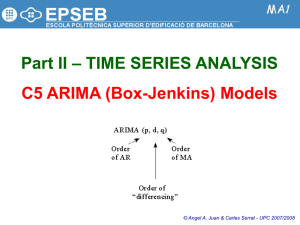

ARMA (Auto-Regression Moving Average)

ARIMA (Auto-Regression Integrated Moving Average)

SARIMA (Seasonal ARIMA)

Examples

Time Series Data

Time Series Classification (SVM)

Example

Example 4: Stock Market Analysis

Time Series Data

In many fields of study, data is collected from a system over time.

This sequence of observations generates a time series.

Examples:

Closing prices of the stock market

A country’s unemployment rate

Temperature readings of an industrial furnace

Sea level changes in coastal regions

Number of flu cases in a region

Inventory levels at a production site

Temporal Behaviour

Most physical processes do not change quickly, which often makes consecutive observations correlated.

Correlation between consecutive observations is called autocorrelation.

Most of the standard modeling methods based on the

assumption of independent observations can be

misleading.

We need to consider alternative methods that take into

account the serial dependence in the data.

Stationary Time Series Data

Stationary time series are characterized by having a

distribution that is independent of time shifts.

The mean and variance of these time series are constant.

If arbitrary snapshots of the time series we study exhibit

similar behaviour in central tendency and spread, we can

assume that the time series is indeed stationary.

Stationary or Non-Stationary?

In practice, there is no clear demarcation line between a

stationary and a non-stationary process.

Some methods to identify:

Visual inspection

Using intuition and knowledge about the process

Autocorrelation Function (ACF)

Variogram

Visual Inspection

A properly constructed graph of a time series can

dramatically improve the statistical analysis and accelerate

the discovery of the hidden information in the data.

“You can observe a lot by watching.” This is particularly

true with time series data analysis! [Yogi Berra, 1963]

Intuition and knowledge Inspection

Does it make sense...

for a tightly controlled chemical process to exhibit similar

behaviour in mean and variance in time?

to expect the stock market “to remain in equilibrium about a constant mean level”?

The selection of a stationary or non-stationary model

must often be made on the basis of not only the data but

also a physical understanding of the process.

Autocorrelation Function (ACF)

Autocorrelation is the cross-correlation of a time series with itself at lag k:

$ACF(k) = \mathrm{Corr}(Z_t, Z_{t-k})$

As a function of k, the ACF summarizes how correlated observations that are k lags apart are.

If the ACF does not dampen out then

the process is likely not stationary

(If a time series is non-stationary, the

ACF will not die out quickly)
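
As a rough illustration of this diagnostic, the sketch below computes a sample ACF with NumPy and compares white noise (stationary) with a random walk (non-stationary). The function name `sample_acf` and the simulated series are assumptions made for this example, not part of the original slides.

```python
import numpy as np

def sample_acf(z, max_lag):
    """Sample autocorrelation r_k for k = 0..max_lag."""
    z = np.asarray(z, dtype=float)
    d = z - z.mean()                      # deviations from the sample mean
    denom = np.sum(d * d)
    return np.array([np.sum(d[k:] * d[:len(d) - k]) / denom
                     for k in range(max_lag + 1)])

rng = np.random.default_rng(0)
noise = rng.normal(size=500)              # stationary: white noise
walk = np.cumsum(noise)                   # non-stationary: random walk

acf_noise = sample_acf(noise, 20)
acf_walk = sample_acf(walk, 20)

print("white noise ACF, lags 1-3:", acf_noise[1:4].round(2))
print("random walk ACF, lags 1-3:", acf_walk[1:4].round(2))
```

For the white noise the values beyond lag 0 should stay close to zero, while for the random walk they should remain large over many lags.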

Variogram

The variogram $G_k$ measures the variance of differences k time units apart relative to the variance of the differences one time unit apart:

$G_k = \dfrac{V\{Z_{t+k} - Z_t\}}{V\{Z_{t+1} - Z_t\}}$

For a stationary process, $G_k$ plotted as a function of k will reach an asymptote. However, if the process is non-stationary, $G_k$ will increase monotonically.
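
A minimal sketch of the variogram, assuming sample variances are used in place of the theoretical ones. The helper `variogram` and the two simulated series are illustrative assumptions.

```python
import numpy as np

def variogram(z, max_lag):
    """Sample variogram G_k for k = 1..max_lag."""
    z = np.asarray(z, dtype=float)
    v1 = np.var(z[1:] - z[:-1])           # variance of lag-1 differences
    return np.array([np.var(z[k:] - z[:-k]) / v1
                     for k in range(1, max_lag + 1)])

rng = np.random.default_rng(1)
noise = rng.normal(size=2000)             # stationary series
walk = np.cumsum(noise)                   # non-stationary series

g_noise = variogram(noise, 20)
g_walk = variogram(walk, 20)

print("stationary     G_1, G_10, G_20:", g_noise[[0, 9, 19]].round(2))
print("non-stationary G_1, G_10, G_20:", g_walk[[0, 9, 19]].round(2))
```

For the white noise, $G_k$ should stay near 1 at every lag, whereas for the random walk it should grow roughly in proportion to k.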

Modeling and Prediction

“If we wish to make predictions, then clearly we must assume

that something does not vary with time.” [Brockwell and

Davis, 2002]

Let’s try to predict and build a model for our time series

process based on:

Serial Dependency

Leading Indicators

Disturbance

True disturbances caused by unknown and/or uncontrollable

factors that have direct impact on the process.

It is impossible to come up with a comprehensive

deterministic model to account for all these possible

disturbances, since by definition they are unknown.

In these cases, a probabilistic or stochastic model will be more

appropriate to describe the behaviour of the process.

Notations

$\nabla Z_t = Z_t - Z_{t-1}$

$\nabla^2 Z_t = \nabla(\nabla Z_t) = Z_t - 2Z_{t-1} + Z_{t-2}$

$\nabla_s Z_t = Z_t - Z_{t-s}$

$\tilde{Z}_t = Z_t - \mu$

$\hat{Z}_{t-s}(k)$ = forecast of $Z_{t-s+k}$ made at time $t-s$

Backshift Operator

$B Z_t = Z_{t-1}$

$\nabla Z_t = (1 - B) Z_t$

$\nabla(\nabla Z_t) = (1 - B)^2 Z_t$

$\nabla_s Z_t = (1 - B^s) Z_t$
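
The difference operators can be checked on a small array. This is only a sketch: the helper `diff` and the sample values are made up for illustration.

```python
import numpy as np

def diff(z, lag=1):
    """Return z_t - z_{t-lag}; lag=1 is the regular difference,
    lag=s is the seasonal difference."""
    z = np.asarray(z, dtype=float)
    return z[lag:] - z[:-lag]

z = np.array([3.0, 5.0, 8.0, 12.0, 17.0, 23.0])

d1 = diff(z)                               # first difference
d2 = diff(diff(z))                         # second difference, applied twice
d2_direct = z[2:] - 2 * z[1:-1] + z[:-2]   # Z_t - 2 Z_{t-1} + Z_{t-2}
d_s3 = diff(z, lag=3)                      # seasonal difference with s = 3

print(d1)         # [2. 3. 4. 5. 6.]
print(d2)         # [1. 1. 1. 1.]
print(d2_direct)  # [1. 1. 1. 1.]
print(d_s3)       # [ 9. 12. 15.]
```

Note that differencing twice and the seasonal difference with s = 2 are different operations: the first uses the weights (1, -2, 1), the second only subtracts the value two steps back.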

Auto-Regressive Models

AR(p)

$Z_t = \varphi_1 Z_{t-1} + \dots + \varphi_p Z_{t-p} + a_t$

where $a_t$ is an error term (called white noise) assumed to be uncorrelated with zero mean and constant variance.

The random error $a_t$ cannot be observed. Instead, we estimate it by using the one-step-ahead forecast error

$\hat{a}_t = Z_t - \hat{Z}_{t-1}(1)$

where $\hat{Z}_{t-1}(1)$ is the forecast of $Z_t$ made at time $t-1$.

The regression coefficients $\varphi_i$, i = 1, ..., p, are parameters to be estimated from the data.
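
One simple way to estimate the coefficients is ordinary least squares on lagged values. The sketch below assumes this approach (statistical packages often use maximum likelihood instead); `fit_ar` and the simulated AR(2) series are illustrative assumptions.

```python
import numpy as np

def fit_ar(z, p):
    """Least-squares estimate of AR(p) coefficients on the mean-centred series.

    Returns the coefficients and the one-step-ahead forecast errors."""
    z = np.asarray(z, dtype=float)
    zc = z - z.mean()
    n = len(zc)
    # Column i holds z_{t-i} for t = p..n-1
    X = np.column_stack([zc[p - i: n - i] for i in range(1, p + 1)])
    y = zc[p:]
    phi, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ phi                   # estimates of a_t
    return phi, resid

# Simulate an AR(2) process with assumed coefficients 0.6 and -0.3
rng = np.random.default_rng(2)
n = 3000
a = rng.normal(size=n)
z = np.zeros(n)
for t in range(2, n):
    z[t] = 0.6 * z[t - 1] - 0.3 * z[t - 2] + a[t]

phi_hat, resid = fit_ar(z, 2)
print("estimated coefficients:", phi_hat.round(2))
```

The residuals returned by `fit_ar` play the role of the one-step-ahead forecast errors described above.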

Moving Average

Current and previous disturbances affect the value.

We have a sequence of random shocks bombarding the

system and not just a single shock.

MA(q)

$Z_t = a_t - \theta_1 a_{t-1} - \dots - \theta_q a_{t-q}$

where the $a_t$ are uncorrelated random shocks with zero mean and constant variance.

The coefficients $\theta_i$, i = 1, ..., q, are parameters to be determined from the data.
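
To see how a sequence of shocks produces short-lived correlation, the sketch below simulates an MA(1) process with an assumed coefficient of 0.6 and compares its sample lag-1 correlation with the theoretical value $-\theta_1 / (1 + \theta_1^2)$. The helper names are illustrative.

```python
import numpy as np

def simulate_ma(theta, n, rng):
    """Simulate Z_t = a_t - theta_1 a_{t-1} - ... - theta_q a_{t-q}."""
    theta = np.asarray(theta, dtype=float)
    q = len(theta)
    a = rng.normal(size=n + q)            # random shocks
    z = a[q:].copy()
    for i, th in enumerate(theta, start=1):
        z -= th * a[q - i: n + q - i]     # subtract theta_i * a_{t-i}
    return z

def lag_corr(z, k):
    """Sample autocorrelation at lag k >= 1."""
    d = z - z.mean()
    return np.sum(d[k:] * d[:-k]) / np.sum(d * d)

theta1 = 0.6
rng = np.random.default_rng(3)
z = simulate_ma([theta1], 5000, rng)

r1, r2 = lag_corr(z, 1), lag_corr(z, 2)
rho1_theory = -theta1 / (1 + theta1 ** 2)
print("lag 1:", round(r1, 2), "theory:", round(rho1_theory, 2))
print("lag 2:", round(r2, 2), "theory: 0")
```

The correlation is present at lag 1 and vanishes at larger lags, because observations more than one step apart share no shocks.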

Auto-Regressive Moving Average

ARMA(p,q)

$Z_t = \varphi_1 Z_{t-1} + \dots + \varphi_p Z_{t-p} + a_t - \theta_1 a_{t-1} - \dots - \theta_q a_{t-q}$

Typical stationary time series models come in three

general classes, auto-regressive (AR) models, moving

average (MA) models, or a combination of the two

(ARMA).

Identifying appropriate Model

The ACF plays an extremely crucial role in the

identification of time series models

The identification of the particular model within ARMA

class of models is determined by looking at the ACF and

PACF.

Partial Autocorrelation Function (PACF)

Partial autocorrelation is the partial cross-correlation of a time series with itself at lag k.

Partial correlation is a conditional correlation:

It is the correlation between two variables under the assumption

that we know and take into account the values of some other set of

variables

It describes how $Z_t$ and $Z_{t-k}$ are correlated, taking into account how both $Z_t$ and $Z_{t-k}$ are related to $Z_{t-1}, Z_{t-2}, \dots, Z_{t-k+1}$.

The kth order PACF measures the correlation between $Z_t$ and $Z_{t-k}$ after adjustments have been made for the intermediate observations $Z_{t-1}, Z_{t-2}, \dots, Z_{t-k+1}$:

$PACF(k) = \mathrm{Corr}\left(Z_t - P_{t,k}(Z_t),\; Z_{t-k} - P_{t,k}(Z_{t-k})\right)$

where $P_{t,k}(x)$ denotes the projection of x onto the space spanned by $Z_{t-1}, Z_{t-2}, \dots, Z_{t-k+1}$.
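
A common way to compute the sample PACF is to fit AR(k) models of increasing order and keep the last coefficient of each fit, which corresponds to the lag-k partial autocorrelation. The sketch below assumes least-squares fitting; `sample_pacf` and the simulated AR(1) series are illustrative.

```python
import numpy as np

def sample_pacf(z, max_lag):
    """Sample PACF for lags 1..max_lag via successive AR(k) regressions."""
    z = np.asarray(z, dtype=float)
    zc = z - z.mean()
    n = len(zc)
    pacf = []
    for k in range(1, max_lag + 1):
        # Regress z_t on z_{t-1}, ..., z_{t-k}
        X = np.column_stack([zc[k - i: n - i] for i in range(1, k + 1)])
        y = zc[k:]
        coef, *_ = np.linalg.lstsq(X, y, rcond=None)
        pacf.append(coef[-1])             # coefficient on z_{t-k}
    return np.array(pacf)

# AR(1) with an assumed coefficient of 0.7
rng = np.random.default_rng(4)
n = 3000
a = rng.normal(size=n)
z = np.zeros(n)
for t in range(1, n):
    z[t] = 0.7 * z[t - 1] + a[t]

pacf = sample_pacf(z, 6)
print(pacf.round(2))
```

For this AR(1) series, the PACF should be close to 0.7 at lag 1 and close to zero afterwards, which is the cut-off pattern used for identifying AR models.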

ARMA Model identification from ACF and PACF

| Model | ACF | PACF |
|---|---|---|
| AR(p) | Infinite damped exponentials and/or damped sine waves; tails off | Finite; cuts off after p lags |
| MA(q) | Finite; cuts off after q lags | Infinite damped exponentials and/or damped sine waves; tails off |
| ARMA(p, q) | Infinite damped exponentials and/or damped sine waves; tails off | Infinite damped exponentials and/or damped sine waves; tails off |

Source: Adapted from BJR

Examples

Models for Non-Stationary Data

Standard autoregressive moving average (ARMA) time

series models apply only to stationary time series.

The assumption that a time series is stationary is quite unrealistic. (Stationarity is not natural!)

For a system to exhibit stationary behaviour, it has to be tightly controlled and maintained in time.

Otherwise, systems will tend to drift away from stationarity.

Converting Non-Stationary Data to Stationary

More realistic is to claim that the changes to a process, or

the first difference, form a stationary process.

And if that is not realistic, we may try to see if the changes of the changes, the second difference, form a stationary process.

If that is the case, we can then model the changes, make

forecasts about the future values of these changes, and

from the model of the changes build models and create

forecasts of the original non-stationary time series.

In practice, we seldom need to go beyond second order

differencing.

Auto Regressive Integrated Moving Average

(ARIMA)

In the case of non-stationary data, it is appropriate to difference the data before fitting the (stationary) ARMA model to the differenced data $w_t = \nabla Z_t = Z_t - Z_{t-1}$.

Because the inverse operation of differencing is summing or integrating, an ARMA model applied to data differenced d times is called an autoregressive integrated moving average process, ARIMA(p, d, q).

$w_t = \nabla^d Z_t$

In practice, the orders p, d, and q are seldom higher than

2.
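
The "integrated" part can be illustrated with d = 1: difference the series, forecast the differences, then sum the forecasts back onto the last observed value. In this sketch the forecast of the differences is simply their mean, a deliberately naive stand-in for a fitted ARMA model; the data values are made up.

```python
import numpy as np

z = np.array([10.0, 12.0, 15.0, 19.0, 24.0])

# Differencing: w_t = Z_t - Z_{t-1}
w = np.diff(z)                                        # [2. 3. 4. 5.]

# Integrating (cumulative summing) recovers the original series
z_rebuilt = np.concatenate(([z[0]], z[0] + np.cumsum(w)))

# Naive placeholder forecast of the next three differences
w_forecast = np.full(3, w.mean())                     # [3.5 3.5 3.5]

# Forecasts of the original series: last value plus summed changes
z_forecast = z[-1] + np.cumsum(w_forecast)            # [27.5 31.  34.5]

print(z_rebuilt)
print(z_forecast)
```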

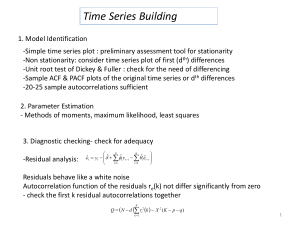

Stages of the time series model building process using ARIMA

1. Consider a general ARIMA model.

2. Identify the appropriate degree of differencing, if needed.

3. Using the ACF and PACF, find a tentative model.

4. Estimate the parameters of the model using appropriate software.

5. Perform the residual analysis. Is the model adequate?

6. Start forecasting.

Model Evaluation

Once a model has been fitted to the data, we proceed to conduct a number of diagnostic checks.

If the model fits well, the residuals should essentially behave like white noise.

In other words, the residuals should be uncorrelated with constant variance.

Standard checks are to compute the ACF and PACF of the residuals.

If they fall within the confidence interval, there is no indication that the model fits poorly.
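
A minimal sketch of this residual check, assuming the usual approximate 95% limits of $\pm 2/\sqrt{n}$ for the ACF of white noise. The function `residual_acf_check` and both test series are illustrative assumptions.

```python
import numpy as np

def residual_acf_check(resid, max_lag=20):
    """Return residual ACF (lags 1..max_lag), the approximate 95% limit,
    and the list of lags whose autocorrelation exceeds that limit."""
    resid = np.asarray(resid, dtype=float)
    n = len(resid)
    d = resid - resid.mean()
    denom = np.sum(d * d)
    acf = np.array([np.sum(d[k:] * d[:-k]) / denom
                    for k in range(1, max_lag + 1)])
    limit = 2.0 / np.sqrt(n)
    flagged = [k for k, r in enumerate(acf, start=1) if abs(r) > limit]
    return acf, limit, flagged

rng = np.random.default_rng(5)
white = rng.normal(size=1000)             # what good residuals look like

# Residuals that still contain structure (AR(1) with coefficient 0.8)
correlated = np.zeros(1000)
for t in range(1, 1000):
    correlated[t] = 0.8 * correlated[t - 1] + white[t]

_, limit, flagged_white = residual_acf_check(white)
_, _, flagged_corr = residual_acf_check(correlated)

print("limit:", round(limit, 3))
print("lags flagged for white residuals:", flagged_white)
print("lags flagged for correlated residuals:", flagged_corr)
```

With 20 lags tested, about one lag may exceed the limits purely by chance even for a good model, so an occasional isolated spike is not by itself an alarm.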

Exponentially Weighted Moving Average

Special case of ARIMA model: EWMA

$Z_t = (1 - \theta)(Z_{t-1} + \theta Z_{t-2} + \theta^2 Z_{t-3} + \dots) + a_t$

$|\theta| < 1$

Unlike a regular average that assigns equal weight to all observations, an EWMA has a relatively short memory and assigns decreasing weights to past observations.

It makes practical sense that a forecast should be a weighted average that assigns the most weight to the most recent observation, somewhat less weight to the second most recent observation, and so on.
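
The weighted sum above can be computed recursively: each new forecast is $(1-\theta)$ times the latest observation plus $\theta$ times the previous forecast. The sketch below assumes the first forecast is initialised to the first observation; `ewma_forecasts` and the data are illustrative.

```python
import numpy as np

def ewma_forecasts(z, theta):
    """One-step-ahead EWMA forecasts.

    f[t] is the forecast of z[t+1] made at time t, using
    f[t] = (1 - theta) * z[t] + theta * f[t-1], with f[0] = z[0]."""
    z = np.asarray(z, dtype=float)
    f = np.empty_like(z)
    f[0] = z[0]
    for t in range(1, len(z)):
        f[t] = (1 - theta) * z[t] + theta * f[t - 1]
    return f

z = np.array([10.0, 12.0, 11.0, 13.0])
theta = 0.5
f = ewma_forecasts(z, theta)
print(f)   # [10. 11. 11. 12.]

# Same final forecast written as an explicit weighted sum
explicit = (1 - theta) * (z[3] + theta * z[2] + theta**2 * z[1]) + theta**3 * z[0]
print(explicit)   # 12.0
```

A value of $\theta$ close to 1 gives a long memory (slowly decaying weights), while a value close to 0 makes the forecast follow the latest observation.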

Seasonal Models

For ARIMA models, the serial dependence of the current

observation to the previous observations was often

strongest for the immediate past and followed a decaying

pattern as we move further back in time.

For some systems, this dependence shows a repeating,

cyclic behaviour.

This cyclic pattern, more commonly called a seasonal pattern, can be used effectively to further improve the forecasting performance.

The ARIMA models are flexible enough to allow for

modeling both seasonal and non-seasonal dependence.

Example 1: International Airline Passengers

Trend and Seasonal Relationship

Two relationships are going on simultaneously:

Between observations for successive months within the same year

Between observations for the same month in successive years.

Therefore, we essentially need to build two time series models, and then combine the two.

If the season is s periods long (in this example, s = 12 months), then observations that are s time intervals apart are alike.

Pre-Processing

Log Transformation

Apply Differencing on Seasonal Data

For seasonal data, we may need to use not only a regular difference $\nabla Z_t$ but also a seasonal difference $\nabla_s Z_t$.

Sometimes, we may even need both (e.g., $\nabla \nabla_s Z_t$) to obtain an ACF that dies out sufficiently quickly.
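
The effect of these pre-processing steps can be sketched on a synthetic monthly series with exponential growth and a multiplicative seasonal pattern. This is an assumed stand-in, not the airline passenger data. The log transform turns the multiplicative structure into an additive one, the seasonal difference removes the repeating pattern, and the regular difference removes what is left of the trend.

```python
import numpy as np

s = 12                                    # season length in months
t = np.arange(5 * s)                      # five years of monthly data

# Synthetic series: exponential trend times a fixed monthly pattern
pattern = 1.0 + 0.3 * np.sin(2 * np.pi * np.arange(s) / s)
z = 100.0 * np.exp(0.01 * t) * pattern[t % s]

log_z = np.log(z)                         # log transformation
d_seasonal = log_z[s:] - log_z[:-s]       # seasonal difference (lag 12)
d_both = np.diff(d_seasonal)              # regular difference on top of it

print(d_seasonal[:3].round(4))            # constant yearly growth, 0.12
print(np.abs(d_both).max().round(10))     # essentially zero
```

On real data the doubly differenced series is of course not exactly zero; the remaining variation is what the seasonal ARMA model is fitted to.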

Investigate ACFs

Only the last one (combination of regular difference and

seasonal difference) is stationary:

Model Identification

Identifying stationary seasonal models is a modification of the procedure used for regular ARMA time series models, where the patterns of the sample ACF and PACF provide guidance.

First, look for similarities that are 12 lags apart.

The ACF seems to cut off after the first seasonal lag (k = 12).

This is a sign of a moving average model applied to the 12-month seasonal pattern.

Second, look for patterns between successive months.

The ACF seems to cut off after the first lag.

This suggests a first order MA term in the regular model.

(Figure: ACF and PACF plots)

Model Evaluation

ACF of the residuals after fitting a first order SMA model

to ∇∇12 𝑍𝑡 :

We see that the ACF shows a significant negative spike at

lag 1, indicating that we need an additional regular moving

average term

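ARIMA(p,d,q) × (P,D,Q)$_{12}$

$W_t = b_t - \Theta_1 b_{t-12}$

$b_t = a_t - \theta_1 a_{t-1}$

$\nabla \nabla_{12} Z_t = a_t - \theta_1 a_{t-1} - \Theta_1 (a_{t-12} - \theta_1 a_{t-13})$

$Z_t - Z_{t-1} - Z_{t-12} + Z_{t-13} = a_t - \theta_1 a_{t-1} - \Theta_1 a_{t-12} + \Theta_1 \theta_1 a_{t-13}$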
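
The expansion of the combined moving average part can be verified by multiplying the two backshift polynomials $(1 - \theta_1 B)$ and $(1 - \Theta_1 B^{12})$. In the sketch below the coefficient values 0.4 and 0.6 are hypothetical, chosen only to make the result easy to read.

```python
import numpy as np

theta1 = 0.4    # hypothetical regular MA coefficient
Theta1 = 0.6    # hypothetical seasonal MA coefficient

# Polynomial coefficients in powers of B, index = lag
regular = np.array([1.0, -theta1])        # 1 - theta1 * B
seasonal = np.zeros(13)
seasonal[0] = 1.0
seasonal[12] = -Theta1                    # 1 - Theta1 * B^12

# Multiplying polynomials = convolving their coefficient vectors
combined = np.convolve(regular, seasonal)

for lag in (0, 1, 12, 13):
    print(f"coefficient on a_(t-{lag}): {combined[lag]:+.2f}")
```

The coefficient at lag 13 is the product of the two coefficients with a positive sign, while all lags between 2 and 11 have zero weight.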

Example 2: Energy Peak Load Prediction

The hourly peak load follows a daily periodic pattern (s = 24 hours).

Convert the peak load values into $\nabla_{24} Z_t$ and then apply ARMA.

(Figure: ACF and PACF plots)

Example 3: Energy Load Prediction

Daily, weekly, and monthly periodic patterns

Exogenous variables (temperature)

The authors proposed applying Periodic Auto-Regression (PAR).

* An auto-regression is periodic when the parameters are allowed to vary across seasons.
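
To make the definition concrete, the sketch below fits a periodic AR(1) in which each season has its own coefficient. It only illustrates the idea of season-dependent parameters and is not the model template of this example (no intercepts, dummy variables, or temperature term). The function name, the four-season setup, and the coefficient values are assumptions.

```python
import numpy as np

def fit_periodic_ar1(z, n_seasons):
    """Estimate one AR(1) coefficient per season by least squares.

    Observation t belongs to season t % n_seasons."""
    z = np.asarray(z, dtype=float)
    t = np.arange(1, len(z))
    season = t % n_seasons
    phi = np.empty(n_seasons)
    for s in range(n_seasons):
        idx = t[season == s]              # time points in season s
        phi[s] = np.sum(z[idx] * z[idx - 1]) / np.sum(z[idx - 1] ** 2)
    return phi

# Simulate a periodic AR(1) with assumed season-specific coefficients
true_phi = np.array([0.2, 0.8, -0.5, 0.5])
rng = np.random.default_rng(6)
n = 8000
a = rng.normal(size=n)
z = np.zeros(n)
for t in range(1, n):
    z[t] = true_phi[t % 4] * z[t - 1] + a[t]

phi_hat = fit_periodic_ar1(z, 4)
print("true:     ", true_phi)
print("estimated:", phi_hat.round(2))
```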

Example 3 (cont’d)

Proposed model template, with terms for:

Seasonally varying intercept

Dummy variable for weekly seasonality

Dummy variable for monthly seasonality

Exogenous variable for temperature sensitivity

Time Series Data Mining

Use the serial dependency of the forecasting variable to build the training set.

Leading indicators might exhibit behaviour similar to that of the forecasting variable.

The important task is to find out whether there exists a lagged relationship between the indicators and the predicted variable.

If such a relationship exists, then from the current and past behaviour of the leading indicators, it may be possible to determine how the predicted variable will behave in the near future.
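
One way to turn serial dependency and leading indicators into a training set is to use the most recent values of both as features and the next value of the forecasting variable as the label. The sketch below assumes a single indicator and a fixed number of lags; `make_lagged_dataset` is a hypothetical helper.

```python
import numpy as np

def make_lagged_dataset(target, indicator, n_lags):
    """Build (features, labels) from past values.

    Each feature row holds the previous n_lags values of the target
    followed by the previous n_lags values of the leading indicator;
    the label is the current value of the target."""
    target = np.asarray(target, dtype=float)
    indicator = np.asarray(indicator, dtype=float)
    rows, labels = [], []
    for t in range(n_lags, len(target)):
        past_target = target[t - n_lags:t]
        past_indicator = indicator[t - n_lags:t]
        rows.append(np.concatenate([past_target, past_indicator]))
        labels.append(target[t])
    return np.array(rows), np.array(labels)

target = np.arange(10.0)                  # toy forecasting variable
indicator = 100.0 + np.arange(10.0)       # toy leading indicator

X, y = make_lagged_dataset(target, indicator, n_lags=3)
print(X.shape, y.shape)   # (7, 6) (7,)
print(X[0], y[0])         # [  0.   1.   2. 100. 101. 102.] 3.0
```

The resulting arrays can be passed to any supervised learner; the number of lags is a modelling choice that would normally be guided by the ACF or by validation.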

Time Series SVM

Optimization problem in SVM:

Error in SVM:

Error in Modified SVM:

Example 4: Stock Market Analysis

Portfolio optimization is the decision process of asset

selection and weighting, such that the collection of assets

satisfies an investor’s objectives

Serial dependency or lagged relationship between stock performance and financial indicators from the companies.

| Bloomberg Mnemonic | Description |
|---|---|
| PROF_MARGIN | Indicates how much out of every dollar of sales the company actually keeps in earnings: Net Income / Revenue |
| RETURN_ON_ASSET | Quantifies the company's success in earning a profit with respect to its total assets: Net Income / Total Assets |
| RETURN_ON_CAP | Quantifies the company's success in earning a profit with respect to its capital: Net Income / (Total Assets - Current Liabilities) |
| ROA_TO_ROE | Quantifies the ratio of Return On Asset to Return On Equity (ROE: Net Income as a percentage of shareholders' equity): Shareholders' Equity / Total Assets |
| ROA_BASED_ON_BOTTOM_EPS | Indicates Return On Asset calculated based on the last line of the company's income statement. This reflects the fact that all expenses have already been taken out of revenues, and there is nothing left to subtract. |
| REVENUE_PER_SH | Indicates Revenue with respect to each share price. Revenue is the income that a company receives from its normal business activities, usually from the sale of goods and services to customers. |

Stock Ranking

Learn the relationship between stocks' current features and their future rank score (a lagged relationship).

This is done by applying a modified version of the SVM Rank algorithm for time series, based on an exponentially weighted error.

(Figure: timeline with time points t0, t1, t2, ..., tk, tc and intervals Δts and Δtr. At each time point of the Training Set, a table lists Stock Name (S1, S2, S3), features f1 and f2, future ROI, and Target Rank Score. The Testing Set table lists Stock Name, f1, f2, Target Rank Score, and Predicted Rank Score.)

References

[1] Søren Bisgaard and M. Kulahci, Time Series Analysis and Forecasting by Example. John Wiley & Sons, Inc., 2011.

[2] Rayman Preet Singh, Peter Xiang Gao, and Daniel J. Lizotte, "On Hourly Home Peak Load Prediction," in IEEE SmartGridComm, 2012.

[3] Marcelo Espinoza, Caroline Joye, Ronnie Belmans, and Bart De Moor, "Short-Term Load Forecasting, Profile Identification, and Customer Segmentation: A Methodology Based on Periodic Time Series," Power Systems, vol. 20, pp. 1622-1630, 2005.

[4] F. E. H. Tay and L. Cao, "Modified support vector machines in financial time series forecasting," Neurocomputing, vol. 48, pp. 847-861, 2002.

Questions?