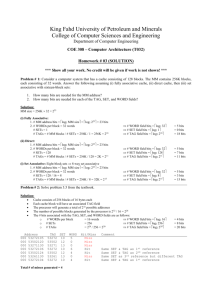

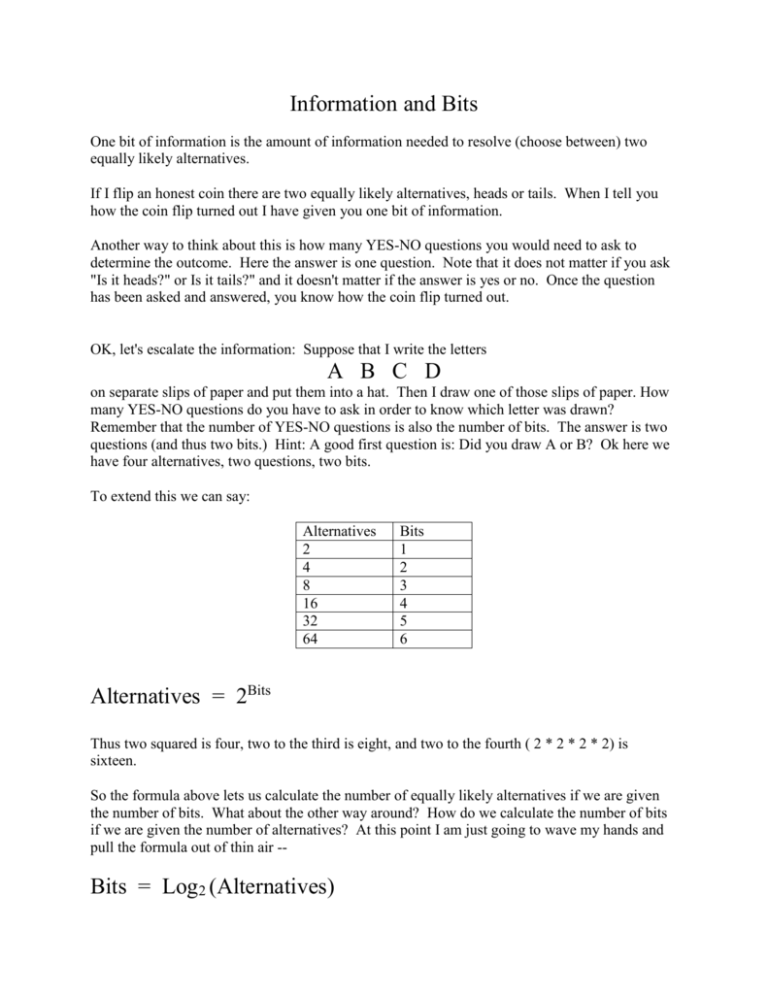

Information and Bits

One bit of information is the amount of information needed to resolve (choose between) two equally likely alternatives. If I flip an honest coin there are two equally likely alternatives, heads or tails. When I tell you how the coin flip turned out, I have given you one bit of information.

Another way to think about this is how many YES-NO questions you would need to ask to determine the outcome. Here the answer is one question. Note that it does not matter whether you ask "Is it heads?" or "Is it tails?" and it doesn't matter whether the answer is yes or no. Once the question has been asked and answered, you know how the coin flip turned out.

OK, let's escalate the information: Suppose that I write the letters A B C D on separate slips of paper and put them into a hat. Then I draw one of those slips of paper. How many YES-NO questions do you have to ask in order to know which letter was drawn? Remember that the number of YES-NO questions is also the number of bits. The answer is two questions (and thus two bits). Hint: A good first question is "Did you draw A or B?" Whatever the answer, only two letters remain, and a second question settles it.

OK, here we have four alternatives, two questions, two bits. To extend this we can say:

| Alternatives | 2 | 4 | 8 | 16 | 32 | 64 |
|---|---|---|---|---|---|---|
| Bits | 1 | 2 | 3 | 4 | 5 | 6 |

$$\text{Alternatives} = 2^{\text{Bits}}$$

Thus two squared is four, two to the third is eight, and two to the fourth (2 * 2 * 2 * 2) is sixteen. So the formula above lets us calculate the number of equally likely alternatives if we are given the number of bits.

What about the other way around? How do we calculate the number of bits if we are given the number of alternatives? At this point I am just going to wave my hands and pull the formula out of thin air:

$$\text{Bits} = \log_2(\text{Alternatives})$$

At this point you either nod your head and say, "Logarithms, yeah, I know logarithms" or alternatively, "WTF?"
In either case, you are not going to calculate logarithms by hand, so let's just say that you can get the result from Excel by using:

=log(alternatives, 2)

Here is a sample:

| Alternatives | Bits |
|---|---|
| 1 | 0 |
| 2 | 1 |
| 3 | 1.584963 |
| 4 | 2 |
| 5 | 2.321928 |
| 6 | 2.584963 |
| 7 | 2.807355 |
| 8 | 3 |
| 9 | 3.169925 |
| 10 | 3.321928 |
| 11 | 3.459432 |
| 12 | 3.584963 |
| 13 | 3.70044 |
| 14 | 3.807355 |
| 15 | 3.906891 |
| 16 | 4 |

For example, if we think about the roll of one die, the possible outcomes are the numbers one through six. Looking at the table above we see that one roll with six equally likely outcomes gives about 2.58 bits of information.

Up to this point I have been talking about equally likely alternatives (the flip of an honest coin), but we can also deal with the case of unequal probabilities. Let's start with the simple case of a dishonest coin. Suppose that I have a coin that, when flipped, comes down heads 90% of the time and tails 10% of the time. Again, I wave my hands and produce the formula:

$$\text{Bits} = -\left(0.9 \log_2(0.9) + 0.1 \log_2(0.1)\right) \approx 0.47$$

What this formula does is take the probability of each possible outcome, multiply it by the log2 of that probability, add up the results over all possible outcomes, and flip the sign. (The log2 of a probability below one is negative, so the minus sign makes the answer positive.) So the formula is:

$$H = -\sum_i p_i \log_2(p_i)$$

where there is a probability $p_i$ for every possible outcome.

Wait! How come the answer is H and not Bits? You don't want to know, but the fact is that the measure of information can also be viewed as a measure of uncertainty or randomness. These are things that other fields besides Information Science talk about, and so there is more than one notation. The H stands for "entropy." Why? I'm going to go get a beer rather than discuss this (good advice for you too).
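If you would rather not use Excel, the same two formulas can be sketched in a few lines of Python. This is only an illustration: the function names `bits_for_alternatives` and `entropy_bits` are made up for this example and do not come from any library. Outcomes with zero probability are skipped, on the usual convention that they contribute nothing to the sum.

```python
import math


def bits_for_alternatives(n):
    """Bits needed to choose among n equally likely alternatives."""
    return math.log2(n)


def entropy_bits(probabilities):
    """H = -sum(p * log2(p)) over all outcomes, in bits.

    Zero-probability outcomes are skipped (assumed convention),
    since log2(0) is undefined.
    """
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


print(bits_for_alternatives(6))   # one die: about 2.585
print(entropy_bits([0.5, 0.5]))   # honest coin: 1.0
print(entropy_bits([0.9, 0.1]))   # dishonest coin: about 0.469
```

Note that the honest coin is just a special case of the second formula: two outcomes at probability 0.5 each give exactly one bit.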
Rolling a Pair of Dice

| Sum | # of combinations | Probability $p$ | $p \log_2 p$ |
|---|---|---|---|
| 2 | 1 | 0.027777778 | -0.143609028 |
| 3 | 2 | 0.055555556 | -0.2316625 |
| 4 | 3 | 0.083333333 | -0.298746875 |
| 5 | 4 | 0.111111111 | -0.352213889 |
| 6 | 5 | 0.138888889 | -0.395555126 |
| 7 | 6 | 0.166666667 | -0.430827083 |
| 8 | 5 | 0.138888889 | -0.395555126 |
| 9 | 4 | 0.111111111 | -0.352213889 |
| 10 | 3 | 0.083333333 | -0.298746875 |
| 11 | 2 | 0.055555556 | -0.2316625 |
| 12 | 1 | 0.027777778 | -0.143609028 |

Total (negated sum of the last column): 3.274401919 bits
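The dice table can be rebuilt from scratch as a check. The sketch below assumes two fair six-sided dice, counts how many of the 36 combinations give each sum, and applies the entropy formula to the resulting probabilities. The variable names are chosen just for this example.

```python
import math
from collections import Counter

# Count how many of the 36 (die1, die2) combinations produce each sum.
combinations = Counter(a + b for a in range(1, 7) for b in range(1, 7))
total = sum(combinations.values())  # 36

# Apply H = -sum(p * log2(p)) to the probability of each sum.
dice_entropy = -sum(
    (count / total) * math.log2(count / total)
    for count in combinations.values()
)

for s in sorted(combinations):
    p = combinations[s] / total
    print(s, combinations[s], round(p, 9), round(p * math.log2(p), 9))

print(round(dice_entropy, 6))  # 3.274402
```

For comparison, knowing both dice individually would be worth log2(36), about 5.17 bits. The sum is worth less because different combinations (such as 1+6 and 3+4) collapse into the same total.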