Parameter Subset Coding Mode Decision

advertisement

Coding Efficiency and Quality

Improvement for MPEG Surround

Encoding

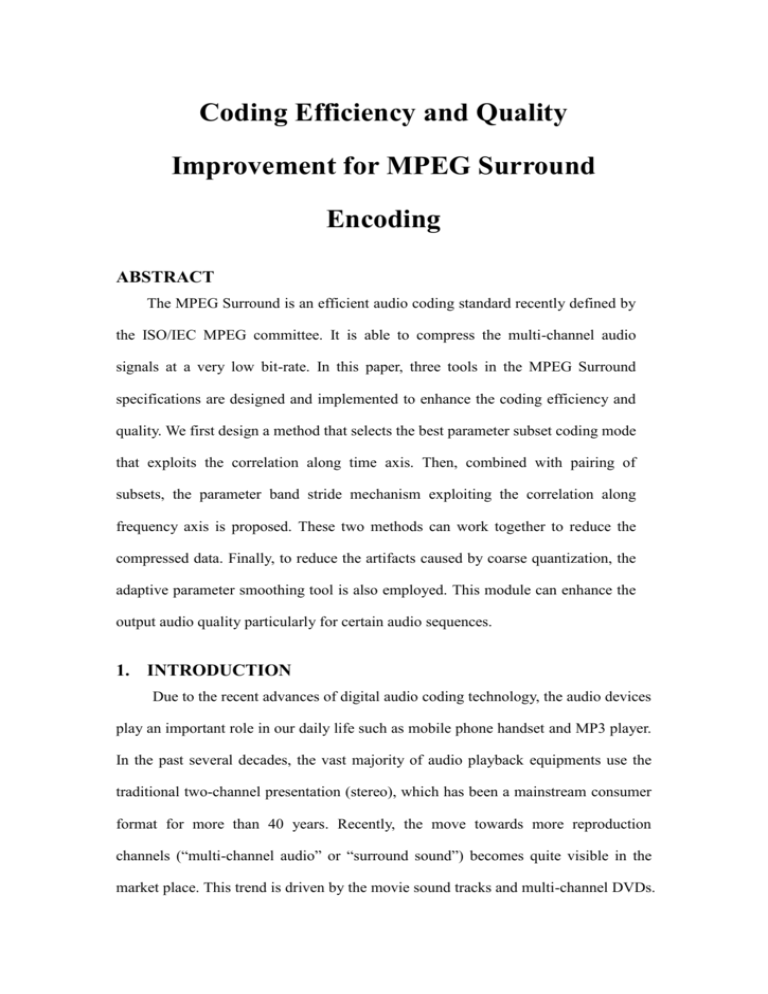

ABSTRACT

The MPEG Surround is an efficient audio coding standard recently defined by

the ISO/IEC MPEG committee. It is able to compress the multi-channel audio

signals at a very low bit-rate. In this paper, three tools in the MPEG Surround

specifications are designed and implemented to enhance the coding efficiency and

quality. We first design a method that selects the best parameter subset coding mode

that exploits the correlation along time axis. Then, combined with pairing of

subsets, the parameter band stride mechanism exploiting the correlation along

frequency axis is proposed. These two methods can work together to reduce the

compressed data. Finally, to reduce the artifacts caused by coarse quantization, the

adaptive parameter smoothing tool is also employed. This module can enhance the

output audio quality particularly for certain audio sequences.

1. INTRODUCTION

Due to the recent advances of digital audio coding technology, the audio devices

play an important role in our daily life such as mobile phone handset and MP3 player.

In the past several decades, the vast majority of audio playback equipments use the

traditional two-channel presentation (stereo), which has been a mainstream consumer

format for more than 40 years. Recently, the move towards more reproduction

channels (“multi-channel audio” or “surround sound”) becomes quite visible in the

market place. This trend is driven by the movie sound tracks and multi-channel DVDs.

However, the associated data increase in the total amount of bitrate as well as the

compatibility issues among various existing formats and the reproduction systems

bring new challenges. Therefore, it requires a high-quality, low-bitrate audio coding

mechanism that can serve both communities that uses the conventional stereo

equipment and that uses the next-generation multi-channel equipment.

Based on these observations, the ISO/MPEG Audio standardization group

started a new work item in 2004. The MPEG audio group has proposed many low

bitrate audio coding standards such as MPEG-1 layer III (MP3), MPEG-4 Advanced

Audio Coding (AAC) and MPEG-4 High-Efficiency AAC (HEAAC). The most recent

effort is to define a state of the art new standard, the “MPEG Surround”, which was

finalized in 2006. The specifications provide an extremely efficient method for coding

multi-channel sound by transmitting a compressed stereo (or even mono) audio signal

with low-rate side information. In this way, the backward compatibility is retained to

pervasive stereo playback systems, while the side information permits the

next-generation players to present a high-quality multi-channel surround experience.

This paper briefly describes the concepts of the MPEG Surround coding

schemes. Then, the processes of several key elements in the MPEG Surround encoder,

which are normative to the MPEG Surround specifications, will be introduced in

detail, including the methods and the experimental results. These added features,

which are used to reduce bitrate or enhance quality, were only partly implemented in

the reference encoding software [6]; hence, several new ideas are proposed to

accomplish the goals.

2. MPEG Surround

MPEG Surround is defined based on the concept of spatial audio coding (SAC)

[1]. Rather than coding the discrete audio input signals individually, the spatial audio

coding scheme decomposes the multi-channel audio signals into the mono or stereo

downmix signals plus a compact set of parameters that captures the spatial image of

the input signals. As can be seen in Figure 1, the SAC encoder performs a downmix

procedure on the input signals, while extracting the spatial parameters. These spatial

parameters can preserve the information lost in the process of downmix and be

represented in an extremely compact way. Besides, by reducing the multi-channel

audio signals to mono or stereo downmix signal, the SAC approach provides a quite

efficient representation for the multi-channel audio. After the encoding process, the

downmix signal and the parameters are transmitted to the SAC decoder. With both

kinds of information transmitted, high quality multi-channel audio signals can be

synthesized by the SAC decoder.

Figure 1 Principle of Spatial Audio Coding [5]

Figure 2 shows the basic structure of the MPEG Surround encoder. Each input

audio channel is firstly decomposed into frequency bands by means of a set of hybrid

QMF analysis filter banks (T/F Transform), the purpose of which is to closely mimic

the frequency resolution of the human auditory system. Then, the downmix procedure

and the spatial parameter extraction are executed. After the downmix procedure, the

downmix signals are transformed back to the time domain representation by means of

a set of synthesis filter banks (F/T Transform). To achieve a higher compression rate,

a conventional audio encoder can be further combined with the MPEG Surround

encoder to encode the downmix signals, which makes the MPEG Surround encoder

act as a pre-processing to the audio coder.

Figure 2 Overview of the MPEG Surround encoder [2]

3.

Parameter Subset Coding Mode Decision

This section describes a coding scheme of the MPEG Surround specifications

that is used to exploit correlation between neighboring data. A full-search method is

used here to choose appropriate coding configures to reduce data.

3.1. Parameter Subset Coding Mode

After all the parameters are calculated, they are going to be quantized and coded.

The MPEG Surround provides a method of reducing the number of parameter subsets

that require encoding, which is not implemented in the reference encoding software.

This method is practiced by assigning each subset a coding mode. There are totally

four modes available for selection, as can be seen in Table 1. The four modes

respectively describe how the decoder reconstructs parameters. The first mode is the

“Default” mode, which sets all parameter values to the default values specified in

Table 40 in clause 7 of ISO/IEC 23003-1. The second mode is the “Keep” mode,

which sets all values equal to the previous parameter subset. The third mode is the

“Interpolation” mode, which linearly interpolates values using the previous and next

subsets that are not using interpolation mode. The last mode is the “Lossless” mode,

which fetches parameters from the bitstream. No parameter data will be transmitted

for the first three data modes, while the “Lossless” mode requires transmission of

parameter values. The reference encoding software implements merely the “Lossless”

mode and thus all the parameter values are to be included in the bitstream.

Table 1 Parameter subset coding mode [3]

bsXXXdataMode

Meaning

0 (Default)

set to default parameter values

1 (Keep)

keep previous parameter values unchanged

2 (Interpolation)

interpolate parameter values

3 (Lossless)

read losslessly coded parameter values

3.2. Coding Mode Decision

To reduce the total bits to encode the parameter values, it is necessary to

increase the usage of the first three coding modes. Beside the “Default” mode, the

“Keep” and “Interpolation” modes instruct the decoder to retrieve data from the

previous subsets. The key of using these coding modes is to exploiting correlation

between subsets. Consequently, the purpose of the encoder is to compare parameter

values in the present subset with those in the previous subset (and the default values),

and then decide which coding mode is most suitable for the current subset. The flow

chart of the decision process is shown in Figure 3.

The flow chart shows the proposed procedure that decides the data mode of a

subset. First of all, the estimation errors of the first three modes are calculated. The

error of a specific mode i in a subset is defined as:

𝑠𝑡𝑜𝑝𝑏𝑎𝑛𝑑

𝑒𝑟𝑟(𝑖) =

∑

|𝑥𝑖 (𝑝𝑏) − 𝑥(𝑝𝑏)|,

𝑝𝑏=𝑠𝑡𝑎𝑟𝑡𝑏𝑎𝑛𝑑

where pb is the parameter band, x are the quantized values and 𝑥𝑖 are the values

reconstructed by the decoder. For example, for the “Keep” mode (mode 1), 𝑥1 are

equal to the values of previous parameter subset. After the errors of the first three

modes are calculated, the minimum of the three errors: err(m) with mode m is

selected and compared with a threshold. The reason of using a threshold is because

that only the “Lossless” mode leads to the error-free reconstruction of parameters, and

thus a certain amount of reconstruction error must be allowed if the increase number

of the first three modes is necessary. The allowable error is represented by the

threshold used here. If the threshold increases, the final bitrate becomes lower but

with a higher error. Therefore, there is a trade-off. The way we adjust the threshold is

to reduce the bitrate and make sure there is no obvious artifact being detectable. Thus,

if the minimum error err(m) is less than the threshold, then the error is considered

acceptable and mode m will be selected. Otherwise, the “Lossless” mode is assigned

to the subset and produces the error-free reconstruction at the cost of higher bitrate.

Figure 3 Flow chart of the coding mode decision module

3.3. Experimental Results

Since the purpose of this coding mode decision module is to reduce the

redundancy of neighboring subsets, bitrate variation is an indication of how this

module works. The bitrate reduction is the comparison between bitstream size with

and without using the coding mode module and can be interpreted as

(𝑠𝑖𝑧𝑒𝑜𝑟𝑖𝑔 −𝑠𝑖𝑧𝑒𝑐𝑜𝑑𝑖𝑛𝑔 )

𝑠𝑖𝑧𝑒𝑜𝑟𝑖𝑔

× 100% . Actually, this reduction percentage can be roughly

estimated according to the statistics of coding modes selected. Because only selecting

the “Lossless” mode requires extra data transmitted, the ratio of the other three modes

can be considered as the data reduction. The theoretical data reduction can be

expressed as:

𝑇ℎ𝑒𝑜𝑟𝑒𝑡𝑖𝑐𝑎𝑙 𝑑𝑎𝑡𝑎 𝑟𝑒𝑑𝑢𝑐𝑡𝑖𝑜𝑛(%) = (1 −

𝑠𝑢𝑏𝑠𝑒𝑡𝑙𝑜𝑠𝑠𝑙𝑒𝑠𝑠

) × 100%

𝑠𝑢𝑏𝑠𝑒𝑡𝑎𝑙𝑙

The comparisons between the experimental bitrate and the theoretical data reductions

are shown in Figure 4.

Figure 4 Comparisons between the experimental bitrate and the theoretical data

reductions of each 5 test sequences for (a) ps=1 (b) ps=2 and (c) ps=4

From the results above, we can observe the following two noticeable points: (1)

The theoretical predictions are higher than the experimental results. This is because

that the time differential coding can reduce the redundancy between the neighboring

subsets, and this module performs a similar function. Consequently, the gain provided

by the time differential coding decreases when this module is active. (2) When the

number of parameter sets gets higher with the same threshold, both the theoretical

data reduction and the experimental bitrate reduction rates decrease. The reason is

related to the data distribution. Table 2 Standard deviation of DT distributions shows

the standard deviations of time differential data. It can be found that most cases of

ps=4 have larger standard deviations than those of ps=1. According to the information

theory, a more centralized distribution has higher correlation and is more

compressible. Consequently, the bitrate reduction decreases as the number of

parameter sets increases.

Table 2 Standard deviation of DT distributions

Test sequence

4.

ICC

CLD

ps=1

ps=4

ps=1

ps=4

1

1.07

1.31

1.37

1.66

2

0.81

0.86

2.15

1.40

3

0.79

1.17

1.94

1.75

4

0.84

1.21

1.77

2.13

5

0.92

1.14

1.46

1.61

Parameter Band Stride Decision

4.1. Parameter Band Stride

The MPEG Surround adjusts frequency resolution of parameters by using

strides for parameter band grouping. After parameters in a parameter subset are

extracted, the number of parameters is equal to the number of parameter bands. Then,

the encoder can group parameters along the frequency axis. That is, the encoder

encodes only one parameter for every n successive parameters, and n is the parameter

band stride. The grouped parameters in one parameter band stride are termed as

parameter group. Four kinds of strides are supported by the MPEG Surround

specifications: 1(no parameter grouping), 2, 5 and 28. When the striding scheme is in

use, the frequency resolution (equal to the number of parameter groups) can be

dynamically adjusted, varying between 1 to the number of parameter bands, as shown

in Table 3. The relationship between the number of parameter groups (pg) and

𝑝𝑏

parameter bands (pb) is 𝑝𝑔 = ⌈𝑠𝑡𝑟𝑖𝑑𝑒⌉, where ⌈. ⌉ is the ceiling function defined by

⌈𝑥⌉ = 𝑚𝑖𝑛{𝑛 ∈ 𝑍|𝑛 ≤ 𝑥}. The key point of using parameter band striding is to exploit

correlation between parameters along the frequency axis. The purpose of the encoder

is to decide which stride shall be applied to each subset.

Table 3 The frequency resolution decided by the number of parameter bands and

strides[4]

Parameter

Parameter groups using different strides

bands

Stride 1

Stride 2

Stride 5

Stride 28

4

4

2

1

1

5

5

3

1

1

7

7

4

2

1

10

10

5

2

1

14

14

7

3

1

20

20

10

4

1

28

28

14

6

1

4.2. Parameter Band Stride Decision

The flow chart of our proposed algorithm is shown in Figure 5. The pairing of

parameter subsets is also considered together since the stride is common for a pair of

subset. The input to this flow is the parameter subsets of a frame with all parameters

quantized and coding modes decided. The first step is to check whether two

“successive” subsets are both “losslessly coded”. The condition of “successive” is

imposed because that the correlation between non-successive subsets is low, and there

would be no necessity to pair them together. Another condition “losslessly coded” is

used because that only subsets using “Lossless” mode need encoding. Under the

situation that these two conditions are satisfied, there are three possible outcomes, as

can be seen in the flow chart:

(1) Two successive subsets are encoded as a pair with the same stride (>1).

(2) Two successive subsets are encoded separately, using different strides (>1).

(3) Two successive subsets are encoded as a pair but the common stride is 1.

Theoretically the first case saves the most bits and the third one the least.

Consequently, this algorithm should be designed in such a way that the first case has a

top priority. If the current subset is losslessly coded but the next one is not, then the

current subset is encoded singly.

Yes

2 successive No

lossless subset?

coded singly

Yes common stride

for both subset?

Yes

pair and

stride>1

No

separate strides No

for the 2 subset?

not pair but

stride>1

pair with

stride=1

Figure 5 Flow chart of adaptive frequency resolution and pairing decision

4.3. Experimental Results

Since the purpose of the parameter band stride decision module is to reduce the

redundancy, the bitrate reduction is a measure to check how this module works.

Analogous to the previous module, the data reduction statistics of this module can be

estimated in a rough way. Since using strides reduces the number of data requiring

coding, the data reduction can be approximately estimated if we compare the new data

size with the lossless data size. The estimation can be expressed as:

𝑇ℎ𝑒𝑜𝑟𝑒𝑡𝑖𝑐𝑎𝑙 𝑑𝑎𝑡𝑎 𝑟𝑒𝑑𝑢𝑐𝑡𝑖𝑜𝑛(%)

𝑠𝑢𝑏𝑠𝑒𝑡𝑠𝑡𝑟𝑖𝑑𝑒1 +

= (1 −

𝑠𝑢𝑏𝑠𝑒𝑡𝑠𝑡𝑟𝑖𝑑𝑒2 𝑠𝑢𝑏𝑠𝑒𝑡𝑠𝑡𝑟𝑖𝑑𝑒5 𝑠𝑢𝑏𝑠𝑒𝑡𝑠𝑡𝑟𝑖𝑑𝑒28

+

+

𝑅𝑠𝑡𝑟𝑖𝑑𝑒2

𝑅𝑠𝑡𝑟𝑖𝑑𝑒5

𝑅𝑠𝑡𝑟𝑖𝑑𝑒28

)

𝑠𝑢𝑏𝑠𝑒𝑡𝑙𝑜𝑠𝑠𝑙𝑒𝑠𝑠

× 100%

where 𝑠𝑢𝑏𝑠𝑒𝑡𝑠𝑡𝑟𝑖𝑑𝑒𝑥 is the number of subsets using stridex, and 𝑅𝑠𝑡𝑟𝑖𝑑𝑒𝑥 = 𝑝𝑔

𝑝𝑏

𝑠𝑡𝑟𝑖𝑑𝑒𝑥

.

The term 𝑅𝑠𝑡𝑟𝑖𝑑𝑒𝑥 is used to adjust the ratio of data reduced. The comparisons

between the experimental bitrate and the theoretical data reductions are shown in

Figure 6.

Figure 6 Comparisons between the experimental bitrate and the theoretical data

reductions of each 5 test sequences for (a) ps=1 (b) ps=2 and (c) ps=4

From the above results, there are also two points worth noticing: (1) The

theoretical predictions are higher than the experimental results. This is because that

this module and the frequency differential coding both exploit the correlation along

the frequency axis. Hence, the gain provided by the differential coding decreases. (2)

As the number of parameter sets increases, both the theoretical and the experimental

results decrease. Table 4 Standard deviations of DF distributions the standard

deviations of frequency differential data. It is observed that the cases of ps=1 often

have more centralized distributions than the cases of ps=4. Consequently, the higher

number of parameter sets result in less bitrate reduction.

Table 4 Standard deviations of DF distributions

Test sequence

ICC

CLD

ps=1

ps=4

ps=1

ps=4

1

1.85

2.14

2.95

3.27

2

1.85

1.98

4.14

4.24

3

1.61

1.89

3.14

3.39

4

1.47

1.75

2.8

3.02

5

1.77

1.99

2.94

3.19

Moreover, if we compare the results of these two modules, there are also two

interesting points worth noticing: (1) The bitrate reduction percentage results of the

coding mode decision module are better than those of the parameter band stride

decision module. The reason can be seen in Table 2 and Table 4. It is found that in

most cases, the DT data have lower standard deviations than the DF data, which

means that the correlation along the time axis is usually higher than that along the

frequency axis. Consequently, the coding mode decision module is usually more

efficient than the parameter band decision module. (2) The coding mode decision

module has closer theoretical and experimental results than the parameter band

decision module does. Since the difference between the theoretical prediction and the

actually bitrate reduction is the entropy coding schemes, the reason should also be

seen from this aspect. Figure 7 Distributions of coding schemesshows the

distributions of differential coding schemes before and after adding these two

modules respectively. Compared with the original coding process, the coding mode

decision module makes the ratio of DT decrease and DF increase. However, the

parameter band stride decision module makes the ratio of PCM coding increase a lot.

It is known that using PCM coding usually results in an inferior compression rate than

using DF and DT; consequently, the efficiency of differential coding schemes after

using the parameter band stride decision module is usually worse than using the

coding mode decision module.

Figure 7 Distributions of coding schemes

Finally, the above two modules can be combined together and the data reduction

can still be estimated as:

𝑇ℎ𝑒𝑜𝑟𝑒𝑡𝑖𝑐𝑎𝑙 𝑑𝑎𝑡𝑎 𝑟𝑒𝑑𝑢𝑐𝑡𝑖𝑜𝑛(%)

𝑠𝑢𝑏𝑠𝑒𝑡𝑠𝑡𝑟𝑖𝑑𝑒1 +

= (1 −

×

𝑠𝑢𝑏𝑠𝑒𝑡𝑠𝑡𝑟𝑖𝑑𝑒2 𝑠𝑢𝑏𝑠𝑒𝑡𝑠𝑡𝑟𝑖𝑑𝑒5 𝑠𝑢𝑏𝑠𝑒𝑡𝑠𝑡𝑟𝑖𝑑𝑒28

𝑅𝑠𝑡𝑟𝑖𝑑𝑒2 + 𝑅𝑠𝑡𝑟𝑖𝑑𝑒5 + 𝑅𝑠𝑡𝑟𝑖𝑑𝑒28

𝑠𝑢𝑏𝑠𝑒𝑡𝑙𝑜𝑠𝑠𝑙𝑒𝑠𝑠

𝑠𝑢𝑏𝑠𝑒𝑡𝑙𝑜𝑠𝑠𝑙𝑒𝑠𝑠

) × 100%

𝑠𝑢𝑏𝑠𝑒𝑡𝑎𝑙𝑙

𝑠𝑢𝑏𝑠𝑒𝑡𝑠𝑡𝑟𝑖𝑑𝑒1 +

= (1 −

𝑠𝑢𝑏𝑠𝑒𝑡𝑠𝑡𝑟𝑖𝑑𝑒2 𝑠𝑢𝑏𝑠𝑒𝑡𝑠𝑡𝑟𝑖𝑑𝑒5 𝑠𝑢𝑏𝑠𝑒𝑡𝑠𝑡𝑟𝑖𝑑𝑒28

𝑅𝑠𝑡𝑟𝑖𝑑𝑒2 + 𝑅𝑠𝑡𝑟𝑖𝑑𝑒5 + 𝑅𝑠𝑡𝑟𝑖𝑑𝑒28

)

𝑠𝑢𝑏𝑠𝑒𝑡𝑎𝑙𝑙

× 100%

The comparisons between the experimental bitrate and the theoretical data reductions

are shown in Figure 8. The actual bitrate reduction is usually lying between 25~55%,

depending on the test sequences and the configuration. Beside, these two modules add

about 0.13% computational complexity, as Figure 9 shows.

Figure 8 Comparisons between the experimental bitrate and the theoretical data

reductions of each 5 test sequences for (a) ps=1 (b) ps=2 and (c) ps=4

ottbox, 1.7

others, 2.0

coding mode +

pbstride, 0.1

entrop

y

coding

, 0.2

synthesis

filterbank, 9.9

analysis filter

bank, 86.0

Figure 9 Complexity analysis of the encoder including coding mode decision and

parameter band decision modules

5.

Adaptive Parameter Smoothing

5.1. Parameter Smoothing

In the MPEG Surround specifications, two different quantization granularities

are provided, which results in different qualities and bitrates. For low bitrate

applications, in order to reduce the total bits, it is generally preferred to use coarse

granularity quantizer on the spatial parameters than fine granularity. However, the

larger quantization steps of coarse granularity may result in artifacts, especially in the

case of stationary and tonal signals. For these slowly varying sources, the coarse

quantizer produces a more discontinuous waveform, which is often perceived as

artifacts. Consequently, the MPEG Surround provides an “Adaptive Parameter

Smoothing” tool to reduce this type of artifacts. This tool is running at the decoder

side by using a first order IIR filter controlled by additional side information

transmitted by the encoder [5].

The parameter smoothing process is shown in Figure 10, where 𝑊 𝑙−1 are the

𝑙

data of the previous parameter set, 𝑊𝑘𝑜𝑛𝑗

are the data calculated by using the current

parameter set and 𝑊 𝑙 are the smoothed data obtained after the smoothing process.

This process is performed using an IIR filter defined as below [3].

𝑙

𝑊 ={

𝑙

𝑊 𝑙−1 + 𝑆𝑑𝑒𝑙𝑡𝑎 (𝑊𝑘𝑜𝑛𝑗

− 𝑊 𝑙−1 ), 𝑖𝑓 𝑡ℎ𝑒𝑠𝑚𝑜𝑜𝑡ℎ𝑖𝑛𝑔 𝑝𝑟𝑜𝑐𝑒𝑠𝑠 𝑖𝑠 𝑎𝑐𝑡𝑖𝑣𝑒

𝑙

𝑊𝑘𝑜𝑛𝑗

,

𝑖𝑓 𝑡ℎ𝑒 𝑠𝑚𝑜𝑜𝑡ℎ𝑖𝑛𝑔 𝑝𝑟𝑜𝑐𝑒𝑠𝑠 𝑖𝑠 𝑖𝑛𝑎𝑐𝑡𝑖𝑣𝑒

If smoothing is active by the encoder, the filter will be applied to obtain data. Besides,

the encoder can also control Sdelta to adjust the degree of smoothing. Sdelta is

obtained by

𝑑

𝜏

where d is the number of time slots in the current parameter set, and 𝜏

is the time constant decided by the encoder. The MPEG Surround specification

provides 4 kinds of time constants, and this configuration can be changed in every

parameter set.

S delta

d

l

W l W l 1 Sdelta (Wkonj

W l 1 )

l

Wkonj

Wl

W l 1

d

Figure 10 Adaptive parameter smoothing

5.2. Smoothing Flag Decision

Since the adaptive parameter smoothing decision module is applied to recover

some reconstructed error due to coarse quantization, this module should be active

only if the coarse quantization scheme is applied. The decision scheme is based on the

error measures defined as the sum of the differences between the smoothed parameter

and fine-quantized parameters. The error measure is defined as:

𝑒𝑟𝑟(𝑝𝑠, 𝑖) =

𝑛𝑢𝑚𝑏𝑜𝑥

𝑠𝑡𝑜𝑝𝑏𝑎𝑛𝑑

∑

∑

|𝑥̃𝑖 (𝑏𝑜𝑥𝐼𝑑𝑥, 𝑝𝑏) − 𝑥̃(𝑏𝑜𝑥𝐼𝑑𝑥, 𝑝𝑏)|,

𝑏𝑜𝑥𝐼𝑑𝑥=0 𝑝𝑏=𝑠𝑡𝑎𝑟𝑡𝑏𝑎𝑛𝑑

where ps is the current parameter set, i is the time constant, boxIdx is the box index of

the tree configuration, 𝑥̃ are the normalized dequantized parameters that are

originally fine-quantized, and 𝑥̃𝑖 are the smoothed parameters using time constant i.

The normalization formula is :

x̃(boxIdx, pb) =

x(boxIdx, pb) − x̅

σ

where x̅ is the mean of the parameters in the subset and σ is the standard deviation.

Since this configuration can be adjusted for every parameter set, the error measure

must be calculated for each set that covers all the boxes and the parameter bands in

the set. The flow chart can be seen in Figure 11, in which the iteration number is the

number of parameter sets. First, the smoothed error (𝑒𝑟𝑟(𝑝𝑠, 𝑖)) of each time constant

i and the coarse-quantized error (𝑒𝑟𝑟_𝑜𝑟𝑖𝑔) are calculated. Then, the configuration

with the least error will be selected as for the current parameter set. The process is

repeated until the configurations of all parameter sets are decided.

Figure 11 Flow chart of the adaptive parameter smoothing decision module

5.3. Experimental Results

Using the smoothing mechanism requires some additional bits, and

consequently we have to compare the bitrate with and without this mechanism.

Besides, the comparison between bitrate using the smoothing mechanism and fine

quantization are evaluated. The results are shown in Table 5. It can be seen that this

module adds no more than 1% bitrate, which is because that the smoothing flags need

only several bits for each frame.

Table 5

Bitrate change percentage of the smoothed bitstreams. The upper row is the

percentages compared with the coarse quantization; the lower row is compared with

the fine quantization

1

2

3

4

5

Test sequence

Bitrate change %

(cf. coarse quantized)

0.51

0.55

0.69

0.64

0.53

Bitrate change %

(cf. fine quantized)

-11.53

-7.37

-7.03

-10.93

-11.00

Since this module is used to reduce the artifacts caused by the coarse

quantization, we should compare the quantization errors. The experimental results for

the CLD and the ICC are shown respectively in Figure 12. It can be seen that there are

no obvious differences between the quantization errors of the coarse-quantized and

the smoothed CLD. However, for the ICC, the quantization errors after using the

smoothing module are lower than using the coarse quantizer, which shows the

efficiency of this module. Also, waveform comparisons between the fine quantized

signals, the coarse quantized signals and the smoothed signals are shown in Figure

13Error! Reference source not found.. It can be seen that the smoothed signals have

a closer envelope to the fine quantized signals, and the coarse quantization produces

incorrect variations in a few places. The large magnitude may be due to the coarse

quantization steps that lead to some discontinuities. Under this situation, using the

smoothing mechanism can compensate it at a very low cost. Besides, this extra

module adds some computational complexity. The complexity of each parts of the

encoding process is shown in Figure 14 as a pie chart. It can be found that this module

adds in computational burden no more than 1%

Figure 12 Quantization error per parameter after the fine quantization, the coarse

(a)

(b)

(c)

Figure 13 Comparison of the resulting waveforms. (a) fine quantized (b) coarse

quantized (c) smoothed

ottbox,

smoothing, 0.4

1.4 synthesis

filterbank, 9.9

others, 1.7

entropy coding,

0.2

analysis filter

bank, 86.4

Figure 14 Complexity analysis of the encoder including smoothing decision modules

6. Conclusions

The main goal of this paper is to design and implement several MPEG Surround

encoder modules. First, we survey the MPEG Surround specifications. We also trace

the reference software against the specifications. Some deficient parts are found and

modified. Three modules, namely, the frequency resolution decision, the coding mode

decision, and the adaptive parameter smoothing have been designed to improve

efficiency or performance of the encoder.

First, the frequency resolution is decided by searching the best parameter band

stride for each subset. The pairing configuration is also taken into consideration. For

the coding mode decision, the correlation between neighboring subsets is exploited.

Also, these results are explained based on the theoretical estimates and show the

related experimental results as evidence. Then, to compensate for the artifacts due to

the coarse quantization, a parameter smoothing mechanism is used. The functionality

of this module is evaluated from different aspects, such as bitrates, waveforms and

complexities. The locations of the above three modules in the overall encoding

process

are

shown

in

MPEG Surround Encoder

with New Modules

T/F Transofrm

T/F Transofrm

F/T Transofrm

Downmix

Audio

Encoder

F/T Transofrm

T/F Transofrm

Spatial

Parameter

Estimation

Parameter

Smoothing

Decision

Compressed

Audio

Bitstream

Subset Coding

Mode Decision

Parameter Band

Stride Decsion

Spatial Parameters

Figure 15, which are marked in grey boxes.

MPEG Surround Encoder

with New Modules

T/F Transofrm

T/F Transofrm

F/T Transofrm

Downmix

F/T Transofrm

T/F Transofrm

Spatial

Parameter

Estimation

Parameter

Smoothing

Decision

Audio

Encoder

Compressed

Audio

Bitstream

Subset Coding

Mode Decision

Parameter Band

Stride Decsion

Spatial Parameters

Figure 15 Overview of MPEG Surround encoder combined with all additional

modules

References:

[1] J. Breebaart et al., ”MPEG Spatial Audio Coding/ MPEG Surround: Overview and

Current Status”, 119Th Audio Engineering Society (AES) Convention, New York,

NY, USA, October 7-10, 2005.

[2]Chih-Kang Han, “MPEG surround codec acceleration and implementation on TI

DSP platform”, M.S. thesis, Department of Electronics Engineering, National

Chiao Tung University, HsinChu, Taiwan, June, 2007.

[3] ISO/IEC JTC1/SC29/WG11 (MPEG), Document N8324, “Text of ISO/IEC FDIS

23003-1, MPEG surround”, Klagenfurt, 2006.

[4] J. Herre et al., “A study of the MPEG surround quality versus bit-rate curve”,

123Rd Audio Engineer Society (AES) Convention, New York, NY, USA, October

5-8, 2007.

[5] J. Herre et al., “MPEG surround – the ISO/MPEG standard for efficient and

compatible multi-channel audio coding”, 122nd Audio Engineer Society (AES)

Convention, Vienna, Austria, May 5-8, 2007.

[6] ISO/IEC JTC1/SC29/WG11 (MPEG), document N9093, " ISO/IEC

23003-1:2007/FPDAM 2, MPEG Surround reference software ", San Jose, USA,

April, 2004.