Some hash fundamentals

advertisement

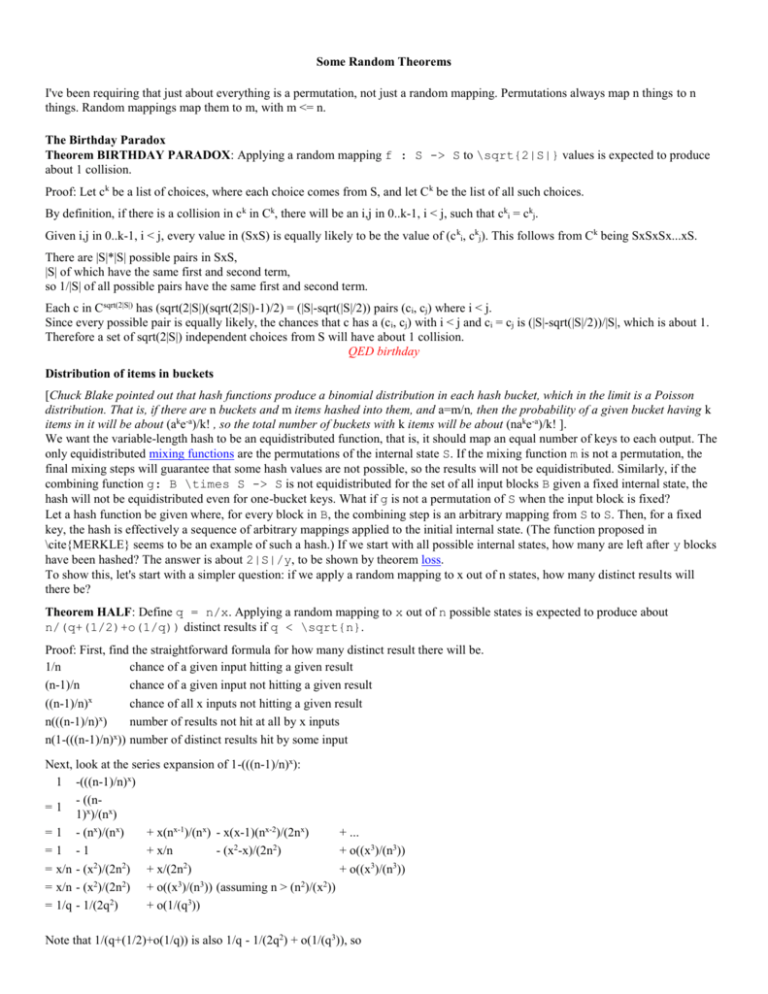

Some Random Theorems

I've been requiring that just about everything is a permutation, not just a random mapping. Permutations always map n things to n things. Random mappings map n things to m things, with m <= n.

The Birthday Paradox

Theorem BIRTHDAY PARADOX: Applying a random mapping f : S -> S to \sqrt{2|S|} values is expected to produce about 1 collision.

Proof: Let c_k be a list of k choices, where each choice comes from S, and let C_k be the set of all such lists.

By definition, if there is a collision in c_k \in C_k, there will be an i, j in 0..k-1, i < j, such that c_{k,i} = c_{k,j}.

Given i, j in 0..k-1, i < j, every value in S \times S is equally likely to be the value of (c_{k,i}, c_{k,j}). This follows from C_k being S \times S \times ... \times S.

There are |S|*|S| possible pairs in S \times S, |S| of which have the same first and second term, so 1/|S| of all possible pairs have the same first and second term.

Each c in C_{\sqrt{2|S|}} has \sqrt{2|S|}(\sqrt{2|S|}-1)/2 = |S| - \sqrt{|S|/2} pairs (c_i, c_j) where i < j.

Since every possible pair is equally likely, each of these pairs has c_i = c_j with probability 1/|S|, so by linearity of expectation the expected number of pairs (c_i, c_j) with i < j and c_i = c_j is (|S| - \sqrt{|S|/2})/|S|, which is about 1.

Therefore a sequence of \sqrt{2|S|} independent choices from S is expected to have about 1 collision.

QED birthday
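A quick Monte Carlo check of theorem birthday, offered as a sketch rather than as part of the proof. It assumes S = {0, ..., n-1} with n = 65536 (an arbitrary choice) and models a random mapping applied to k distinct inputs as k independent uniform draws from S; the helper names are illustrative.

```python
import math
import random
from collections import Counter


def expected_pairs(n: int, k: int) -> float:
    """Expected colliding pairs among k uniform draws from n values:
    k(k-1)/2 pairs, each colliding with probability 1/n."""
    return k * (k - 1) / (2 * n)


def count_colliding_pairs(values) -> int:
    """Number of index pairs (i, j), i < j, with values[i] == values[j]."""
    return sum(c * (c - 1) // 2 for c in Counter(values).values())


def average_pairs(n: int, k: int, trials: int, seed: int = 1) -> float:
    """Average colliding-pair count when a random mapping on {0..n-1} is
    applied to k distinct inputs (modelled as k independent uniform draws)."""
    rng = random.Random(seed)
    total = 0
    for _ in range(trials):
        total += count_colliding_pairs([rng.randrange(n) for _ in range(k)])
    return total / trials


n = 65536
k = math.isqrt(2 * n)  # about sqrt(2|S|)
print(k, expected_pairs(n, k), average_pairs(n, k, trials=1000))
```

The exact expectation k(k-1)/(2n) for k = 362 is just under 1, and the simulated average should land close to it.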

Distribution of items in buckets

[Chuck Blake pointed out that hash functions produce a binomial distribution in each hash bucket, which in the limit is a Poisson distribution. That is, if there are n buckets and m items hashed into them, and a = m/n, then the probability of a given bucket having k items in it will be about a^k e^{-a}/k!, so the total number of buckets with k items will be about n a^k e^{-a}/k!.]
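The Poisson approximation can be checked the same way. This sketch hashes m items into n buckets with a seeded pseudo-random generator standing in for a good hash function (an assumption), then compares the observed number of buckets holding k items with n a^k e^{-a}/k!. The names and the choice n = m = 10000 (so a = 1) are illustrative.

```python
import math
import random
from collections import Counter


def poisson_prediction(n: int, m: int, k: int) -> float:
    """Predicted number of buckets holding exactly k items: n * a^k e^-a / k!."""
    a = m / n
    return n * a**k * math.exp(-a) / math.factorial(k)


def bucket_histogram(n: int, m: int, seed: int = 1) -> Counter:
    """Drop m items into n buckets uniformly at random.
    Returns a Counter mapping load k -> number of buckets with that load."""
    rng = random.Random(seed)
    loads = [0] * n
    for _ in range(m):
        loads[rng.randrange(n)] += 1
    return Counter(loads)


n = m = 10_000
hist = bucket_histogram(n, m)
for k in range(5):
    print(k, hist[k], round(poisson_prediction(n, m, k), 1))
```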

We want the variable-length hash to be an equidistributed function; that is, it should map an equal number of keys to each output. The only equidistributed mixing functions are the permutations of the internal state S. If the mixing function m is not a permutation, the final mixing steps will guarantee that some hash values are not possible, so the results will not be equidistributed. Similarly, if the combining function g: B \times S -> S is not equidistributed over the set of all input blocks B for a fixed internal state, the hash will not be equidistributed even for one-block keys. What if g is not a permutation of S when the input block is fixed?

Let a hash function be given where, for every block in B, the combining step is an arbitrary mapping from S to S. Then, for a fixed key, the hash is effectively a sequence of arbitrary mappings applied to the initial internal state. (The function proposed in \cite{MERKLE} seems to be an example of such a hash.) If we start with all possible internal states, how many are left after y blocks have been hashed? The answer is about 2|S|/y, as theorem loss will show.

To show this, let's start with a simpler question: if we apply a random mapping to x out of n states, how many distinct results will there be?

Theorem HALF: Define q = n/x. Applying a random mapping to x out of n possible states is expected to produce about n/(q+1/2+o(1/q)) distinct results if q < \sqrt{n}.

Proof: First, find the straightforward formula for how many distinct results there will be.

1/n                 chance of a given input hitting a given result
(n-1)/n             chance of a given input not hitting a given result
((n-1)/n)^x         chance of all x inputs not hitting a given result
n((n-1)/n)^x        number of results not hit at all by x inputs
n(1-((n-1)/n)^x)    number of distinct results hit by some input

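Next, look at the series expansion of 1-((n-1)/n)^x:

$$
\begin{aligned}
1-\left(\frac{n-1}{n}\right)^x
&= 1-\frac{(n-1)^x}{n^x} \\
&= 1-\frac{n^x}{n^x}+\frac{x\,n^{x-1}}{n^x}-\frac{x(x-1)\,n^{x-2}}{2n^x}+\cdots \\
&= 1-1+\frac{x}{n}-\frac{x^2-x}{2n^2}+o\left(\frac{x^3}{n^3}\right) \\
&= \frac{x}{n}-\frac{x^2}{2n^2}+\frac{x}{2n^2}+o\left(\frac{x^3}{n^3}\right) \\
&= \frac{x}{n}-\frac{x^2}{2n^2}+o\left(\frac{x^3}{n^3}\right) && \text{(assuming } n > n^2/x^2\text{)} \\
&= \frac{1}{q}-\frac{1}{2q^2}+o\left(\frac{1}{q^3}\right)
\end{aligned}
$$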

Note that 1/(q+1/2+o(1/q)) is also 1/q - 1/(2q^2) + o(1/q^3), so

$$1-\left(\frac{n-1}{n}\right)^x = \frac{1}{n/x+1/2+o(x/n)},$$

and multiplying by n gives the claimed n/(q+1/2+o(1/q)) distinct results.

QED half

For example, n/10 states will map to about n/10.5 states.
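A numerical sketch of theorem half, comparing the exact expectation n(1-((n-1)/n)^x) from the proof with the approximation n/(q+1/2) and with one simulated random mapping applied to x distinct states. The parameters n = 65536 and x = n/10 (rounded down) and the function names are illustrative assumptions.

```python
import random


def exact_distinct(n: int, x: int) -> float:
    """Expected number of distinct results: n * (1 - ((n-1)/n)**x)."""
    return n * (1 - ((n - 1) / n) ** x)


def half_approx(n: int, x: int) -> float:
    """Theorem HALF's approximation n / (q + 1/2), with q = n/x."""
    q = n / x
    return n / (q + 0.5)


def simulated_distinct(n: int, x: int, seed: int = 1) -> int:
    """Apply a random mapping to x distinct inputs: each image is an independent
    uniform draw from {0..n-1}. Count how many distinct images appear."""
    rng = random.Random(seed)
    return len({rng.randrange(n) for _ in range(x)})


n = 65536
x = n // 10  # q is about 10, so expect roughly n/10.5 results
print(exact_distinct(n, x), half_approx(n, x), simulated_distinct(n, x))
```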

Corollary BUCKETS: Hashing b/q keys into b buckets is expected to hit about b/(q+(1/2)) buckets, if q < \sqrt{b}.

OK. Now we can look at the original question, applying a sequence of y random mappings to all n possible states.

Theorem LOSS: After applying y random mappings g_i : S -> S to all elements of S, the number of elements remaining will be about 2|S|/y when y < \sqrt{n}, where n = |S|.

The recurrence for x_i, the expected number of states after i mappings, is:

$$x_0 = n, \qquad x_{i+1} = n\left(1-\left(\frac{n-1}{n}\right)^{x_i}\right)$$

Hypothesis: after i mappings we are left with (2n)/(i+o(\log i)) states. The next mapping will leave us this many states:

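$$
\begin{aligned}
n\left(1-\left(\frac{n-1}{n}\right)^{x_i}\right)
&= \frac{n}{n/x_i + 1/2 + o(x_i/n)} && \text{(by half)} \\
&= \frac{n}{\dfrac{n}{2n/(i+o(\log i))} + \dfrac{1}{2} + o\left(\dfrac{2n/(i+o(\log i))}{n}\right)} && \text{(by hypothesis)} \\
&= \frac{n}{\dfrac{i+o(\log i)}{2} + \dfrac{1}{2} + o\left(\dfrac{2}{i+o(\log i)}\right)} \\
&= \frac{2n}{i + o(\log i) + 1 + o(4/i)} \\
&= \frac{2n}{(i+1) + o(\log(i+1))} && \text{(since } \textstyle\sum_{i=1}^{n} 4/i \text{ is } o(\log n)\text{)}
\end{aligned}
$$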

The base case is satisfied because o(\log i) can be any constant; in reality it is 2 for i = 0, since x_0 = n = 2n/(0+2). By induction, the number of states left after y mappings is 2n/(y+o(\log y)). This sequence converges to the sequence 2n/y.

QED loss

Experiments with n=65536 agree with both of these results.
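The text does not show its experiment, so here is a hypothetical reconstruction of one for theorem loss, not the author's code: all n = 65536 states are pushed through y independent random mappings, and the survivors are compared with the recurrence for x_i and with 2n/y. Because distinct states receive independent uniform images under a fresh random mapping, each step only needs one uniform draw per surviving state. Function names and the choice of y values are illustrative.

```python
import random


def recurrence_states(n: int, y: int) -> float:
    """Iterate x_{i+1} = n(1 - ((n-1)/n)^{x_i}) from x_0 = n, y times."""
    x = float(n)
    for _ in range(y):
        x = n * (1 - ((n - 1) / n) ** x)
    return x


def simulated_states(n: int, y: int, seed: int = 1) -> int:
    """Push all n states through y independent random mappings.
    Surviving states are distinct, so their images under a fresh random
    mapping are independent uniform draws; only the count matters."""
    rng = random.Random(seed)
    survivors = n  # start with all of S
    for _ in range(y):
        survivors = len({rng.randrange(n) for _ in range(survivors)})
    return survivors


n = 65536
for y in (8, 16, 32):
    sim = simulated_states(n, y)
    print(y, sim, round(recurrence_states(n, y)), round(2 * n / y), round(sim * y / (2 * n), 3))
```

The last column (simulated count times y/(2n)) sits below 1 and creeps toward it as y grows, which is what the o(\log y) term in the theorem allows for.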

Using theorem loss, we can see that if the combining function does not act as a permutation on S, changing the ith out of q blocks is expected to allow only \frac{2}{q-i} of all possible final states to be produced. If |S| = 2^8 (as in \cite{CACMhash}, which does not have this problem), such a loss would be disastrous. But when |S| = 2^{128} (as in \cite{MERKLE}), this loss may be negligible.

The birthday paradox: Applying a random mapping f : S -> S to \sqrt{2|S|} values is expected to produce about 1 collision.

A common question about random number generators can be answered as a corollary of the theorems above. If a random number generator is based on a random mapping f : S -> S, what is its expected cycle length? The answer is about \sqrt{|S|}.

Theorem SQRT: The median number of distinct values in the sequence f^i(x), where f : S -> S is a random mapping and x is an element in S, is between \sqrt{|S|} and \sqrt{2|S|}.

By theorem birthday, applying f to \sqrt{|S|} arbitrary values in S should yield about \frac{|S|}{\sqrt{|S|}+1/2+o(1/\sqrt{|S|})} distinct values. That is, there should be about 1/2 a collision. Similarly, mapping a subset of \sqrt{2|S|} values is expected to cause about 1 collision. (Theorem half will produce the same results.)

If no subset had more than one collision, then an expectation of 1/2 a collision would imply that every subset had a 1/2 chance of at least one collision. Some subsets have more than one collision, though, so the chance of having at least one collision is less than 1/2 when the subset has \sqrt{|S|} values.

On the other hand, a set of \sqrt{2|S|} values is expected to produce 1 collision. These values could be chosen one at a time. If a collision does occur, there should be no more than 1 collision expected in the remaining values. If we solve for x in the equation \sum_{i=0}^{\sqrt{2|S|}} i x^{i+1} = 1, then x is a lower bound on the fraction of subsets with at least one collision. Since the solution is slightly more than x = 1/2 (exactly 1/2 if the sum ran to infinity, where it equals x^2/(1-x)^2), the probability of having at least one collision is more than 1/2 when f maps a set of \sqrt{2|S|} values.

Until one collision occurs, the sequence f^i(x) is a list of arbitrary values chosen from S. According to the bounds above, over half the choices of (f, x) will go \sqrt{|S|} values without a collision, and less than half will go \sqrt{2|S|} values without a collision, so the median must be somewhere in between.

QED sqrt
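A simulation sketch for theorem sqrt (an illustration, not a proof): it walks x, f(x), f(f(x)), ... until a value repeats, for many random pairs (f, x), and takes the median count of distinct values. f is sampled lazily, giving each argument a uniform image the first time it is queried; this is equivalent to fixing f in advance because the walk stops before any argument is queried twice. The function names, n = 65536 and 1001 trials are assumptions.

```python
import math
import random
import statistics


def distinct_in_orbit(n: int, rng: random.Random) -> int:
    """Count distinct values in x, f(x), f(f(x)), ... for a random mapping f on
    {0..n-1}, stopping at the first repeated value."""
    f = {}  # lazily built random mapping
    seen = set()
    v = rng.randrange(n)  # random starting element x
    while v not in seen:
        seen.add(v)
        if v not in f:
            f[v] = rng.randrange(n)  # first query of f(v): uniform image
        v = f[v]
    return len(seen)


def median_orbit(n: int, trials: int, seed: int = 1) -> float:
    rng = random.Random(seed)
    return statistics.median([distinct_in_orbit(n, rng) for _ in range(trials)])


n = 65536
print(math.isqrt(n), median_orbit(n, trials=1001), math.isqrt(2 * n))
```

The median should fall between the two printed bounds, \sqrt{|S|} = 256 and about \sqrt{2|S|} = 362.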

Hashing

Lecture 21

Steven S. Skiena


One way to convert names to integers is to use the letters to form a base ``alphabet-size'' number system.

To convert ``STEVE'' to a number, observe that e is the 5th letter of the alphabet, s is the 19th letter, t is the 20th letter, and v is the 22nd letter.

Thus ``Steve'' = 19 \cdot 26^4 + 20 \cdot 26^3 + 5 \cdot 26^2 + 22 \cdot 26^1 + 5 \cdot 26^0 = 9,038,021.
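The ``STEVE'' calculation as a short sketch: it reads a name as a base-26 number, with a = 1 through z = 26 as in the text, using Horner's rule. The function name is hypothetical, and rejecting non-letters is an added assumption.

```python
def name_to_int(name: str) -> int:
    """Read a name as a base-26 number with a = 1, b = 2, ..., z = 26."""
    value = 0
    for ch in name.lower():
        if not ("a" <= ch <= "z"):
            raise ValueError(f"not a letter: {ch!r}")
        value = value * 26 + (ord(ch) - ord("a") + 1)  # Horner's rule
    return value


print(name_to_int("STEVE"))  # 9038021
```

Because the digits run from 1 to 26 rather than 0 to 25, different strings (including ones of different lengths, such as "z" and "aa") always get different numbers.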

Thus one way we could represent a table of names would be to set aside an array big enough to contain one element for each possible string of letters, then store data in the elements corresponding to real people. Computing this function tells us immediately where the person's phone number is!

What's the Problem?

Because we must leave room for every possible string, this method will use an incredible amount of memory. We need a data structure to represent a sparse table, one where almost all entries will be empty.

We can reduce the number of boxes we need if we are willing to put more than one thing in the same box!

Example: suppose we use the base alphabet number system, then take the remainder

Now the table is much smaller, but we need a way to deal with the fact that more than one (but hopefully very few) keys can get mapped to the same array element.

The Basics of Hashing

The basic idea of hashing is to apply a function to the search key so we can determine where the item is without looking at the other items. To make the table of reasonable size, we must allow for collisions, two distinct keys mapped to the same location.

We use a special hash function to map keys (hopefully uniformly) to integers in a certain range.

We set up an array as big as this range, and use the value of the function as the index to store the appropriate key. Special care must be taken to handle collisions when they occur.

There are several clever techniques we will see to develop good hash functions and deal with the problems of duplicates.

Hash Functions

The verb ``hash'' means ``to mix up'', and so we seek a function to mix up keys as well as possible.

The best possible hash function would hash m keys into n ``buckets'' with no more than \lceil m/n \rceil keys per bucket. Such a function is called a perfect hash function.

How can we build a hash function?

Let us consider hashing character strings to integers. The ORD function returns the character code associated with a given character.

By using the ``base character size'' number system, we can map each string to an integer.

The First Three SSN digits Hash

[Figure panels: the first three digits of the Social Security Number; the last three digits of the Social Security Number]

What is the big picture?

1. A hash function which maps an arbitrary key to an integer turns searching into array access, hence O(1).
2. Using a finite-sized array means that two different keys will sometimes be mapped to the same place. Thus we must have some way to handle collisions.
3. A good hash function must spread the keys uniformly, or else we have a linear search.

Ideas for Hash Functions

Truncation - When grades are posted, the last four digits of your SSN are used, because they distribute students more uniformly than the first four digits.

Folding - We should get a better spread by factoring in the entire key. Maybe subtract the last four digits from the first five digits of the SSN, and take the absolute value?

Modular Arithmetic - When constructing pseudorandom numbers, a good trick for uniform distribution was to take a big number mod the size of our range. Because of our roulette wheel analogy, the numbers tend to get spread well if the table size is selected carefully.
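The three ideas can be sketched for nine-digit SSNs stored as digit strings. The function names, the example SSN "123456789", and the table size m = 1009 are made up for illustration; the final `% m` is the modular-arithmetic step applied to each scheme so the result fits the table.

```python
def truncation_hash(ssn: str, m: int) -> int:
    """Truncation: keep only the last four digits, then reduce mod m."""
    return int(ssn[-4:]) % m


def folding_hash(ssn: str, m: int) -> int:
    """Folding: |first five digits - last four digits|, then reduce mod m."""
    return abs(int(ssn[:5]) - int(ssn[5:])) % m


def modular_hash(ssn: str, m: int) -> int:
    """Modular arithmetic: the whole nine-digit number mod m."""
    return int(ssn) % m


ssn = "123456789"  # made-up SSN, digits only
m = 1009           # assumed table size
print(truncation_hash(ssn, m), folding_hash(ssn, m), modular_hash(ssn, m))  # 735 511 594
```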

Prime Numbers are Good Things

Suppose we wanted to hash check totals by the dollar value in pennies mod 1000. What happens?


Prices tend to be clumped by similar last digits, so we get clustering.

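If we instead use a modulus like 1007, these clusters will get broken up. (Strictly speaking, 1007 = 19 * 53 is not prime; the nearest prime is 1009. What breaks the clusters is that the modulus shares no factor with 10, the base in which prices clump.)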


In general, it is a good idea to use a prime modulus for the hash table size, since it is less likely the data will be multiples of large primes as opposed to small primes - all multiples of 4 get mapped to even numbers in an even-sized hash table!
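A small illustration of the clustering argument. The check totals are hypothetical: every whole-dollar, $x.95 and $x.99 price from $1 to $199, stored in pennies. The sketch counts how many distinct table slots they occupy under mod 1000, the text's 1007, and the prime 1009.

```python
def slot_count(values, modulus: int) -> int:
    """Number of distinct table slots used when each value is taken mod modulus."""
    return len({v % modulus for v in values})


# Hypothetical check totals in pennies: $d.00, $d.95 and $d.99 for d = 1..199.
prices = [dollars * 100 + cents for dollars in range(1, 200) for cents in (0, 95, 99)]

for modulus in (1000, 1007, 1009):
    print(modulus, slot_count(prices, modulus))
```

Mod 1000, only the last three digits survive, so all 597 prices crowd into just 30 slots; mod 1007 or 1009 the same prices spread over far more of the table.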

The Birthday Paradox

No matter how good our hash function is, we had better be prepared for collisions, because of the birthday paradox.

Assuming 365 days a year, what is the probability that two people share a birthday? Once the first person has fixed their birthday, the second person has 364 possible days to be born to avoid a collision, or a 364/365 chance.

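With three people, the probability that no two share is (364/365)(363/365). In general, the probability of there being no collisions after n insertions into an m-element table is

$$\frac{m-1}{m}\cdot\frac{m-2}{m}\cdots\frac{m-n+1}{m} = \prod_{i=0}^{n-1}\frac{m-i}{m}.$$

When m = 366, this probability sinks below 1/2 when n = 23, and it approaches 0 rapidly as n grows further.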

The moral is that collisions are common, even with good hash functions.
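The product formula can be evaluated directly. This sketch (function names are illustrative) finds the smallest number of insertions n for which a collision becomes more likely than not.

```python
def prob_no_collision(n: int, m: int) -> float:
    """Probability that n insertions into an m-slot table land in n distinct
    slots: the product of (m - i)/m for i = 0..n-1."""
    p = 1.0
    for i in range(n):
        p *= (m - i) / m
    return p


def first_below_half(m: int) -> int:
    """Smallest n for which a collision is more likely than not."""
    n = 1
    while prob_no_collision(n, m) >= 0.5:
        n += 1
    return n


for m in (365, 366):
    print(m, first_below_half(m))
```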

What about Collisions?

No matter how good our hash functions are, we must deal with collisions. What do we do when the spot in the table we need is occupied?

Put it somewhere else! - In open addressing, we have a rule to decide where to put it if the space is already occupied.

Keep a list at each bin! - At each spot in the hash table, keep a linked list of keys sharing this hash value, and do a sequential search to find the one we need. This method is called chaining.

Collision Resolution by Chaining

The easiest approach is to let each element in the hash table be a pointer to a list of keys.

Insertion, deletion, and query reduce to the problem in linked lists. If the n keys are distributed uniformly in a table of size m, each operation takes O(n/m) expected time.

Chaining is easy, but devotes a considerable amount of memory to pointers, which could be used to make the table larger. Still, it is my preferred method.
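A minimal chaining sketch, assuming Python's built-in `hash` as the hash function and a Python list per slot standing in for the linked list. The class name, method names and phone numbers are made up for illustration.

```python
class ChainedHashTable:
    """Collision resolution by chaining: each of the m slots holds a list
    of (key, value) pairs that share that hash value."""

    def __init__(self, m: int = 11):
        self.m = m
        self.slots = [[] for _ in range(m)]

    def _chain(self, key):
        return self.slots[hash(key) % self.m]

    def insert(self, key, value) -> None:
        chain = self._chain(key)
        for i, (k, _) in enumerate(chain):
            if k == key:  # key already present: overwrite its value
                chain[i] = (key, value)
                return
        chain.append((key, value))

    def search(self, key):
        for k, v in self._chain(key):  # sequential search of one chain
            if k == key:
                return v
        return None

    def delete(self, key) -> bool:
        chain = self._chain(key)
        for i, (k, _) in enumerate(chain):
            if k == key:
                del chain[i]
                return True
        return False


table = ChainedHashTable(m=7)
for name, phone in [("steve", "555-0101"), ("alice", "555-0102"), ("bob", "555-0103")]:
    table.insert(name, phone)
print(table.search("alice"), table.delete("alice"), table.search("alice"))  # 555-0102 True None
```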

Open Addressing

We can dispense with all these pointers by using an implicit reference derived from a simple function:

If the space we want to use is filled, we can examine the remaining locations:

1. Sequentially: h, h+1, h+2, h+3, ...
2. Quadratically: h, h+1^2, h+2^2, h+3^2, ...
3. Linearly: h, h+k, h+2k, h+3k, ...

The reason for using a more complicated scheme is to avoid long runs from similarly hashed keys.

Deletion in an open addressing scheme is ugly, since removing one element can break a chain of insertions, making some elements inaccessible.
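A sketch of open addressing with sequential probing (h, h+1, h+2, ... mod m), using Python's built-in `hash`. To get around the deletion problem it uses tombstones (lazy deletion), a common workaround that the text does not describe: a deleted slot is marked rather than emptied, so searches keep probing past it. Class and method names are illustrative.

```python
_EMPTY = object()    # never used: a search can stop here
_DELETED = object()  # tombstone: slot is free, but probe sequences continue past it


class LinearProbingTable:
    """Open addressing with probe sequence h, h+1, h+2, ... (mod m)."""

    def __init__(self, m: int = 11):
        self.m = m
        self.keys = [_EMPTY] * m
        self.values = [None] * m

    def _probe(self, key):
        h = hash(key) % self.m
        for i in range(self.m):
            yield (h + i) % self.m

    def insert(self, key, value) -> None:
        free = None  # first reusable slot (tombstone or empty) on the probe path
        for j in self._probe(key):
            k = self.keys[j]
            if k is _EMPTY:
                if free is None:
                    free = j
                break  # key cannot appear further along the sequence
            if k is _DELETED:
                if free is None:
                    free = j
            elif k == key:
                self.values[j] = value  # key already present: update in place
                return
        if free is None:
            raise RuntimeError("table is full")
        self.keys[free] = key
        self.values[free] = value

    def search(self, key):
        for j in self._probe(key):
            k = self.keys[j]
            if k is _EMPTY:
                return None
            if k is not _DELETED and k == key:
                return self.values[j]
        return None

    def delete(self, key) -> bool:
        for j in self._probe(key):
            k = self.keys[j]
            if k is _EMPTY:
                return False
            if k is not _DELETED and k == key:
                self.keys[j], self.values[j] = _DELETED, None
                return True
        return False


t = LinearProbingTable(m=11)
for k in (0, 11, 22):  # small ints hash to themselves in CPython, so all start at slot 0
    t.insert(k, str(k))
t.delete(11)           # emptying slot 1 outright would cut 22 off from its home slot
print(t.search(22))    # 22
```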

Performance on Set Operations

With either chaining or open addressing:

Search - O(1) expected, O(n) worst case.

Insert - O(1) expected, O(n) worst case.

Delete - O(1) expected, O(n) worst case.

Pragmatically, a hash table is often the best data structure to maintain a dictionary. However, the worst-case running time is unpredictable.

The best worst-case bounds on a dictionary come from balanced binary trees, such as red-black trees.

Data Structures and Algorithms

8.3 Hash Tables

8.3.1 Direct Address Tables

If we have a collection of n elements whose keys are unique integers in (1,m), where m >= n, then we can store the items in a direct address table, T[m], where T[i] is either empty or contains one of the elements of our collection.

Searching a direct address table is clearly an O(1) operation: for a key, k, we access T[k]; if it contains an element, return it; if it doesn't, return NULL.
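A minimal direct address table sketch for unique integer keys in 1..m, with `None` playing the role of NULL. The class name and the bounds check are assumptions added for illustration.

```python
from typing import Any, List, Optional


class DirectAddressTable:
    """T[1..m]: the element with key k lives in slot k."""

    def __init__(self, m: int):
        self.m = m
        self.slots: List[Optional[Any]] = [None] * (m + 1)  # index 0 unused; keys run 1..m

    def _check(self, key: int) -> None:
        if not 1 <= key <= self.m:
            raise KeyError(f"key {key} outside 1..{self.m}")

    def insert(self, key: int, element: Any) -> None:
        self._check(key)
        self.slots[key] = element

    def search(self, key: int) -> Optional[Any]:
        self._check(key)
        return self.slots[key]  # a single array access: O(1)

    def delete(self, key: int) -> None:
        self._check(key)
        self.slots[key] = None


T = DirectAddressTable(m=100)
T.insert(42, "element with key 42")
print(T.search(42), T.search(7))
```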

There are two constraints here:

1. the keys must be unique, and
2. the range of the key must be severely bounded.

If the keys are not unique, then we can simply construct a set of m lists and store the heads of these lists in the direct address table. The time to find an element matching an input key will still be O(1).

However, if each element of the collection has some other distinguishing feature (other than its key), and if the maximum number of duplicates is n_dupmax, then searching for a specific element is O(n_dupmax). If duplicates are the exception rather than the rule, then n_dupmax is much smaller than n and a direct address table will provide good performance. But if n_dupmax approaches n, then the time to find a specific element is O(n) and a tree structure will be more efficient.

The range of the key determines the size of the direct address table and may be too large to be practical. For instance, it's not likely that you'll be able to use a direct address table to store elements which have arbitrary 32-bit integers as their keys for a few years yet!

Direct addressing is easily generalised to the case where there is a function, h(k) => (1,m), which maps each value of the key, k, to the range (1,m). In this case, we place the element in T[h(k)] rather than T[k] and we can search in O(1) time as before.

8.3.2 Mapping functions

The direct address approach requires that the function, h(k), is a one-to-one mapping from each k to integers in (1,m). Such a function is known as a perfect hashing function: it maps each key to a distinct integer within some manageable range and enables us to trivially build an O(1) search time table.

Unfortunately, finding a perfect hashing function is not always possible. Let's say that we can find a hash function, h(k), which maps most of the keys onto unique integers, but maps a small number of keys onto the same integer. If the number of collisions (cases where multiple keys map onto the same integer) is sufficiently small, then hash tables work quite well and give O(1) search times.

Handling the collisions

In the small number of cases where multiple keys map to the same integer, elements with different keys may be stored in the same "slot" of the hash table. It is clear that when the hash function is used to locate a potential match, it will be necessary to compare the key of that element with the search key. But there may be more than one element which should be stored in a single slot of the table. Various techniques are used to manage this problem:

1. chaining,
2. overflow areas,
3. re-hashing,
4. using neighbouring slots (linear probing),
5. quadratic probing,
6. random probing, ...

Chaining

One simple scheme is to chain all collisions in lists attached to the appropriate slot. This allows an unlimited number of collisions to be handled and doesn't require a priori knowledge of how many elements are contained in the collection. The tradeoff is the same as with linked lists versus array implementations of collections: linked list overhead in space and, to a lesser extent, in time.

Re-hashing

Re-hashing schemes use a second hashing operation when there is a collision. If there is a further collision, we re-hash until an empty "slot" in the table is found.

The re-hashing function can either be a new function or a re-application of the original one. As long as the functions are applied to a key in the same order, a sought key can always be located.

Linear probing

One of the simplest re-hashing functions is +1 (or -1), i.e., on a collision, look in the neighbouring slot in the table. It calculates the new address extremely quickly and may be extremely efficient on a modern RISC processor due to efficient cache utilisation (cf. the discussion of linked list efficiency).

The animation gives you a practical demonstration of the effect of linear probing: it also implements a quadratic re-hash function so that you can compare the difference.

[Figure: h(j) = h(k), so the next hash function, h1, is used. A second collision occurs, so h2 is used.]

Clustering

Linear probing is subject to a clustering phenomenon. Re-hashes from one location occupy a block of slots in the table which "grows" towards slots to which other keys hash. This exacerbates the collision problem, and the number of re-hashes can become large.

Quadratic Probing

Better behaviour is usually obtained with quadratic probing, where the secondary hash function depends on the re-hash index:

address = h(key) + c i^2

on the i-th re-hash. (A more complex function of i may also be used.) Since keys which are mapped to the same value by the primary hash function follow the same sequence of addresses, quadratic probing shows secondary clustering. However, secondary clustering is not nearly as severe as the clustering shown by linear probes.

Re-hashing schemes use the originally allocated table space and thus avoid linked list overhead, but require advance knowledge of the number of items to be stored.

However, the collision elements are stored in slots to which other key values map directly, thus the potential for multiple collisions increases as the table becomes full.
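A rough experiment on the clustering claim, under stated assumptions: primary hash values are drawn uniformly at random (standing in for a good hash function), the table has m = 1024 slots and receives 900 keys, and the quadratic variant uses the offset i(i+1)/2, one of the "more complex functions of i" the text allows, chosen because with a power-of-two table it is guaranteed to reach every slot. The function name is illustrative.

```python
import random


def average_probes(primary_hashes, m: int, step) -> float:
    """Insert keys with the given primary hash values into an m-slot table.
    The i-th probe for hash h examines slot (h + step(i)) % m.
    Returns the mean number of slots examined per insertion."""
    occupied = [False] * m
    total = 0
    for h in primary_hashes:
        for i in range(m):
            j = (h + step(i)) % m
            total += 1
            if not occupied[j]:
                occupied[j] = True
                break
        else:
            raise RuntimeError("no free slot found")
    return total / len(primary_hashes)


m = 1024  # a power of two, so the i(i+1)/2 sequence visits every slot
rng = random.Random(1)
hashes = [rng.randrange(m) for _ in range(900)]  # load factor about 0.88

linear = average_probes(hashes, m, lambda i: i)
quadratic = average_probes(hashes, m, lambda i: i * (i + 1) // 2)
print(round(linear, 2), round(quadratic, 2))
```

At this load, linear probing should need noticeably more probes per insertion, reflecting its long clustered runs.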

Overflow area

Another scheme will divide the pre-allocated table into two sections: the primary area to which keys are mapped and an area for collisions, normally termed the overflow area.

When a collision occurs, a slot in the overflow area is used for the new element and a link from the primary slot is established, as in a chained system. This is essentially the same as chaining, except that the overflow area is pre-allocated and thus possibly faster to access. As with re-hashing, the maximum number of elements must be known in advance, but in this case, two parameters must be estimated: the optimum size of the primary and overflow areas.

Of course, it is possible to design systems with multiple overflow tables, or with a mechanism for handling overflow out of the overflow area, which provide flexibility without losing the advantages of the overflow scheme.
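A sketch of the overflow-area scheme, assuming Python's built-in `hash`, overflow slots handed out in order, and keys inserted at most once (no deletion). Entries are `[key, value, next]`, where `next` indexes the overflow area; the class name and sizes are illustrative.

```python
class OverflowHashTable:
    """Pre-allocated primary area plus overflow area. A colliding element takes
    the next free overflow slot and is linked from its primary slot."""

    def __init__(self, primary_size: int, overflow_size: int):
        self.primary = [None] * primary_size
        self.overflow = [None] * overflow_size
        self.next_free = 0  # next unused overflow slot

    def insert(self, key, value) -> None:
        slot = hash(key) % len(self.primary)
        head = self.primary[slot]
        if head is None:
            self.primary[slot] = [key, value, None]
            return
        if self.next_free == len(self.overflow):
            raise RuntimeError("overflow area is full")
        new = self.next_free
        self.next_free += 1
        # Link the new element in right after the primary entry.
        self.overflow[new] = [key, value, head[2]]
        head[2] = new

    def search(self, key):
        entry = self.primary[hash(key) % len(self.primary)]
        while entry is not None:
            if entry[0] == key:
                return entry[1]
            entry = None if entry[2] is None else self.overflow[entry[2]]
        return None


t = OverflowHashTable(primary_size=5, overflow_size=4)
for key in (3, 8, 13, 4):  # 3, 8 and 13 all hash to primary slot 3
    t.insert(key, key * 10)
print(t.search(13), t.search(8), t.search(4), t.next_free)  # 130 80 40 2
```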

Summary: Hash Table Organization

| Organization | Advantages | Disadvantages |
|---|---|---|
| Chaining | Unlimited number of elements; unlimited number of collisions | Overhead of multiple linked lists |
| Re-hashing | Fast re-hashing; fast access through use of main table space | Maximum number of elements must be known; multiple collisions may become probable |
| Overflow area | Fast access; collisions don't use primary table space | Two parameters which govern performance need to be estimated |