Hashing 2

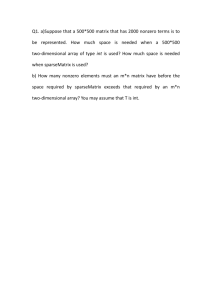

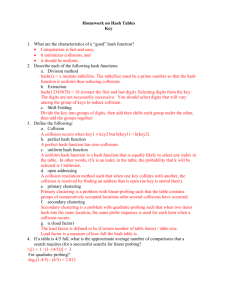

Hashing Part One: Reaching for the Perfect Search

Most of this material stolen from "File Structures" by Folk, Zoellick and Riccardi.

Text File v. Binary File

Unordered Binary File
◦ average search takes N/2 file operations

Ordered Binary File
◦ average search takes log2(N) file operations
◦ but keeping the data file sorted is costly

Indexed File
◦ average search takes 3 or 4 file operations

Perfect Search
◦ search time = 1 file read

Definition: Hash Function
◦ a magic black box that converts a key to the file address of that record

[Diagram: the key "Dannelly" goes into the hash function, which yields the address of the "Dannelly" record (Name, Field1, Field2) in the file.]

Example Hashing Function:
◦ Key = Customer's Name
◦ Function = ASCII code of 1st letter x ASCII code of 2nd letter, then use the rightmost 3 digits.

| Name    | ASCII   | Product | RRN |
|---------|---------|---------|-----|
| BALL    | 66 x 65 | 4290    | 290 |
| LOWELL  | 76 x 79 | 6004    | 004 |
| TREE    | 84 x 82 | 6888    | 888 |
| OLIVIER | 79 x 76 | 6004    | 004 |

Definition: Collision
◦ When two or more keys hash to the same address (LOWELL and OLIVIER both hash to 004 above).

Minimizing the Number of Collisions:
1) pick a hash function that avoids collisions, i.e. one with a seemingly random distribution
◦ e.g. our previous function is terrible because letters like "E" and "L" occur frequently, while no one's name starts with "XZ".
2) spread out the records
◦ 300 records in a file with space for 1000 records will have many fewer collisions than 300 records in a file with a capacity of 400

Our objective is to muddle the relationship between the keys and the addresses.

Good Ideas:
◦ use both addition and multiplication
◦ avoid integer overflow, so mix in some subtraction and division too
◦ divide by prime numbers

A better hash function:
1. pad the name with spaces
2. fold and add pairs of letters
3. mod by a prime after each add
4. divide the sum by the file size and keep the remainder

Why 19,937?
◦ 19,937 is a prime small enough to ensure that the next add will not cause integer overflow.

Example: Key = "LOWELL" and file size = 1,000

| Pair  | L O   | W E   | L L   | (2 spaces) | (2 spaces) | (2 spaces) |
|-------|-------|-------|-------|------------|------------|------------|
| ASCII | 76 79 | 87 69 | 76 76 | 32 32      | 32 32      | 32 32      |

| Add                    | Mod by prime    | Result |
|------------------------|-----------------|--------|
| 7679 + 8769 = 16,448   | 16,448 % 19,937 | 16,448 |
| 16,448 + 7676 = 24,124 | 24,124 % 19,937 | 4,187  |
| 4,187 + 3232 = 7,419   | 7,419 % 19,937  | 7,419  |
| 7,419 + 3232 = 10,651  | 10,651 % 19,937 | 10,651 |
| 10,651 + 3232 = 13,883 | 13,883 % 19,937 | 13,883 |

13,883 % 1,000 = 883

The simplest hash function for a string is "add up all the characters, then divide by filesize" (keeping the remainder).

For example,
◦ filesize = 100 records
◦ key = "pen"
◦ address = ( 16 + 5 + 14 ) % 100 = 35

1. Find another word with the same mapping
2. Give an improvement to this hash function

| a | b | c | d | e | f | g | h | i | j  | k  | l  | m  |
|---|---|---|---|---|---|---|---|---|----|----|----|----|
| 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 |

| n  | o  | p  | q  | r  | s  | t  | u  | v  | w  | x  | y  | z  |
|----|----|----|----|----|----|----|----|----|----|----|----|----|
| 14 | 15 | 16 | 17 | 18 | 19 | 20 | 21 | 22 | 23 | 24 | 25 | 26 |

The optimal hash function for a set of keys:
1. will evenly distribute the keys across the address space, and
2. gives every address an equal chance of being used.

Uniform distribution is nearly impossible.

[Figure: "Good Mapping" and "Poor Mapping", each showing keys A to E mapped onto addresses 1 to 10.]

Suppose we have a file of 10,000 records. Finding a hash function that will take our 10,000 keys and yield 10,000 different addresses is essentially impossible.

So, our 10,000 records are stored in a larger file. How much larger than 10,000?
◦ 10,500?
◦ 12,000?
◦ 50,000?

It Depends
◦ a larger datafile means more empty (wasted) space but fewer collisions

Even with a very good hash function, collisions will occur. We must have an algorithm to locate alternative addresses.

Example,
◦ Suppose "dog" and "cat" both hash to location 25.
◦ If we add "dog" first, then dog goes in location 25.
◦ If we later add "cat", where does it go?
◦ Same idea for searching. If cat is supposed to be at 25 but dog is there, where do we look next?

"Linear Probing" or "Progressive Overflow"
◦ When a key maps to an address already in use, just try the next one. If that one is in use, try the next one. And so on.
◦ Easy to implement.
◦ Usually works well, especially with a nondense file and a good hash function.
◦ Can lead to clumps of records.

Assume these keys map to these addresses:
1. adams = 20
2. bates = 22
3. cole = 20
4. dean = 21
5. evans = 23

Where will each record be placed if inserted in that order?
Using linear probing, how many file accesses for each?

How many collisions are acceptable?
◦ Analysis: packing density v. probing length

Is there a collision resolution algorithm better than linear probing?
◦ buckets
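
The fold-and-add hash and the linear probing exercise above can be sketched in Python. This is a minimal illustration, not the textbook's code. The function names, the 12-character padding width (inferred from the six letter pairs in the "LOWELL" example), the 30-slot in-memory list standing in for the file, and the wrap-around at the end of the file are all assumptions. Keys are assumed to be uppercase ASCII, so each character code has two digits.

```python
def fold_and_add_hash(key, file_size, pad_to=12, prime=19937):
    """Pad the key, fold and add pairs of letters, mod by a prime after each add."""
    # Assumption: keys are uppercase ASCII and are padded/truncated to pad_to chars.
    padded = key.ljust(pad_to)[:pad_to]
    total = 0
    for i in range(0, pad_to, 2):
        # Fold a pair of letters into one number, e.g. "LO" -> 76, 79 -> 7679.
        pair = ord(padded[i]) * 100 + ord(padded[i + 1])
        total = (total + pair) % prime
    # Final step: remainder after dividing by the file size.
    return total % file_size


def linear_probe_insert(table, key, home):
    """Store key at its home address or the next free slot.

    Returns (address, accesses), where accesses counts the slots examined.
    """
    size = len(table)
    for step in range(size):
        address = (home + step) % size  # assumption: wrap around at the end
        if table[address] is None:
            table[address] = key
            return address, step + 1
    raise RuntimeError("file is full")


lowell_address = fold_and_add_hash("LOWELL", 1000)
print(lowell_address)  # 883

table = [None] * 30  # hypothetical file with 30 record slots
homes = [("adams", 20), ("bates", 22), ("cole", 20), ("dean", 21), ("evans", 23)]
placements = {name: linear_probe_insert(table, name, home) for name, home in homes}
for name, (address, accesses) in placements.items():
    print(name, address, accesses)
# adams 20 1
# bates 22 1
# cole 21 2
# dean 23 3
# evans 24 2
```

In this run, dean needs three accesses even though its home address was empty when adams and bates were inserted, because cole had already overflowed into slot 21. That is the clumping effect the slide mentions.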