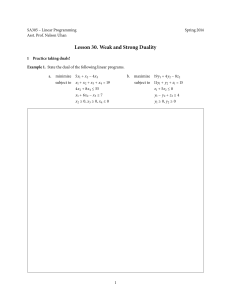

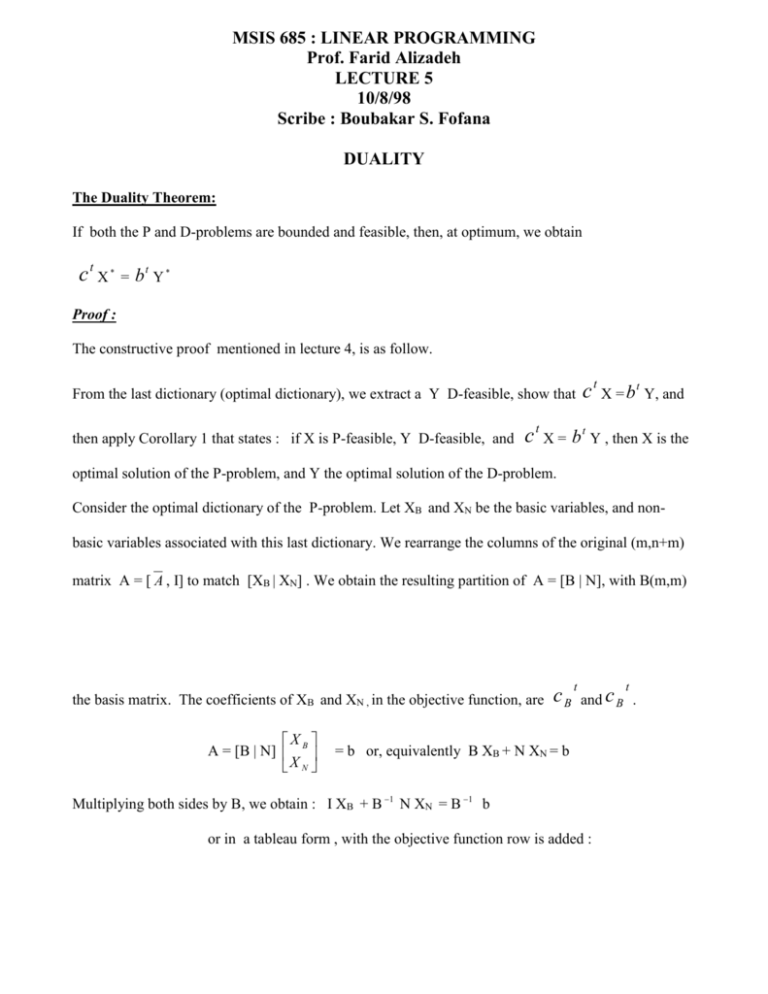

MSIS 685 : LINEAR PROGRAMMING


Prof. Farid Alizadeh

LECTURE 5

10/8/98

Scribe : Boubakar S. Fofana

DUALITY

The Duality Theorem:

If both the P- and D-problems are bounded and feasible, then, at the optimum, we obtain

$$c^t X^* = b^t Y^*.$$
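As a small numerical illustration (the LP is an assumption chosen for this purpose, not taken from the lecture), consider the P-problem $\max\ 3x_1 + 5x_2$ s.t. $x_1 \le 4$, $2x_2 \le 12$, $3x_1 + 2x_2 \le 18$, $X \ge 0$, and its D-problem $\min\ 4y_1 + 12y_2 + 18y_3$ s.t. $y_1 + 3y_3 \ge 3$, $2y_2 + 2y_3 \ge 5$, $Y \ge 0$. The points $X^* = (2, 6)$ and $Y^* = (0, 3/2, 1)$ are feasible, and

$$c^t X^* = 3(2) + 5(6) = 36 = 4(0) + 12\left(\tfrac{3}{2}\right) + 18(1) = b^t Y^*.$$

Because $b^t Y \ge c^t X$ for every feasible pair (weak duality), this equality certifies that both points are optimal.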

Proof :

The constructive proof mentioned in Lecture 4 is as follows.

From the last (optimal) dictionary, we extract a D-feasible $Y$, show that $c^t X = b^t Y$, and then apply Corollary 1, which states: if $X$ is P-feasible, $Y$ is D-feasible, and $c^t X = b^t Y$, then $X$ is an optimal solution of the P-problem and $Y$ is an optimal solution of the D-problem.

Consider the optimal dictionary of the P-problem. Let $X_B$ and $X_N$ be the basic and nonbasic variables associated with this last dictionary. We rearrange the columns of the original $m \times (n+m)$ matrix $[A \mid I]$, still denoted $A$, to match $[X_B \mid X_N]$. This gives the partition $A = [B \mid N]$, where the $m \times m$ matrix $B$ is the basis matrix. The coefficients of $X_B$ and $X_N$ in the objective function are $c_B^t$ and $c_N^t$. The constraints then read

$$[\,B \mid N\,] \begin{bmatrix} X_B \\ X_N \end{bmatrix} = b \quad \text{or, equivalently,} \quad B X_B + N X_N = b.$$

Multiplying both sides by $B^{-1}$, we obtain

$$I X_B + B^{-1} N X_N = B^{-1} b.$$

or, in tableau form with the objective-function row added :


$$\begin{array}{c|c|c} X_B & X_N & \\ \hline I & B^{-1} N & B^{-1} b \\ \hline c_B^t & c_N^t & 0 \end{array}$$

In a dictionary the objective-row coefficients of the basic variables are always zero, so we add $-c_B^t$ times row 1 to row 2 :

$$\begin{array}{c|c|c} X_B & X_N & \\ \hline I & B^{-1} N & B^{-1} b \\ \hline 0 & c_N^t - c_B^t B^{-1} N & -c_B^t B^{-1} b \end{array}$$
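As a numerical illustration (an assumed LP, not from the lecture: $\max\ 3x_1 + 5x_2$ s.t. $x_1 \le 4$, $2x_2 \le 12$, $3x_1 + 2x_2 \le 18$, $X \ge 0$, with slacks $s_1, s_2, s_3$), take $X_B = (x_1, x_2, s_1)$ and $X_N = (s_2, s_3)$, so that

$$B = \begin{pmatrix} 1 & 0 & 1 \\ 0 & 2 & 0 \\ 3 & 2 & 0 \end{pmatrix}, \quad N = \begin{pmatrix} 0 & 0 \\ 1 & 0 \\ 0 & 1 \end{pmatrix}, \quad c_B^t = (3, 5, 0), \quad c_N^t = (0, 0).$$

The tableau above then works out to

$$\begin{array}{c|c|c} X_B & X_N & \\ \hline I & \begin{pmatrix} -1/3 & 1/3 \\ 1/2 & 0 \\ 1/3 & -1/3 \end{pmatrix} & \begin{pmatrix} 2 \\ 6 \\ 2 \end{pmatrix} \\ \hline 0 & (-3/2,\ -1) & -36 \end{array}$$

so $B^{-1} b = (2, 6, 2)$ gives $x_1 = 2$, $x_2 = 6$, $s_1 = 2$, and the bottom-right entry is minus the objective value $36$.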

The algorithm stopping condition is :

$$c_N^t - c_B^t B^{-1} N \le 0$$

(coefficients of the nonbasic variables in the objective function).
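The stopping test can be checked numerically. The sketch below is a minimal illustration in pure Python with exact fractions; the LP ($\max\ 3x_1 + 5x_2$ s.t. $x_1 \le 4$, $2x_2 \le 12$, $3x_1 + 2x_2 \le 18$, $X \ge 0$), the hand-picked basis $\{x_1, x_2, s_1\}$, and the helper `solve` are illustrative assumptions, not part of the lecture. It forms $B$ from the chosen columns of $[A \mid I]$, computes $B^{-1} b$ and the objective-row coefficients $c_N^t - c_B^t B^{-1} N$, and applies the test.

```python
from fractions import Fraction


def solve(M, rhs):
    """Solve M x = rhs exactly by Gauss-Jordan elimination (M square, nonsingular)."""
    n = len(M)
    aug = [[Fraction(v) for v in row] + [Fraction(r)] for row, r in zip(M, rhs)]
    for col in range(n):
        # Move a row with a nonzero entry in this column into pivot position.
        piv = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[piv] = aug[piv], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        # Clear this column in every other row.
        for r in range(n):
            if r != col and aug[r][col] != 0:
                f = aug[r][col]
                aug[r] = [a - f * v for a, v in zip(aug[r], aug[col])]
    return [row[-1] for row in aug]


# Illustrative LP (assumption): columns x1, x2, s1, s2, s3 of [A | I], costs [c, 0].
A_ext = [[1, 0, 1, 0, 0],
         [0, 2, 0, 1, 0],
         [3, 2, 0, 0, 1]]
b = [4, 12, 18]
c_ext = [3, 5, 0, 0, 0]
basis = [0, 1, 2]       # x1, x2, s1 (hypothetical final basis)
nonbasis = [3, 4]       # s2, s3

B = [[row[j] for j in basis] for row in A_ext]
c_B = [c_ext[j] for j in basis]

x_B = solve(B, b)                              # B^{-1} b
w = solve([list(r) for r in zip(*B)], c_B)     # w^t = c_B^t B^{-1}, from B^t w = c_B
# Objective-row coefficient of each nonbasic column: c_j - c_B^t B^{-1} a_j.
reduced = [c_ext[j] - sum(w[i] * A_ext[i][j] for i in range(len(b))) for j in nonbasis]
objective = sum(cb * xb for cb, xb in zip(c_B, x_B))   # c_B^t B^{-1} b
stop = all(r <= 0 for r in reduced)                     # stopping condition

print("X_B =", [str(v) for v in x_B])
print("c_N^t - c_B^t B^-1 N =", [str(r) for r in reduced], "stop:", stop)
print("objective =", objective)
```

Solving $B^t w = c_B$ instead of inverting $B$ is one common design choice; for this data the test passes with coefficients $(-3/2, -1)$ and objective value $36$.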

Let $Y^{*t} = c_B^t B^{-1}$. To establish the theorem, we now prove that

(1) $Y^*$ is D-feasible, and

(2) $c^t X^* = b^t Y^*$,

and then invoke the corollary stated above.

(2) is straightforward :

$$b^t Y^* = Y^{*t} b = (c_B^t B^{-1})\, b = c_B^t (B^{-1} b) = c_B^t X_B = c_B^t X_B + c_N^t X_N = [\,c_B^t \mid c_N^t\,] \begin{bmatrix} X_B \\ X_N \end{bmatrix} = c^t X^*,$$

using the associativity of matrix multiplication and $X_N = 0$ (nonbasic variables).
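Identity (2) can be checked on a small illustrative LP (an assumption, not from the lecture): $\max\ 3x_1 + 5x_2$ s.t. $x_1 \le 4$, $2x_2 \le 12$, $3x_1 + 2x_2 \le 18$, $X \ge 0$, whose optimal basis is assumed to be $\{x_1, x_2, s_1\}$. The sketch builds $X^*$ with $X_N = 0$, computes $Y^*$ by solving $B^t Y^* = c_B$ (equivalent to $Y^{*t} = c_B^t B^{-1}$), and compares $b^t Y^*$ with $c^t X^*$; the helper `solve` is hypothetical.

```python
from fractions import Fraction


def solve(M, rhs):
    """Solve M x = rhs exactly by Gauss-Jordan elimination (M square, nonsingular)."""
    n = len(M)
    aug = [[Fraction(v) for v in row] + [Fraction(r)] for row, r in zip(M, rhs)]
    for col in range(n):
        # Move a row with a nonzero entry in this column into pivot position.
        piv = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[piv] = aug[piv], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        # Clear this column in every other row.
        for r in range(n):
            if r != col and aug[r][col] != 0:
                f = aug[r][col]
                aug[r] = [a - f * v for a, v in zip(aug[r], aug[col])]
    return [row[-1] for row in aug]


# Illustrative LP (assumption): columns x1, x2, s1, s2, s3 of [A | I], costs [c, 0].
A_ext = [[1, 0, 1, 0, 0],
         [0, 2, 0, 1, 0],
         [3, 2, 0, 0, 1]]
b = [4, 12, 18]
c_ext = [3, 5, 0, 0, 0]
basis = [0, 1, 2]   # x1, x2, s1 (assumed optimal basis)

B = [[row[j] for j in basis] for row in A_ext]
c_B = [c_ext[j] for j in basis]

# X*: basic part X_B = B^{-1} b, nonbasic part X_N = 0.
x_star = [Fraction(0)] * len(c_ext)
for j, v in zip(basis, solve(B, b)):
    x_star[j] = v

# Y*^t = c_B^t B^{-1}, obtained by solving B^t Y* = c_B.
y_star = solve([list(r) for r in zip(*B)], c_B)

primal_value = sum(cj * xj for cj, xj in zip(c_ext, x_star))   # c^t X*
dual_value = sum(bi * yi for bi, yi in zip(b, y_star))          # b^t Y*
print(primal_value, dual_value, primal_value == dual_value)
```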


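(1) $Y^*$ is D-feasible if and only if $A^t Y^* \ge c$ and $I\,Y^* \ge 0$, that is,

$$[A \mid I]^t\, Y^* \ge \begin{bmatrix} c \\ 0 \end{bmatrix}.$$

With $A$ again denoting the extended matrix $[A \mid I]$ and $c$ the extended cost vector $[c^t \mid 0]^t$, this reads $A^t Y^* \ge c$, or equivalently, by transposition of both sides, $Y^{*t} A \ge c^t$.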

To prove the last inequality, we substitute the expressions for $Y^{*t}$, $A$, and $c^t$ :

$$Y^{*t} A = c_B^t B^{-1} [\,B \mid N\,] = [\,c_B^t B^{-1} B \mid c_B^t B^{-1} N\,] = [\,c_B^t \mid c_B^t B^{-1} N\,],$$

$$Y^{*t} A - c^t = [\,c_B^t \mid c_B^t B^{-1} N\,] - [\,c_B^t \mid c_N^t\,] = [\,0 \mid c_B^t B^{-1} N - c_N^t\,] \ge 0,$$

by virtue of the algorithm stopping condition :

$$c_N^t - c_B^t B^{-1} N \le 0.$$
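The two blocks of $Y^{*t} A - c^t$ can be inspected numerically. In this sketch the LP ($\max\ 3x_1 + 5x_2$ s.t. $x_1 \le 4$, $2x_2 \le 12$, $3x_1 + 2x_2 \le 18$, $X \ge 0$), its optimal basis $\{x_1, x_2, s_1\}$, and the value $Y^* = (0, 3/2, 1)$ are illustrative assumptions. The code first confirms $Y^{*t} B = c_B^t$, which for a nonsingular $B$ is the same as $Y^{*t} = c_B^t B^{-1}$, then checks that the basic block of $Y^{*t} [A \mid I] - [c^t \mid 0]$ is zero and that the whole row is nonnegative.

```python
from fractions import Fraction

# Illustrative LP (assumption): columns x1, x2, s1, s2, s3 of [A | I], costs [c, 0].
A_ext = [[1, 0, 1, 0, 0],
         [0, 2, 0, 1, 0],
         [3, 2, 0, 0, 1]]
c_ext = [3, 5, 0, 0, 0]
basis = [0, 1, 2]   # x1, x2, s1 (assumed optimal basis)
y_star = [Fraction(0), Fraction(3, 2), Fraction(1)]   # assumed candidate Y*


def row_times(y, M):
    """Return the row vector y^t M."""
    return [sum(y[i] * M[i][j] for i in range(len(M))) for j in range(len(M[0]))]


yA = row_times(y_star, A_ext)                      # Y*^t [A | I]
# Y*^t B = c_B^t, i.e. Y*^t = c_B^t B^{-1} because B is nonsingular.
matches_basis = all(yA[j] == c_ext[j] for j in basis)

diff = [v - cj for v, cj in zip(yA, c_ext)]        # Y*^t [A | I] - [c^t | 0]
basic_block = [diff[j] for j in basis]              # expected all zero
nonbasic_block = [diff[j] for j in range(len(c_ext)) if j not in basis]
dual_feasible = all(d >= 0 for d in diff)          # A^t Y* >= c and Y* >= 0

print(matches_basis, [str(d) for d in basic_block],
      [str(d) for d in nonbasic_block], dual_feasible)
```

The slack columns of the difference reproduce $Y^*$ itself, so their nonnegativity is exactly $Y^* \ge 0$; the nonbasic block equals minus the objective-row coefficients of the final tableau.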

The Complementary Slackness Theorem:

Consider the P- and D-problems in standard form :

Primal Problem :

$$\max\ c^t X \quad \text{s.t.} \quad AX + S = b, \quad X \ge 0,\ S \ge 0$$

Dual Problem :

$$\min\ b^t Y \quad \text{s.t.} \quad A^t Y - Z = c, \quad Y \ge 0,\ Z \ge 0$$

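Suppose $\{X, S\}$ is P-feasible and $\{Y, Z\}$ is D-feasible. Then $\{X, S\}$ and $\{Y, Z\}$ are optimal if and only if

$$Z_i X_i = 0, \quad i = 1, 2, \ldots, n, \qquad S_j Y_j = 0, \quad j = 1, 2, \ldots, m.$$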

Proof :

Since $X$ and $Y$ are both feasible,

$$b^t Y \ge c^t X \quad \text{(Weak Duality Lemma)}.$$

The optimum is reached if and only if $b^t Y - c^t X = 0$ (the duality gap is reduced to zero).

Substituting $b = AX + S$ and $c = A^t Y - Z$, the optimality condition becomes, after simplification,

$$(AX + S)^t Y - (A^t Y - Z)^t X = S^t Y + Z^t X = \sum_{j=1}^{m} S_j Y_j + \sum_{i=1}^{n} Z_i X_i = 0.$$

Since each of these $n + m$ terms is nonnegative (the variables are feasible by hypothesis), they add up to zero if and only if

$$Z_i X_i = 0, \quad i = 1, 2, \ldots, n, \qquad S_j Y_j = 0, \quad j = 1, 2, \ldots, m.$$
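The theorem translates directly into a check on a candidate pair. A minimal sketch, assuming an illustrative LP not taken from the lecture ($A$, $b$, $c$ below) and a hypothetical helper name `check_complementary_slackness`: it recovers $S = b - AX$ and $Z = A^t Y - c$, tests feasibility and the products $S_j Y_j$, $Z_i X_i$, and confirms the identity $b^t Y - c^t X = S^t Y + Z^t X$ used in the proof.

```python
from fractions import Fraction


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def check_complementary_slackness(A, b, c, x, y):
    """Primal: max c^t X, AX + S = b, X, S >= 0.  Dual: min b^t Y, A^t Y - Z = c, Y, Z >= 0.

    Returns (S, Z, feasible, complementary)."""
    m, n = len(A), len(A[0])
    S = [b[j] - sum(A[j][i] * x[i] for i in range(n)) for j in range(m)]   # S = b - AX
    Z = [sum(A[j][i] * y[j] for j in range(m)) - c[i] for i in range(n)]   # Z = A^t Y - c
    feasible = all(v >= 0 for v in [*x, *S, *y, *Z])
    complementary = (all(s * yj == 0 for s, yj in zip(S, y))
                     and all(z * xi == 0 for z, xi in zip(Z, x)))
    return S, Z, feasible, complementary


# Illustrative data (assumption, not from the lecture).
A = [[1, 0], [0, 2], [3, 2]]
b = [4, 12, 18]
c = [3, 5]
y = [Fraction(0), Fraction(3, 2), Fraction(1)]

for x in ([2, 6], [0, 0]):
    S, Z, feasible, complementary = check_complementary_slackness(A, b, c, x, y)
    # The duality gap equals S^t Y + Z^t X, as in the proof.
    gap = dot(b, y) - dot(c, x)
    assert gap == dot(S, y) + dot(Z, x)
    print(x, "feasible:", feasible, "complementary:", complementary, "gap:", gap)
```

Both candidates are feasible, but only $X = (2, 6)$ satisfies the products and closes the gap; $X = (0, 0)$ leaves a gap of $36 = S^t Y$.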

Remarks :

(1) The feasibility equations ($AX + S = b$ and $A^t Y - Z = c$) together with the complementary slackness equations form a system of $2(n + m)$ equations in the $2(n + m)$ unknowns $X, S, Y, Z$, not all of them linear. This system can be solved numerically, which is the approach taken by another algorithm.

(2) If the dual optimum solution is not unique, then the primal optimum solution is degenerate.

If the primal optimum solution is not unique, then the dual optimum solution is degenerate.

(3) The complementary slackness equations are called strict if, for every $i$, $Z_i X_i = 0$ holds with either ($Z_i = 0$ and $X_i > 0$) or ($Z_i > 0$ and $X_i = 0$), but never with $Z_i = X_i = 0$. The same applies to $S_j Y_j = 0$.

Also, the P- or D-problem is degenerate if not all of these equations are strict.
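Strictness is a per-pair test: among nonnegative $u, v$ with $uv = 0$, exactly one must vanish. A minimal sketch, assuming illustrative optimal values from a small LP not taken from the lecture ($X = (2, 6)$, $S = (2, 0, 0)$, $Y = (0, 3/2, 1)$, $Z = (0, 0)$); the function name `is_strict` is hypothetical.

```python
from fractions import Fraction


def is_strict(pairs):
    """True when every complementary pair (u, v) has u * v == 0 with exactly one of u, v zero.

    Assumes u, v >= 0, as for feasible primal and dual variables."""
    return all(u * v == 0 and (u > 0 or v > 0) for u, v in pairs)


# Illustrative optimal pair (assumption): X = (2, 6), S = (2, 0, 0), Y = (0, 3/2, 1), Z = (0, 0).
X, S = [2, 6], [2, 0, 0]
Y, Z = [0, Fraction(3, 2), 1], [0, 0]
pairs = list(zip(Z, X)) + list(zip(S, Y))
print(is_strict(pairs))                 # prints True: every pair has exactly one zero
print(is_strict([(0, 0), (1, 0)]))      # prints False: the pair (0, 0) is not strict
```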

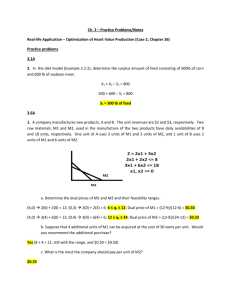

Correspondence between the Primal and Dual Problems:

The following table shows the correspondence between the primal problem and its dual with respect to the signs of the variables and the directions of the constraints.


| PRIMAL (Minimization) | DUAL (Maximization) |
|---|---|
| $X_i \ge 0$ | $(A^t Y)_i \le c_i$ |
| $X_i \le 0$ | $(A^t Y)_i \ge c_i$ |
| $X_i$ free | $(A^t Y)_i = c_i$ |
| $(AX)_j \ge b_j$ | $Y_j \ge 0$ |
| $(AX)_j \le b_j$ | $Y_j \le 0$ |
| $(AX)_j = b_j$ | $Y_j$ free |

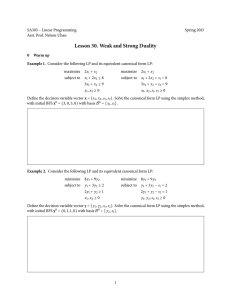
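The table can be applied mechanically. A minimal sketch, assuming a hypothetical string encoding (`'>=0'`, `'<=0'`, `'free'` for variable signs and `'>='`, `'<='`, `'='` for constraint directions) and an illustrative function name `dual_of_min`: each dual constraint comes from a column of $A$, its direction from the sign of the matching primal variable, and each dual variable's sign from the direction of the matching primal constraint.

```python
def dual_of_min(A, b, c, var_signs, con_senses):
    """Dual of  min c^t X  s.t. row j of A X (con_senses[j]) b_j,  X_i with sign var_signs[i].

    var_signs[i] is '>=0', '<=0' or 'free'; con_senses[j] is '>=', '<=' or '='.
    Returns the data of  max b^t Y  s.t. (A^t Y)_i (senses[i]) c_i,  Y_j with sign y_signs[j]."""
    m, n = len(A), len(A[0])
    # Dual constraint i is built from column i of A (transposition).
    rows = [[A[j][i] for j in range(m)] for i in range(n)]
    # Sign of primal variable X_i -> direction of dual constraint i.
    sense_for_sign = {'>=0': '<=', '<=0': '>=', 'free': '='}
    # Direction of primal constraint j -> sign of dual variable Y_j.
    sign_for_sense = {'>=': '>=0', '<=': '<=0', '=': 'free'}
    return {
        "objective": list(b),
        "rows": rows,
        "senses": [sense_for_sign[s] for s in var_signs],
        "rhs": list(c),
        "y_signs": [sign_for_sense[s] for s in con_senses],
    }


# Standard pair: min c^t X, A X >= b, X >= 0  ->  max b^t Y, A^t Y <= c, Y >= 0.
d = dual_of_min([[1, 2], [3, 4]], [5, 6], [7, 8], ['>=0', '>=0'], ['>=', '>='])
print(d)
```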

Example : Write the dual of the minimization problem :

Min

2x 1 + 3x 2 -4 x 3 + x 4

s.t.

(p 1 )

x1 + x2 - x3 + x4 = 5

(p 2 ) 2x 1 - x 2 - 3x 3 + 2x 4 6

(p 3 ) -x 1 +2 x 2 + 4x 3 - x 4 -3

x 1 0, x 2 free , x 3 0, x 4 0

The signs of the primal variables x1, x2, x3, and x4, which correspond to the dual constraints (d1), (d2), (d3), and (d4), determine the directions of these constraints :

x1 0

(d 1 ) with

x 2 free

(d 2 ) with =

x3 0

(d 3 ) with

x4 0

(d 4 ) with

The dual variables y1, y2, and y3 correspond to the primal constraints (p1), (p2), and (p3), whose directions determine the signs of these variables :

(p 1 ) with =

y 1 free

(p 2 ) with

y2 0

(p 3 ) with

y3 0

The complete dual problem can now be written :

Max 5y 1 + 6y 2 - 3y 3

s.t.

y 1 + 2y 2 - y 3 2

y 1 - y 2 +2 y 3 = 3

-y 1 - 3y 2 + 4y 3 -4

y 1 + 2y 2 - y 3 1

y 1 free, y 2 0, y 3 0
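The coefficient rows of the dual constraints are the columns of the primal constraint matrix, and the dual objective coefficients are the primal right-hand sides. The short sketch below recomputes them from the data of the example; it covers only the coefficients (the constraint directions and variable signs come from the correspondence table), and the helper `fmt` is illustrative.

```python
# Primal data of the example: rows (p1), (p2), (p3); columns x1..x4.
A = [[1, 1, -1, 1],
     [2, -1, -3, 2],
     [-1, 2, 4, -1]]
b = [5, 6, -3]          # right-hand sides -> dual objective coefficients
c = [2, 3, -4, 1]       # primal costs -> dual right-hand sides


def fmt(coeffs, name="y"):
    """Render a linear form such as 'y1 + 2y2 - y3'."""
    terms = []
    for k, a in enumerate(coeffs, start=1):
        if a == 0:
            continue
        sign = "-" if a < 0 else "+"
        mag = "" if abs(a) == 1 else str(abs(a))
        terms.append(f"{sign} {mag}{name}{k}")
    if not terms:
        return "0"
    text = " ".join(terms)
    return text[2:] if text.startswith("+") else "-" + text[2:]


# Dual constraint (d_i) uses column i of A.
dual_rows = [[A[j][i] for j in range(len(A))] for i in range(len(c))]
print("Max", fmt(b))
for i, (row, rhs) in enumerate(zip(dual_rows, c), start=1):
    print(f"(d{i})", fmt(row), "vs", rhs)
```

The third row comes out as $-y_1 - 3y_2 + 4y_3$, the coefficients of $x_3$ in (p1), (p2), (p3).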


……….