Notes 11 - Wharton Statistics Department

advertisement

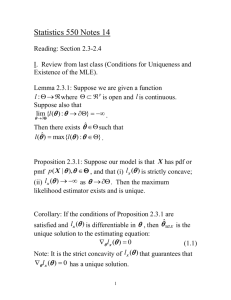

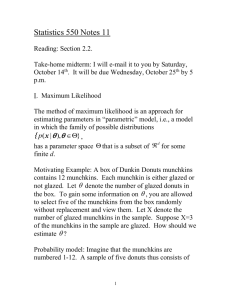

Statistics 550 Notes 11

Reading: Section 2.3

I. Invariance Property of the MLE

Consider the model that the data $X \sim p(x \mid \theta)$, $\theta \in \Theta$. For an invertible mapping $g(\theta)$, the model can be reparameterized as $X \sim p(x \mid \eta)$, $\eta \in g(\Theta)$, where $\eta = g(\theta)$.

Theorem 1: The invariance property of the MLE is that the MLE for $\eta$ in this reparameterized model is $g(\hat\theta_{MLE})$.

Proof: Since the mapping $g(\theta)$ is invertible, the inverse function $g^{-1}(\eta)$ is well defined, and the likelihood function of $\eta = g(\theta)$, written as a function of $\eta$, is given by

$$L^*_x(\eta) = p(x \mid g^{-1}(\eta)) = L_x(g^{-1}(\eta))$$

and

$$\sup_{\eta \in g(\Theta)} L^*_x(\eta) = \sup_{\eta \in g(\Theta)} L_x(g^{-1}(\eta)) = \sup_{\theta \in \Theta} L_x(\theta).$$

Thus, the maximum of $L^*_x(\eta)$ is attained at $\eta = g(\hat\theta_{MLE})$.

■

Example 1: Consider the model $X_1, \ldots, X_n$ iid Poisson($\lambda$):

$$p(x_1, \ldots, x_n \mid \lambda) = \prod_{i=1}^n \frac{e^{-\lambda}\lambda^{x_i}}{x_i!}.$$

We have shown that for $\bar X > 0$ the MLE is $\hat\lambda_{MLE} = \bar X$.

Suppose we reparameterize the model in terms of $\eta = g(\lambda) = e^{-\lambda}$, which is the probability that $X_i$ equals zero (e.g., if $X_i$ is the number of vehicles that pass a marker on a roadway during a ten-second period, $\eta$ is the probability that no vehicles pass the marker). By Theorem 1, the MLE of $\eta$ is $\hat\eta_{MLE} = e^{-\hat\lambda_{MLE}} = e^{-\bar X}$.
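As a quick numerical sanity check of Theorem 1 (a sketch, not part of the original notes), the R code below maximizes the log likelihood written directly in terms of $\eta = e^{-\lambda}$ with base R's `optimize()` and compares the maximizer with $e^{-\bar X}$. The data vector `x` is hypothetical, chosen only for illustration.

```r
# Hypothetical Poisson counts, used only to illustrate Theorem 1.
x <- c(2, 0, 1, 3, 1, 0, 2, 1)

# Log likelihood written directly in terms of eta = exp(-lambda),
# i.e. lambda = g^{-1}(eta) = -log(eta).
loglik_eta <- function(eta, x) {
  lambda <- -log(eta)
  sum(dpois(x, lambda, log = TRUE))
}

# Maximize numerically over eta in (0, 1).
fit <- optimize(loglik_eta, interval = c(1e-6, 1 - 1e-6), x = x, maximum = TRUE)
eta_numeric <- fit$maximum

# Value predicted by the invariance property: exp(-lambda_hat) = exp(-xbar).
eta_invariance <- exp(-mean(x))
c(numeric = eta_numeric, invariance = eta_invariance)
```

The two values agree up to the default tolerance of `optimize()`.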

II. Likelihood Equation and the MLE

If $\Theta$ is open, $l_X(\theta)$ is differentiable in $\theta$, and $\hat\theta_{MLE}$ exists, then $\hat\theta_{MLE}$ must satisfy the following estimating equation:

$$\frac{\partial}{\partial \theta} l_X(\theta) = 0 \qquad (1.1)$$

This is known as the likelihood equation.

However, solving the likelihood equation does not immediately yield the MLE, because (i) solutions of (1.1) may be local maxima rather than global maxima, (ii) solutions of (1.1) may be local minima or saddle points rather than local maxima, or (iii) the MLE may not exist.

Example 1: Let $X_1, X_2, X_3$ be iid observations from a Cauchy distribution about $\theta$,

$$p(x \mid \theta) = \frac{1}{\pi\left(1 + (x - \theta)^2\right)}, \quad -\infty < \theta < \infty.$$

Suppose $X_1 = 0$, $X_2 = 1$, $X_3 = 10$. There are three solutions to the likelihood equation for $0 < \theta < 10$, two of which are local maxima and one of which is a local minimum.
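The three stationary points can be located numerically. The sketch below (our own illustration, using only base R) evaluates the score $\sum_i 2(x_i - \theta)/(1 + (x_i - \theta)^2)$ on a grid over $[0, 10]$, refines each sign change with `uniroot()`, and classifies a root as a local maximum when the score changes from positive to negative.

```r
# Observed data from the Cauchy example.
x <- c(0, 1, 10)

# Score function: derivative in theta of the Cauchy log likelihood,
# sum_i 2 (x_i - theta) / (1 + (x_i - theta)^2). Vectorized over theta.
score <- function(theta) {
  sapply(theta, function(t) sum(2 * (x - t) / (1 + (x - t)^2)))
}

# Find sign changes of the score on a fine grid over [0, 10] ...
grid <- seq(0, 10, by = 0.01)
s <- score(grid)
idx <- which(diff(sign(s)) != 0)

# ... and refine each bracketed root with uniroot().
roots <- sapply(idx, function(i) uniroot(score, c(grid[i], grid[i + 1]))$root)

# Score going from + to - means a local maximum; from - to +, a local minimum.
type <- ifelse(s[idx] > 0, "local max", "local min")
data.frame(root = round(roots, 4), type = type)
```

The grid step of 0.01 is an assumption; a grid too coarse to separate two nearby roots would miss a sign change.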


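Example 2: Consider $X_1, \ldots, X_n$ iid from a mixture of normal distributions

$$p(x \mid \mu_1, \mu_2, \sigma_1^2, \sigma_2^2, q) = q\,\frac{1}{\sqrt{2\pi}\,\sigma_1}\exp\left(-\frac{(x - \mu_1)^2}{2\sigma_1^2}\right) + (1 - q)\,\frac{1}{\sqrt{2\pi}\,\sigma_2}\exp\left(-\frac{(x - \mu_2)^2}{2\sigma_2^2}\right).$$

The log likelihood is

$$l_X(\mu_1, \mu_2, \sigma_1^2, \sigma_2^2, q) = \sum_{i=1}^n \log\left[q\,\frac{1}{\sqrt{2\pi}\,\sigma_1}\exp\left(-\frac{(X_i - \mu_1)^2}{2\sigma_1^2}\right) + (1 - q)\,\frac{1}{\sqrt{2\pi}\,\sigma_2}\exp\left(-\frac{(X_i - \mu_2)^2}{2\sigma_2^2}\right)\right].$$

Let $\mu_1 = X_1$, $\sigma_2 = 1$, and $0 < q < 1$. Then as $\sigma_1 \to 0$, $p(X_1 \mid \mu_1, \mu_2, \sigma_1^2, \sigma_2^2, q) \ge \frac{q}{\sqrt{2\pi}\,\sigma_1} \to \infty$, while each of the other factors $p(X_i \mid \mu_1, \mu_2, \sigma_1^2, \sigma_2^2, q)$, $i \ge 2$, stays bounded below by $(1 - q)\frac{1}{\sqrt{2\pi}}\exp\left(-\frac{(X_i - \mu_2)^2}{2}\right) > 0$, so that the likelihood function is unbounded.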
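The unbounded likelihood is easy to see numerically. In this sketch the six data values are hypothetical, and we fix $\mu_1 = X_1$, $\mu_2 = 0$, $\sigma_2 = 1$, $q = 0.5$ (choices made only for illustration) while shrinking $\sigma_1$; the log likelihood keeps increasing, by roughly $\log 10 \approx 2.3$ per step.

```r
# Hypothetical, well-separated data points, used only for illustration.
x <- c(-1.2, 0.3, 0.8, 1.9, -0.4, 2.5)

# Log likelihood of the two-component normal mixture.
mixloglik <- function(mu1, mu2, sigma1, sigma2, q, x) {
  sum(log(q * dnorm(x, mu1, sigma1) + (1 - q) * dnorm(x, mu2, sigma2)))
}

# Fix mu1 = X_1, mu2 = 0, sigma2 = 1, q = 0.5 and let sigma1 shrink toward 0.
sigma1 <- 10^-(1:6)
ll <- sapply(sigma1, function(s) mixloglik(x[1], 0, s, 1, 0.5, x))
data.frame(sigma1 = sigma1, loglik = ll)
```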

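Example 3: Normal distribution

$X_1, \ldots, X_n$ iid $N(\mu, \sigma^2)$:

$$p(x_1, \ldots, x_n \mid \mu, \sigma) = \prod_{i=1}^n \frac{1}{\sqrt{2\pi}\,\sigma}\exp\left(-\frac{(x_i - \mu)^2}{2\sigma^2}\right)$$

$$l(\mu, \sigma) = -n\log\sigma - \frac{n}{2}\log 2\pi - \frac{1}{2\sigma^2}\sum_{i=1}^n (X_i - \mu)^2$$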

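We solve the likelihood equation. The partials with respect to $\mu$ and $\sigma$ are

$$\frac{\partial l}{\partial \mu} = \frac{1}{\sigma^2}\sum_{i=1}^n (X_i - \mu)$$

$$\frac{\partial l}{\partial \sigma} = -\frac{n}{\sigma} + \frac{1}{\sigma^3}\sum_{i=1}^n (X_i - \mu)^2$$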

Setting the first partial equal to zero and solving for the MLE, we obtain $\hat\mu_{MLE} = \bar X$. Setting the second partial equal to zero and substituting the MLE for $\mu$, we find that the MLE for $\sigma$ is

$$\hat\sigma_{MLE} = \sqrt{\frac{1}{n}\sum_{i=1}^n (X_i - \bar X)^2}.$$
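To check the closed-form solution numerically, the following sketch (hypothetical data; not from the notes) minimizes the negative log likelihood with base R's `optim()` and compares the result with $\bar X$ and $\sqrt{\frac{1}{n}\sum (X_i - \bar X)^2}$. Optimizing over $\log\sigma$ rather than $\sigma$ is our choice; it keeps $\sigma > 0$ without needing a constrained method.

```r
# Hypothetical data used only for illustration.
x <- c(4.1, 5.3, 3.8, 6.0, 5.1, 4.7, 5.5)

# Negative normal log likelihood in (mu, log sigma).
negloglik <- function(par, x) {
  -sum(dnorm(x, mean = par[1], sd = exp(par[2]), log = TRUE))
}

fit <- optim(c(1, 1), negloglik, x = x, method = "BFGS",
             control = list(reltol = 1e-12))
mu_numeric <- fit$par[1]
sigma_numeric <- exp(fit$par[2])

# Closed-form MLEs from the likelihood equations (divisor n, not n - 1).
mu_closed <- mean(x)
sigma_closed <- sqrt(mean((x - mean(x))^2))
rbind(numeric = c(mu_numeric, sigma_numeric),
      closed  = c(mu_closed, sigma_closed))
```

Note that `sd(x)` in R uses the divisor $n - 1$, so it does not equal $\hat\sigma_{MLE}$.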

There is a unique solution to the likelihood equation. To

verify that this unique solution to the likelihood equation is

in fact the MLE, we need to check that this is a local

maximum and examine the behavior of the likelihood

function at the boundary of the parameter space.

However, we can conclude that this unique solution to the

likelihood equation is in fact the MLE from some general

facts about exponential families:

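First, note that the normal model is a two-parameter exponential family with natural parameters $\eta_1 = \frac{\mu}{\sigma^2}$, $\eta_2 = -\frac{1}{2\sigma^2}$:

$$p(x_1, \ldots, x_n \mid \mu, \sigma) = \exp\left\{-\frac{n}{2}\log(2\pi\sigma^2) - \frac{1}{2\sigma^2}\sum_{i=1}^n x_i^2 + \frac{\mu}{\sigma^2}\sum_{i=1}^n x_i - \frac{n\mu^2}{2\sigma^2}\right\}$$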

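In terms of $\eta_1, \eta_2$, the unique solution to the likelihood equations is

$$\hat\eta_1 = \frac{\bar X}{\frac{1}{n}\sum_{i=1}^n (X_i - \bar X)^2}, \qquad \hat\eta_2 = -\frac{1}{\frac{2}{n}\sum_{i=1}^n (X_i - \bar X)^2}.$$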


III. Properties of likelihood function and natural parameter space for exponential families

Theorem 2 (Based on Bickel and Doksum’s Theorem 1.6.3): Consider an exponential family

$$p(x \mid \eta) = h(x)\exp\left\{\sum_{j=1}^k T_j(x)\eta_j - A(\eta)\right\}$$

with natural parameter space $\mathcal{E}$. We have

(a) $\mathcal{E}$ is convex.

(b) The log likelihood function $l_x(\eta)$ is concave.

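Proof: We first prove that $A(\eta)$ is a convex function. Suppose $\eta_1, \eta_2 \in \mathcal{E}$ and $0 < \alpha < 1$. To prove that $A$ is convex, we need to show that

$$A(\alpha\eta_1 + (1 - \alpha)\eta_2) \le \alpha A(\eta_1) + (1 - \alpha)A(\eta_2) \qquad (1.2)$$

or equivalently

$$\exp\{A(\alpha\eta_1 + (1 - \alpha)\eta_2)\} \le \exp\{\alpha A(\eta_1) + (1 - \alpha)A(\eta_2)\}. \qquad (1.3)$$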

To prove this, we use a version of Hölder’s inequality (see Appendix B.9.4 of Bickel and Doksum): For any $u(x), v(x), h(x) \ge 0$ and $r, s > 0$ with $\frac{1}{r} + \frac{1}{s} = 1$, Hölder’s inequality says

$$\int u(x)v(x)h(x)\,dx \le \left(\int u^r(x)h(x)\,dx\right)^{1/r}\left(\int v^s(x)h(x)\,dx\right)^{1/s}.$$

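Thus, we have

$$\begin{aligned}
\exp\{A(\alpha\eta_1 + (1 - \alpha)\eta_2)\} &= \int \exp\Big[\sum_{j=1}^k (\alpha\eta_{1j} + (1 - \alpha)\eta_{2j})T_j(x)\Big]h(x)\,dx \\
&= \int \exp\Big[\sum_{j=1}^k \alpha\eta_{1j}T_j(x)\Big]\exp\Big[\sum_{j=1}^k (1 - \alpha)\eta_{2j}T_j(x)\Big]h(x)\,dx \\
&\le \left(\int \Big(\exp\Big[\sum_{j=1}^k \alpha\eta_{1j}T_j(x)\Big]\Big)^{1/\alpha}h(x)\,dx\right)^{\alpha}\left(\int \Big(\exp\Big[\sum_{j=1}^k (1 - \alpha)\eta_{2j}T_j(x)\Big]\Big)^{1/(1 - \alpha)}h(x)\,dx\right)^{1 - \alpha} \\
&= \exp\{\alpha A(\eta_1)\}\exp\{(1 - \alpha)A(\eta_2)\},
\end{aligned}$$

where the inequality follows from Hölder’s inequality with $r = \frac{1}{\alpha}$, $s = \frac{1}{1 - \alpha}$. This proves (1.3) and hence that $A(\eta)$ is a convex function.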

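To prove part (a), note that $\mathcal{E} = \{\eta : A(\eta) < \infty\}$ and that if $\eta_1, \eta_2 \in \mathcal{E}$, the right-hand side of (1.2) is finite. Because

$$\exp(A(\eta)) = \int h(x)\exp\left(\sum_{j=1}^k \eta_j T_j(x)\right)dx > 0 \quad \text{for all } \eta,$$

$A(\eta) > -\infty$, and we conclude from (1.2) that $\alpha\eta_1 + (1 - \alpha)\eta_2 \in \mathcal{E}$; (a) follows.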

To prove part (b), note that

$$l_x(\eta) = \log h(x) + \sum_{j=1}^k T_j(x)\eta_j - A(\eta).$$

Since $A(\eta)$ is convex, $-A(\eta)$ is concave, and $l_x(\eta)$ is the sum of a concave function and a linear function of $\eta$ and is hence concave itself.
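As a small worked illustration of Theorem 2 (our own example, not from the notes), write the Poisson($\lambda$) model in natural form with $\eta = \log\lambda$, $T(x) = x$, $h(x) = 1/x!$. The natural parameter space is all of $\mathbb{R}$, which is convex, and the log likelihood of a single observation is visibly concave:

```latex
\begin{aligned}
p(x \mid \eta) &= \frac{1}{x!}\exp\{x\eta - e^{\eta}\}, \qquad \eta = \log\lambda \in \mathbb{R}, \quad A(\eta) = e^{\eta}, \\
l_x(\eta) &= -\log x! + x\eta - e^{\eta}, \qquad \frac{d^2}{d\eta^2}\, l_x(\eta) = -e^{\eta} < 0 .
\end{aligned}
```

Here $A(\eta) = e^{\eta}$ is convex, exactly as the proof requires.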

A fact about maximization of concave functions on convex domains is the following:

Theorem 3: Let $D \subseteq \mathbb{R}^n$ be open and convex, and let $f : D \to \mathbb{R}$ be a concave and differentiable function on $D$. Then $x$ is a global maximum of $f$ on $D$ if and only if $\nabla f(x) = 0$.

Proof: A First Course in Optimization Theory, R.K. Sundaram, Theorem 7.15, pg. 187.

Combining Theorems 2 and 3, we conclude that for an exponential family over its natural parameter space, a point is the MLE if and only if it is a solution to the likelihood equation.

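Therefore,

$$\hat\eta_1 = \frac{\bar X}{\frac{1}{n}\sum_{i=1}^n (X_i - \bar X)^2}, \qquad \hat\eta_2 = -\frac{1}{\frac{2}{n}\sum_{i=1}^n (X_i - \bar X)^2}$$

are the MLEs for the normal distribution in the natural parameterization. Since there is an invertible map $h : (\eta_1, \eta_2) \mapsto (\mu, \sigma) = \left(-\frac{\eta_1}{2\eta_2}, \sqrt{-\frac{1}{2\eta_2}}\right)$, by the invariance property of the MLE (Theorem 1), $(\hat\mu_{MLE}, \hat\sigma_{MLE}) = h(\hat\eta_{1,MLE}, \hat\eta_{2,MLE})$. Thus,

$$\hat\mu_{MLE} = \bar X, \qquad \hat\sigma_{MLE} = \sqrt{\frac{1}{n}\sum_{i=1}^n (X_i - \bar X)^2}.$$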

Section 2.3 provides conditions for exponential families under which there exists a solution to the likelihood equation and conditions under which there is a unique solution to the likelihood equation (by Theorems 2 and 3 above, if there is more than one solution to the likelihood equation, then each solution is an MLE).


IV. Finding the MLE

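Example 3: Gamma distribution

$$f(x; \alpha, \beta) = \begin{cases} \dfrac{1}{\Gamma(\alpha)\beta^{\alpha}}\, x^{\alpha - 1}e^{-x/\beta}, & 0 < x < \infty \\ 0, & \text{elsewhere} \end{cases}$$

$$l(\alpha, \beta) = \sum_{i=1}^n \left[-\log\Gamma(\alpha) - \alpha\log\beta + (\alpha - 1)\log X_i - X_i/\beta\right]$$

for the parameter space $\alpha > 0$, $\beta > 0$.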

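The gamma distribution is a two-parameter exponential family (with natural parameters $\alpha - 1$ and $-1/\beta$ and sufficient statistics $\log x$ and $x$), so by the results of Section III together with the invariance property, a solution to the likelihood equation is the MLE.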

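The partial derivatives of the log likelihood are

$$\frac{\partial l}{\partial \alpha} = -n\frac{\Gamma'(\alpha)}{\Gamma(\alpha)} - n\log\beta + \sum_{i=1}^n \log X_i$$

$$\frac{\partial l}{\partial \beta} = -\frac{n\alpha}{\beta} + \sum_{i=1}^n \frac{X_i}{\beta^2}$$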

Setting the second partial derivative equal to zero, we find

$$\hat\beta_{MLE} = \frac{\sum_{i=1}^n X_i}{n\hat\alpha_{MLE}}.$$

When this solution is substituted into the first partial derivative, we obtain a nonlinear equation for the MLE of $\alpha$:

$$-n\frac{\Gamma'(\alpha)}{\Gamma(\alpha)} - n\log\frac{\sum_{i=1}^n X_i}{n} + n\log\alpha + \sum_{i=1}^n \log X_i = 0$$

This equation cannot be solved in closed form.
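Although the equation has no closed-form solution, a short argument (ours, not from the notes) shows that it has exactly one root, which is what makes a bracketing method such as bisection suitable. Divide by $n$, write $\psi = \Gamma'/\Gamma$ for the digamma function, and assume the $X_i$ are not all equal, so that $\log\bar X > \frac{1}{n}\sum_{i=1}^n \log X_i$ by Jensen's inequality:

```latex
\begin{aligned}
g(\alpha) &= \log\alpha - \psi(\alpha) - \Big(\log\bar X - \frac{1}{n}\sum_{i=1}^n \log X_i\Big) = 0, \\
\psi'(\alpha) &= \sum_{k=0}^{\infty}\frac{1}{(\alpha+k)^2} > \int_0^{\infty}\frac{dt}{(\alpha+t)^2} = \frac{1}{\alpha}
  \quad\Longrightarrow\quad g'(\alpha) = \frac{1}{\alpha} - \psi'(\alpha) < 0, \\
\lim_{\alpha \to 0^+} g(\alpha) &= +\infty, \qquad
\lim_{\alpha \to \infty} g(\alpha) = -\Big(\log\bar X - \frac{1}{n}\sum_{i=1}^n \log X_i\Big) < 0 .
\end{aligned}
```

The inequality for $\psi'$ holds term by term, since $\frac{1}{(\alpha+k)^2} > \int_k^{k+1}\frac{dt}{(\alpha+t)^2}$; the limits use $\psi(\alpha) \approx -1/\alpha$ near $0$ and $\log\alpha - \psi(\alpha) \to 0$ as $\alpha \to \infty$. So $g$ is continuous and strictly decreasing, positive near $0$ and negative for large $\alpha$, and its root is unique.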

V. The Bisection Method

The bisection method is a method for finding the root of a one-dimensional function $f$ that is continuous on $[a, b]$ with $f(a) < 0 < f(b)$ and increasing (an analogous method can be used for decreasing $f$).

Note: There is a root $x^*$ with $f(x^*) = 0$ by the intermediate value theorem.

Bisection Algorithm:

Decide on a tolerance $\epsilon > 0$ for $|x_{final} - x^*|$; stop the algorithm when we find $x_{final}$.

1. Find $x_0, x_1$ such that $f(x_0) < 0$, $f(x_1) > 0$. Initialize $x_{old}^{+} = x_1$, $x_{old}^{-} = x_0$.

2. If $|x_{old}^{+} - x_{old}^{-}| \le 2\epsilon$, set $x_{final} = \frac{1}{2}(x_{old}^{+} + x_{old}^{-})$ and return $x_{final}$. Else set $x_{new} = \frac{1}{2}(x_{old}^{+} + x_{old}^{-})$.

3. If $f(x_{new}) = 0$, set $x_{final} = x_{new}$. If $f(x_{new}) > 0$, set $x_{old}^{+} = x_{new}$ and go to step 2. If $f(x_{new}) < 0$, set $x_{old}^{-} = x_{new}$ and go to step 2.
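The algorithm above translates almost line for line into R. This is a minimal sketch for an increasing $f$ with $f(x_0) < 0 < f(x_1)$; the function name `bisect()` is ours (it is not a base R function), and for a decreasing $f$ one can pass `function(x) -f(x)` instead.

```r
# Sketch of the bisection algorithm for increasing f with f(x0) < 0 < f(x1).
# bisect() is a hypothetical helper written for these notes, not base R.
bisect <- function(f, x0, x1, eps = 1e-8) {
  stopifnot(f(x0) < 0, f(x1) > 0)   # Step 1: valid starting bracket
  lo <- x0                          # x_old^- : f(lo) < 0
  hi <- x1                          # x_old^+ : f(hi) > 0
  repeat {
    # Step 2: stop once the bracket has length at most 2 * eps.
    if (abs(hi - lo) <= 2 * eps) return((lo + hi) / 2)
    xnew <- (lo + hi) / 2
    fnew <- f(xnew)
    # Step 3: exact root, or replace the endpoint with the same sign.
    if (fnew == 0) return(xnew)
    if (fnew > 0) hi <- xnew else lo <- xnew
  }
}

# Usage: the positive root of x^2 - 2 on [0, 2] is sqrt(2).
r <- bisect(function(x) x^2 - 2, 0, 2)
r
```

The loop keeps the invariant $f(\text{lo}) < 0 < f(\text{hi})$, so the root always stays inside the bracket and the returned midpoint is within `eps` of it.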

Lemma 2.4.1: The bisection algorithm stops at a solution $x_{final}$ such that $|x_{final} - x^*| \le \epsilon$.


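Proof: If $x_m$ is the $m$th iterate of $x_{new}$, then

$$|x_m - x_{m-1}| = \frac{1}{2}|x_{m-1} - x_{m-2}| = \cdots = \frac{1}{2^{m-1}}|x_1 - x_0|.$$

Moreover, by the intermediate value theorem, $x^*$ always lies in the current bracket $[x_{old}^{-}, x_{old}^{+}]$; after the $m$th iterate this bracket has length $|x_m - x_{m-1}|$ and its midpoint is $x_{m+1}$. Therefore,

$$|x_{m+1} - x^*| \le \frac{1}{2}|x_m - x_{m-1}| = 2^{-m}|x_1 - x_0|.$$

For $m \ge \log_2(|x_1 - x_0|/\epsilon)$, we have $|x_{m+1} - x^*| \le \epsilon$.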

Note: Bisection can be much more efficient than specifying a grid of points between $a$ and $b$ and evaluating $f$ at each grid point: to find the root to within $\epsilon$, a grid of about $|x_1 - x_0|/\epsilon$ points is required, while bisection requires only about $\log_2(|x_1 - x_0|/\epsilon)$ evaluations of $f$.
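For a concrete sense of scale (numbers chosen only for illustration), take $|x_1 - x_0| = 1$ and $\epsilon = 10^{-6}$:

```latex
\frac{|x_1 - x_0|}{\epsilon} = 10^{6} \ \text{grid evaluations}, \qquad
\Big\lceil \log_2 \frac{|x_1 - x_0|}{\epsilon} \Big\rceil = \lceil 6\log_2 10 \rceil = \lceil 19.93 \rceil = 20 \ \text{bisection evaluations}.
```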

Example 3 continued:

In a study of the natural variability of rainfall, the rainfall of summer storms was measured by a network of rain gauges in southern Illinois for the years 1960-1964. A total of 227 measurements were taken.


R program for finding the maximum likelihood estimate using the bisection method.

digamma(x) = function in R that computes the derivative of the log of the gamma function of x, $\frac{\Gamma'(x)}{\Gamma(x)}$.

uniroot(f, interval) = function in R that finds an approximate zero of a function in the interval using a bisection-type method (Brent's method, which combines bisection steps with interpolation steps).
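A quick way to convince oneself of what `digamma()` computes is to compare it with a central finite difference of `lgamma()` (base R's log gamma function). The evaluation point $0.44$ and step size $h = 10^{-5}$ are arbitrary choices for this sketch.

```r
# Central-difference approximation to d/dx log Gamma(x) at an arbitrary point.
x <- 0.44
h <- 1e-5
numderiv <- (lgamma(x + h) - lgamma(x - h)) / (2 * h)

# digamma(x) should agree with the finite difference to many digits.
c(central_difference = numderiv, digamma = digamma(x))
```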

# Profile likelihood equation for alpha (beta replaced by its MLE mean(xvec)/alpha)
alphahatfunc=function(alpha,xvec){
  n=length(xvec);
  eq=-n*digamma(alpha)-n*log(mean(xvec))+n*log(alpha)+sum(log(xvec));
  eq;
}

> alphahatfunc(.3779155,illinoisrainfall)

[1] 65.25308

> alphahatfunc(.5,illinoisrainfall)

[1] -45.27781

alpharoot=uniroot(alphahatfunc,interval=c(.377,.5),xvec=illinoisrainfall)

> alpharoot

$root

[1] 0.4407967

$f.root

[1] -0.004515694

$iter

[1] 4

$estim.prec

[1] 6.103516e-05

betahatmle=mean(illinoisrainfall)/.4407967

> betahatmle

[1] 0.5090602

$\hat\alpha_{MLE} = .4408$, $\hat\beta_{MLE} = .5091$


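Comparison with method of moments:

$$E(X_i) = \alpha\beta, \qquad Var(X_i) = \alpha\beta^2, \qquad E(X_i^2) = \alpha\beta^2 + \alpha^2\beta^2$$

Substituting $\alpha = \frac{E(X_i)}{\beta}$ into the expression for $E(X_i^2)$, we obtain

$$\beta = \frac{E(X_i^2) - [E(X_i)]^2}{E(X_i)}, \qquad \alpha = \frac{[E(X_i)]^2}{E(X_i^2) - [E(X_i)]^2}.$$

Thus,

$$\hat\beta_{MOM} = \frac{\frac{1}{n}\sum_{i=1}^n X_i^2 - \left(\frac{1}{n}\sum_{i=1}^n X_i\right)^2}{\frac{1}{n}\sum_{i=1}^n X_i}, \qquad \hat\alpha_{MOM} = \frac{\left(\frac{1}{n}\sum_{i=1}^n X_i\right)^2}{\frac{1}{n}\sum_{i=1}^n X_i^2 - \left(\frac{1}{n}\sum_{i=1}^n X_i\right)^2}.$$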
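The moment formulas above can be wrapped in a small R helper; `mom_gamma()` is a hypothetical name introduced for this sketch. With the population moments of a Gamma($\alpha = 2$, $\beta = 3$) distribution it should return exactly $(2, 3)$, and with sample moments it gives the MOM estimates. Note that $\beta$ here is the scale parameter, so the simulated data use `rgamma(..., scale = 3)`.

```r
# Method-of-moments map from the first two moments (m1, m2) to (alpha, beta).
# mom_gamma() is a hypothetical helper, not a base R function.
mom_gamma <- function(m1, m2) {
  v <- m2 - m1^2                      # variance = alpha * beta^2
  c(alpha = m1^2 / v, beta = v / m1)
}

# Population check with alpha = 2, beta = 3:
# E(X) = alpha*beta = 6, E(X^2) = alpha*beta^2 + alpha^2*beta^2 = 54.
mom_gamma(6, 54)

# Sample version: plug in the sample moments of simulated data.
set.seed(550)
x <- rgamma(1000, shape = 2, scale = 3)
mom_gamma(mean(x), mean(x^2))
```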

betahatmom=(mean(illinoisrainfall^2)-(mean(illinoisrainfall))^2)/mean(illinoisrainfall)

> betahatmom

[1] 0.5937626


alphahatmom=(mean(illinoisrainfall))^2/(mean(illinoisrainfall^2)-(mean(illinoisrainfall))^2)

> alphahatmom

[1] 0.3779155

Simulation Study:

We simulated 100 iid data points from a Gamma(1,1)

distribution and calculated the MLE and method of

moments estimators. We repeated this 1000 times and

calculated the mean squared errors of the estimators.

# Comparison of MLE and MOM for gamma distribution
sims=1000;
n=100;
betahatmle=rep(0,sims);
alphahatmle=rep(0,sims);
betahatmom=rep(0,sims);
alphahatmom=rep(0,sims);
# Profile likelihood equation for alpha
alphahatfunc=function(alpha,xvec){
  n=length(xvec);
  eq=-n*digamma(alpha)-n*log(mean(xvec))+n*log(alpha)+sum(log(xvec));
  eq;
}
for(i in 1:sims){
  x=rgamma(n,1,1);  # shape 1, rate 1, so alpha = 1 and beta = 1
  alphahatmle[i]=uniroot(alphahatfunc,interval=c(.001,10),xvec=x)$root;
  betahatmle[i]=mean(x)/alphahatmle[i];
  betahatmom[i]=(mean(x^2)-(mean(x))^2)/mean(x);
  alphahatmom[i]=(mean(x))^2/(mean(x^2)-(mean(x))^2);
}

# MSE of MLEs

> mean((alphahatmle-1)^2);

[1] 0.01813474

> mean((betahatmle-1)^2);

[1] 0.02457860

>

> # MSE of MOMs

> mean((alphahatmom-1)^2);

[1] 0.04292494

> mean((betahatmom-1)^2);

[1] 0.04534693

The MLE performs substantially better than the method of moments. The mean squared error for $\alpha$ is 0.018 for the MLE compared to 0.043 for the method of moments, and the mean squared error for $\beta$ is 0.025 for the MLE compared to 0.045 for the method of moments.
