Chapter 5-13. Monte Carlo Simulation andBootstrapping

advertisement

Chapter 5-13. Monte Carlo Simulation andBootstrapping

In this chapter, we will learn how to perform both a Monte Carlo simulation and bootstrapping

simulation to obtain a confidence interval for the mean. We will contrast the results and compare

them to the formula approach for the CI.

In the next chapter, we will apply the bootstrap method to validate a prediction model

(prognostic model).

Exercise Let’s begin with an example of bootstrapping being use (Kim et al, 2006).

In the Results section of the Abstract they state,

“Logistic regression analysis continued to show significance for lethargy (odds ratio,

2.20; bias-correced 95% confidence interval, 1.11-3.63)...Bootstrap resampling validated

the importance of the significant variables identified in the regression analysis.”

In the Statistical Analysis section they state,

“We then performed bootstrap resampling procedures with 100 iterations each to obtain

95% bias-corrected CIs for each predictor variable and to assess its stability.”

In the Results section they state,

“With bootstrap analysis, the results of the multivariable analysis were validated, and new

biased-corrected 95% CIs were calculated (Table 4).”

The authors are attempting to convince the reader that their predictors of shunt malfunction are

more valid and reliable since they used a boostrap approach.

By the end of this chapter, we should understand bootstrapping well enough to judge if

bootstrapping added to this Kim et al paper, or whether it simply misleads the reader into

thinking more validity was added than really was.

_________________

Source: Stoddard GJ. Biostatistics and Epidemiology Using Stata: A Course Manual [unpublished manuscript] University of Utah

School of Medicine, 2010.

Chapter 5-13 (revision 16 May 2010)

p. 1

Theoretical Justification for the Bootstrap Method

We begin with the theoretical justification for the bootstrap method.

Where the name “bootstrapping” comes from

Efron and Tibshirani (1998, p.5) explain,

“The use of the term bootstrap derives from the phrase to pull oneself up by one’s

bootstrap, widely thought to be based on one of the eighteenth century Adventures of

Baron Munchausen, by Rudolph Erich Raspe. (The Baron had fallen to the bottom of a

deep lake. Just when it looked like all was lost, he thought to pick himself up by his own

bootstraps.)”

Let’s recall what a sampling distribution is. Consider the sampling distribution of the mean.

Conducting a Monte Carlo simulation, we take 10,000 repeated samples (of size n=50, for

example) from the population, compute the mean from each of these samples, and then display

these means in a histogram. This histogram represents the “sampling distribution of the mean”.

In bootstrapping, we do something very similar. We begin with our sample (of size n=50, for

example). Then we take repeated samples (usually 1,000 samples is sufficient) of size n=50 from

our sample, but we do it with replacement. That is, we randomly select an observation, record

it’s value, and then put it back in the hat so that it has a chance to be drawn again. From each

sample (called a “resample”, since we sampled from a sample), we compute the mean. We

display the n=1,000 means computed from the resamples in a histogram. This histogram

represents the “sampling distribution of the mean”, just as it did in the Monte Carlo simulation.

Mooney and Duval (1993, p.10) give a concise description of bootstrapping,

“In bootstrapping, we treat the sample as the population and conduct a Monte Carlo-style

procedure on the sample. This is done by drawing a large number of ‘resamples’ of size n

from this original sample randomly with replacement. So, although each resample will

have the same number of elements as the original sample, through replacement

resampling each resample could have some of the original data points represented in it

more than once, and some not represented at all. Therefore, each of these resamples will

likely be slightly and randomly different from the original sample. And because the

elements in these resamples vary slightly, a statistic, θ̂* , calculated from one of these

resamples will likely take on a slightly different value from each of the other θ̂* ’s and

from the original θ̂* . The central assertion of bootstrapping is that a relative frequency

distribution of these θ̂* ’s calculated from the resamples is an estimate of the sampling

distribution of θ̂ .

Chapter 5-13 (revision 16 May 2010)

p. 2

If the sample is a good approximation of the population (a representative sample), bootstrapping

will provide a good approximation of the sampling distribution of θ̂ (Efron & Stein, 1981;

Mooney and Duval, 1993, p.20).

It might seem at first that there is not sufficient information in a sample to derive a sampling

distribution for a statistic. To explain why it works, we first define what a probability

distribution is (first described in mathematical language, followed by simple English).

For a discrete variable, the probability mass function p(a) of X is defined as:

p(a) = P{ X = a }

example: the pmf of a coin flip is

X=1 (if heads), p(1) = 1/2

X=0 (if tails ), p(0) = 1/2

For a continuous variable, the probability density function f(x) of X is defined as

P{ X B} f ( x)dx

B

example: the pdf of the normal distribution is

P{ X B}

Chapter 5-13 (revision 16 May 2010)

1

2

B

e ( x )

2

/ 2 2

dx

p. 3

Let f(x) denote the probability distribution for any variable (either a probability mass function or

a probability density function), which may be known or unknown. We can construct an

empirical probability distribution function (EDF) of x from the sample by placing a probability of

1/n at each point x1, x2, ... xn. This EDF of x is the nonparametric maximum likelihood estimate

(MLE) of the population distribution function, f(x) (Rao, 1987, pp. 162-166; Rohatgi, 1984,

pp.234-236). In other words, given no other information about the population, the sample is our

best estimate of the population. (Mooney and Duval, 1993, pp 10-11)

Stated in simple English, the EDF is simply the histogram of the sample data, with the heights of

the bars representing the proportion of the sample that have each specific value. When we assign

1/n to each observation (each birth weight), and 5 babies have a birth weight of 3015, the

probability of that birth weight is 5 1/n, or 5/n, which is the height of the bar for a birth weight

of 3015 in the histogram. The f(x) in the population is simply a similar histogram of all the

values in the population, with heights of bars scaled to represent proportions. The normal

distribution function given above is nothing more than a mathematical expression of the smooth

line drawn through the center of the top of each bar in the histogram.

In Chapter 5-5, when we derived the logistic regression model and introduced maximum

likelihood estimation, we said that the MLE of the logistic regression was the set of model

parameters (the and ’s in the model) that gave the greatest probability of producing the data in

our sample.

When we say that the EDF is the nonparametric MLE of f(x), we are simply stating what

population distribution has the greatest probability (likelihood) of producing our observed

sample. It is the population distribution that has the identical shape of the sample distribution.

In other words, our sample is representative of, or looks just like, the population distribution.

Because of the fact that the EDF is the nonparametric MLE of f(x), repeatedly resampling a

sample to arrive at the distribution function of a statistic, or bootstrap resampling, is analogous to

repeatedly taking random samples from a population to arrive at the distribution function of a

statistic (Monte Carlo sampling). (Mooney and Duval, 1993, p. 11)

Example

We will use the statistical formula approach, the Monte Carlo approach, and the Bootstrapping

approach to estimate a mean and its 95% confidence interval, and then compare the results.

Chapter 5-13 (revision 16 May 2010)

p. 4

We will illustrate the process with the Framingham Heart Study dataset.

Framingham Heart Study dataset (2.20.Framingham.dta)

This is a dataset distributed with Dupont (2002, p 77). The dataset comes from a long-term

follow-up study of cardiovascular risk factors on 4699 patients living in the town of

Framingham, Massachusetts. The patients were free of coronary heart disease at their baseline

exam (recruitment of patients started in 1948).

Date Codebook

Baseline exam:

sdp

systolic blood pressure (SBP) in mm Hg

dbp

diastolic blood pressure (DBP) in mm Hg

age

age in years

scl

serum cholesterol (SCL) in mg/100ml

bmi

body mass index (BMI) = weight/height2 in kg/m2

sex

gender (1=male, 2=female)

month

month of year in which baseline exam occurred

id

patient identification variable (numbered 1 to 4699)

Follow-up information on coronary heart disease:

followup

follow-up in days

chdfate

CHD outcome (1=patient develops CHD at the end of follow-up,

0=otherwise)

Reading in the data,

File

Open

Find the directory where you copied the course CD

Change to the subdirectory datasets & do-files

Single click on 2.20.Framingham.dta

Open

use "C:\Documents and Settings\u0032770.SRVR\Desktop\

Biostats & Epi With Stata\datasets & do-files\

2.20.Framingham.dta", clear

*

which must be all on one line, or use:

cd "C:\Documents and Settings\u0032770.SRVR\Desktop\"

cd "Biostats & Epi With Stata\datasets & do-files"

use 2.20.Framingham.dta, clear

Chapter 5-13 (revision 16 May 2010)

p. 5

We’ll consider this sample (N=4,699) to be our population, which we will soon take a smaller

sample from. We are doing this for illustrative purposes, since we need to know the correct

value of the mean that our sample is trying to estimate.

Computing the “population” confidence interval for serum cholesterol (SCL),

Statistics

Summaries, tables & tests

Summary and descriptive statistics

Confidence intervals

Main tab: Variables: scl

OK

ci scl

Variable |

Obs

Mean

Std. Err.

[95% Conf. Interval]

-------------+--------------------------------------------------------------scl |

4666

228.2925

.6520838

227.0141

229.5709

Interpretation of Confidence Interval

With a 95% confidence interval, we are 95% confident that the interval covers the population

mean. (population mean is considered fixed, the interval is random)

Van Belle et al (2004, p.86) provide the following interpretation for the 95% confidence interval

for the population mean, :

“Since the sample mean, Y , varies from sample to sample, it cannot mean that 95% of

the sample means will fall in the interval for a specific sample mean. The interpretation

is that the probability is 0.95 that the interval straddles the population mean.”

We will take a random sample of n=200 out of n=4,699 to provide a smaller, simpler dataset for

illustration.

set seed 999

sample 200, count

Chapter 5-13 (revision 16 May 2010)

p. 6

Formula Approach

Using our sample of N=200 patients, which contains one missing value for serum cholesterol

(SCL), we use the ordinary formula approach to obtain the mean and 95% CI.

ci scl

Variable |

Obs

Mean

Std. Err.

[95% Conf. Interval]

-------------+--------------------------------------------------------------scl |

199

233.4472

3.014984

227.5016

239.3928

We see that the mean differs slightly from the population mean

Variable |

Obs

Mean

Std. Err.

[95% Conf. Interval]

-------------+--------------------------------------------------------------scl |

4666

228.2925

.6520838

227.0141

229.5709

due to sampling variation.

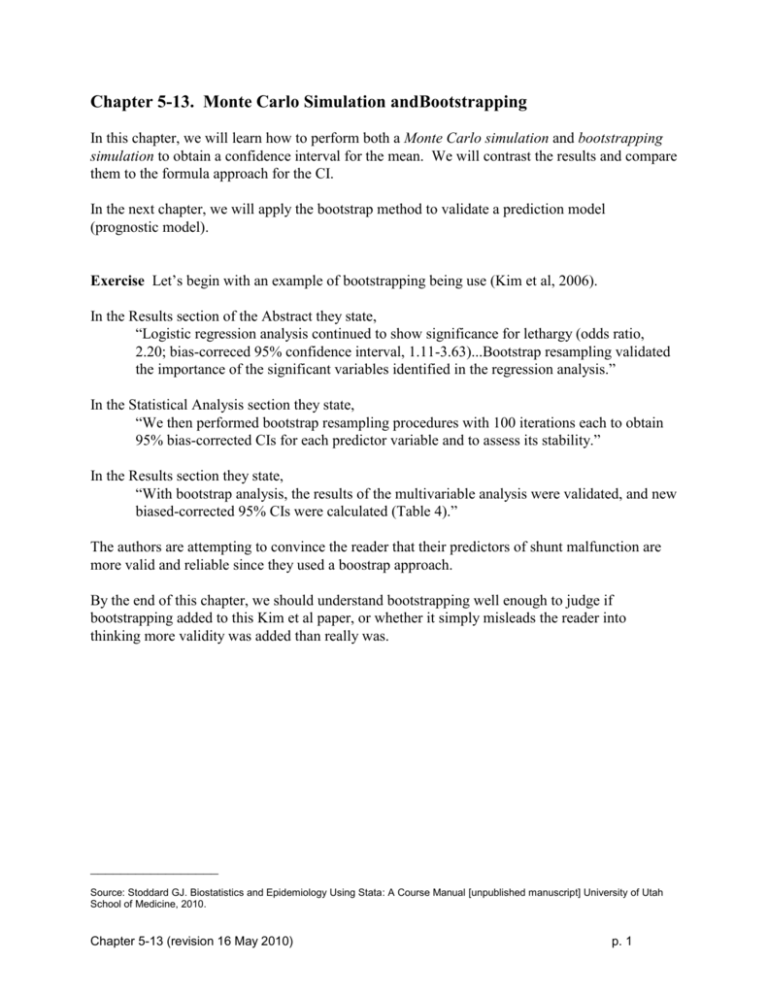

Looking at the histogram of the n=199 SCL values,

10

0

5

Percent

15

20

histogram scl , percent

150

200

250

300

Serum Cholesterol

350

we see that the SCL variable is skewed to the right.

The central limit theorem states that the sampling distribution of the mean SCL is normally

distributed, even though the distribution of individual SCLs is skewed.

Therefore, the 95% confidence interval around the mean, which assumes a normal sampling

distribution that is symmetrical, is a still a correct confidence interval.

Chapter 5-13 (revision 16 May 2010)

p. 7

Monte Carlo Approach

From the sample we used in the formula approach, we obtain the mean and standard deviation,

which we will need in a moment,

Statistics

Summaries, tables & tests

Summary and descriptive statistics

Summary statistics

Main tab: Variables: scl

OK

summarize scl

<or abbreviate to:>

sum scl

Variable |

Obs

Mean

Std. Dev.

Min

Max

-------------+-------------------------------------------------------scl |

199

233.4472

42.53158

150

375

We do not have a theoretical probability distribution that has exactly the skewness of our sample

that we can use in a Monte Carlo simulation to arrive at a 95% CI for the mean. Therefore, we

will just use random samples from a Normal distribution with the same mean (233.4472) and

standard deviation (42.53158) as an approximation. We will use sample sizes of n=199, the

same number of SCL values in our original sample.

* compute a Monte Carlo 95% CI from a normal

* distribution with mean of 233.4472 and SD of 42.53158

clear

set seed 999

quietly set obs 10000

gen scl=.

gen meanscl=.

forvalues i=1(1)10000 {

quietly replace scl=233.4472+42.53158*invnorm(uniform()) in 1/199

quietly sum scl, meanonly

quietly replace meanscl=r(mean) in `i'/`i'

}

histogram meanscl, percent normal

sum meanscl

centile meanscl, centile(2.5 97.5)

Note: In this simulation, we used Stata’s “invnorm(uniform())” which returns a value from the

standard normal distribution. To convert this to a normal distribution with a given mean and

standard deviation, we use the fact that

X Mean

= z , which is Normal with mean=0 and SD=1 (Standard Normal)

SD

X Mean SDz

X Mean SDz , which is Normal with desired mean and SD

Chapter 5-13 (revision 16 May 2010)

p. 8

8

6

4

0

2

Percent

225

230

235

meanscl

240

245

. sum meanscl

Variable |

Obs

Mean

Std. Dev.

Min

Max

-------------+-------------------------------------------------------meanscl |

10000

233.4123

3.031482

222.9115

245.1845

. centile meanscl, centile(2.5 97.5)

-- Binom. Interp. -Variable |

Obs Percentile

Centile

[95% Conf. Interval]

-------------+------------------------------------------------------------meanscl |

10000

2.5

227.3847

227.207

227.5685

|

97.5

239.3856

239.2764

239.5407

Using the 2.5-th and 97.5-th percentiles as the 95% CI, along with the computed mean of the

sample of means, we get:

Monte Carlo:

mean = 233.4123 , 95% CI (227.3847 , 239.3856)

which is very close to the original sample values from the ci command:

Variable |

Obs

Mean

Std. Err.

[95% Conf. Interval]

-------------+--------------------------------------------------------------scl |

199

233.4472

3.014984

227.5016

239.3928

Original Sample:

mean = 233.4472 , 95% CI (227.5016 , 239.3928)

At this point, we have verified that Monte Carlo simulation produces the same result as statistical

formulas. In other words,

Taking a series of samples to produce the long run average

gives the same answer as statistical formulas.

Chapter 5-13 (revision 16 May 2010)

p. 9

In this example, we contrived the Monte Carlo population to match the sample result, by

assuming the population had the same mean and SD as the sample. That is why the Monte Carlo

approach matched the sample result so closely.

Monte Carlo:

mean = 233.4123 , 95% CI (227.3847 , 239.3856)

Original Sample:

mean = 233.4472 , 95% CI (227.5016 , 239.3928)

Population:

mean = 228.2925 , 95% CI (227.0141 , 229.5709)

Bootstrapping Approach

First, we bring our original sample back into stata, since we cleared it from memory before

running the Monte Carlo experiment.

use "2.20.Framingham.dta", clear

set seed 999

sample 200, count

drop if scl==. // drop missing value observation

sum scl

Variable |

Obs

Mean

Std. Dev.

Min

Max

-------------+-------------------------------------------------------scl |

199

233.4472

42.53158

150

375

Notice we dropped the missing scl observation from the Stata memory.

It’s always a good idea to drop the missing values from the dataset before performing a bootstrap.

The idea of the bootstrap is to draw sample of equal sizes. It draws sample from observations in

memory, regardless of whether they are missing or not. This results in samples of unequal sizes

from iteration to iteration. This may not matter if the number of missing values is small relative

to the sample size, but it would matter otherwise.

Chapter 5-13 (revision 16 May 2010)

p. 10

Computing the bootstrap, using 1,000 re-samples,

* compute bootstrap

set seed 999

set obs 1000

capture drop seqnum bootwt meanscl

gen seqnum=_n // create a variable to sort on

gen bootwt=.

gen meanscl=.

forvalues i=1(1)1000{

bsample 199 ,weight(bootwt), in 1/199

sort seqnum // bsample unsorts the data

quietly sum scl [fweight=bootwt], meanonly

quietly replace meanscl=r(mean) in `i'/`i'

* use list to see results for first two iterations

list seqnum scl bootwt meanscl in 1/5 if `i'<=2

}

sum meanscl

centile meanscl, centile(2.5 97.5)

1.

2.

3.

4.

5.

1.

2.

3.

4.

5.

+----------------------------------+

| seqnum

scl

bootwt

meanscl |

|----------------------------------|

|

1

289

0

228.0302 |

|

2

275

1

. |

|

3

217

1

. |

|

4

271

1

. |

|

5

234

3

. |

+----------------------------------+

+----------------------------------+

| seqnum

scl

bootwt

meanscl |

|----------------------------------|

|

1

289

0

228.0302 |

|

2

275

0

234.8744 |

|

3

217

1

. |

|

4

271

2

. |

|

5

234

1

. |

+----------------------------------+

. sum meanscl

Variable |

Obs

Mean

Std. Dev.

Min

Max

-------------+-------------------------------------------------------meanscl |

1000

233.4235

3.010982

224.3467

242.1859

. centile meanscl, centile(2.5 97.5)

-- Binom. Interp. -Variable |

Obs Percentile

Centile

[95% Conf. Interval]

-------------+------------------------------------------------------------meanscl |

1000

2.5

227.6337

226.9178

228.0157

|

97.5

239.4345

238.7897

240.2121

Using the 2.5-th and 97.5-th percentiles as the 95% CI, along with the computed mean of the

sample of means, we get a mean of 233.4235 and 95% CI of (227.6337 , 239.4345).

Chapter 5-13 (revision 16 May 2010)

p. 11

Contrasting the Results

Contrasting the results from the three approaches:

Formula:

mean = 233.4472 , 95% CI (227.5016 , 239.3928)

Monte Carlo:

mean = 233.4123 , 95% CI (227.3847 , 239.3856)

Bootstrap:

mean = 233.4235 , 95% CI (227.6337 , 239.4345)

We see that all three approaches produce very similar results.

In particular, notice how similar the bootstrapping algorithm was to the Monte Carlo experiment.

In fact, all we did was treat the sample as if it was the population, and then applied Monte Carlo

sampling to generate an empirical estimate of the statistic’s sampling distribution.

Definition sampling without replacement: each sampled observation can be selected only (do

not put the observation “back in the hat” after selecting it).

Definition sampling with replacement: each observation can be selected on each draw in the

sample (put the observation “back in the hat” after selecting it).

In bootstrap sampling, sampling with replacement is used, and is necessary. If we sampled

n=199 observations without replacement, we would always obtain the original sample, and no

variability would be introduced into the simulation.

Sampling with replacement seems strange to us, because we do not do this when we take a

sample of patients in our research. Its use is justified in the following box.

Chapter 5-13 (revision 16 May 2010)

p. 12

Sampling with replacement

In bootstrapping, we take a sample with replacement.

As strange as that type of sampling might seem, it turns out that standard error of the mean,

which is required for the 95% CI for the mean, is based on the assumption that sampling is either

with replacement or that the samples are drawn from infinite populations. (Daniel, 1995, pp.125127)

This is because the statistical theory underlying this statistic assumes that every data point is

independently and identically distributed (i.i.d.). If sampling is done from a small population

without replacement, the way samples are usually drawn, the probability of drawing any

remaining data point is larger than the data point before. For example, if the population size is

N=100 from a uniform distribution, which means every data point has an equal probability 1/100,

then the actual probabilities of drawing these data points, done without replacement is:

1st observation: 1/100

2nd observation: 1/99

3rd observation: 1/98

Therefore, the probability distribution changes every time a data point is removed by sampling

without replacement, and thus violates the i.i.d. assumption.

When sampling is done without replacement, then, a finite population correction is required. For

example, when sampling without replacement, the correct formula for the standard error of the

mean is no longer

s

SE

n

but is instead

s

s

N n

SE

(finite population correction factor) =

N 1

n

n

When the population size, N, is much larger than the sample size, n, this correction factor is so

close to 1 that its effect is negligible. In practice, statisticians ignore the correction factor when

the sample is no more than 5 percent of the population size, or n/N 0.05. (Daniel, 1995,

pp.125-127)

In bootstrapping however, the sample becomes the population, or sampled population, so

sampling with replacement is necessary to satisfy the i.i.d. assumption. This i.i.d. assumption

was stated above in the statistical theory justification for bootstrapping as, “We can construct an

empirical probability distribution function (EDF) of x from the sample by placing a probability of

1/n at each point x1, x2, ... xn. This EDF of x is the nonparametric maximum likelihood estimate

(MLE) of the population distribution function, F(X).” That is, sampling with replacement is

necessary to provide the 1/n probability for each data point.

Chapter 5-13 (revision 16 May 2010)

p. 13

Easier Approach

You can also use the bootstrap command for this bootstrapped 95% CI, but it uses the “normalbased” confidence interval, rather than the “percentile” confidence interval used above. Either

approach is okay, but the results are not identical.

The above bootstrap approach changed the number of observations in memory to n=1,000, with a

lot of missing observations for scl. We need to return the data in Stata memory to its original

state, with a sample size of n=199, before we bootstrap again.

use "2.20.Framingham.dta", clear

set seed 999

sample 200, count

drop if scl==. // drop missing value observation

sum scl

We will compute the bootstrap CI, using a seed of 999 so our result matches what is shown

below. Using the menu,

Statistics

Resampling

Bootstrap estimation

Main tab: Stata command to run: sum scl

Other statistical expressions: r(mean)

Replications: 1000

Options tab: Sample size: 199

Advanced tab: Random-number seed: 999

OK

bootstrap r(mean), reps(1000) size(199) seed(999) : sum scl

Bootstrap results

command:

_bs_1:

Number of obs

Replications

=

=

199

1000

summarize scl

r(mean)

-----------------------------------------------------------------------------|

Observed

Bootstrap

Normal-based

|

Coef.

Std. Err.

z

P>|z|

[95% Conf. Interval]

-------------+---------------------------------------------------------------_bs_1 |

233.4472

3.069715

76.05

0.000

227.4307

239.4638

------------------------------------------------------------------------------

which is very close to the “percentile” method used above, which gave:

Bootstrap:

mean = 233.4235 , 95% CI (227.6337 , 239.4345)

Chapter 5-13 (revision 16 May 2010)

p. 14

Many Forms of Bootstrap Estimates

What has been presented is called the “percentile method” of bootstrapping, which is the easiest

to understand. Other methods add some statistical rigor and frequently result in tighter

confidence limits. A number of these are presented by Carpenter and Bithell (2000). Four of

these other forms are available in Stata, using the “estat” command.

The “BCa” method, however, must be specified to get it, since it requires more computation

time.

bootstrap r(mean), reps(1000) size(199) seed(999) bca : sum scl

estat bootstrap, all

Bootstrap results

command:

_bs_1:

Number of obs

Replications

=

=

199

1000

summarize scl

r(mean)

-----------------------------------------------------------------------------|

Observed

Bootstrap

Normal-based

|

Coef.

Std. Err.

z

P>|z|

[95% Conf. Interval]

-------------+---------------------------------------------------------------_bs_1 |

233.4472

3.069715

76.05

0.000

227.4307

239.4638

-----------------------------------------------------------------------------. estat bootstrap, all

Bootstrap results

command:

_bs_1:

Number of obs

Replications

=

=

199

1000

summarize scl

r(mean)

-----------------------------------------------------------------------------|

Observed

Bootstrap

|

Coef.

Bias

Std. Err. [95% Conf. Interval]

-------------+---------------------------------------------------------------_bs_1 |

233.44724 -.0524171

3.0697148

227.4307

239.4638

(N)

|

227.5477

239.4824

(P)

|

227.5729

239.4824 (BC)

|

227.6131

239.4874 (BCa)

-----------------------------------------------------------------------------(N)

normal confidence interval

(P)

percentile confidence interval

(BC)

bias-corrected confidence interval

(BCa) bias-corrected and accelerated confidence interval

Chapter 5-13 (revision 16 May 2010)

p. 15

To get a feel for how the confidence limits from the four approaches center around the sample

(observed) mean, we can run the following commands from the do-file editor.

display " (N):

" observed

display " (P):

" observed

display " (BC):

" observed

display "(BCa):

" observed

observed coef-lower

coef-upper bound: "

observed coef-lower

coef-upper bound: "

observed coef-lower

coef-upper bound: "

observed coef-lower

coef-upper bound: "

bound: " 233.4472-227.4307

233.4472-239.4638

bound: " 233.4472-227.5477

233.4472-239.4824

bound: " 233.4472-227.5729

233.4472-239.4824

bound: " 233.4472-227.6131

233.4472-239.4874

///

///

///

///

which produces,

(N):

(P):

(BC):

(BCa):

observed

observed

observed

observed

coef-lower

coef-lower

coef-lower

coef-lower

bound:

bound:

bound:

bound:

6.0165

5.8995

5.8743

5.8341

observed

observed

observed

observed

coef-upper

coef-upper

coef-upper

coef-upper

bound:

bound:

bound:

bound:

-6.0166

-6.0352

-6.0352

-6.0402

Only the “normal” method attempts to have confidence bounds which are symmetric about the

mean. The other methods are wider on the right, or upper side, of the interval, which is the same

direction in which the data are skewed.

Bias

The bias that is being corrected is the same bias that statisticians are familiar with.

Letting T be a statistic, and be a population parameter, the bias is E(T) - , where E(T) is the

expected value of the test statistic, which is the long-run average. The bias shown in the above

output is the bootstrap estimate of this bias.

Steyerberg (2009, p.94) explains it for the BCa approach,

“Bias-corrected percentile method: Bias in estimation of the distribution is accounted for,

based on the difference between the median of the bootstrap estimates and the sample

estimate (“BCa”).108

-------------108

Efron B, Tibshirani R. An introduction to the bootstrap. New York: Chapman & Hall,

1993.

Chapter 5-13 (revision 16 May 2010)

p. 16

It was the BC, or bias-corrected” confidence interval that Kim et al (2006) used (our example

article on page 1).

Here is the approach they used:

bootstrap _b, reps(1000) size(199) seed(999): logistic chdfate age scl

estat bootstrap, all

Logistic regression

Log likelihood = -117.00523

Number of obs

Replications

Wald chi2(2)

Prob > chi2

Pseudo R2

=

=

=

=

=

199

1000

11.93

0.0026

0.0581

-----------------------------------------------------------------------------|

Observed

Bootstrap

Normal-based

chdfate | Odds Ratio

Std. Err.

z

P>|z|

[95% Conf. Interval]

-------------+---------------------------------------------------------------age |

1.011431

.0199457

0.58

0.564

.9730842

1.05129

scl |

1.013314

.0042096

3.18

0.001

1.005097

1.021599

-----------------------------------------------------------------------------. estat bootstrap, all

Logistic regression

Number of obs

Replications

=

=

199

1000

-----------------------------------------------------------------------------|

Observed

Bootstrap

chdfate |

Coef.

Bias

Std. Err. [95% Conf. Interval]

-------------+---------------------------------------------------------------age |

.01136646 -.0002439

.0197203

-.0272846

.0500175

(N)

|

-.0294536

.0515303

(P)

|

-.0311151

.0496985 (BC)

scl |

.01322643

.0006473

.00415434

.0050841

.0213688

(N)

|

.0057071

.0216384

(P)

|

.0042645

.0204473 (BC)

_cons | -4.4385145 -.1585981

1.2091945

-6.808492 -2.068537

(N)

|

-7.261674 -2.431381

(P)

|

-6.951354 -2.297873 (BC)

-----------------------------------------------------------------------------(N)

normal confidence interval

(P)

percentile confidence interval

(BC)

bias-corrected confidence interval

Each of these CI’s are then converted to odds ratios, using

display exp(-.0272846)

.97308426

Chapter 5-13 (revision 16 May 2010)

p. 17

How many resamples should we draw?

Running the same Stata commands, but this time using 10,000 resamples instead of 1,000 we get

the results in the following table.

. sum meanscl

Variable |

Obs

Mean

Std. Dev.

Min

Max

-------------+-------------------------------------------------------meanscl |

10000

233.4252

2.976414

221.4673

244.5126

. centile meanscl, centile(2.5 97.5)

-- Binom. Interp. -Variable |

Obs Percentile

Centile

[95% Conf. Interval]

-------------+------------------------------------------------------------meanscl |

10000

2.5

227.6231

227.4975

227.7314

|

97.5

239.3166

239.1831

239.4556

The two results, this one and the preceding one, are:

Bootstrap (10,000 resamples):

Bootstrap ( 1,000 resamples):

mean = 233.4252 , 95% CI (227.4975 , 239.1831)

mean = 233.6320 , 95% CI (227.7398 , 239.0903)

We see that 1,000 resamples are as good as 10,000 resamples.

So, how many resamples should we draw?

That is an empirical question that depends on the statistics to be estimated and the accuracy

desired (Efron, 1979, sec. 2; Mooney and Duval, 1993, p 21). However, the improvement in the

sampling distribution estimation is slight for more than 1,000 resamples, in most cases (Efron &

Tibshirani, 1986, sec. 9; Mooney and Duval, 1993, p 21).

Chapter 5-13 (revision 16 May 2010)

p. 18

Example Applications

1) Better estimates of confidence intervals.

We saw an application of this with the Kim et al paper (2006).

2) Computing a CI for a statistic for which a formula is not available.

For example, if you wanted to compute a CI around a ratio of two physiologic measures, the

bootstrap approach allows you to do this without having to mathematically derive a proper

formula.

3) Validating a model.

How to do this is covered in the following chapter.

Exercise Return to the Kim et al (2006) paper. The authors simply computed the biasedcorrected 95% CI for the regression coefficient for each predictor variable. Then they made the

claim, “Bootstrap resampling validated the importance of the significant variables identified in

the regression analysis.” By “validated”, the authors imply that these same predictors will appear

as significant predictors in future datasets. However, all they really did was provide perhaps a

more accurate confidence interval for their sample only. Many authors make this mistake of

thinking that the bootstrap CI does a lot more than it really does.

To actually validate the important of predictor variables, a more sophisticated approach is

required, which is covered in the following chapter.

Chapter 5-13 (revision 16 May 2010)

p. 19

References

Carpenter J, Bithell J. (2000). Bootstrap confidence intervals: when, which, what? A practical

guide for medical statisticians. Statist. Med. 19:1141-1164.

Daniel WW. (1995). Biostatistics: a Foundation for Analysis in the Health Sciences. 6th ed. New

York, John Wiley & Sons.

Dupont WD. (2002). Statistical Modeling for Biomedical Researchers: A Simple Introduction to

the Analysis of Complex Data. Cambridge UK, Cambridge University Press.

Efron B. (1979). Bootstrap methods: another look at the jackknife. Annals of Statistics 7:1-26.

Efron B, Stein C. (1981). The jackknife estimate of variance. Annals of Statistics 9:586-596.

Efron B, Tibshirani R. (1986). Bootstrap methods for standard errors, confidence intervals, and

other measures of statistical accuracy. Statistical Science 1:54-77.

Efron B, Tibshirani RJ. (1998). An Introduction to the Bootstrap. Boca Raton, Florida,

Chapman & Hall/CRC.

Kim TY, Stewart G, Voth M, et al. (2006). Signs and symptoms of cerebrospinal fluid shunt

malfunction in the pediatric emergency department. Pediatric Emergency Care 22(1):2834.

Mooney CZ, Duval RD. (1993). Bootstrapping: A Nonparametric Approach to Statistical

Inference. Newbury Park, CA, Sage Publications.

Rao BLSP. (1987). Asymptotic Theory of Statistical Inference. New York, John Wiley.

Rohatgi VK. (1984). Statistical Inference. New York, John Wiley.

Steyerberg EW. (2009). Clinical Prediction Models: A Practical Approach to Development,

Validation, and Updating. New York, Springer.

van Belle G, Fisher LD, Heagerty PJ, Lumley T. Biostatistics: A Methodology for the

Health Sciences. 2nd ed. Hoboken, NJ, John Wiley & Sons.

Chapter 5-13 (revision 16 May 2010)

p. 20