Lecture 9 slides

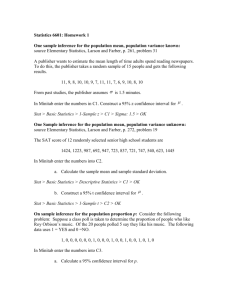

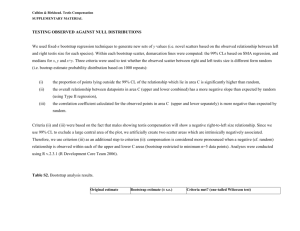

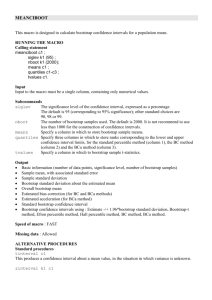

Lecture 9. Model Inference and Averaging

Instructed by Jinzhu Jia

Outline

- Bootstrap and ML method
- Bayesian method
- EM algorithm
- MCMC (Gibbs sampler)
- Bagging
- General model average
- Bumping

The Bootstrap and ML Methods

One example with one-dimensional data.

Cubic spline model: $y = \mu(x) + \epsilon$, where $\mu(x) = \sum_{j=1}^{7} \beta_j h_j(x)$.

Let $H = (H_1, H_2, \ldots, H_7)$ be the basis matrix.

Prediction error:

One Example

Bootstrap for the above example:

1. Draw $B$ datasets, each of size $N = 50$, with replacement.
2. For each dataset $Z^*$, fit a cubic spline.
3. Using $B = 200$ bootstrap samples, obtain 95% confidence bands at each $x_i$.

Connections

Non-parametric bootstrap

Parametric bootstrap: the process is repeated $B$ times, say $B = 200$.

The bootstrap data sets:

Conclusion: the parametric bootstrap agrees with least squares. In general, the parametric bootstrap agrees with the MLE.

ML Inference

- Density function or probability mass function
- Likelihood function
- Log-likelihood function

ML Inference

- Score function
- Information matrix
- Observed information matrix: $I(\hat\theta)$
- Fisher information matrix

Asymptotic result, where $\theta_0$ is the true parameter:

Estimate for the standard error of $\hat\theta_j$:

Confidence interval:

ML Inference

Confidence region:

Example: revisit the previous smoothing example.

Bootstrap vs. ML

The advantage of the bootstrap: it allows us to compute maximum likelihood estimates of standard errors even when no formulas are available.

Bayesian Methods

Two parts:

1. A sampling model for our data given the parameters.
2. A prior distribution for the parameters.

Finally, we have the posterior distribution:

Bayesian methods

Differences between Bayesian methods (BM) and standard (‘frequentist’) methods:

- BM uses a prior distribution to express the uncertainty present before seeing the data.
- BM allows the uncertainty remaining after seeing the data to be expressed in the form of a posterior distribution.

Bayesian methods: prediction

The Bayesian approach predicts future data by averaging over the posterior distribution of the parameters. In contrast, the ML method predicts future data using the data density evaluated at the maximum likelihood estimate.

Bayesian methods: Example

Revisit the previous example. We first assume $\sigma^2$ is known.

Prior:

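
For the Bayesian version of the smoothing example, a minimal sketch might look like the following. It rests on several assumptions that are not taken from the slides: a zero-mean Gaussian prior $\beta \sim N(0, \tau I)$, a known noise variance `sigma2`, synthetic data, a truncated-power cubic basis with three knots at the quartiles of $x$ (which gives seven basis functions), and the hypothetical helper names `spline_basis` and `gaussian_posterior`. Under these assumptions the posterior of $\beta$ is Gaussian with mean $(H^T H + (\sigma^2/\tau) I)^{-1} H^T y$ and covariance $\sigma^2 (H^T H + (\sigma^2/\tau) I)^{-1}$.

```python
import numpy as np

def spline_basis(x, knots):
    """Truncated-power cubic basis: 1, x, x^2, x^3, and (x - k)^3_+ for each knot."""
    x = np.asarray(x, dtype=float)
    cols = [np.ones_like(x), x, x**2, x**3]
    cols += [np.clip(x - k, 0.0, None) ** 3 for k in knots]
    return np.column_stack(cols)

def gaussian_posterior(H, y, sigma2, tau):
    """Posterior mean and covariance of beta for
    y = H beta + eps, eps ~ N(0, sigma2 I), with the assumed prior beta ~ N(0, tau I)."""
    p = H.shape[1]
    A = H.T @ H + (sigma2 / tau) * np.eye(p)
    mean = np.linalg.solve(A, H.T @ y)
    cov = sigma2 * np.linalg.inv(A)
    return mean, cov

# Synthetic one-dimensional data (illustrative only, not the lecture's data set).
rng = np.random.default_rng(0)
N = 50
x = np.sort(rng.uniform(0.0, 3.0, size=N))
y = np.sin(2.0 * x) + rng.normal(scale=0.3, size=N)

knots = np.quantile(x, [0.25, 0.5, 0.75])   # assumed knot placement
H = spline_basis(x, knots)                  # N x 7 basis matrix
sigma2 = 0.09                               # assumed known noise variance

for tau in (1.0, 1000.0):
    mean, cov = gaussian_posterior(H, y, sigma2, tau)
    mu_hat = H @ mean                                   # posterior mean curve at each x_i
    # Pointwise posterior standard deviation of mu(x_i): sqrt(diag(H cov H^T)).
    sd = np.sqrt(np.einsum("ij,jk,ik->i", H, cov, H))
    lower, upper = mu_hat - 1.96 * sd, mu_hat + 1.96 * sd
    print(f"tau={tau}: widest 95% band = {float(np.max(upper - lower)):.3f}")
```

A small `tau` shrinks the coefficients toward zero, while a very large `tau` makes the prior nearly flat, so the posterior mean curve approaches the least squares fit. Comparing a few values of `tau` this way is one simple form of sensitivity analysis.
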
Bayesian methods: Example

How to choose a prior?

- Difficult in general
- Sensitivity analysis is needed

EM algorithm

It is used to simplify difficult maximum likelihood problems, especially when there are missing data.

Gaussian Mixture Model

Introduce missing variables. But they are unknown.

Iterative method:

1. Get the expectation of the complete-data log-likelihood given the current parameters (E step).
2. Maximize it over the parameters (M step).

Gaussian Mixture Model

EM algorithm

MCMC for sampling from the posterior

MCMC is used to draw samples from some (posterior) distribution.

Gibbs sampling, basic idea: to sample from $p(x_1, x_2, \ldots, x_p)$,

1. Draw $x_1 \sim p(x_1 \mid x_2, \ldots, x_p)$.
2. Draw $x_j \sim p(x_j \mid x_1, \ldots, x_{j-1}, x_{j+1}, \ldots, x_p)$ for $j = 2, \ldots, p$.
3. Repeat.

Gibbs sampler: Example

Gibbs sampling for mixtures

Bagging

- The bootstrap can be used to assess the accuracy of a prediction or parameter estimate.
- The bootstrap can also be used to improve the estimate or prediction itself.
- It reduces the variance of the prediction.

Bagging

If the estimator is linear in the data, then the bagged estimate is just the estimator itself. Take the cubic smoothing spline as an example.

Property: $x$ fixed

Bagging

Bagging is not good for 0-1 loss.

Model Averaging and Stacking

A Bayesian viewpoint

Model Weights

Get the weights from BIC.

Model Averaging

Frequentist viewpoint

Better prediction and less interpretability

Bumping

Find a better single model.

Example: Bumping

Homework

Due May 23

1. Reproduce Figure 8.2.
2. Reproduce Figures 8.5 and 8.6.
3. 8.6 on p. 293 in ESLII_print5.
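
As a supplementary sketch of the Gibbs sampling idea from this lecture (cycle through the coordinates, drawing each one from its conditional distribution given the current values of the others), the code below samples from a standard bivariate normal with correlation `rho`. The target distribution, the function name `gibbs_bivariate_normal`, and the burn-in length are illustrative assumptions, not the example used on the slides. For this target the conditionals are known exactly: $x_1 \mid x_2 \sim N(\rho x_2, 1 - \rho^2)$, and symmetrically for $x_2$.

```python
import numpy as np

def gibbs_bivariate_normal(rho, n_samples, burn_in=1000, seed=0):
    """Gibbs sampler for a standard bivariate normal with correlation rho.

    Each sweep draws x1 from p(x1 | x2), then x2 from p(x2 | x1).
    """
    rng = np.random.default_rng(seed)
    cond_sd = np.sqrt(1.0 - rho**2)      # standard deviation of each conditional
    x1, x2 = 0.0, 0.0                    # arbitrary starting point
    draws = np.empty((n_samples, 2))
    for t in range(burn_in + n_samples):
        x1 = rng.normal(rho * x2, cond_sd)   # draw x1 ~ p(x1 | x2)
        x2 = rng.normal(rho * x1, cond_sd)   # draw x2 ~ p(x2 | x1)
        if t >= burn_in:                     # discard the burn-in sweeps
            draws[t - burn_in] = (x1, x2)
    return draws

draws = gibbs_bivariate_normal(rho=0.8, n_samples=20000)
print("sample means:", draws.mean(axis=0))
print("sample correlation:", np.corrcoef(draws.T)[0, 1])
```

Successive draws are correlated, so the effective sample size is smaller than `n_samples`. The sample means and correlation should still settle near 0 and `rho` for a long enough run.
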