documentation

advertisement

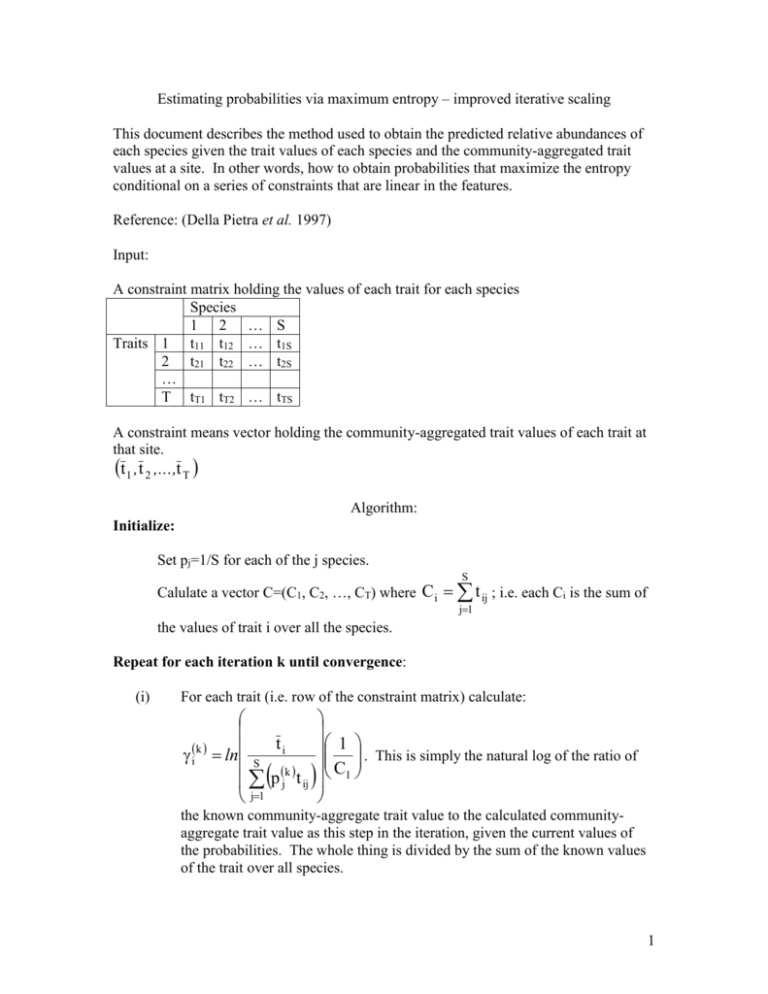

Estimating probabilities via maximum entropy – improved iterative scaling

This document describes the method used to obtain the predicted relative abundances of

each species given the trait values of each species and the community-aggregated trait

values at a site. In other words, it describes how to obtain probabilities that maximize the entropy

conditional on a series of constraints that are linear in the features.

Reference: (Della Pietra et al. 1997)

Input:

A constraint matrix holding the values of each trait for each species

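$$
\begin{array}{c|cccc}
\text{Trait} \backslash \text{Species} & 1 & 2 & \cdots & S \\
\hline
1 & t_{11} & t_{12} & \cdots & t_{1S} \\
2 & t_{21} & t_{22} & \cdots & t_{2S} \\
\vdots & \vdots & \vdots & & \vdots \\
T & t_{T1} & t_{T2} & \cdots & t_{TS}
\end{array}
$$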

A constraint means vector holding the community-aggregated trait values of each trait at

that site.

$\bar{t}_1, \bar{t}_2, \ldots, \bar{t}_T$

Algorithm:

Initialize:

Set $p_j = 1/S$ for each of the $j$ species.

Calculate a vector $C = (C_1, C_2, \ldots, C_T)$ where $C_i = \sum_{j=1}^{S} t_{ij}$; i.e. each $C_i$ is the sum of

the values of trait $i$ over all the species.

Repeat for each iteration k until convergence:

(i)

For each trait (i.e. row of the constraint matrix) calculate:

$$\gamma_i^k = \ln\left(\frac{\bar{t}_i}{\sum_{j=1}^{S} p_j^k t_{ij}}\right)\frac{1}{C_i}$$

This is simply the natural log of the ratio of the known community-aggregated trait value ($\bar{t}_i$) to the community-aggregated trait value calculated at this step in the iteration, given the current values of

the probabilities. The whole thing is divided by the sum of the values

of the trait over all species.

(ii)

Calculate the normalisation term:

$$Z^k = \sum_{j=1}^{S} p_j^k \, e^{\sum_{i=1}^{T} \gamma_i^k t_{ij}}$$

(iii)

Calculate the new probabilities of each species at iteration k+1:

$$p_j^{k+1} = \frac{p_j^k \, e^{\sum_{i=1}^{T} \gamma_i^k t_{ij}}}{Z^k}$$

(iv)

If $\sum_{j=1}^{S} \left(p_j^{k+1} - p_j^k\right)^2 \le \text{tolerance}$ then stop, else repeat steps (i) to (iii).
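The steps above can be sketched in R for a single pass. This is only an illustrative sketch: the one-trait setup (trait values 1 to 6 and a known mean of 4) and all variable names are assumptions chosen for the illustration, not part of the algorithm's definition.

```r
# Illustrative setup (assumed): one trait, six states, known mean of 4
t.mat <- rbind(1:6)            # constraint matrix: T = 1 row, S = 6 columns
t.bar <- c(4)                  # known community-aggregated trait value
S     <- ncol(t.mat)

# Initialize: uniform probabilities and the row sums C_i
p <- rep(1 / S, S)
C <- rowSums(t.mat)            # here C_1 = 21

# (i) gamma_i = ln(known mean / current mean) / C_i
current.mean <- as.vector(t.mat %*% p)       # 3.5 under the uniform start
gamma        <- log(t.bar / current.mean) / C

# (ii) normalisation term Z = sum_j p_j * exp(sum_i gamma_i * t_ij)
weights <- exp(as.vector(gamma %*% t.mat))
Z       <- sum(p * weights)

# (iii) updated probabilities
p.new <- p * weights / Z

# (iv) squared change, to be compared with the tolerance
change <- sum((p.new - p)^2)
```

After one pass the mean implied by `p.new` has moved only slightly from 3.5 toward 4, which is why the steps are repeated until the change falls below the tolerance.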

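Note that convergence also occurs when all of the $\gamma_i$ are equal to 0 since, when this

occurs, then the following is true (to within the tolerance value):

$$Z^k = \sum_{j=1}^{S} p_j^k \, e^{\sum_{i=1}^{T} \gamma_i^k t_{ij}} = \sum_{j=1}^{S} p_j^k \, e^{\sum_{i=1}^{T} 0 \cdot t_{ij}} = \sum_{j=1}^{S} p_j^k \cdot 1 = 1;$$

$$p_j^{k+1} = \frac{p_j^k \, e^{\sum_{i=1}^{T} \gamma_i^k t_{ij}}}{Z^k} = \frac{p_j^k \cdot 1}{1} = p_j^k;$$

$$\sum_{j=1}^{S} p_j^{k+1} t_{ij} = \bar{t}_i;$$

$$\gamma_i^k = \ln\left(\frac{\bar{t}_i}{\sum_{j=1}^{S} p_j^k t_{ij}}\right)\frac{1}{C_i} = \ln(1)\,\frac{1}{C_i} = 0$$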

When convergence is achieved then the resulting probabilities ( p̂ j ) are those that

simultaneously maximize the entropy conditional on the community-aggregate traits and

that maximize the likelihood of the Gibbs distribution:

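$$\hat{p}_j = \frac{e^{\sum_{i=1}^{T} \lambda_i t_{ij}}}{\sum_{j=1}^{S} e^{\sum_{i=1}^{T} \lambda_i t_{ij}}} = \frac{1}{Z}\, e^{\sum_{i=1}^{T} \lambda_i t_{ij}}$$

This means that one can solve for the Lagrange

multipliers (i.e. weights on the traits, $\lambda_i$) by solving the linear system of equations:

$$
\begin{aligned}
\ln(\hat{p}_1) &= \sum_{i=1}^{T} \lambda_i t_{i1} - \ln(Z) \\
\ln(\hat{p}_2) &= \sum_{i=1}^{T} \lambda_i t_{i2} - \ln(Z) \\
&\;\;\vdots \\
\ln(\hat{p}_S) &= \sum_{i=1}^{T} \lambda_i t_{iS} - \ln(Z)
\end{aligned}
$$

i.e.

$$
\begin{pmatrix} \ln(\hat{p}_1) & \ln(\hat{p}_2) & \cdots & \ln(\hat{p}_S) \end{pmatrix}
=
\begin{pmatrix} \lambda_1 & \lambda_2 & \cdots & \lambda_T \end{pmatrix}
\begin{pmatrix}
t_{11} & t_{12} & \cdots & t_{1S} \\
t_{21} & t_{22} & \cdots & t_{2S} \\
\vdots & \vdots & & \vdots \\
t_{T1} & t_{T2} & \cdots & t_{TS}
\end{pmatrix}
-
\begin{pmatrix} \ln(Z) & \ln(Z) & \cdots & \ln(Z) \end{pmatrix}
$$

This system of linear equations has T+1 unknowns (the T values of λ plus ln(Z)) and S

equations. So long as the number of traits is less than S-1, this system is soluble.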

In fact, the solution is the well-known least squares regression: simply regress the values

of $\ln(\hat{p}_j)$ of each species on the trait values of each species in a multiple regression.

The intercept is the value of -ln(Z) and the slopes are the values of λi; these slopes

(Lagrange multipliers) measure by how much the ln(probability), i.e. the ln(relative

abundance), changes as the value of the trait changes.
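The regression step can be illustrated with a small R sketch. The trait values and the two weights below are hypothetical numbers invented for the illustration; the point is only that, when the probabilities have the Gibbs form, `lm()` returns the weights as slopes and -ln(Z) as the intercept.

```r
# Assumed illustration: two traits over five species with known weights
t1     <- c(1, 2, 4, 7, 9)
t2     <- c(3, 1, 5, 2, 8)
lambda <- c(0.3, -0.2)                  # hypothetical Lagrange multipliers

# Build the Gibbs distribution p_j = exp(sum_i lambda_i * t_ij) / Z
unnorm <- exp(lambda[1] * t1 + lambda[2] * t2)
Z      <- sum(unnorm)
p.hat  <- unnorm / Z

# Regress ln(p.hat) on the traits: the slopes recover lambda
# and the intercept recovers -ln(Z)
fit <- lm(log(p.hat) ~ t1 + t2)
est <- unname(coef(fit))                # c(intercept, slope on t1, slope on t2)
```

Here T = 2 and S = 5, so the number of traits is less than S-1 and the regression is well defined.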

Maxent program in R

Finds p that maximizes $-\sum_j p_j \ln p_j$ subject to K equality constraints of the form:

$$\bar{k}_i = \sum_j t_{ij} p_j$$

These are often moment constraints that represent information about the

mean, variance etc.

Example: You know only that the mean value of a 6-sided die is 3.5 and that the “trait”

value assigned to each side is the number of spots (t1j=1,2,3,4,5,6).

Function: Maxent(constraint.means,constraint.matrix)

constraint.means is a vector giving the K equality constraints

constraint.matrix is a matrix giving the trait values of the j states for each equality

constraint.

In the present example there is only one equality constraint: $\sum_j t_{1j} p_j = 3.5$ so

constraint.means=3.5

constraint.matrix= 1 2 3 4 5 6


out<-Maxent(constraint.means=c(3.5),constraint.matrix=rbind(1:6))

> out$probabilities

[1] 0.1666667 0.1666667 0.1666667 0.1666667 0.1666667 0.1666667

[Figure: log(out$probabilities) plotted against 1:6]

If we regress the log of these probabilities against the trait values (i.e. 1,2,3,4,5,6) then

the slope is 0 and the intercept is -1.7918. This means that changing the value of the

trait does not change the probability at all. Note that

$$p_j = \frac{e^{0 \cdot t_j}}{e^{1.7918}} = 0.16666$$

for all j and this is the uniform distribution: 1/6

Example 2: You know only that the mean value of a 6-sided die is 4 and that the “trait”

value assigned to each side is the number of spots (t1j=1,2,3,4,5,6).

out<-Maxent(constraint.means=c(4),constraint.matrix=rbind(1:6))

> out

$probabilities

[1] 0.1031964 0.1228346 0.1462100 0.1740337 0.2071522 0.2465732

$final.moments

[,1]

[1,] 3.998791

$constraint.means

[1] 4

$constraint.matrix

[,1] [,2] [,3] [,4] [,5] [,6]

[1,] 1 2 3 4 5 6

[Figure: log(out$probabilities) plotted against 1:6]

If we regress the log of these probabilities against the trait values (i.e. 1,2,3,4,5,6) then

the slope is 0.1742 and the intercept is -2.4453. Thus, increasing the value of the trait by

1 unit increases the ln(probability) by 0.1742 units.

Note that

$$p_j = \frac{e^{0.1742\, t_j}}{e^{2.4453}}$$

for all j and this is the exponential distribution.
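The regression in Example 2 can be reproduced from the printed probabilities alone. The sketch below assumes nothing beyond those six printed values; the variable names are chosen for the illustration.

```r
# Probabilities printed in Example 2 and the trait values 1..6
p  <- c(0.1031964, 0.1228346, 0.1462100, 0.1740337, 0.2071522, 0.2465732)
t1 <- 1:6

# Regress ln(p) on the trait
fit       <- lm(log(p) ~ t1)
intercept <- unname(coef(fit)[1])   # about -2.4453, i.e. -ln(Z)
slope     <- unname(coef(fit)[2])   # about 0.1742, the weight on the trait

# Rebuild the probabilities from the fitted exponential form
p.rebuilt <- exp(slope * t1) / exp(-intercept)
```

The rebuilt values agree with the printed probabilities to within rounding, which is what the exponential form implies.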

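Example 3: Now, you know only that the mean value of a 6-sided die is 4, that the mean of the

squared values (the second moment) is 14, and that the “trait” value assigned to each side is the number of spots (t1j=1,2,3,4,5,6).

Constraints:

$$\sum_j t_{1j} p_j = 4$$

$$\sum_j t_{1j}^2 p_j = 14$$

The second constraint is a second-moment constraint; it is tied to the variance through $S^2 = \sum_j x_j^2 p_j - \bar{x}^2$. Note that a mean of 4 and a second moment of 14 imply $S^2 = 14 - 4^2 = -2$, so these two constraints cannot both be satisfied exactly; this is consistent with the final moments reported below differing from the constraint means.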

> out<-Maxent(constraint.means=c(4,14),constraint.matrix=rbind(1:6,(1:6)^2))

> out

$probabilities

[1] 1.180483e-10 1.188473e-04 2.223062e-01 7.725863e-01 4.988563e-03

[6] 5.984626e-08

$final.moments

[,1]

[1,] 3.782444

[2,] 14.487328

$constraint.means

[1] 4 14

$constraint.matrix

[,1] [,2] [,3] [,4] [,5] [,6]

[1,] 1 2 3 4 5 6

[2,] 1 4 9 16 25 36

[Figure: log(out$probabilities) plotted against 1:6]

If we regress the log of these probabilities against the two trait values (i.e. t1= 1,2,3,4,5,6 and

t2=1,4,9,16,25,36) then we get

$$\ln(\hat{p}) = -42.9705 + 23.2547\, t_1 - 3.144100\, t_1^2$$

This function is maximal at around t=3.7. Thus, ln(probability) increases with the value of the trait below this point and decreases above it.

Factoring the above equation and solving gives

$$p_j = e^{-3.1441\,\left(13.6670 - 7.39630\, t_1 + t_1^2\right)} \propto e^{-3.1441\,\left(t_1 - 3.69815\right)^2}$$

for all j and this is a discrete version of the

normal distribution.
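The numbers in Example 3 can be checked from the printed probabilities. The sketch below uses only those printed values and the two constraint means (4 and 14); the variable names are assumptions made for the illustration.

```r
# Probabilities printed in Example 3 and the trait values 1..6
p  <- c(1.180483e-10, 1.188473e-04, 2.223062e-01,
        7.725863e-01, 4.988563e-03, 5.984626e-08)
t1 <- 1:6

# Quadratic regression of ln(p) on the trait
fit <- lm(log(p) ~ t1 + I(t1^2))
b   <- unname(coef(fit))        # intercept, linear and quadratic coefficients

# Location of the maximum of the fitted quadratic, -b1 / (2 * b2)
peak <- -b[2] / (2 * b[3])

# Moments implied by the printed probabilities
m1 <- sum(p * t1)               # compare with final.moments[1]
m2 <- sum(p * t1^2)             # compare with final.moments[2]

# Variance implied by the two constraint means: second moment minus mean^2
implied.var <- 14 - 4^2
```

The peak falls near t = 3.7, and `m1` and `m2` match the reported final moments rather than the constraint means. The negative `implied.var` shows that a mean of 4 and a second moment of 14 cannot both hold for any probability distribution.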

Maxent function

Maxent <- function(constraint.means, constraint.matrix, initial.probs = NA)
{
    # Maxent function in R. Written by Bill Shipley
    # Bill.Shipley@USherbrooke.ca
    # Improved Iterative Scaling Algorithm from Della Pietra, S.,
    # Della Pietra, V., Lafferty, J. (1997). Inducing features of random fields.
    # IEEE Transactions Pattern Analysis And Machine Intelligence
    # 19:1-13
    #.............................
    # constraint.means is a vector of the community-aggregated trait values
    # constraint.matrix is a matrix with each trait defining a row and each
    # species defining a column. Thus, two traits and 6 species would produce
    # a constraint.matrix with two rows (the two traits) and 6 columns (the
    # six species)
    if(!is.vector(constraint.means))
        return("constraint.means must be a vector")
    # A plain vector is treated as a single trait (one row)
    if(is.vector(constraint.matrix)) {
        constraint.matrix <- matrix(constraint.matrix, nrow = 1,
            ncol = length(constraint.matrix))
    }
    n.constraints <- length(constraint.means)
    dim.matrix <- dim(constraint.matrix)
    # A single-column matrix is transposed so that species define the columns
    if(dim.matrix[2] == 1 && dim.matrix[1] > 1) {
        constraint.matrix <- t(constraint.matrix)
        dim.matrix <- dim(constraint.matrix)
    }
    if(n.constraints != dim.matrix[1])
        return("Number of constraint means not equal to number in constraint.matrix")
    n.species <- dim.matrix[2]
    # Start from the uniform distribution unless initial probabilities are given.
    # all() keeps the condition a single logical value when a vector is supplied.
    if(all(is.na(initial.probs))) {
        prob <- rep(1/n.species, n.species)
    } else {
        prob <- initial.probs
    }
    # C.values is a vector containing the sum of each trait value over the species
    C.values <- apply(constraint.matrix, 1, sum)
    test1 <- test2 <- 1
    while(test1 > 1e-008 && test2 > 1e-008) {
        # Step (i): current moments and gamma values
        denom <- constraint.matrix %*% prob
        gamma.values <- log(constraint.means/denom)/C.values
        if(sum(is.na(gamma.values)) > 0)
            return("missing values in gamma.values")
        # Steps (ii) and (iii): rescale and normalise the probabilities
        unstandardized <- exp(t(gamma.values) %*% constraint.matrix) * prob
        new.prob <- as.vector(unstandardized/sum(unstandardized))
        if(sum(is.na(new.prob)) > 0)
            return("missing values in new.prob")
        # Step (iv): convergence tests
        test1 <- 100 * sum((prob - new.prob)^2)
        test2 <- sum(abs(gamma.values))
        prob <- new.prob
    }
    list(probabilities = prob, final.moments = denom,
        constraint.means = constraint.means,
        constraint.matrix = constraint.matrix,
        entropy = -1 * sum(prob * log(prob)))
}

Della Pietra, S., V. Della Pietra, and J. Lafferty. (1997). Inducing features of random

fields. IEEE Transactions Pattern Analysis and Machine Intelligence 19:1-13.
