GENERATION OF HUMAN RELIABILITY DATA FOR THE AIR TRAFFIC INDUSTRY

Barry Kirwan, EUROCONTROL

Eric Perrin, EUROCONTROL

Brian Hickling, EUROCONTROL

Huw Gibson, Birmingham University

Ed Smith, DNV Consulting

SUMMARY

Air Traffic Management (ATM) deals with the safe and efficient passage of aircraft across national and

international airspace. In Europe, ATM, as with other industries, must now comply with formalized risk assessment

procedures, for example those embodied in EUROCONTROL Safety Regulatory Requirements (ESARR) 4 [1]. In

order to demonstrate that systems are acceptably safe, a Safety Assessment Methodology (SAM) has been proposed

by EUROCONTROL [2] and is being applied by many countries in Europe. Safety cases for existing or new systems,

or significant system changes, can utilize fault and event tree (or equivalent) approaches to model and quantify risk

as is done in other industries such as nuclear power, chemical, process, and petrochemical. However, there has been

little emphasis to date on Human Reliability Assessment (HRA) in the world of ATM, although it is recognized that

the high degree of safety evident in this industry is mainly due to the human element (in particular the air traffic

controller). The context of this paper therefore concerns the feasibility of HRA in air traffic risk assessments. As a

first step towards HRA in ATM, this paper focuses on the degree to which quantitative human error data can be

generated to substantiate or calibrate an ATM HRA approach.

Two separate exercises are reported. The first concerns collection of human error data from a real-time

simulation involving air traffic controllers and pilots. This study focused on communication errors between

controllers and pilots. The second relates to a formal expert judgment study using direct numerical estimation (also

called Absolute Probability Judgment) and Paired Comparisons protocols to elicit and structure the controller and

pilot expertise.

The results showed that stable HEPs can be provided from real time simulations, at least with respect to

communications activities, and to a lesser extent from expert judgment approaches. These results suggest that the

approach of HRA can be adapted to ATM safety case methodologies and frameworks. An example of a recent

developing air traffic safety case which has utilized the HRA approach is briefly discussed.

The conclusion is that HRA is feasible, but that more data do need to be collected, since ATM dynamics and

safety scenario timings, as well as its operational culture and performance shaping factors, are different to other

industries where HRA application is ‘the norm’.

Copyright © #### by ASME

INTRODUCTION

Human Reliability Assessment (HRA) has been around for some time, notably since the Three Mile Island

nuclear power plant accident in 1979, when the Technique for Human Error Rate Prediction (THERP) [3] became

the predominant technique in use. Since that time, the usage of HRA has spread to other process-control-related

industries (petrochemical; chemical and process). Recently it has also spread to the transportation sector, notably the

rail industry, and the medical and air traffic management domains are increasingly focusing on the management and

assessment of human error [4].

HRA has several main attributes [3, 5], principally determining what can go wrong (human error identification),

determining the risk significance of errors (or correct performance: the kernel of this function being human error

quantification), and identifying how to mitigate human-related risks or assure safe human performance (error

reduction). HRA can be seen as an engineering function (belonging principally to the sub-discipline of Reliability

Engineering) or as a Human Factors function. In truth it belongs to both domains, and normally people carrying out

HRA are ‘hybrid’ practitioners with a mixture of reliability engineering and Human Factors/psychology expertise.

HRA is however usually most clearly associated with its second main function, namely the quantification of human

error likelihood (i.e. how frequently will a particular error actually occur?). This likelihood is usually expressed as a

probability, called a Human Error Probability (HEP), which is effectively the probability of an error per demand. In

theory, and in practice, HRA rests upon a fundamental premise that HEPs can be quantified, i.e. that they exist as

stable quantitative values.

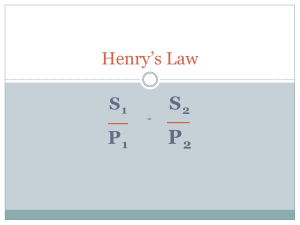

The quantitative expression for an HEP is straightforward and is shown below, based on the idea that an HEP can

be measured by observation (see footnote 1):

HEP = number of errors observed / number of opportunities for error
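As a minimal sketch of how this ratio and its uncertainty bounds might be computed, the Python snippet below uses a Wilson score interval. This is an assumption made for illustration: the paper's footnote mentions a Poisson model and does not state which interval method was used. The function name is hypothetical, and the counts (30 slips in 1705 communication elements) are the controller figures reported in Table 5.

```python
import math


def hep_with_wilson_interval(errors, opportunities, z=1.96):
    """Return (hep, lower, upper) for an observed error count.

    The Wilson score interval is an illustrative choice, not the
    method stated in the paper. z=1.96 gives roughly 95% coverage.
    """
    if opportunities <= 0 or not 0 <= errors <= opportunities:
        raise ValueError("need opportunities > 0 and 0 <= errors <= opportunities")
    p = errors / opportunities
    denom = 1 + z ** 2 / opportunities
    centre = (p + z ** 2 / (2 * opportunities)) / denom
    half = z * math.sqrt(
        p * (1 - p) / opportunities + z ** 2 / (4 * opportunities ** 2)
    ) / denom
    return p, centre - half, centre + half


# Controller slip counts as reported in Table 5.
hep, low, high = hep_with_wilson_interval(30, 1705)
print(f"HEP = {hep:.4f}, approx. 95% interval [{low:.4f}, {high:.4f}]")

# Same ratio with ten times the observations: the bounds narrow.
_, low10, high10 = hep_with_wilson_interval(300, 17050)
print(f"width with 10x data: {high10 - low10:.4f} vs {high - low:.4f}")
```

The second call illustrates the point made in footnote 1: with the same error ratio, more observations give narrower uncertainty bounds.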

Whenever HRA is being applied to a new industry, it must map onto the risk or safety management framework

and indeed the organizational ‘culture’ of that industry. Where such an industry already has a quantitative approach

to risk (e.g. nuclear power), HRA may ‘find its niche’ quickly. Conversely, where risk is managed more qualitatively,

it may encounter resistance and incredulity from those who need to be convinced in order for it to be utilized

effectively. Thus, HRA in the medical domain at the moment is mainly being used qualitatively (for error

identification and reduction purposes). But in ATM, the whole industry in Europe has been undergoing a paradigm

shift in safety assessment, moving from a more ‘implicit’ and qualitative approach to a formalized methodological

framework with quantitatively stated target levels of safety that safety cases need to reach. This leads to formal use of

techniques such as fault and event trees, and in some cases dynamic risk assessment approaches. The former

approaches in particular will need HEPs if human error plays a significant role in ATM safety. In fact, human

performance dominates safe system performance in ATM (controllers literally control where aircraft go, in real time,

using radar and radio-telephony), and so should be a major component in any safety case. Nevertheless there is some

skepticism in the industry as to whether HRA is meaningful, and in particular, whether HEPs are a stable entity.

Since ATM is significantly different to industries where HRA is accepted (ATM is very dynamic and its human

elements operate in a much faster timeframe than, say, nuclear power), the argument that ‘HRA works and is

accepted elsewhere’ is not sufficient.

As a preliminary step to developing a HRA methodology for ATM, it was decided to explore whether HRA’s

basic premise, that HEPs can be quantified and remain stable, also holds in ATM. However, ATM’s culture is more

judgment-based than scientifically based (again, unlike nuclear power, for example). Many of those in key

management positions today were once controllers themselves and would be more convinced by controllers saying

that HRA’s estimates were reasonable, than by reading a scientific or academic report. Therefore a two-pronged

approach was taken to explore the applicability of HRA to ATM. The first was to determine if HEPs could be

collected in realistic (high fidelity) real-time simulations of ATM operations (see footnote 2). The second approach was to carry out

expert judgment procedures to quantify HEPs for an ongoing ATM safety case, using controllers and pilots as

subjects. The hypotheses were simple, and capable of being rejected (i.e. scientifically they are ‘falsifiable’):

1 Assuming that errors occur in a stochastic fashion, for example following a Poisson distribution. The confidence limits around such HEPs

of course depend on the respective sizes of the numerator and denominator, more observations leading to higher confidence and narrower

uncertainty bounds around the resultant HEP.

2 Although the pilot situation is less high fidelity, using ‘pseudo-pilots’, trained pilots sitting at a special terminal with scripts and direct

communications with the air traffic controllers.

Can robust HEPs be elicited for ATM safety related tasks?

Are HEPs as may be used in safety cases credible to controllers and pilots?

If the answer to either is ‘no’, then this bodes badly for HRA in this work domain, and perhaps other approaches

to manage human related risk must be investigated. If the answer to both is ‘yes’, then it means HRA in ATM is a

feasible proposal. It does not of course mean the road to full acceptance and implementation will be easy, or even

acceptable, but that is beyond the scope of this paper, which seeks only to establish feasibility at this early stage of

HRA in ATM. The remainder of this paper therefore presents the abridged results of the two studies, showing indeed

that the premise for HRA in ATM appears to be supported. References to the full studies are given. A final

concluding section outlines a way forward for developing HRA as an effective approach in ATM.

STUDY 1: HEP DATA COLLECTION IN REAL-TIME SIMULATION (CO-SPACE) [6]

The primary principle in air traffic management is to keep aircraft separate, by certain minimum distances both

vertically and horizontally. This is currently generally the controllers’ task when dealing with civil/commercial

traffic. This task can lead to high workload in certain high density areas, as different ‘streams’ of aircraft are

approaching busy airports. An option is therefore to allow the crew of one aircraft some degree of autonomy in

separating their aircraft from the one in front, for example, via the use of specialized cockpit equipment. In the

EUROCONTROL Co-space project, therefore, a new allocation of spacing tasks between controller and flight crew

is envisaged as one possible option to improve air traffic management. It relies on a set of new “spacing”

instructions, whereby the flight crew can be tasked by the controller to maintain a given spacing to a target aircraft.

The motivation is neither to “transfer problems” nor to “give more freedom” to flight crew, but really to identify a

more effective task distribution beneficial to all parties, without modifying responsibility for separation provision. In

Co-Space, airborne spacing assumes the availability of airborne Automatic Dependent Surveillance-Broadcast (ADS-B) along

with cockpit automation (Airborne Separation Assistance System; ASAS). ASAS is a set of new ATC instructions, to

allow, under the right conditions, the delegation of separation from ATC to pilots. No significant change on ground

systems is initially required. These procedures and systems are under development in the Co-Space project, and in

parallel a number of extensive real-time simulations (RTS) are being conducted to evaluate the adequacy of the

resulting system performance. These RTS are carried out to assess usability and usefulness of time-based spacing

instructions in TMA under very high traffic conditions, with and without the use of spacing instructions. In the

pursuit of HRA feasibility, and also because one day the airborne separation assurance concept may be the subject of

its own quantified safety case, it was agreed by the project team that HEP data collection could be attempted during

the simulation.

The Real Time Simulation (RTS) involved Approach controllers from Gatwick, Orly and Roma. They employed

two generic approach sectors derived from an existing environment (Paris Terminal Maneuvering Area or TMA). Each

air traffic control ‘sector’ (an area of airspace) was feeding into a single landing runway airport and was controlled by a

unique Approach position manned with an executive and a planning controller. The role of each executive was to

integrate two flows onto the final approach, and to transfer them to Tower controllers. Seven air traffic controller

(ATCO) positions, including the TMA one, were used for each one-hour simulated session. The traffic was pre-sequenced when entering each approach sector via two initial approach ‘fixes’. The traffic followed standard

trajectories. No departure traffic and no ‘stacks’ (vertical holding points, as used in some airports) were simulated. The

RTS utilized paper (rather than the new electronic) strips and a separate arrival manager tool. Controllers talked to pilots

using standard radio-telephony headsets.

Results

During the simulation, a total of 613 communication ‘transactions’ between controllers and pilots were analyzed,

which contained 3,411 communication elements, and a number of errors. Tables 1 & 2 show the types of error made

and by whom (controller or pilot), and how the errors were recovered (Table 2). This typology is in the context of air

traffic operations. Table 3 shows the types of error that occurred in more general terms – such information is useful

when trying to determine how to improve human performance. Table 3 shows in particular that simple numerical

errors are common. This is seen in practice when two aircraft having a similar call sign occur in the same controller’s

airspace in real life, leading to what is called a ‘call sign confusion’ error. Such an error can lead to a loss of safe

standard separation distance between aircraft if the controller gives the right message to the wrong aircraft. A number

of airlines today work hard with the ATM community to try to prevent similar call signs occurring in the same sector

of airspace, to reduce the frequency of this type of error and so avoid its potential consequences.

Table 4 shows errors with and without ASAS. Chi-square analysis was undertaken to identify if there were any

significant differences between sessions where ASAS was used and those where ASAS was not used. No significant

differences were identified. An equivalent analysis was also undertaken to identify if transactions which contained an

ASAS instruction were more susceptible to communication errors than those which did not. Again, the analysis did

not identify any significant differences between the two cases. This suggests that ASAS usage does not impact on the

likelihood of communication errors. Table 5 shows errors by controllers and pilots (in this case ‘pseudo-pilots’ –

however these are nevertheless actual qualified pilots, but working in a computer simulator workstation rather than a

cockpit or cockpit simulator). The error rates are in this case strikingly similar even though the task environment and

training are very different.

Table 1. Errors during the ASAS Real Time Simulation

Error type

Controller

30

Slip

No read back

No response

Contradict previous

instruction

Query

Context required

Use of non-English language

Change of plan

Break

Station calling

Expedite

Total

Pseudo-pilot

31

4

1

1

2

1

4

1

17

2

3

2

63

Total

61

4

2

2

10

8

1

Percentage

52%

3%

2%

2%

9%

9%

2%

14%

2%

3%

2%

100%

11

12

2

17

2

3

2

118

55

Table 2. How errors were recovered

Error type

Slip

No read back

No response

Contradict previous instruction

Query

Context required

Use of non-English language

Change of plan

Break

Station calling

Expedite

Total

None

8

1

Recovery

Other

Later

5

4

2

1

2

2

Self

44

Not Identified

11

2

9

2

17

2

3

2

81

13

4

10

5

10

Total

61

4

2

2

11

12

2

17

2

3

2

118

Table 3. Details of error nature

| Slip type | Frequency | Percentage |
|---|---|---|
| Incorrect numeric element within a numeric (e.g. 516 for 515) | 41 | 67% |
| Whole numeric substituted for another (e.g. say 567 for 123) | 1 | 2% |
| Numeric omission (e.g. say 1233 for 12335) | 1 | 2% |
| Phonetic alphabet | 3 | 5% |
| Company identifier (e.g. Britannia for Ryanair) | 5 | 8% |
| Pilot read back of controller use of 'please' | 1 | 2% |
| Repetition of phrases or call signs | 4 | 7% |
| Errors in words/sequences in a standard phrase (e.g. ‘its er two nine er flight level two nine zero’) | 5 | 8% |
| Total | 61 | 100% |

Table 4: Communication errors and use of ASAS

| | Session used ASAS | Session did not use ASAS |
|---|---|---|
| All errors | 71 | 47 |
| Slips | 39 | 22 |
| Number of elements | 1921 | 1490 |
| Likelihood of error | 0.0370 | 0.0315 |
| Likelihood of slips | 0.0203 | 0.0148 |

Table 5: Controller versus pseudo-pilot slip rates

| | Controllers | Pseudo-Pilots |
|---|---|---|
| Slips | 30 | 31 |
| Number of elements | 1705 | 1706 |
| Likelihood of slips | 0.018 | 0.018 |
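The chi-square comparisons described for Tables 4 and 5 can be sketched as follows. This is an illustrative Pearson chi-square test on a 2x2 table (error / no error by condition) without continuity correction; the paper does not say which variant was applied, so that choice and the function name are assumptions. The counts are those given in Tables 4 and 5.

```python
import math


def chi_square_2x2(errors_a, total_a, errors_b, total_b):
    """Pearson chi-square test (1 degree of freedom, no continuity
    correction) comparing two error proportions.

    Returns (statistic, p_value).
    """
    observed = [
        [errors_a, total_a - errors_a],
        [errors_b, total_b - errors_b],
    ]
    row_totals = [sum(row) for row in observed]
    col_totals = [observed[0][j] + observed[1][j] for j in range(2)]
    grand_total = sum(row_totals)

    statistic = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (observed[i][j] - expected) ** 2 / expected

    # For 1 degree of freedom the upper tail probability of the
    # chi-square distribution equals erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Table 4: all errors and slips, with versus without ASAS.
stat_all, p_all = chi_square_2x2(71, 1921, 47, 1490)
stat_slips, p_slips = chi_square_2x2(39, 1921, 22, 1490)
# Table 5: controller versus pseudo-pilot slips.
stat_role, p_role = chi_square_2x2(30, 1705, 31, 1706)

for label, p in [("all errors", p_all), ("slips", p_slips), ("role", p_role)]:
    print(f"{label}: p = {p:.3f}, significant at 5%: {p < 0.05}")
```

Under these assumptions none of the three comparisons reaches the 5% significance level, which is consistent with the finding of no significant differences reported above.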

How do the data compare with data collected in the field?

Table 6 presents equivalent data from this study as the final column against data from actual studies for different

UK and US airspace types (i.e. studies which have measured human error rates in the field). The data do provide

very similar human error probabilities to the other studies. This suggests that the communication performance in the

trial is similar to that experienced during live Air Traffic Control.

This study as a whole found that HEPs can be collected, and that they appeared to exhibit the property of

stability. As a key finding for example, the likelihood of communication slips (e.g. say 5 4 6 when 5 6 4 was

intended) was shown to be constant across a range of conditions. Differences were not identified between error

rates with/without ASAS, between ASAS and other communications, between different instruction types, between

different controllers or between controllers and pseudo-pilots. These results would therefore support the required

premise for HRA, at least for the task of communication, which is itself a safety critical one in the ATM industry.

A separate study [7] presents unrecovered readback error rates for ATC communication of 0.006 from a number of

field studies. The Co-Space simulator data provides an unrecovered readback error rate of 0.003. While low cell

counts prohibit statistical comparisons, these data are certainly in the same 'ball park' and a tentative conclusion is

that the performance in the simulation is comparable with data collected in the field.

STUDY 2: HEP DATA GENERATION USING EXPERT JUDGMENT: THE GBAS CAT-I SAFETY CASE

GBAS: Ground-Based Augmentation System

CAT-I/II/III operations at European airports are presently supported by Instrument Landing Systems (ILS).

The continued use of ILS-based operations as long as operationally acceptable and economically beneficial is

promoted by the European Strategy for the planning of All Weather Operations (AWO). However, in the ECAC

(European Civil Aviation Conference) region, the forecast traffic increase will create major operational constraints at

all airports, in particular in Low Visibility Conditions (LVC) with the decreased capacity of runways. Consequently,

the technical limitations of ILS such as Very High Frequency (VHF) interference, multipath effects due to, for

example, new building works at and around airports, and ILS channel limitations will be a major constraint to its

continued use. Within this context GBAS is expected to maintain existing all weather operations capability at

CAT-I/II and III airports. GBAS CAT-I (ILS look-alike operations) is seen as a necessary step in order to extend its

use to the more stringent operations of CAT-II/III precision approach and landing. Initial implementation of GBAS

could be achieved in ECAC as early as 2008.

A safety case is therefore being prepared for GBAS to see if it can be implemented. Within this developing

safety case a number of potentially critical human errors were identified in the associated fault and event trees. No

real-time simulation for GBAS has yet occurred, and its use is different from ILS. Furthermore, since ILS was

implemented a long time ago, prior to the current safety paradigm, there was no safety case for ILS with which to

compare identified human errors. Consequently, since there were no prior identified HEPs and none available from

the real world (GBAS is not yet implemented) and few relevant ones from ILS operation, it was decided to attempt to

use expert judgment approaches to quantify some of the key HEPs for the GBAS safety assessment.

In HRA a number of approaches are recommended for using expert judgment [4, 8]. This study chose two

methods which have formerly been used successfully in a new HRA application area (offshore petrochemical) [9].

The approaches are Absolute Probability Judgment and Paired Comparisons, as outlined below. In addition, the

Human Error Assessment and Reduction Technique (HEART) [10] was applied to address those HEPs that were not

tackled by the APJ session and also to overlap with the APJ figures so that effective cross checking between the

techniques could be conducted.

Absolute Probability Judgment (APJ)

Absolute Probability Judgment [4, 8] is the most direct approach to the quantification of human error

probabilities (HEPs). It relies on the use of experts to estimate HEPs based on their knowledge and experience. The

method used in this project was the Consensus Group Method, following these steps:

1. Task statements were prepared in the form of a booklet with room for individuals to enter their estimate for each task plus any key assumptions they made.
2. During the session, each scenario and each task was explained to the experts.
3. The experts were then given time to enter their individual estimate for each task.
4. A discussion was held in which each expert gave a view, usually starting with those who had given the most extreme (high and low) estimates.
5. The group was then facilitated in order to try to obtain a consensus value. If that was not possible, each individual would be asked to revisit their original estimates in light of the discussion and revise if necessary.
6. Following the session all the booklets were collected and a list of the consensus values and the aggregated individual estimates (where consensus failed) was made.

A dry run session was held with three ex-controllers at the EUROCONTROL Experimental Centre (EEC) on

18th August 2004. This session was intended to test out some of the task questions and the general method. It

provided a valuable indication of the time necessary for such sessions in an ATM context and led to a revised

question set making the language more appropriate for controllers and pilots.

Prior to the full session, briefing material was sent to all participants explaining the objectives and the format of

the exercise. The full expert session was held on 30th November and 1st December 2004 at the EUROCONTROL

Experimental Centre in Bretigny, South of Paris (France). The first day was dedicated to pilot estimates of error

probabilities; the second day focussed on estimates by controllers and a ground system maintenance specialist. In the

session itself, prior to beginning the process described above, an introduction and warm up exercise was carried out.

The introduction gave a brief history to the method and explained how the session would be run. The warm up

exercise involved using the templates to estimate errors involving the use of a car. This helped the specialists

understand how the process worked.

Paired Comparison (PC)

This technique does not require experts to make any quantitative assessments. Rather the experts are asked to

compare a set of pairs of tasks and for each pair the expert must decide which has the higher likelihood of error. In

the context of this study this technique was used to test “within-judge consistency”. Experts may exhibit internal

inconsistencies that will be shown as “circular triads” (this is where, for example, an expert successively says that A

is greater than B, B is greater than C and C is greater than A, effectively saying that A is greater than itself). It is

therefore necessary to determine the number of circular triads and decide if this is so high that the results should be

rejected, and there are mathematical approaches to determining how many triads are ‘allowable’ before the judgment

is considered unsound [8].
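To make the idea of a circular triad concrete, the sketch below counts them by brute force over every group of three tasks. It is a hypothetical illustration: the function names and the representation of preferences as (more likely, less likely) tuples are assumptions, and the statistical threshold for how many triads are 'allowable' is not reproduced here.

```python
from itertools import combinations


def number_of_pairs(n):
    """Number of distinct pairs among n tasks: n(n-1)/2."""
    return n * (n - 1) // 2


def count_circular_triads(items, beats):
    """Count circular triads in a complete set of paired comparisons.

    beats is a set of (higher, lower) tuples, one per pair of items,
    meaning the expert judged `higher` to have the greater error
    likelihood. A triad is circular when each of its three items
    is preferred exactly once within the triad.
    """
    count = 0
    for a, b, c in combinations(items, 3):
        wins = {a: 0, b: 0, c: 0}
        for x, y in ((a, b), (a, c), (b, c)):
            if (x, y) in beats:
                wins[x] += 1
            elif (y, x) in beats:
                wins[y] += 1
            else:
                raise ValueError(f"missing comparison for {x!r} and {y!r}")
        if all(w == 1 for w in wins.values()):
            count += 1
    return count


tasks = ["A", "B", "C"]
consistent = {("A", "B"), ("B", "C"), ("A", "C")}    # A > B > C
inconsistent = {("A", "B"), ("B", "C"), ("C", "A")}  # A > B > C > A

print(count_circular_triads(tasks, consistent))
print(count_circular_triads(tasks, inconsistent))
print(number_of_pairs(7))
```

A fully transitive set of judgments yields no circular triads, whereas the A > B > C > A example from the text yields one.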

In the ‘dry run’ seven questions were used with a total of 21 pair comparisons (see footnote 3) to be made. The comparisons

were randomized for each subject. The participants were asked to answer the questions without delay and without

reference back to previous answers. Only three questions were put on each page of the answer booklet to reduce the

chance of referring back. The three participants found the exercise reasonably straightforward and no significant

changes were identified for the full session itself.

Results

Paired Comparisons

Applying the Paired Comparisons calculation method one person from the pilot group and one person from the

controller group were ‘screened out’ (too many circular triads). The criterion chosen was if there was more than a

10% chance that the preferences had been allocated purely at random then the results were set to one side. Thus, for

example in the case of the pilot group, if the number of circular triads (c) was 3 or more then there was a greater than

10% chance that the ordering was random.

Absolute Probability Judgment

It proved difficult for the participants to arrive at a consensus when directly estimating HEPs. Following

discussion a number of participants were willing to change their HEP values (e.g. if they were presented with new

information, or they had misunderstood an aspect of the context of the error). However, this very rarely led to a

consensus across the whole group. Therefore aggregation of individual estimates was required and geometric

means were produced. The geometric mean (GeoMean) is obtained by multiplying ‘n’ probabilities together and

then taking the nth root. This approach is used because an arithmetic mean of probabilities across several orders of

magnitude is biased towards the higher probabilities (consider for example the arithmetic and geometric means for

the following values: 0.1, 0.01 and 0.001, which are 0.037 and 0.01 respectively). A median can also be used, but in this case the geometric mean was chosen.
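The difference between the two averages can be checked with a few lines of Python. This is a small sketch using the three example values from the text; the function name is hypothetical, and the geometric mean is computed through logarithms, which is equivalent to taking the nth root of the product.

```python
import math


def geometric_mean(probabilities):
    """Geometric mean of strictly positive probabilities.

    Computed as exp(mean(log p)), which equals the nth root of
    the product of the n values.
    """
    if not probabilities or any(p <= 0 for p in probabilities):
        raise ValueError("need a non-empty list of positive values")
    log_sum = sum(math.log(p) for p in probabilities)
    return math.exp(log_sum / len(probabilities))


estimates = [0.1, 0.01, 0.001]  # example values from the text
arithmetic = sum(estimates) / len(estimates)
geometric = geometric_mean(estimates)

print(f"arithmetic mean = {arithmetic:.4f}")
print(f"geometric mean  = {geometric:.4f}")
```

The arithmetic mean is pulled towards the largest estimate, while the geometric mean sits at the middle order of magnitude.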

Some excerpts from the results are shown in Table 6. In several cases the ranges between maximum and

minimum estimates were too large, so that little confidence could be attached to the aggregated values. In other

cases cross checks against the little available historical data (input and output) indicated under-prediction (optimism)

from APJ. The values refer to events in the risk model that need to be quantified. They relate to the following three

types of errors:

C1: Capturing false information about final approach path

D1: Failure to maintain a/c on final approach path

F1: Selecting wrong runway

It can be seen that there was a considerable range in the estimates (estimates within a factor of ten are desirable).

There were several factors contributing to this. First, it was a diverse group, and they were of varied experience.

Secondly, their familiarity with GBAS was also variable. Third, expert judgment work was new for all these experts,

and there was only one day to get used to the process and make the assessments. Perhaps the most significant factor

3 The combinations involve comparing every question with every other one and removing the double counting of order. The

number is calculated by n (n-1)/2 = 21 in the case of 7 questions and 15 in the case of 6 questions.

however, for both the pilot and the controller sub-group, was the detailed nature of the assessments required. The

‘granularity’ of the assessments, namely specific errors in very specific contexts, was more precise than they were

used to. In particular the experts needed significant contextual information. Furthermore, there was a tendency for

the experts in both groups to think in terms of failure scenarios rather than concentrating on a single failure within a

cutset, where such a failure on its own would be unlikely to lead to complete failure. This appeared to make the

experts optimistic, since they believed the numbers they were quantifying would mean system failure, rather than a

contribution (i.e. necessary but insufficient) towards system failure.
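The distinction between a single failure and a complete cutset can be illustrated with a short calculation. The sketch below assumes independent basic events, so that the cutset probability is the product of its members, and every number and event name in it is hypothetical, chosen only for illustration and not taken from the GBAS risk model.

```python
import math


def cut_set_probability(event_probabilities):
    """Probability that all events in a cutset occur together,
    assuming the events are independent (an illustrative assumption)."""
    return math.prod(event_probabilities)


# Hypothetical cutset: a human error plus the failure of a barrier
# that would otherwise catch it. Values are invented for illustration.
hypothetical_cut_set = {
    "pilot error (HEP)": 2e-4,
    "error not detected by monitoring": 1e-2,
}

system_contribution = cut_set_probability(hypothetical_cut_set.values())
print(f"HEP alone:            {hypothetical_cut_set['pilot error (HEP)']:.1e}")
print(f"cutset contribution:  {system_contribution:.1e}")
```

In this invented case the cutset contribution is two orders of magnitude below the HEP itself, which shows why an expert who equates the single error with system failure may give an estimate that is too low for the error alone.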

Table 6. Pilot APJ Session – extract of results

Excluding PC test (unsound expertise discarded)

| Potential Error {Code in Risk Model} | Maximum | Minimum | Range | Geometric Mean |
|---|---|---|---|---|
| C1a | 1.1E-03 | 2.0E-05 | 55 | 2.1E-04 |
| C1b | 2.5E-04 | 1.0E-05 | 25 | 3.5E-05 |
| D1 | 1.0E-03 | 1.0E-04 | 10 | 4.3E-04 |
| F1a | 4.0E-04 | 1.0E-05 | 40 | 6.9E-05 |
| F1b | 1.0E-03 | 1.0E-04 | 10 | 4.0E-04 |
| F1c | 1.0E-03 | 1.0E-04 | 10 | 4.6E-04 |
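The Range column in Table 6 is the ratio of the maximum to the minimum estimate. A short sketch of that check is given below, flagging the errors whose spread exceeds the factor of ten described as desirable in the text. The variable and function names are hypothetical; the values are those of Table 6.

```python
# (maximum, minimum) expert estimates per error code, from Table 6.
table6 = {
    "C1a": (1.1e-3, 2.0e-5),
    "C1b": (2.5e-4, 1.0e-5),
    "D1": (1.0e-3, 1.0e-4),
    "F1a": (4.0e-4, 1.0e-5),
    "F1b": (1.0e-3, 1.0e-4),
    "F1c": (1.0e-3, 1.0e-4),
}

DESIRED_FACTOR = 10  # "estimates within a factor of ten are desirable"


def range_factor(maximum, minimum):
    """Ratio between the highest and lowest expert estimate."""
    return maximum / minimum


# A small relative tolerance avoids flagging ratios that equal 10
# up to floating-point rounding.
too_wide = [
    code
    for code, (maximum, minimum) in table6.items()
    if range_factor(maximum, minimum) > DESIRED_FACTOR * (1 + 1e-9)
]

for code, (maximum, minimum) in table6.items():
    print(f"{code}: range factor {range_factor(maximum, minimum):.0f}")
print("wider than a factor of ten:", too_wide)
```

The geometric means in the table cannot be recomputed from the maximum and minimum alone, since the individual expert estimates are not listed.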

The lessons learned with this study were several. First, more time is needed, both to get used to the process, and

also to fully understand what is being quantified. Experts need to understand when HEPs are effectively conditional

and only part of a cutset, not leading to total failure. This requires more time in understanding the fault or event tree,

and suggests a basic risk assessment appreciation, which could be provided during an extended warm-up or training

period. A further lesson is the need for true experts, e.g. current pilots and controllers (and not retired ones or very

new ones), and sufficient in number to gain evidence for statistical clustering of values around a mean HEP value. A

final lesson learned is that having some existing data, and using it during the training session, acts as a good means

of ‘calibration’ of experts.

Despite the difficulties, the overall process for both controllers and pilots showed that some good estimates

could be provided and used in actual safety cases (in several cases experts were asked to quantify HEPs or other

reliability estimates for which the true observed values were known, and there was a good degree of agreement by

some of the experts). The experts themselves also ‘warmed’ to the process, seeing the benefits of being involved and

also the necessity of carrying out such quantitative analysis. They also felt it important to have their expertise

informing such quantitative HRA, rather than being left to safety analysts who may not understand the real

operational context and factors that influence pilot and controller behavior. In short, they saw their role in HRA.

The developing GBAS safety case (currently at the Preliminary System Safety Assessment [PSSA] stage [11])

has undergone a favorable review in the industry. The GBAS PSSA has used a judicious mixture of actual data,

expert judgment, and use of a non-industry specific HRA technique (HEART, [10]) to address the system’s key

human involvements. The benefits for the safety case in terms of a better and more realistic representation of human

performance, and resulting specific safety requirements on the future GBAS system, have vindicated the approach. In

particular, the HRA approach as a whole led to qualitative safety requirements that were judged as necessary by the

Air Navigation Service Provider stakeholders. The mechanics, benefits and limitations of practical HRA in ATM

have therefore been demonstrated, and as a whole it has led to added safety value.

CONCLUSIONS & FUTURE DIRECTIONS

This paper has concerned itself with the feasibility of HRA in ATM. Two studies have lent support to a

fundamental premise of HRA, namely that the HEP exists and can be a stable entity, and that the main ‘players’ in

ATM (pilots and controllers) can see the benefits of HRA and their role with respect to HRA. These results suggest

that HRA can indeed function usefully in ATM.

In order to realize an integration of HRA into ATM safety assessment practice, several things must happen. First,

more data must be elicited, for data calibration purposes, and to extend the premise of HRA beyond communications.

Second, there must be a better understanding of the contextual factors and their role in influencing human

performance. These factors, known generically in HRA as Performance Shaping Factors, may differ in ATM

compared to other industries. Work is now ongoing in EUROCONTROL to achieve a better understanding of such

factors based on analysis of incidents. This work will lead to a database of some HEPs and associated contextual

factors, which will help assessors know the approximate ‘ballpark’ value for a specific controller task type, and what

factors they should consider when carrying out assessments for new or existing systems. Ultimately, a specific HRA

technique should probably be developed for ATM, since such databases will never be large enough to answer all

questions, and may be too ‘rooted’ in current operations to enable sufficient extrapolation to next generation ATM

concepts.

In conclusion therefore, HRA is feasible in ATM, and can add valuable insights in ATM safety cases. There now

remains much work to be done to migrate from a state of ‘feasibility’ to one of full integration of HRA into safety

and risk management of current and future ATM systems. But the first steps have been taken.

ACKNOWLEDGMENTS & DISCLAIMER

The authors would like to express thanks to the Co-Space team and the GBAS participants for their invaluable

contributions to this study, and Oliver Straeter who is now working with the team on the further development of a

data-informed HRA approach for EUROCONTROL. The opinions expressed in this paper are however those of the

authors and do not necessarily reflect those of the organizations mentioned above or affiliated organizations.

REFERENCES

[1] EUROCONTROL, ESARR4, Risk Assessment and Mitigation in ATM, Edition 1.0, 5th April 2001,

http://www.eurocontrol.int/src/gallery/content/public/documents/deliverables/esarr4v1.pdf

[2] http://www.eurocontrol.int/safety/GuidanceMaterials_SafetyAssessmentMethodology.htm

[3] Swain, A.D. and Guttmann, H.E., 1983, Human reliability analysis with emphasis on nuclear power plant

applications. USNRC/CR 1278, Washington DC 20555.

[4] Kirwan, B. Rodgers, M. Schaefer, D. (Eds.), 2005, Human Factors Impacts in Air Traffic Management.

Ashgate Publishing, Aldershot.

[5] Kirwan, B. (1994) A guide to practical human reliability assessment. London: Taylor & Francis.

[6] Gibson, W.H., and Hickling, B., 2006 (in press), Feasibility study into the collection of human error

probability data, EEC Report, EUROCONTROL Experimental Centre, Bretigny sur Orge, BP 15, F-91222 CEDEX

France.

[7] Gibson, W.H. Megaw, E.D., Young, M and Lowe, E. (2006) A Taxonomy of Human Communication Errors

and Application to Railway Track Maintenance. Cognition, Technology and Work.

[8] Seaver, D.A. and Stillwell, W.G. (1983) Procedures for using expert judgement to estimate human error

probabilities in nuclear power plant operations. NUREG/CR-2743, Washington DC 20555.

[9] Basra, G. and Kirwan, B. (1998) Collection of offshore human error probability data. Reliability Engineering

and System Safety, 61, 77-93.

[10] Williams, J.C., 1986, "HEART - A Proposed Method for Assessing and Reducing Human Error",

Proceedings of the 9th "Advances in Reliability Technology" Symposium, University of Bradford.

[11] EUROCONTROL, 2005, Category-I (CAT-I) Ground-Based Augmentation System (GBAS) PSSA Report,

v1.0, 18th Nov. contact eric.perrin@eurocontrol.int
