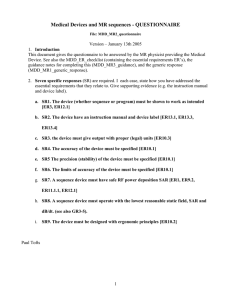

MDD_MR3_guidance


Medical Devices and MR sequences – GUIDANCE
File: MDD_MR3_guidance
Version – May 16th 2005

1. Introduction
a. Orientation: This document contains guidance notes intended to support the MR physicist in answering the questionnaire (MDD_MR2_questionnaire).
b. Background: The EU Medical Devices Directive (MDD) places some obligations on anyone who gives software (such as an MR sequence or an analysis program) to an external organisation. The obligations also constitute ‘best practice’, which improves the scientific quality of our devices. Geoff Cusick, from the Department of Medical Physics and Bioengineering, UCLH, gave a very informative talk to the Division on this topic on May 10th 2004. The document MDD_MR_ER gives the Essential Requirements (ER’s) arising from the MDD. This document addresses the issues arising from work carried out by physicist members of the Division, and sets out to define a simple procedure for validating such products within the context of the MDD.
c. Aims of this project:
i. Understand the MDD and its Essential Requirements (ER’s).
ii. Identify the subset of ER’s relevant to MR (and particularly to quantitative MR, qMR, seen as a measuring device).
iii. Develop MR responses to the MDD, both generic and specific to a particular device.
iv. Develop a friendly checklist for MR physicists who dread approaching the MDD itself.
d. Procedure/timeline/jobs: This is still a draft procedure, and feedback from various sources is needed to establish its validity (to ensure we are operating within ‘best practice’, as seen by the MR community). Specifically:
i. Within the Division of Neuroradiology and Neurophysics, each group has a representative responsible for these issues. These representatives should be convened to test the procedure and provide input.
ii. Feedback from UCLH Trust (via Geoff Cusick), including existing practice for measuring devices in other areas (e.g. electrical).
iii. IPEM MR Special Interest Group (SIG), and any national bodies they recommend.
iv. UCL HR (for issues of employer/employee liability).
v. I presented this work at an IPEM meeting on Software as a Medical Device on November 12th 2004. A summary of the feedback is given in appendix 5.
vi. More information is needed on the regulatory bodies’ limits on B1 (SAR) and dB/dt.
vii. We have to ‘beta-test’ this procedure internally on a few people and devices.
viii. Eventually the procedure will probably be suitable for web and journal publication (MAGMA?).
ix. We should (or could) start adding ‘validation’ paragraphs to the methods sections of our journal papers, using information from this MDD procedure.
x. We may need to set up formal collaborations, honorary contracts etc. to prevent some sequences from becoming formal medical devices, or to limit liability.
xi. A document will be presented to the Division in January 2005.
xii. The generic and guidance text should be applicable to IoN/Queen Square machines from various manufacturers.
e. More background information is given in appendix 1.

2. General guidance notes for completing the questionnaire
a. These notes are proposed as part of developing good practice. Some are suggested as a result of errors that have been made locally.
b. All devices should have an instruction manual of some kind; this could be a few lines of on-line help and/or a pointer to a fuller document.
c. When errors are made, they should be analysed and learnt from. The procedures should be modified to at least prevent such known errors from happening again. Some case studies are given in appendix 3. See also the cases from eWEEK (see references at end).
d. Devices should have ‘test modes’, in which the likely errors can be anticipated and detected. The case studies in appendix 3 suggest ‘test modes’ for specific devices.
e. Before a device is handed over for routine use by non-technical people, an independent person should examine it and check its operation.
f. For quantitative MR we are in essence producing a measuring instrument, and should follow, as far as possible, the traditions that have built up in that area.
These include specifying the accuracy and precision (systematic and random errors), or using the modern approach of uncertainty and an uncertainty budget. We cannot claim to be producing qMR tools unless we have followed this practice.
g. Human error is always present (see the case studies!). Design has to recognise and accept its presence in prototype devices, by including test procedures to detect it.
h. Testing software and sequences: see Gareth Barker’s advice in his email of 28 June 2004 (appendix 4).

3. Specific guidance notes for the questionnaire, for each Specific Response (SR)
These are prompts (questions) to drive your SR. For non-quantitative devices (e.g. a visualisation sequence), ignore SR3-6.
a. SR1. The device (whether sequence or data analysis program) must be shown to work as intended [ER3, ER12.1, ER13.6p]
i. Technical description: Name the device. What type of device is it? Describe its design. Why was it developed? What kind of output does it give? How does it work?
ii. Anticipate the most likely errors (up to three) that could occur, and how the chance of them happening can be minimised. Look at the case studies where errors have occurred. Recognise that human error is always present.
iii. Provide test modes to anticipate problems (including human error through inadequate training). For a sequence, this could mean varying one or more of the user-set parameters (the ones the user is expected to alter as part of using the device) and checking that the response is as expected. For programs, provide test image data and ensure the user can replicate the expected results (within given confidence limits).
iv. Provide independent review of the device by an experienced person or persons (one physicist and perhaps one non-technical user). The degree of independence (i.e. lack of involvement in the development process) should be in proportion to the level of risk associated with device failure.
v. Monitor the device usage during a beta-test period, for example for one month or 20 measurements after the device has been handed over to the user.
vi. After upgrades (hardware or software), all these validation procedures will have to be repeated.
vii. Quality Assurance (QA) phantom results and normal control values may be used to support the validity of the device.
viii. Accuracy and precision data (see SR4 and SR5) also support validity.
b. SR2. The device must have an instruction manual and a device label [ER13.1, ER13.3, ER13.4, ER13.6a, ER13.6b, ER13.6p]
i. Write a (short) set of instructions for a non-technical user, and test the manual with at least 2 users. The manual must name the author (manufacturer) of the device. Anticipate potential problems and errors and include these in the manual. Include ‘test modes’, QA data and suggested test intervals. Include the device label information where appropriate.
ii. The manual should ideally be ‘attached’ to the device (i.e. accessible from the device, e.g. through a website link or ‘on-line help’).
iii. Device label: this is short and ideally fixed to the device. For a pulse sequence (GE or Siemens) this is generally not possible. For an analysis program, it could be brief text that is displayed when the program first runs.
iv. The label should include:
1. identification of the device
2. name of maker
3. ‘for research purposes only’
4. date of manufacture
5. version number
6. any special operating instructions, precautions or warnings
c. SR3. (If quantitative) the device must give output in proper units [ER10.3]
i. Give the output in proper SI units where possible (for example ms, microseconds, or percent units (pu)). Stored integer values in calculated maps should have fine enough resolution not to degrade the data, whilst not coming near the 16-bit signed integer limit of 32767; this typically means stored values of between 1000 and 10,000. Use the scaling factor facility in the display program dispim and in the UNC file header, so that the user works directly with real floating point values without seeing the integer values.
d. SR4. (If quantitative) the accuracy of the device must be specified [ER10.1, ER13.6p]
i. Show, as far as possible, that the quantity being produced is close to the truth. For a sequence, use a search coil to confirm aspects of a new pulse that may be crucial, particularly its amplitude. For an analysis program, show that the quantity being measured is correct in phantoms, if possible [some quantities, for example blood flow, may only meaningfully exist in the brain, since sufficiently accurate phantoms do not (yet) exist. Others, such as volume, can meaningfully be tested in phantoms (test objects)].
e. SR5. (If quantitative) the precision of the device must be specified [ER10.1]
i. Measure the reproducibility by repeated measurements, using repeated scans if necessary. Use paired measurements and Bland-Altman analysis to estimate the standard deviation and 95% confidence limits.
f. SR6. (If quantitative) the limits of accuracy of the device must be specified [ER10.1, ER13.6p]
i. ‘Limits of accuracy’: presumably this means the 95% confidence limit (CL) for the total uncertainty (whether arising from systematic or random sources). It can be calculated by combining the mean inaccuracy with the 95% CL on repeated measurements. Total uncertainty and the ‘uncertainty budget’ are discussed on p68 of Quantitative MRI of the Brain.
g. SR7. A sequence device must have safe RF power deposition (SAR) [ER1, ER9.2, ER11.1.1, ER12.1]
i. GE machines: this is an ongoing subject of investigation. A statement from GE (or a quote from the manual) on how SAR is calculated and controlled would help here.
ii. Siemens machines: there is no ‘research mode’. When a new sequence is written and passed through ‘Unit Test Mode’, all the inbuilt checks (including SAR and dB/dt) are applied. However, if a local coil is built, it will need local SAR testing. There is a ‘first level’ at which the inbuilt checks are disabled; however, this is never used.
h. SR8. A sequence device must operate with the lowest reasonable static field, SAR and dB/dt [ER9.2b, ER11.1.1]
i. Has anything been done that might increase the dB/dt? Are the maximum gradient and the slew rate unchanged? (Which gradient direction is most likely to have been altered?)
i. SR9. Ergonomic principles [ER10.2]
i. The process of using the device and reading the output should, as far as possible, be convenient and user-friendly.

4. Appendix 1a - Summary of Geoff Cusick’s talk on May 10th 2004 – need for compliance with MDD (this can be downloaded via the Division website; see the MDD link)
a. Geoff Cusick, from the Department of Medical Physics and Bioengineering, UCLH, gave a very informative talk to the Division on this topic on May 10th 2004. This document addresses the issues arising from work carried out by physicist members of the Division, and sets out to define a simple procedure for validating such products within the context of the MDD.
b. What is a medical device? “a medical device means any … apparatus … including software … intended for human beings for … diagnosis [or] … monitoring … of disease” [slide 6]. “software may be a medical device”; [this includes] “instrument control” [and] “image analysis” [slide 7].
c. The device has to be “placed on the market” for the MDD to apply formally (legally) [slide 8]. This could be a formal commercial sale, or giving the device to an external agency (e.g. from UCL to the NHS or to another university). A letter of collaboration between the parties at the outset of the work can remove the need for formal transfer at a later stage.
d. In an R&D setting, indemnity of the individuals has been an ongoing issue. Do the physicists need personal indemnity cover (insurance) in case of a mishap?
(This could be the MR instrument harming a subject, or incorrect image analysis leading to incorrect treatment.) GC’s advice is that, provided the researcher has followed ‘best practice’, the employer would back the researcher, and the courts would see compliance with the MDD as compliance with best practice. We therefore conclude that, to protect ourselves, we should comply with the MDD, even though in most cases it is not legally required [slide 9].

5. Appendix 1b - Summary of Geoff Cusick’s talk – what the MDD requires
a. There are two primary components:
i. demonstrating that the device works (i.e. establishing the ‘benefits to the patient’)
ii. managing the risk (i.e. showing that the risks are ‘acceptable’). If the consequences of failure are serious, more scrutiny is needed, possibly including an external or independent assessor.
b. There is a whole set of Essential Requirements (ER’s), well described in the UCLH checklist (downloadable; see references). Each one must be considered or declared ‘not applicable’ [slide 10]. I have picked out the ones that are obviously relevant to us. There are [also ??] some ER’s where a blanket statement will probably suffice and can be recycled for each device (e.g. for MR, the static field can be covered by stating that the device has been supplied by a reputable manufacturer). A response to these must include pointers to either generic or specific statements in the MR checklist (see below).
c. ER – does it work?
i. ‘The device must achieve the performances intended by the manufacturer…’ [ER 3]
ii. ‘Devices with a measuring function must … provide sufficient accuracy and stability. The limits of accuracy must be indicated.’ [ER10.1]
iii. ‘The … scale must be designed … with ergonomic principles’ [ER10.2]
iv. ‘The measurements must be expressed in legal units’ [ER10.3]
v. ‘Instructions for use must be included in the packaging for every device’ [ER 13.1]. This implies an on-line instruction manual for image analysis programs.
‘Where appropriate, the instructions must contain the degree of accuracy … for devices with a measuring function’ [ER 13.6 (p)]
d. ER – manage the risk:
i. ‘…any risks [must] constitute acceptable risks when weighed against the benefits to the patient…’ [ER 1]
ii. ‘Devices must be designed … to remove or minimise … risks connected with magnetic fields [and] temperature’ [ER 9.2]
iii. ‘devices shall be designed … [to minimise] exposure of patients … to [any form of] radiation’ [ER 11.1.1]. This includes RF heating and potentially dB/dt from the switched gradients.
iv. ‘Devices incorporating electronic programmable systems must … ensure … performance of these systems’ [ER12.1]. This applies to RF heating.

6. Appendix 1c - Summary of Geoff Cusick’s talk - how to do this at Queen Square?
GC’s advice is to follow good design practice, which is something like:
a. Establish documentation, and populate it. It can be short and simple [slide 13].
b. The technical document covers:
i. Need – what need led to this device
ii. Design – how we are dealing with the need
iii. Implementation – how the device was built
iv. Validation – does it work?
v. Safety – consider and minimise the risks
vi. Much of this information might already be in a published paper, in which case the paper can be referenced.
c. User documentation – instruction manual
d. Do this at the start, during development, and as part of support.
e. Developing MDD compliance at Queen Square:
i. GC’s view is that we can do this simply and deftly once we learn how.
ii. Developing the validation part (and our skills at designing validation) would reduce the risk of making fundamental errors (such as those in the qMT sequence, or the MTR map values that were 10 times the actual MTR; see appendices 3b and 3d). However, validation has to be designed to be maximally sensitive to error whilst taking minimal time.
If done well, this is probably a change of mindset that achieves both objectives, and also increases the scientific value of the development and of the ensuing journal papers. The ER’s I have picked out above are good practice in scientific instrument design, and we should embrace them.

7. Appendix 2a: Example of a specific response to the ER’s for a pulse sequence
a. Ongoing.

8. Appendix 2b: Example of a specific response to the ER’s for image analysis
a. Ongoing.

9. Appendix 3a: Case study – B1 mapping sequence
a. These case studies are intended to be learning experiences; the individuals involved should not in any way feel bad about them!
b. History: an inexperienced scientist implemented a B1 mapping technique, which worked correctly when they used it. It was handed over to a non-technical user with an instruction sheet. The sheet contained an error, and as a result the sequence was used incorrectly. This continued for several months without being detected.
c. Learning:
i. A more experienced, independent person should have had oversight of this before the device was handed over.
ii. An inbuilt test mode would have forced the users to find the error (provided it was used!). For this device, reduce TG (the transmitter output) by 10 units and remeasure B1 at the coil centre. TG is in units of 0.1 dB, so B1 should be scaled by a factor of 10^(-10/200) = 0.891 (i.e. an 11% reduction).
iii. Monitoring of the device by a technical person during its first weeks of use would probably have detected the error.
iv. The manual is part of the device, and needs to be tested! Silently watch how a naïve user uses the device.

10. Appendix 3b: Case study – 2D qMT sequence
a. History:
i. A sequence was written to apply MT saturation pulses at 3 different amplitudes.
ii. The resulting data appeared to fit a model, and were published in 3 places.
iii. An independent physicist, developing their own qMT sequence, found they could not reproduce the original data.
iv. Several experienced physicists became involved, and suggested measuring the MT pulses directly.
v. The amplitude of one pulse was found to be wrong, and the cause was identified.
vi. A retrospective correction of the old data could be made; the corrected data were of higher quality, as judged by the fit to the model, and also agreed with those from other groups.
b. Learning:
i. Do not assume a new sequence is doing what you think. In particular, recognise that pulse amplitudes may be wrong on a (GE) scanner.
ii. Invent independent ways of testing the sequence:
1. Progressively increase Bsat and observe the signal. Do this in a phantom, using Bsat values at least as high as those used in vivo. Do this at 20 kHz off-resonance, where the signal should be constant (this monitors the behaviour of the imaging pulse), and at 1 kHz off-resonance, where a progressive reduction should be seen.
2. Observe the MT and imaging pulses with a search coil during the progressive experiment described above; measure the MT pulse amplitude (with confidence limits) relative to the first imaging pulse. Measure its width, and estimate its area (and hence flip angle, FA) relative to the imaging pulse.
3. We still need a way of testing the offset frequency, although errors in this are less likely.
iii. Ask an independent person: do you believe this sequence is doing what I have programmed it to do?

11. Appendix 3c: Case study – qMT model fitting
a. History:
i. New qMT data were being fitted by a new model implementation, showing good agreement.
ii. An independent person tried to plot the same data and model using an independent implementation of the model, and could not reproduce the fit.
iii. Comparison with a 3rd model implementation showed that the new implementation was probably the one that was wrong.
iv. Detailed examination showed which part was in disagreement (the super-Lorentzian lineshape).
v. The source was a typographical error in a published paper (which had not been corrected by the authors).
b. Learning:
i. With complicated mathematical formulae, implement everything twice, as independently as possible (e.g. in C and in a spreadsheet, by two different people).
ii. Recognise that published formulae may contain errors; go back to the original work and compare several versions for agreement, or else re-derive the expression. Make basic checks on published expressions (e.g. is the area under the lineshape equal to unity?).

12. Appendix 3d: Case study – MTR factor of 10 error
a. History:
i. MTR values are in the range 10-40 pu; they are stored in computer files as integers (range 100-400) to obtain 0.1 pu precision.
ii. The clinical researcher used the integer values throughout their analysis, including graphing.
iii. The integer values were passed to a statistician for complex analysis.
iv. The statistician estimated values of difference and slope using the integer values.
v. An independent person who knows about MTR recognised the factor of 10 error.
b. Learning:
i. Output all map values as proper floating point values, with proper units, with no access for the user to the raw integer values.
ii. Independent review works.

13. Appendix 3e: Case study – gains not fixed in Gd enhancement scans
a. History:
i. In the pre- and post-Gd scans, the transmitter and receiver gains were not fixed. A non-research scanner was used. The auto-prescan procedure was repeated for the post-Gd scan, and some scanner settings changed.
ii. These changes can be partly compensated for retrospectively, but inaccurate attenuators and models limit how well this works.
iii. A new radiographer noticed the problem after about 18 months, and from then on data collection was correct.
iv. For the data analysis, methods that are independent of scanner gain were investigated.
b. Learning:
i. Analyse at least some of the data as soon as they are collected.
ii. Beware of data collection problems with staff who are unfamiliar with a new kind of scanning.

14. Appendix 3f: Case study – wrong volumes from oblique scans
a. Dispim volumes had been used by an enterprising radiographer to measure tumour volumes.
b. The results seemed ‘reasonable’.
c. Oblique slices are handled incorrectly (the inter-slice distance is wrong).
d. Dispim volumes are underestimated by a variable amount, depending on the angulation; the error could be up to 30%.
e. A detailed analysis of the volumes had been carried out, and a paper prepared.
f. With hindsight, the volumes should have been checked with simple phantoms.

15. Appendix 4: Detailed and very helpful guidance from Gareth Barker (edited from his email of June 28th 2004)
a. Units: Dispimage has scale factors set in the header for intensity values, and to convert pixels to distance units, but has no idea about putting an actual unit on anything. Adding 'mm' to the end of any measured/displayed value would be (relatively) easy, but wouldn't actually be true/enforceable unless all the processing programs were compliant. It all stems from the original conversion, really: the values in the headers are whatever the scanner uses, which in our case is mm for distances and arbitrary units for anything else. This may not be true for other data (other scanners), and can also easily be overwritten by anything along the way (e.g. by conversion to a format that doesn't support pixel sizes, or by using a program that doesn't preserve the headers). Most of our programs should be OK, but we'd have to check. (Getting dispunc to display MTR in pu is just a matter of adding a header element called 'value_scale' and setting it to 0.1, by the way, but anything that processes these images will need to be updated to 'pass the change along'.)
b. Validation: A lot of this is what we already do, but don't really document. It may be that publishing the technique and phantom data (if believable) would go some way towards this? Validating software formally is almost impossible, though, particularly given that we don't have control over all of it (i.e. we have to take the GE stuff on trust).
c. Trials: What we do (are required to do) for clinical trials depends on where the software comes from. If it's commercial, we're pretty much allowed to believe it. If it is 'small user base' (i.e. written by someone else, and used by several people), we test it as a 'black box', but only within its expected input range. If it's locally written, we have to explicitly test it with 'bad' values and check that it falls over gracefully. We could do similar things for general analysis programs (including passing in the wrong files, etc.).
d. For pulse sequences we could do things like setting up and running with a variety of input parameters (including some not very reasonable ones), and making sure that all the software SAR calculations (provided by GE, so not our responsibility) gave sensible numbers, scaled as we expected, and remained within acceptable ranges. Gradient strengths and slew rates are controlled in hardware, so we can push responsibility there back to GE.
e. We'd also need to show that all the parameters needed by the analysis are correctly represented in the image headers. (This means checking, as a one-off, the transfer and conversion programs for things like TE and TR, and also checking on a per-sequence basis that things like the 'op user' CVs make sense and are correctly interpreted by the processing packages.)
f. Risk: Just say we obey all the NRPB and MDA guidelines? (Which everyone needs to actually read!! They're far from clear!)

16. Appendix 5 – Feedback from IPEM talk on Nov 15th 2004
a. TickIT may provide a framework for software QA (talk by Paul Ganney from Hull, paul.ganney@hey.nhs.uk, based on a BSI document). It need not be onerous, he said. It includes a ‘decommissioning process’. It is used by the DTI as a standard, and is related to ISO 9001. Looks good. Paul Tofts is following this up.
b. Regarding liability: there is no case law on software medical devices, and no cases have ever been brought for negligence, so the chance of being sued is considered ‘low risk’.
c. Regarding our employer: we should get them to ‘accept vicarious liability’ (which Trusts have done).
d. IPEM Report 90 is relevant: Safe Design, Construction and Modification of Electromedical Equipment.
e. An anaesthetist speaker: in ‘research mode’ you have to be able to take risks. Use the ethics committee.
f. A computer manager speaker distinguished 2 kinds of software:
i. home-made, for one user, which can be written by an amateur
ii. professionally written, by an expert, for distribution (though much good software is written by people without formal computing qualifications).
g. If the software environment (e.g. compiler) version changes, the (last stage of) validation needs to be repeated.

17. References:
a. GC’s ppt talk: http://www.medphys.ucl.ac.uk/~geoff/Presentations/The%20Medical%20Devices%20Directive%20for%20R&D.ppt
b. UCLH checklist: http://www.medphys.ucl.ac.uk/~geoff/Presentations/MDD%20ER%20Checklist.doc
c. These are also linked from the Division website (MR MDD).
d. Penny Gowland (Nottingham) gave a very good summary of safety issues at the British Chapter meeting in September 2004: http://www.magres.nottingham.ac.uk/teaching/edinburg_safety_new.ppt (7 MB)
e. FDA document Criteria for Significant Risk Investigations of Magnetic Resonance Diagnostic Devices: http://www.fda.gov/cdrh/ode/guidance/793.pdf. It is very short and advocates the ‘least burdensome approach’!
f. ‘Can software kill?’ cases from eWEEK: http://www.eweek.com/article2/0,1759,1544231,00.asp
g. Circulation: Neuroradiology and Neurophysics Division, Geoff Cusick, Richard Lanyon, Gareth Barker

Paul Tofts

Currently unresolved issues:
1. How can brief instructions be built into the sequence and program? (SR2)
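For an analysis program, SR2 iii suggests that the device label could be brief text displayed when the program first runs, which is one partial answer to the unresolved issue above. The Python sketch below shows one way this might look, assuming the program is written in Python; the `DeviceLabel` class and all the label values are hypothetical, and the fields simply follow the SR2 iv checklist. It does not help with pulse sequences, where SR2 notes that a label is generally not possible.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class DeviceLabel:
    """Hypothetical device label; the fields follow the SR2 iv checklist."""

    identification: str              # 1. identification of the device
    maker: str                       # 2. name of maker
    manufactured: date               # 4. date of manufacture
    version: str                     # 5. version number
    warnings: tuple[str, ...] = ()   # 6. special instructions, precautions, warnings

    def render(self) -> str:
        lines = [
            f"Device:       {self.identification}",
            f"Maker:        {self.maker}",
            "Status:       FOR RESEARCH PURPOSES ONLY",  # 3. always shown
            f"Manufactured: {self.manufactured.isoformat()}",
            f"Version:      {self.version}",
        ]
        lines.extend(f"Warning:      {w}" for w in self.warnings)
        return "\n".join(lines)


# Hypothetical example values, for illustration only.
LABEL = DeviceLabel(
    identification="example_map_tool",
    maker="Example physicist, Example group",
    manufactured=date(2005, 5, 16),
    version="0.1",
    warnings=("Run the test mode on the QA phantom data before first use.",),
)

if __name__ == "__main__":
    # Show the label once, before any processing starts.
    print(LABEL.render())
```

Keeping the label in one immutable object means the same text can also be copied into the instruction manual, as SR2 i recommends.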
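SR3 asks for stored integers of roughly 1000 to 10,000, well below the 16-bit signed limit of 32767, together with a scale factor so that users only ever see real values (appendix 3d shows what goes wrong otherwise). A minimal Python sketch of that rule follows. It assumes a power-of-ten scale and the convention described in appendix 4, where the physical value is the stored integer multiplied by the scale ('value_scale' = 0.1 for MTR in pu); the function names are hypothetical and are not part of dispim or the UNC format.

```python
import math

INT16_MAX = 32767  # 16-bit signed integer limit quoted in SR3


def choose_value_scale(max_abs_value: float, target_max: int = 10_000) -> float:
    """Return a power-of-ten scale so that max_abs_value / scale <= target_max.

    Because the scale is a power of ten, the largest stored value then lies
    between target_max / 10 and target_max (1000 to 10,000 by default).
    """
    if max_abs_value <= 0:
        raise ValueError("max_abs_value must be positive")
    exponent = math.ceil(math.log10(max_abs_value / target_max))
    return 10.0 ** exponent


def to_stored(values, scale):
    """Convert physical values to stored integers, refusing to overflow int16."""
    stored = [round(v / scale) for v in values]
    if any(abs(s) > INT16_MAX for s in stored):
        raise OverflowError("scaled value exceeds the 16-bit signed range")
    return stored


def to_physical(stored, scale):
    """What the user should see: stored integer times scale, in proper units."""
    return [s * scale for s in stored]


mtr_pu = [12.34, 25.0, 38.91]            # example MTR values in pu
scale = choose_value_scale(max(abs(v) for v in mtr_pu))
stored = to_stored(mtr_pu, scale)        # integers written to the file
restored = to_physical(stored, scale)    # floating point values shown to the user
```

Here the scale comes out as 0.01, so MTR is stored at 0.01 pu resolution with a largest stored value of a few thousand. Only `to_physical` output should ever reach graphs or statistics.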
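SR5 recommends paired repeat measurements analysed by the Bland-Altman method. The following is a minimal Python sketch of that calculation using only the standard library; the function name and the example numbers are hypothetical. It returns the mean difference, the standard deviation of the differences and the 95% limits (mean ± 1.96 SD). It also returns the SD of a single measurement, taken as the SD of the differences divided by √2, which assumes both repeats are equally precise.

```python
import math
import statistics
from typing import NamedTuple, Sequence


class BlandAltman(NamedTuple):
    mean_diff: float   # bias between first and second measurement
    sd_diff: float     # SD of the paired differences
    lower_95: float    # mean_diff - 1.96 * sd_diff
    upper_95: float    # mean_diff + 1.96 * sd_diff
    sd_single: float   # SD of one measurement: sd_diff / sqrt(2)


def bland_altman(first: Sequence[float], second: Sequence[float]) -> BlandAltman:
    """Bland-Altman statistics for paired repeat measurements (e.g. scan-rescan)."""
    if len(first) != len(second):
        raise ValueError("measurements must be paired")
    if len(first) < 2:
        raise ValueError("need at least two pairs to estimate an SD")
    diffs = [a - b for a, b in zip(first, second)]
    mean_diff = statistics.fmean(diffs)
    sd_diff = statistics.stdev(diffs)    # sample SD (n - 1 denominator)
    half_width = 1.96 * sd_diff
    return BlandAltman(
        mean_diff,
        sd_diff,
        mean_diff - half_width,
        mean_diff + half_width,
        sd_diff / math.sqrt(2),
    )


# Hypothetical scan-rescan values for four subjects (arbitrary units).
scan1 = [10.0, 12.0, 11.0, 13.0]
scan2 = [10.5, 11.5, 11.0, 12.0]
result = bland_altman(scan1, scan2)
```

In practice the differences should also be plotted against the pair means, to check that the scatter does not depend on the size of the measurement.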
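SR6 describes the ‘limits of accuracy’ as a 95% limit on the total uncertainty, obtained by combining the mean inaccuracy with the 95% limit from repeated measurements, but does not say how the two should be combined. The Python sketch below assumes one common choice: treating them as independent and adding them in quadrature (root-sum-of-squares), as is usual in an uncertainty budget. Adding them linearly would be a more conservative alternative. The phantom values and the function name are hypothetical.

```python
import math
import statistics


def limits_of_accuracy(measured, true_value):
    """Combine systematic and random errors for repeated phantom measurements.

    Assumes the bias and the random scatter are independent, so their
    95% contributions are added in quadrature (root-sum-of-squares).
    """
    bias = statistics.fmean(measured) - true_value        # mean inaccuracy
    random_95 = 1.96 * statistics.stdev(measured)         # 95% limit on repeats
    total_95 = math.hypot(bias, random_95)                # sqrt(bias**2 + random_95**2)
    return bias, random_95, total_95


# Hypothetical repeated T1 measurements (ms) of a phantom whose true T1 is 1000 ms.
bias, random_95, total_95 = limits_of_accuracy([1005.0, 1010.0, 995.0, 1010.0], 1000.0)
```

With these numbers the bias is 5 ms and the random 95% limit is about 13.9 ms, giving a combined limit of about 14.7 ms; the linear sum would instead give about 18.9 ms.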
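The test mode in appendix 3a relies on a known relationship between TG and B1. Assuming, as the formula there implies, that TG is in units of 0.1 dB, a change of ΔTG units scales B1 by 10^(ΔTG/200). A minimal Python sketch of the check follows; the function names and the 2% tolerance are hypothetical, and a real tolerance should come from the precision measured under SR5.

```python
def expected_b1_ratio(delta_tg: float) -> float:
    """Expected B1 after/before ratio for a TG change given in 0.1 dB units.

    delta_tg units = delta_tg / 10 dB; amplitude factor = 10 ** (dB / 20).
    """
    return 10 ** (delta_tg / 200)


def tg_test_mode_passes(b1_before: float, b1_after: float,
                        delta_tg: float = -10, tolerance: float = 0.02) -> bool:
    """True if the measured B1 ratio at the coil centre matches the expected one."""
    measured_ratio = b1_after / b1_before
    return abs(measured_ratio - expected_b1_ratio(delta_tg)) <= tolerance
```

For example, `expected_b1_ratio(-10)` is about 0.891, so a B1 measurement that falls from 1.00 to 0.89 passes, whereas a fall to 0.80 would flag a problem with the sequence or with the way it is being used.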
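Appendix 4 suggests testing locally written programs with ‘bad’ values to check that they fall over gracefully, and checking that parameters such as TE and TR are correctly read from the image headers. The Python sketch below combines both ideas. The dictionary-style header, the `HeaderError` class and the plausibility rules (finite, positive, TE shorter than TR) are hypothetical assumptions for a simple single-echo sequence, not part of any existing conversion program.

```python
import math


class HeaderError(ValueError):
    """Hypothetical error raised, with a clear message, for unusable header values."""


def read_timing(header: dict) -> tuple[float, float]:
    """Return (TE, TR) in ms from a header dict, or raise HeaderError."""
    try:
        te = float(header["TE"])
        tr = float(header["TR"])
    except KeyError as missing:
        raise HeaderError(f"header has no {missing} entry") from None
    except (TypeError, ValueError):
        raise HeaderError("TE and TR must be numbers") from None
    if not (math.isfinite(te) and math.isfinite(tr)):
        raise HeaderError("TE and TR must be finite")
    if te <= 0 or tr <= 0:
        raise HeaderError("TE and TR must be positive")
    if te >= tr:
        raise HeaderError("TE must be shorter than TR")
    return te, tr


# 'Bad' inputs of the kind appendix 4 recommends trying; each should be
# rejected with a HeaderError rather than crashing later in the analysis.
BAD_HEADERS = [
    {},                                  # missing fields
    {"TE": "abc", "TR": 500},            # not a number
    {"TE": None, "TR": 500},             # wrong type
    {"TE": float("nan"), "TR": 500},     # not finite
    {"TE": -5, "TR": 500},               # negative
    {"TE": 600, "TR": 500},              # TE longer than TR
]


def bad_inputs_fail_gracefully() -> bool:
    """True if every bad header is rejected cleanly with a HeaderError."""
    for bad in BAD_HEADERS:
        try:
            read_timing(bad)
        except HeaderError:
            continue      # rejected cleanly, as intended
        return False      # a bad header was accepted
    return True
```

The list of bad headers can grow each time a new error is found, so that known errors are prevented from happening again, as section 2c recommends.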