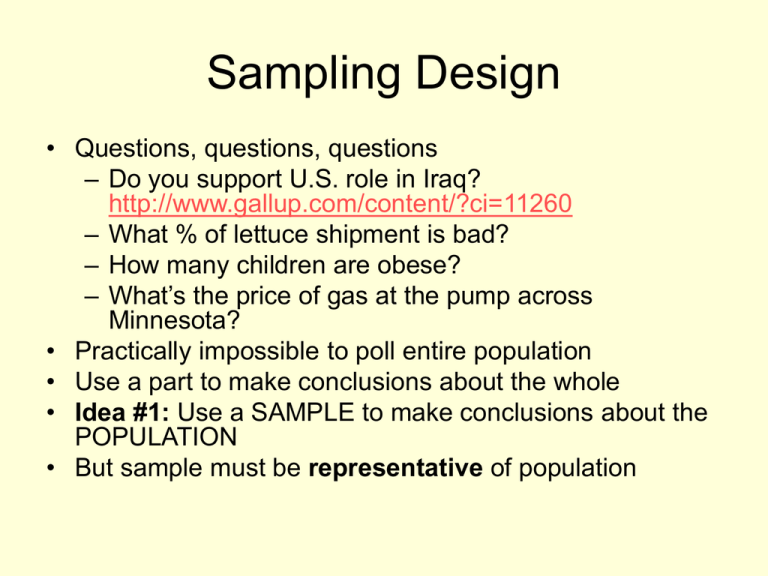

Sampling Design

• Questions, questions, questions

– Do you support the U.S. role in Iraq?

http://www.gallup.com/content/?ci=11260

– What percentage of a lettuce shipment is bad?

– How many children are obese?

– What’s the price of gas at the pump across Minnesota?

• It is practically impossible to poll an entire population

• Use a part to draw conclusions about the whole

• Idea #1: Use a SAMPLE to draw conclusions about the POPULATION

• But the sample must be representative of the population

Polling began in Pennsylvania

• The Harrisburg Pennsylvanian in 1824 predicted Andrew Jackson would be the victor

– He did win the popular vote

– But, like Al Gore, he didn’t win a majority of the electoral votes, and the House of Representatives chose John Quincy Adams

• Straw polls were convenience samples

• They solicited opinions of the “man on the street”

• No science of sampling for 100 years

• Conventional wisdom: the bigger, the better

1936 election and the Literary Digest survey

• The magazine had predicted every election since 1916

• Sent out 10 million surveys, and 2.4 million responded

• They said: Landon would win 57% of the vote

• What happened: a 62% Roosevelt landslide

What went wrong?

• Sample not representative

• Lists came from subscriptions, phone directories, and club memberships

• Phones were a luxury in 1936

• Selection bias toward the rich

• Voluntary response: Republicans were angry and more likely to respond

• Context: Great Depression

– 9 million unemployed

– Real income down 33%

– Massive discontent, strike waves

• The economy was the main issue in the election
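The 1936 lesson is that a huge sample drawn from a biased list stays biased. A minimal Python simulation, assuming a hypothetical electorate: the frame share (40%) and the support levels inside and outside the frame are made-up numbers chosen only so that overall Roosevelt support comes out near the slide's 62%.

```python
import random

# Hypothetical electorate (illustrative numbers only, not historical estimates):
# FRAME_SHARE of voters appear on the magazine's lists (phones, subscriptions,
# clubs), and Roosevelt support differs inside and outside that frame.
FRAME_SHARE = 0.40
SUPPORT_IN_FRAME = 0.45
SUPPORT_OUTSIDE = 0.7333
TRUE_SUPPORT = FRAME_SHARE * SUPPORT_IN_FRAME + (1 - FRAME_SHARE) * SUPPORT_OUTSIDE

def poll_frame_only(n, rng):
    """Poll n voters drawn only from the list-based frame (selection bias)."""
    return sum(rng.random() < SUPPORT_IN_FRAME for _ in range(n)) / n

def poll_srs(n, rng):
    """Poll n voters drawn at random from the whole electorate."""
    hits = 0
    for _ in range(n):
        in_frame = rng.random() < FRAME_SHARE
        p = SUPPORT_IN_FRAME if in_frame else SUPPORT_OUTSIDE
        hits += rng.random() < p
    return hits / n

rng = random.Random(1936)
big_biased = poll_frame_only(200_000, rng)
small_random = poll_srs(1_000, rng)
print(f"true {TRUE_SUPPORT:.3f}  biased n=200,000: {big_biased:.3f}  SRS n=1,000: {small_random:.3f}")
```

The biased poll lands near 45% no matter how many responses it collects; the much smaller random sample lands near the true value.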

How to do it right

• Idea #2: Randomize

• Randomization makes the sample representative of the population on average

• Randomization protects against bias

• Simple Random Sample (SRS): every possible group of n people has an equal chance to be selected
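In Python, the standard library's `random.sample` draws without replacement, which is what an SRS needs. The sketch below uses a hypothetical four-person population and counts how often each possible pair is drawn, to check that every combination is about equally likely.

```python
import itertools
import random
from collections import Counter

def simple_random_sample(population, n, rng=random):
    """Draw an SRS of size n: every subset of n distinct members is equally likely.

    random.sample draws without replacement, so each n-subset has
    the same probability 1 / C(N, n).
    """
    return rng.sample(population, n)

# Hypothetical tiny population so every possible sample can be enumerated.
people = ["Ann", "Bo", "Cy", "Di"]
rng = random.Random(42)
counts = Counter(frozenset(simple_random_sample(people, 2, rng)) for _ in range(60_000))

# There are C(4, 2) = 6 possible samples; each should appear about 10,000 times.
for combo in itertools.combinations(people, 2):
    print(sorted(combo), counts[frozenset(combo)])
```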

Some examples of non-random, biased samples

• 100 people at the Mall of America

• 100 people in front of the Metrodome after a Twins game

• 100 friends, family, and relatives

• 100 people who volunteered to answer a survey question on your web site

• 100 people who answered their phone during supper time

• The first 100 people you see after you wake up in the morning

Is blind chance better than careful planning and selection?

• Another classic fiasco

• 1948 Election: Truman versus Dewey

• Every major poll predicted Dewey would win by 5 percentage points

Truman showing the Chicago Daily Tribune headline (“Dewey Defeats Truman”) the morning after the 1948 election.

What went wrong?

• Pollsters tried to design a representative sample

• Quota sampling

• Each interviewer was assigned a fixed quota of subjects in numerous categories (race, sex, age)

• Within each category, interviewers were free to choose whom to interview

• This left room for human choice and inevitable bias

• Republicans were wealthier, better educated, and easier to reach

– Had telephones, permanent addresses, “nicer” neighborhoods

• Interviewers chose too many Republicans
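To see how interviewer choice biases a quota sample, here is a small simulation. Everything in it is hypothetical: the four quota categories, a 45% Republican share in every category, and an interviewer who always approaches Republicans but approaches Democrats only half the time.

```python
import random

rng = random.Random(1948)

# Hypothetical population: each voter has a quota category and a party.
# Assumption: 45% Republican in every category (illustrative only).
CATEGORIES = ["men under 40", "men 40+", "women under 40", "women 40+"]
population = [
    {"category": rng.choice(CATEGORIES), "gop": rng.random() < 0.45}
    for _ in range(20_000)
]

def quota_sample(pop, quota, rng):
    """Fill a fixed quota per category, letting the 'interviewer' choose whom to ask.

    Assumption: the interviewer always approaches an easy-to-reach
    Republican but approaches a Democrat only half the time.
    """
    filled = {c: [] for c in CATEGORIES}
    for person in rng.sample(pop, len(pop)):      # walk the streets in random order
        bucket = filled[person["category"]]
        if len(bucket) >= quota:
            continue                              # this category's quota is already met
        if person["gop"] or rng.random() < 0.5:   # human choice enters here
            bucket.append(person)
    return [p for bucket in filled.values() for p in bucket]

def gop_share(sample):
    return sum(p["gop"] for p in sample) / len(sample)

quota = quota_sample(population, 250, rng)
srs = rng.sample(population, 1_000)
print(f"population {gop_share(population):.3f}  quota {gop_share(quota):.3f}  SRS {gop_share(srs):.3f}")
```

Every category quota is met exactly, yet the quota sample is far too Republican; an SRS of the same size is not.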

Quota Sampling biased

• Republican bias in Gallup Poll

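| Year | Predicted GOP vote (%) | Actual GOP vote (%) | Error in favor of GOP (points) |
|------|------------------------|---------------------|--------------------------------|
| 1936 | 44 | 38 | 6 |
| 1940 | 48 | 45 | 3 |
| 1944 | 48 | 46 | 2 |
| 1948 | 50 | 45 | 5 |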

• Quota sampling was eventually abandoned for random sampling

• Repeated evidence points to the superiority of random sampling
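The errors in the Gallup table are not random noise: they all point the same way. A quick Python check of the error column, using the numbers from that table:

```python
# Gallup predictions vs. actual GOP share of the vote (percent), from the table.
gallup = {
    1936: (44, 38),
    1940: (48, 45),
    1944: (48, 46),
    1948: (50, 45),
}

# Positive error = prediction too favorable to the GOP.
errors = {year: pred - actual for year, (pred, actual) in gallup.items()}
mean_error = sum(errors.values()) / len(errors)

print(errors)       # {1936: 6, 1940: 3, 1944: 2, 1948: 5}
print(mean_error)   # 4.0
```

Random sampling error would sometimes favor one side and sometimes the other; four errors in the same direction point to a systematic bias.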

How large a sample?

• Not 10 million, not even 10,000!

• Remarkably, precision doesn’t depend on the size of the population, as long as the population is at least 100 times larger than the sample

• Idea #3: The precision of a random sample depends on the sample size, not the population size

• Like tasting a flavor at the ice cream shop: one spoonful tells you the flavor, however big the tub

• An SRS of 100 will be as accurate at Carleton College as in New York City!

• Most polls today rely on 1,000-2,000 people
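One way to check this claim is the standard error of a sample proportion with the finite population correction, a standard formula that is not on the slides. This sketch assumes an SRS and a true proportion of 0.5; the population sizes are hypothetical. Once the population is at least 100 times the sample, the correction changes the standard error by less than 1%.

```python
import math

def standard_error(p, n, N=None):
    """Standard error of a sample proportion from an SRS of size n.

    If the population size N is given, apply the finite population
    correction sqrt((N - n) / (N - 1)); N=None means an infinite population.
    """
    se = math.sqrt(p * (1 - p) / n)
    if N is not None:
        se *= math.sqrt((N - n) / (N - 1))
    return se

n = 1_000
for N in (100_000, 1_000_000, 8_000_000, None):   # hypothetical population sizes
    print(N, round(standard_error(0.5, n, N), 5))
```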

Gallup Poll record in presidential elections since 1948

| Year | Sample size | Winning candidate | Gallup prediction | Election result | Error |
|------|-------------|-------------------|-------------------|-----------------|-------|
| 1952 | 5,385 | Eisenhower | 51.0% | 55.4% | 4.4% |
| 1956 | 8,144 | Eisenhower | 59.5% | 57.8% | 1.7% |
| 1960 | 8,015 | Kennedy | 51.0% | 50.1% | 0.9% |
| 1964 | 6,625 | Johnson | 64.0% | 61.3% | 2.7% |
| 1968 | 4,414 | Nixon | 43.0% | 43.5% | 0.5% |
| 1972 | 3,689 | Nixon | 62.0% | 61.8% | 0.2% |
| 1976 | 3,439 | Carter | 49.5% | 51.1% | 1.6% |
| 1980 | 3,500 | Reagan | 51.6% | 55.3% | 3.7% |
| 1984 | 3,456 | Reagan | 59.0% | 59.2% | 0.2% |
| 1988 | 4,089 | Bush | 56.0% | 53.9% | 2.1% |
| 1992 | 2,019 | Clinton | 49.0% | 43.2% | 5.8% |
| 1996 | | Clinton | 52.0% | 50.1% | 1.9% |
| 2000 | | Bush | 48.0% | 47.9% | 0.1% |
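To check the Error column and summarize the record, a short Python sketch using the table's numbers (the mean absolute error is a summary added here, not a figure from the slides):

```python
# Gallup final prediction vs. election result for the winning candidate (percent),
# transcribed from the Gallup record table.
record = {
    1952: (51.0, 55.4), 1956: (59.5, 57.8), 1960: (51.0, 50.1),
    1964: (64.0, 61.3), 1968: (43.0, 43.5), 1972: (62.0, 61.8),
    1976: (49.5, 51.1), 1980: (51.6, 55.3), 1984: (59.0, 59.2),
    1988: (56.0, 53.9), 1992: (49.0, 43.2), 1996: (52.0, 50.1),
    2000: (48.0, 47.9),
}

# Absolute error, rounded to one decimal to match the table's Error column.
errors = {year: round(abs(pred - result), 1) for year, (pred, result) in record.items()}
mean_abs_error = sum(errors.values()) / len(errors)

print(errors)
print(f"mean absolute error: {mean_abs_error:.2f} points")   # prints 1.98 points
```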

A peek ahead . . .

• A good rule of thumb is that the margin of error in a sample is $1/\sqrt{n}$, where $n$ is the sample size.

• For n = 1,600, that’s 1/40 = 2.5%.

• Most political polls report margins of error between 2% and 3%.

• The rule-of-thumb margin of error doesn’t depend on population size, only on sample size
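The rule of thumb is easy to compute, and easy to invert to find the sample size needed for a target margin. A minimal sketch; the comparison with $1.96\sqrt{p(1-p)/n}$ at $p = 0.5$, the usual 95% margin of error, is added here and shows that $1/\sqrt{n}$ is a slightly conservative version of it.

```python
import math

def margin_of_error(n):
    """Rule-of-thumb margin of error for a sample proportion: 1 / sqrt(n)."""
    return 1 / math.sqrt(n)

def sample_size_for(margin):
    """Smallest n whose rule-of-thumb margin of error is at most `margin`."""
    return math.ceil(1 / margin**2)

print(margin_of_error(1_600))        # 0.025, i.e. 2.5%
print(sample_size_for(0.03))         # 1112
# Usual 95% margin at p = 0.5: 1.96 * sqrt(0.25 / n) = 0.98 / sqrt(n),
# just under the rule of thumb.
print(1.96 * math.sqrt(0.25 / 1_600))
```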

Other sampling schemes

Stratified sampling

• Goal: Random sample of 240 Carleton students

• To ensure representation across disciplines, divide the population into strata

– Arts and Literature 20%

– Social Sciences 30%

– Humanities 15%

– Math/Natural Sciences 35%

• Choose

– 240 × 0.20 = 48 Arts and Literature

– 240 × 0.15 = 36 Humanities

– 240 × 0.30 = 72 Social Sciences

– 240 × 0.35 = 84 Math/Natural Sciences

• Within each stratum, choose a simple random sample
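Proportional allocation and the within-stratum SRS can be sketched in Python as follows. The roster of 2,000 student IDs is hypothetical; only the strata and their shares come from the slide.

```python
import random

# Strata shares (percent) from the slide; integers keep the allocation exact.
SHARES = {
    "Arts and Literature": 20,
    "Social Sciences": 30,
    "Humanities": 15,
    "Math/Natural Sciences": 35,
}

def proportional_allocation(total, shares):
    """Sample size per stratum, proportional to the stratum's share of the population."""
    return {stratum: total * pct // 100 for stratum, pct in shares.items()}

def stratified_sample(roster, total, shares, rng):
    """Draw an SRS within each stratum; `roster` maps stratum -> list of student IDs."""
    alloc = proportional_allocation(total, shares)
    return {stratum: rng.sample(roster[stratum], k) for stratum, k in alloc.items()}

# Hypothetical roster of 2,000 students with IDs, split by the same shares.
roster, next_id = {}, 0
for stratum, pct in SHARES.items():
    size = 2_000 * pct // 100
    roster[stratum] = list(range(next_id, next_id + size))
    next_id += size

sample = stratified_sample(roster, 240, SHARES, random.Random(7))
print({stratum: len(ids) for stratum, ids in sample.items()})
# {'Arts and Literature': 48, 'Social Sciences': 72, 'Humanities': 36, 'Math/Natural Sciences': 84}
```

Integer division is exact here because every share of 240 is a whole number; with other totals the stratum sizes may not add up exactly, and a real design would need a rounding rule.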

Stratified sampling

• Advantages: Sample will be representative across the strata; can improve the precision of estimates

• Disadvantages: Logistically difficult; must know the stratum of every member of the population in advance; may not be possible

• Note: A stratified sample is not a simple random sample

• Not every possible group of 240 students is equally likely to be selected (a group of 240 Math/Natural Sciences students, for example, can never be chosen)

Cluster sampling – an example

• Warehouse contains 10,000 window frames stored on pallets

• Goal: Estimate how many frames have wood rot

• Determining whether a frame has wood rot is costly

• Sample about 500 window frames

• Pallets numbered 1 to 400

• Each pallet contains 20 to 30 window frames

• Sample pallets, not windows

• Pick an SRS of 20 pallets from the population of 400

• Cluster sample consists of all frames on each selected pallet
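The three steps of the pallet design, sketched in Python. The warehouse contents and the 3% rot rate are hypothetical; scaling the sample proportion by the total number of frames is one simple estimator among several.

```python
import random

rng = random.Random(2024)

# Hypothetical warehouse: pallets numbered 1..400, each holding 20-30 frames.
# True/False marks whether a frame has wood rot (the 3% rate is made up).
warehouse = {
    pallet: [rng.random() < 0.03 for _ in range(rng.randint(20, 30))]
    for pallet in range(1, 401)
}
total_frames = sum(len(frames) for frames in warehouse.values())

# Step 1: SRS of 20 pallet numbers (the clusters), not of individual frames.
chosen = rng.sample(range(1, 401), 20)

# Step 2: inspect every frame on each chosen pallet.
inspected = [frame for pallet in chosen for frame in warehouse[pallet]]
rot_rate = sum(inspected) / len(inspected)

# Step 3: scale the sample proportion up to the whole warehouse.
estimated_rotten = round(rot_rate * total_frames)
print(len(inspected), "frames inspected; estimated rotten frames:", estimated_rotten)
```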

Cluster sampling

• Door-to-door surveys

– City blocks are the clusters

• Airlines get customer opinions

– Individual flights are the clusters

• Advantage: Can be much easier to implement, depending on context

• Disadvantage: Greater sampling variability than an SRS of the same size; less statistical precision
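The larger sampling variability shows up clearly in a simulation when rot clusters by pallet. In this hypothetical warehouse, every fifth pallet sat in a damp corner; the sketch repeats a 20-pallet cluster sample and a 500-frame SRS many times and compares the spread of the estimates.

```python
import random
import statistics

def make_warehouse(rng):
    """Hypothetical warehouse of 400 pallets with 20-30 frames each.

    Assumption: rot clusters by pallet. Every fifth pallet sat in a damp
    corner (50% rot); the rest have 2% rot.
    """
    pallets = []
    for i in range(400):
        rate = 0.50 if i % 5 == 0 else 0.02
        pallets.append([rng.random() < rate for _ in range(rng.randint(20, 30))])
    return pallets

def cluster_estimate(pallets, k, rng):
    """Proportion rotten among all frames on k randomly chosen pallets."""
    frames = [f for pallet in rng.sample(pallets, k) for f in pallet]
    return sum(frames) / len(frames)

def srs_estimate(all_frames, n, rng):
    """Proportion rotten in an SRS of n individual frames."""
    return sum(rng.sample(all_frames, n)) / n

rng = random.Random(0)
pallets = make_warehouse(rng)
all_frames = [f for pallet in pallets for f in pallet]

# Repeat each design many times and compare the spread of the estimates.
cluster_runs = [cluster_estimate(pallets, 20, rng) for _ in range(2_000)]
srs_runs = [srs_estimate(all_frames, 500, rng) for _ in range(2_000)]
print("cluster SD:", round(statistics.stdev(cluster_runs), 4))
print("SRS SD:    ", round(statistics.stdev(srs_runs), 4))
```

When frames on the same pallet tend to share the same condition, 20 pallets carry less information than 500 independently chosen frames. If rot were scattered evenly across pallets, the two spreads would be much closer.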

Who likes Statistics?

Most common forms of bias

• Response bias

– Anything that biases or influences responses

• Non-response bias

– When a large fraction of those sampled don’t respond

• Voluntary response bias

– Respondents choose themselves, as in call-in or online polls

– Most common source of bias in polls

Sampling badly: convenience sampling

• Sample individuals who are at hand

– Survey students on the Quad, in Sayles, or in Stats class

• Internet polls are prime suspects

– American Family Association online poll on gay marriage

You critique it

► Before the 2000 election: what to do with the large government surplus?

► (1) “Should the money be used for a tax cut, or should it be used to fund new government programs?”

► (2) “Should the money be used for a tax cut, or should it be spent on programs for education, the environment, health care, crime-fighting, and military defense?”

► (1): 60% for tax cut; (2): 22% for tax cut

Another type of response bias

“Some people say that the 1975 Public Affairs Act should be repealed. Do you agree or disagree that it should be repealed?”

Washington Post, Feb. 1995

Results: For repeal: 24%, Against repeal: 19%, No opinion: 57%

There is no such thing as the Public Affairs Act!

Non-response

• Non-respondents can be very different from respondents

• Student surveys at the end of term had about a 20% response rate

• The General Social Survey (www.norc.org) has a 70-80% response rate, with a 90-minute survey!

• Huge variability in media and government response rates

– Typically, media polls have response rates of about 25%; government surveys about 50%

• It takes a large amount of money, time, and training to ensure a good response rate
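Why non-respondents matter: the population value is a weighted average of respondents and non-respondents, so the respondents' answer is off by (1 - response rate) times the gap between the two groups. A small sketch; the satisfaction figures are hypothetical.

```python
def respondent_bias(response_rate, mean_respondents, mean_nonrespondents):
    """Gap between what the respondents say and the true population value.

    The population value is a weighted average of respondents and
    non-respondents, so the bias equals (1 - response_rate) * (difference).
    """
    population = (response_rate * mean_respondents
                  + (1 - response_rate) * mean_nonrespondents)
    return mean_respondents - population

# Hypothetical end-of-term survey: 20% respond; 60% of respondents are
# satisfied, but only 40% of the non-respondents would have said so.
print(respondent_bias(0.20, 0.60, 0.40))   # about 0.16, i.e. 16 points too high

# Same gap between groups at a 75% response rate (within the GSS range above).
print(respondent_bias(0.75, 0.60, 0.40))   # about 0.05
```

A higher response rate does not remove the gap between the two groups, but it shrinks how much that gap can distort the result.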

Do you believe the poll?

What questions should you ask?

Who carried out survey?

What is the population?

How was sample selected?

How large was the sample?

What was the response rate?

How were subjects contacted?

When was the survey conducted?

What are the exact questions asked?