Sampling Issues


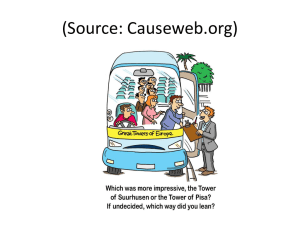

ECON 309 Lecture 4: Sampling Issues

I. Random Sampling

There are many different methods for getting a sample, but all have their problems.

Simple random sampling: a sampling process in which each member of the population has an exactly equal chance of getting selected. (Imagine putting a slip representing each one in a hat and then pulling out the observations.)

Problems: Extremely difficult to do. How do you guarantee that every member of the population has an equal chance of being selected? There are many impediments, which we will discuss later.

Systematic sampling: a sampling process that selects every kth member of the population (such as from a phone book) to be in one’s sample.

Problems: Still requires having a comprehensive list, which is very difficult to get. If you have a list, it might not represent the whole population you’re interested in. Sometimes the observation won’t be available (e.g., when you call people, sometimes they hang up or aren’t home); a bias can arise if the unavailable observations differ systematically from the available observations.

Cluster sampling: a sampling process that divides the population into groups, and then uses the observations from just one group. Example: using a single class at CSUN as a sample of CSUN students, or using one state as a sample of the whole United States.

Problems: The cluster(s) used must be representative of the whole population. If the class is in the business school, it might not represent the views of CSUN students in the fine arts.

Stratified sampling: a sampling process that divides the population into mutually exclusive groups, then picks observations from each group in proportion to its representation in the overall population. The groups could be sexes, races, etc. The idea is to make sure all relevant parts of the population are represented in the final sample.

Problem: How do you know the correct proportions to use?
For instance, how do you know for sure that 12% of the population is black?

II. Selection Bias

Samples won’t accurately represent the intended population if the members of the sample have characteristics that are likely to deviate from those of the larger population. This can happen for a variety of reasons (in fact, too many different reasons to list them all). Usually, it happens because the means used for collecting the data tend to exclude some subsets of the population.

Example: The famous poll that predicted Alf Landon would beat FDR in the 1936 election. The poll drew its respondents largely from telephone directories and automobile registration lists, and supporters of FDR were disproportionately people without telephones.

Example: If you want to know the buying behavior of customers, and you sample your customers at a particular time of day, the resulting conclusions are only reliably true of customers who shop at that time of day. (In other words, you’re sampling from a smaller population than the population you’re curious about.)

Example: Any survey that relies on voluntary responses. The people who respond will often differ substantially from those who don’t. Huff gives the example of the reported incomes of Yale graduates from a particular year. Who do you think is most likely to respond to the poll: someone who’s done well in life or someone who’s done poorly? This is a major problem for almost any survey methodology that involves people, because people can always refuse to participate, and those who do refuse tend to be different from those who don’t. (The highly unscientific Internet polls are extreme examples.)

Simple examples of biased samples are easiest to find with surveys. But even if you’re not using a survey methodology, you can still run into this problem. For instance, suppose you’re basing your study of U.S. income distribution on tax returns. This will bias your sample toward people who file tax returns, meaning people who earn an income high enough to justify doing so. Your sample will also leave out the tax evaders.
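The tax-return example can be made concrete with a small simulation. The sketch below is only illustrative: the lognormal income distribution, the filing threshold of 12,000, and the function name `simulate_filing_bias` are all assumptions chosen for the demonstration, not real tax rules or real data. It builds a synthetic population, keeps only the people whose income is at or above the threshold, and compares the two averages.

```python
import random
import statistics


def simulate_filing_bias(n=100_000, filing_threshold=12_000.0, seed=0):
    """Return (population mean, filer mean, share of population that files).

    All numbers are hypothetical; the point is the direction of the bias.
    """
    rng = random.Random(seed)
    # Synthetic right-skewed incomes (lognormal parameters are arbitrary).
    incomes = [rng.lognormvariate(10.5, 0.8) for _ in range(n)]
    # Selection rule: only people at or above the threshold appear in the data.
    filers = [x for x in incomes if x >= filing_threshold]
    return statistics.mean(incomes), statistics.mean(filers), len(filers) / n


if __name__ == "__main__":
    pop_mean, filer_mean, share = simulate_filing_bias()
    print(f"share of population observed: {share:.1%}")
    print(f"mean income, whole population: {pop_mean:,.0f}")
    print(f"mean income, filers only:      {filer_mean:,.0f}")
```

Because the selection rule removes only low incomes, the filer-only average is higher than the population average, even though nobody in the data misreported anything.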
Notice that the buying behavior example above is also not necessarily survey-based (you might just be observing customers’ purchases).

The general name for the phenomenon we’ve been discussing is selection bias. The way that the observations are selected can bias the results. Often, selection bias takes the form of self-selection: when people can choose whether or not to participate, those who agree have “selected themselves.”

III. Reporting Bias

This is a bias that creeps in not because the sample fails to represent the population, but because respondents’ answers aren’t reliable. This can be true for many different reasons:

1. People will often lie or misrepresent themselves in some way.

Example: Huff cites the survey of households to find out what magazines they read. But people may say they read things they don’t actually read, in order to seem more erudite or high-class.

Example: Huff also mentions a survey showing that people brush their teeth an average of 1.02 times per day. But people might just be giving what they know is the “right” answer, while their actual hygienic habits differ substantially.

Example: The ABC sex survey (discussed in the prior lecture). Some people may exaggerate their numbers; others may want to downplay their numbers. People also may have different notions of what constitutes “sex.”

Example: When asked whether they have a gun in the household, people might not wish to respond, for fear of admitting they are violating the law (if the gun is not registered) or of giving information to people they’d rather not have it (criminals, or government officials who might wish to confiscate guns in the future).

2. People will sometimes just guess.

Example: The ABC sex survey again. In the upper range of numbers (above 20 or 30), there was an inordinately large number of responses that were multiples of 5 or 10. People with large numbers of partners were probably just guessing the number.
Example: The same thing happens with self-reported income: people will tend to report dollar figures in multiples of $5,000 or $10,000.

3. The wording of questions affects the results in unexpected ways.

Example: When people are asked, “Do you favor or oppose allowing students and parents to choose a private school to attend at public expense?”, 37% say they favor it, while 55% say they oppose it. But when they are asked, “Do you favor or oppose allowing students and parents to choose any school, public or private, to attend using public funds?”, 60% say they favor it, while 33% say they oppose it. Which one of these questions does a better job of gauging whether people would like to see competition introduced in the education system? (It’s not obvious to me. But you can bet that opponents will cite the former survey, advocates the latter.)

4. People respond differently depending on the characteristics of the interviewer.

Example: Huff cites the case of black respondents giving different answers to a question depending on whether the interviewer was black or white. When asked which was more important, winning World War II or making democracy work better at home, black respondents were more likely to say the latter when the interviewer was black. (Is this just another case of people lying? Possibly, but it’s intriguing because it means people are more likely to lie to some interviewers than others. Or they might not be lying at all; people may have conflicting attitudes that are brought out by different interviewers.)
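The rounding pattern described under point 2 (answers piling up on multiples of $5,000) is something you can check for in a data set. The sketch below is a rough illustration under stated assumptions: the incomes are synthetic, the 60% "guess rate" is invented, and the helper names `share_multiples` and `simulate_reports` are hypothetical. It compares how often true values and reported values land exactly on a multiple of 5,000.

```python
import random


def share_multiples(values, base):
    """Fraction of values that are exact multiples of `base`."""
    return sum(1 for v in values if v % base == 0) / len(values)


def simulate_reports(n=10_000, guess_rate=0.6, seed=0):
    """Return (true incomes, reported incomes) for a synthetic population.

    Assumption: a `guess_rate` share of respondents round their income
    to the nearest 5,000; everyone else reports the exact figure.
    """
    rng = random.Random(seed)
    true_incomes = [rng.randint(15_000, 150_000) for _ in range(n)]
    reported = []
    for income in true_incomes:
        if rng.random() < guess_rate:
            reported.append(5_000 * round(income / 5_000))  # a rounded guess
        else:
            reported.append(income)  # an exact answer
    return true_incomes, reported


if __name__ == "__main__":
    true_incomes, reported = simulate_reports()
    print(f"true values on a multiple of 5,000:     "
          f"{share_multiples(true_incomes, 5_000):.2%}")
    print(f"reported values on a multiple of 5,000: "
          f"{share_multiples(reported, 5_000):.2%}")
```

If exact incomes were spread evenly, only a tiny fraction would fall on a multiple of 5,000 by chance, so a large share of round numbers in the reported data is a sign that many respondents are estimating rather than recalling.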