

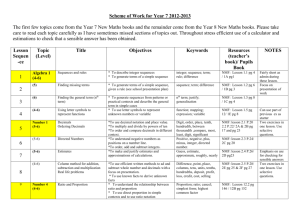

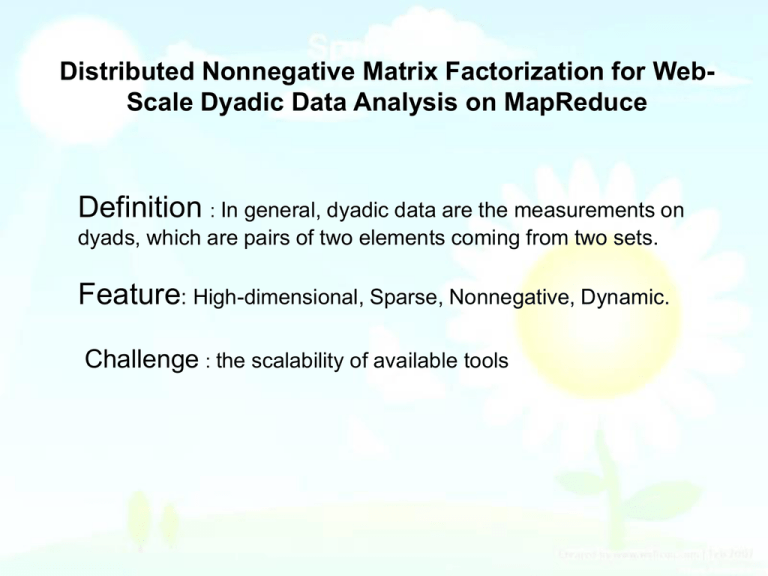

Distributed Nonnegative Matrix Factorization for Web-Scale Dyadic Data Analysis on MapReduce

Definition: In general, dyadic data are measurements on dyads, which are pairs of elements drawn from two sets.

Features: high-dimensional, sparse, nonnegative, and dynamic.

Challenge: the scalability of available tools.

What we do: In this paper, observing that a variety of Web dyadic data conform to different probability distributions, we put forward a probabilistic NMF framework. It not only encompasses the two classic models, Gaussian NMF (GNMF) and Poisson NMF (PNMF), but also introduces Exponential NMF (ENMF) for modeling Web lifetime dyadic data. Furthermore, we scale NMF up to arbitrarily large matrices on MapReduce clusters. In every model, $A \approx \tilde{A} = WH$ with $W \ge 0$ and $H \ge 0$.

GNMF: $A_{i,j} \sim \mathcal{N}(\tilde{A}_{i,j}, \sigma^2)$. Maximizing the likelihood of observing $A$ w.r.t. $W$ and $H$ under the i.i.d. assumption is equivalent to minimizing

$$\sum_{i,j} \left(A_{i,j} - \tilde{A}_{i,j}\right)^2 = \lVert A - WH \rVert_F^2.$$

PNMF: $A_{i,j} \sim \mathrm{Poisson}(\tilde{A}_{i,j})$. Maximizing the likelihood of observing $A$ then becomes minimizing

$$\sum_{i,j} \left(A_{i,j} \log \frac{A_{i,j}}{\tilde{A}_{i,j}} - A_{i,j} + \tilde{A}_{i,j}\right).$$

ENMF: $A_{i,j} \sim \mathrm{Exponential}(\tilde{A}_{i,j})$, with mean $\tilde{A}_{i,j}$. Maximizing the likelihood of observing $A$ w.r.t. $W$ and $H$ becomes minimizing

$$\sum_{i,j} \left(\log \tilde{A}_{i,j} + \frac{A_{i,j}}{\tilde{A}_{i,j}}\right).$$

Two parallelization schemes are compared: (a) resembles the CUDA programming model, and (b) is MapReduce programming. Scheme (a) puts a full row or column in shared memory and computes on a multi-core GPU. If a column is too long to fit in shared memory, (a) cannot work efficiently, because the memory-access overhead becomes large.

The updating formula for H (Eqn. 1) is composed of three components:

$$X = W^T A, \qquad Y = W^T W H, \qquad H \leftarrow H \mathbin{.*} X \mathbin{./} Y,$$

where $.*$ and $./$ denote element-wise multiplication and division. $Y$ is computed by first forming the small $k \times k$ matrix $C = W^T W$ and then computing $Y = CH$.

Experiments: The experiments are based on GNMF.

Sandbox experiment: This is designed for a clear understanding of the algorithm, not for showcasing its scalability. All reported times are the time taken by a single iteration.

Experiment on real Web data: This experiment investigates the effectiveness of the approach, with a particular focus on its scalability, because a method that does not scale well is of limited utility in practice.

Sandbox experiment: We generate sample matrices with m rows and n columns.
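To make the H update concrete, here is a minimal single-machine sketch in Python under stated assumptions; it is not the authors' implementation. It stores A as a dictionary of its nonzero cells (mimicking the (i, j, A_ij) records a MapReduce job would read), computes X = WᵀA by letting each nonzero cell emit A_ij · w_i keyed by column j and summing per key, forms the k × k matrix C = WᵀW, computes Y = CH, and applies H ← H .* X ./ Y. The helper names (`random_sparse`, `compute_x`, `compute_c`, `update_h`, `frobenius_loss`), the small `eps`, and the reading of "sparsity" as the probability that a cell is nonzero are hypothetical choices for illustration.

```python
import random
from collections import defaultdict


def random_sparse(m, n, sparsity, seed=0):
    """Hypothetical helper: an m x n nonnegative matrix stored as {(i, j): value}.

    Assumes 'sparsity' is the probability that a cell is nonzero.
    """
    rng = random.Random(seed)
    return {(i, j): rng.uniform(1.0, 5.0)
            for i in range(m) for j in range(n)
            if rng.random() < sparsity}


def compute_x(a_cells, w, k, n):
    """X = W^T A (k x n), touching only the nonzero cells of A."""
    # "Map": each nonzero A[i][j] emits (j, A[i][j] * W[i]).
    emitted = defaultdict(list)
    for (i, j), a in a_cells.items():
        emitted[j].append([a * w[i][r] for r in range(k)])
    # "Reduce": sum the emitted k-vectors per column j.
    x = [[0.0] * n for _ in range(k)]
    for j, vectors in emitted.items():
        for r in range(k):
            x[r][j] = sum(v[r] for v in vectors)
    return x


def compute_c(w, k):
    """C = W^T W (k x k): the sum of outer products of the rows of W."""
    c = [[0.0] * k for _ in range(k)]
    for row in w:
        for r in range(k):
            for s in range(k):
                c[r][s] += row[r] * row[s]
    return c


def update_h(a_cells, w, h, eps=1e-12):
    """One GNMF update H <- H .* X ./ Y with X = W^T A and Y = C H."""
    k, n = len(h), len(h[0])
    x = compute_x(a_cells, w, k, n)
    c = compute_c(w, k)
    y = [[sum(c[r][s] * h[s][j] for s in range(k)) for j in range(n)]
         for r in range(k)]
    # eps (an assumption of this sketch) guards against division by zero.
    return [[h[r][j] * x[r][j] / (y[r][j] + eps) for j in range(n)]
            for r in range(k)]


def frobenius_loss(a_cells, w, h, m, n):
    """GNMF objective ||A - WH||_F^2, computed densely (for checking only)."""
    k = len(h)
    total = 0.0
    for i in range(m):
        for j in range(n):
            wh = sum(w[i][r] * h[r][j] for r in range(k))
            total += (a_cells.get((i, j), 0.0) - wh) ** 2
    return total


if __name__ == "__main__":
    m, n, k = 40, 30, 3
    a = random_sparse(m, n, 2 ** -3, seed=1)
    rng = random.Random(2)
    w = [[rng.uniform(0.1, 1.0) for _ in range(k)] for _ in range(m)]
    h = [[rng.uniform(0.1, 1.0) for _ in range(n)] for _ in range(k)]
    before = frobenius_loss(a, w, h, m, n)
    h = update_h(a, w, h)
    print(f"loss before: {before:.3f}, after one H update: "
          f"{frobenius_loss(a, w, h, m, n):.3f}")
```

Because only nonzero cells emit records, the cost of computing X in this sketch grows with the number of nonzero cells in A rather than with m × n, while C stays k × k no matter how large m is. The dense `frobenius_loss` is there only to check that the update does not increase the GNMF objective.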
In the sandbox, $m = 2^{17}$, $n = 2^{16}$, and the sparsity is either $2^{-10}$ or $2^{-7}$.

Performance: The time taken per iteration grows as the number of nonzero cells in A increases. The time taken also increases with k; the slope for sparsity $2^{-10}$ is much smaller than that for sparsity $2^{-7}$, and both slopes are smaller than 1. The ideal speedup lies along the diagonal, which upper-bounds the practical speedup because of Amdahl's law. On the matrix with sparsity $2^{-7}$, the speedup is nearly 5 when 8 workers are enabled. As the sparsity decreases, extra overhead such as the shuffle becomes the dominant factor.

Experiment on real Web data: We record a UID-by-website count matrix involving 17.8 million distinct UIDs and 7.24 million distinct websites, which serves as the training set. For an effective test, we take the top 1,000 UIDs with the largest number of associated websites, which gives 346,040 distinct (UID, website) pairs. To measure effectiveness, we randomly hold out one visited website for each UID and mix it with 99 unvisited websites; these 100 websites constitute the test case for that UID. The goal is to check the rank of the held-out visited website among the 100 websites for each UID, and overall effectiveness is measured by the average rank across the 1,000 test cases (the smaller, the better).

Scalability: On the one hand, the elapsed time increases linearly with the number of iterations; on the other hand, the average time per iteration becomes smaller when more iterations are executed in one job. The elapsed time is also linear in the dimensionality k. A further result lists how the algorithm scales with increasingly larger data sampled from increasingly longer time periods.

Conclusion