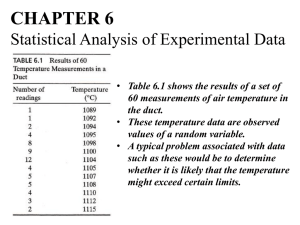

Stochastic Nature of Radioactivity

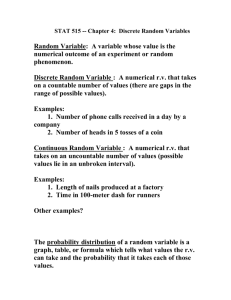

PROBABILITY AND THE BINOMIAL DISTRIBUTION

Physics 270

LAWS OF CHANCE

• Experiment with one die, thrown n times
• m – frequency of a given result (say, rolling a 4)
• m/n – relative frequency of this result
• All six outcomes of a throw are equally probable.
• The throws are independent.

The measured relative frequency tends to a limit, 1/6, as the number of throws grows.

BINOMIAL PROBABILITY

Binomial probability deals with the probability of several successive decisions, each of which has two possible outcomes.

If an event has a probability p of happening, then the probability of it happening twice in independent trials is $p^2$, and in general $p^n$ for n successive trials.

If we want to know the probability of rolling a die three times and getting two fours and then one other number (in that specific order), it becomes:

$$P(44n) = P(4)\,P(4)\,P(n) = \left(\frac{1}{6}\right)^{2}\left(\frac{5}{6}\right) = 0.023$$

where the n in $P(44n)$ stands for a non-four roll.

However, this is only sufficient for problems where the order is specified. If the order is not important in the above example, then there are 3 ways that 2 rolls of four and 1 other could occur: 110, 101, 011, where 1 represents a roll of four and 0 represents a non-four roll. Since there are 3 ways of achieving the same goal, the probability is 3 times that of before, or 6.9%.

BINOMIAL DISTRIBUTION

General Formula

$$P(m) = C_n^m\, a^m\, b^{\,n-m}, \qquad C_n^m = \frac{n!}{m!\,(n-m)!}, \qquad \text{where } m \le n$$

Here $P(m)$ is the probability of m successes in n trials, a is the probability of success in a single trial, and $b = 1 - a$.

• completely specified by n and a
• expectation value: $\langle m \rangle = \mu = na$
• standard deviation: $\sigma = (nab)^{1/2}$

Example: A baseball player's batting average is 0.333.

• The probability for a hit: a = 0.333
• The probability for an out: b = 1 − a = 0.667
• Average number of hits the player gets in 100 at bats: µ = na = (100)(0.333) = 33
• The standard deviation for 100 at bats: $\sigma = \sqrt{nab} = \sqrt{(100)(0.333)(0.667)} = 4.7$

So we can expect 33 ± 4.7 hits for every 100 at bats.

POISSON DISTRIBUTION

If the probability p is small and the number of observations is large, the binomial probabilities are hard to calculate. In this instance it is much easier to approximate the binomial probabilities by Poisson probabilities.
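The binomial numbers quoted so far (the dice probabilities and the batting example) can be checked numerically. The following is a minimal sketch, not part of the original slides. The function names `binomial_pmf`, `binomial_mean_std` and `poisson_pmf` are hypothetical helpers, and the values n = 1000, a = 0.002 in the last part are assumptions, chosen only to illustrate a "large n, small p" case.

```python
import math


def binomial_pmf(m, n, a):
    """Probability of exactly m successes in n trials, success probability a."""
    return math.comb(n, m) * a**m * (1 - a) ** (n - m)


def binomial_mean_std(n, a):
    """Expectation value na and standard deviation sqrt(nab), with b = 1 - a."""
    return n * a, math.sqrt(n * a * (1 - a))


def poisson_pmf(m, mu):
    """Poisson probability of m events when the mean number of events is mu."""
    return mu**m * math.exp(-mu) / math.factorial(m)


# Dice: two fours and one non-four in three rolls.
p_ordered = (1 / 6) ** 2 * (5 / 6)       # specific order, e.g. 4, 4, other
p_any_order = binomial_pmf(2, 3, 1 / 6)  # any of the 3 orderings

# Batting: 100 at bats with hit probability 0.333.
mean_hits, std_hits = binomial_mean_std(100, 0.333)

print(f"ordered: {p_ordered:.3f}, any order: {p_any_order:.3f}")
print(f"hits per 100 at bats: {mean_hits:.1f} +/- {std_hits:.1f}")

# Illustrative values (assumed, not from the slides): large n, small a.
n_large, a_small = 1000, 0.002
max_diff = max(
    abs(binomial_pmf(m, n_large, a_small) - poisson_pmf(m, n_large * a_small))
    for m in range(6)
)
print(f"largest binomial/Poisson difference for m = 0..5: {max_diff:.1e}")
```

`math.comb` needs Python 3.8 or later. For the assumed values the two distributions agree closely, which is the sense in which the Poisson form serves as an approximation.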
The binomial distribution approaches the Poisson distribution for large n and small p:

$$p(m) = \frac{\mu^{m} e^{-\mu}}{m!}$$

The Poisson distribution can be derived from an approximation of the binomial distribution:

$$P(m) = \frac{n!}{m!\,(n-m)!}\, a^{m}\, b^{\,n-m}$$

For $n \gg m$:

$$\frac{n!}{(n-m)!} = \frac{n(n-1)\cdots(n-m+1)\,(n-m)!}{(n-m)!} \approx n^{m}$$

and for small a:

$$b^{\,n-m} = (1-a)^{n-m} = 1 - a(n-m) + \frac{a^{2}(n-m)(n-m-1)}{2!} - \cdots \approx 1 - an + \frac{(an)^{2}}{2!} - \cdots = e^{-an}$$

Substituting both results, with $\mu = na$:

$$P(m) \approx \frac{(na)^{m} e^{-na}}{m!} = \frac{\mu^{m} e^{-\mu}}{m!}$$

Example: Radioactive Decay

• $n = 10^{20}$ atoms, half-life $= 10^{12}$ y, which corresponds to a mean lifetime $\tau = t_{1/2}/\ln 2 \approx 5 \times 10^{19}$ s
• The probability for a decay: $a = 1/\tau = 2 \times 10^{-20}$/s
• Average number of decays per second: $\mu = na = (10^{20}\ \text{atoms})(2 \times 10^{-20}/\text{s}) = 2$/s

What's the probability of zero decays in one second?

$$p(0) = \frac{2^{0} e^{-2}}{0!} = 0.135 \text{ or } 13.5\%$$

What's the probability of more than 1 decay in one second?

$$p(>1) = 1 - p(0) - p(1) = 1 - \frac{2^{0} e^{-2}}{0!} - \frac{2^{1} e^{-2}}{1!} = 59.4\%$$

GAUSSIAN DISTRIBUTION

• applies when there are a large number of events per observation
• requires many observations
• resembles a normal distribution (it is continuous!)
• σ is the standard deviation
• μ is the mean observed number of events

$$p(r) = \frac{1}{\sigma\sqrt{2\pi}}\; e^{-(r-\mu)^{2}/2\sigma^{2}}$$

The probability of r being in the range between $r_1$ and $r_2$ is given by

$$p(r_1 \le r \le r_2) = \frac{1}{\sigma\sqrt{2\pi}} \int_{r_1}^{r_2} e^{-(r-\mu)^{2}/2\sigma^{2}}\, dr$$

which cannot be evaluated analytically (it can be looked up in a table). If the limits are at ±∞, then it normalizes to 1.

It is very unlikely (< 0.3%) that a measurement taken at random from a Gaussian data set will be more than ±3σ from the true mean of the distribution.

An example illustrating the small difference between the binomial and Gaussian distributions under the above conditions: consider tossing a coin 10 000 times.

• a(heads) = 0.5
• n = 10 000
• mean number of heads: μ = na = 5000
• standard deviation: $\sigma = [na(1-a)]^{0.5} = 50$
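The Poisson and Gaussian figures above can be reproduced with a few lines of code. This is a minimal sketch under stated assumptions, not the lecturer's method. `poisson_pmf` and `gaussian_interval` are hypothetical helper names, and the Gaussian integral is evaluated with the error function `math.erf`, which plays the role of the lookup table mentioned in the text.

```python
import math


def poisson_pmf(m, mu):
    """Poisson probability of m events when the mean number of events is mu."""
    return mu**m * math.exp(-mu) / math.factorial(m)


def gaussian_interval(r1, r2, mu, sigma):
    """Probability that a Gaussian variable (mean mu, std sigma) lies in [r1, r2].

    Uses the error function instead of a printed table.
    """
    z1 = (r1 - mu) / (sigma * math.sqrt(2))
    z2 = (r2 - mu) / (sigma * math.sqrt(2))
    return 0.5 * (math.erf(z2) - math.erf(z1))


# Radioactive decay example: mean of 2 decays per second.
mu_decay = 2.0
p_zero = poisson_pmf(0, mu_decay)
p_more_than_one = 1 - p_zero - poisson_pmf(1, mu_decay)

# Coin-toss example: n = 10 000 tosses, a = 0.5.
n, a = 10_000, 0.5
mu = n * a
sigma = math.sqrt(n * a * (1 - a))

# Fraction of a Gaussian lying more than 3 sigma from the mean.
outside_3sigma = 1 - gaussian_interval(mu - 3 * sigma, mu + 3 * sigma, mu, sigma)

print(f"p(0) = {p_zero:.3f}, p(>1) = {p_more_than_one:.3f}")
print(f"mu = {mu:.0f}, sigma = {sigma:.0f}")
print(f"P(|r - mu| > 3 sigma) = {outside_3sigma:.4f}")
```

Under the Gaussian approximation, this means nearly all runs of 10 000 tosses should give between 4850 and 5150 heads.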