Lecture 14: Penalized Regression II

advertisement

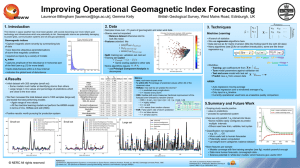

Penalized Regression, Part 2 Penalized Regression Recall in penalized regression, we re-write our loss function to include not only the squared error loss but a penalty term M q L q x p q Our goal then becomes to minimize our a loss function (i.e. SS) In the regression setting we can write M(q ) in terms of our regression parameters b as follows M b y Xb y Xb p b ' The penalty function takes the form p b j 1 b j , for q 0 r q Ridge Regression Last class we discussed ridge regression as an alternative to OLS when covariates are collinear Ridge regression can reduce the variability and improve accuracy of a regression model However, there is not a means of variable selection in ridge regression Ideally we want to be able to reduce the variability in a model but also be able to select which variables are most strongly associated with our outcome The Lasso versus Ridge Regression In ridge regression, the new function is n i i Yi b0 j 1 X ij b j p 2 j 1 b j2 p Consider instead the estimator which minimizes n i i Yi b0 j 1 X ij b j p 2 j 1 b j p The only change is to the penalty function and while the change is subtle, is has a big impact on our regression estimator The Lasso The name lasso stands for “Least Absolute Shrinkage and Selection Operator” Like ridge regression, penalizing the absolute values of the coefficients shrinks them towards zero But in the lasso, some coefficients are shrunk completely to zero Solutions where multiple coefficient estimates are identically zero are called sparse Thus the penalty performs a continuous variable selection, hence the name Geometry of Ridge versus Lasso 2-dimensional case b2 b2 βˆ βˆ b1 2 2 Solid areas represent the constraint regions b1 b2 t & b1 b2 t The ellipses represent the contours of the least square error function b1 The Lasso Because the lasso penalty has an absolute value operation, the objective function is not differentiable and therefore lacks a closed form As a result, we must use optimization algorithms to find the minimum Examples of these algorithms include -Quadratic programming (limit ~100 predictors) -Least Angle Regression/LAR (limit ~10,000 predictors) Selection of Since lasso is not a linear estimator, we have no H matrix such that yˆ Hy Thus determining the degrees of freedom are more difficult to estimate One means is to estimate the degrees of freedom based on the number of non-zero parameters in the model and then use AIC, BIC or Cp to select the best Alternatively (and often more preferred) we could select via cross-validation Forward Stagewise Selection Alternative method for variable subset selection designed to handle correlated predictors Iterative process that begins with all coefficients equal to zero and build regression function in successive small steps Similar algorithm to forward selection in that predictors added successively to the model However, it is much more cautious than forward stepwise model selection -e.g. for a model with 10 possible predictors stepwise takes 10 steps at most, stagewise may take 5000+ Forward Stagewise Selection Stagewise algorithm: (1) Initialize model such that βˆ 0 ˆ y 0 and r y y (2) Find the predictor Xj1 that is most correlated with r and add it to the model (here j1 arg max j cˆ j ) (3) Update bˆ j bˆ j j where j h sign cˆ j -Note, h is a small constant controlling step-length 1 1 1 1 1 (4) Update μˆ μˆ j X j and r r j X j 1 1 (5) Repeat steps 2 thru 4 until cˆ 0 1 1 Stagewise versus Lasso Although the algorithms look entirely different, their results are very similar! They will trace very similar paths for addition of predictors to the model They both represent special cases of a method called least angle regression (LAR) Least Angle Regression LAR algorithm: (1) Initialize model such that βˆ 0 ˆ y 0 and r y y Also initialize an empty “active set” A (subset of indices) (2) Find the predictor X j1 that is most correlated with r where j1 arg max j cˆ j ; update the active set to include X j A1 X j 1 1 (3) Move bˆ j toward sign cˆ j until some other covariate X j has the same correlation with r that bˆ j does. Update the active set to include X j2 A2 X j1 , X j2 1 1 2 1 (4) Update r and move along bˆ j , bˆ j towards the joint OLS direction for the regression of r on X j , X j until a third covariate X j2 is as correlated with r as the first two predictors. Update the active set to include X j A3 X j , X j , X j 1 2 1 3 1 2 2 3 (5) Continue until all k covariates have been added to the model In Pictures Consider a case where we have 2 predictors… x2 x2 y2 yˆ 2 yˆ 0 yˆ 1 x1 y1 Efron et al. 2004 Relationship Between LAR and Lasso LAR is a more general method than lasso A modification of the LAR algorithm produces the entire lasso path for varied from 0 to infinity Modification occurs if a previously non-zero coefficient estimated to be zero at some point in the algorithm If this occurs, the LAR algorithm is modified such that the coefficient is removed from the active set and the joint direction is recomputed This modification is the most frequently implements version of LAR Relationship Bt/ LAR and Stagewise LAR is also a more general method than stagewise selection Can also reproduce stagewise results using modified LAR Start with the LAR algorithm and determine the best direction at each stage If the direction for any predictor in the active set doesn’t agree in sign with the correlation between r and Xj, adjust to move in the direction of corr(r, Xj) As step sizes go to 0, we get a modified version of the LAR algorithm Summary of the Three Methods • LARS – Uses least square directions in the active set of variables • Lasso – Uses the least square directions – If the variable crosses 0, it is removed from the active set • Forward stagewise – Uses non-negative least squares directions in the active set Degrees Freedom in LAR and lasso Consider fitting a LAR model with k < p parameters Equivalently use a lasso bound t that constrains the full regression fit General definition for the effective degrees of freedom (edf) for an adaptively fit model: df yˆ 2 i 1 Cov yˆi , yi 1 N For LARS at the kth step, the edf for the fit vector is exactly k For lasso, at any stage in the fit the effective degrees of freedom is approximately the number of predictors in the model Software Packages What if we consider lasso, forward stagewise, or LAR as alternatives? There are 2 packages in R that will allow us to do this -lars -glmnet The lars package has the advantage of being able to fit all three model types (plus a typical forward stepwise selection algorithm) However, the glmnet package can fit lasso regression models for different types of regression -linear, logistic, cox-proportional hazards, multinomial, and poisson Body Fat Example Recall our regression model > summary(mod13) Call: lm(formula = PBF ~ Age + Wt + Ht + Neck + Chest + Abd + Hip + Thigh + Knee + Ankle + Bicep + Arm + Wrist, data = bodyfat, x = T) Coefficients: Estimate (Intercept) -18.18849 Age 0.06208 Wt -0.08844 Ht -0.06959 Neck -0.47060 Chest -0.02386 Abd 0.95477 Hip -0.20754 Thigh 0.23610 Knee 0.01528 Ankle 0.17400 Bicep 0.18160 Arm 0.45202 Wrist -1.62064 Std. Error 17.34857 0.03235 0.05353 0.09601 0.23247 0.09915 0.08645 0.14591 0.14436 0.24198 0.22147 0.17113 0.19913 0.53495 t value -1.048 1.919 -1.652 -0.725 -2.024 -0.241 11.04 -1.422 1.636 0.063 0.786 1.061 2.270 -3.030 Pr(>|t|) 0.29551 0.05618 . 0.09978 . 0.46925 0.04405 * 0.81000 < 2e-16 *** 0.15622 0.10326 0.94970 0.43285 0.28966 0.02410 * 0.00272 ** Residual standard error: 4.305 on 238 degrees of freedom. Multiple R-squared: 0.749, Adjusted R-squared: 0.7353 . F-statistic: 54.65 on 13 and 238 DF, p-value: < 2.2e-16 Body Fat Example LAR: >library(lars) >par(mfrow=c(2,2)) >object <- lars(x=as.matrix(bodyfat[,3:15]),y=as.vector(bodyfat[,2]), type="lasso") >plot(object, breaks=F) >object2 <- lars(x=as.matrix(bodyfat[,3:15]),y=as.vector(bodyfat[,2]), type="lar") >plot(object2, breaks=F) >object3 <- lars(x=as.matrix(bodyfat[,3:15]),y=as.vector(bodyfat[,2]), type=“for") >plot(object3, breaks=F) >object4 <- lars(x=as.matrix(bodyfat[,3:15]),y=as.vector(bodyfat[,2]), type=“stepwise") >plot(object4, breaks=F) Body Fat Example A closer look at the model: >object <- lars(x=as.matrix(bodyfat[,3:15]),y=as.vector(bodyfat[,2]), type="lasso") > names(object) [1] "call" "type" "df" "lambda" "R2" "RSS" "Cp" [9] "entry" "Gamrat" "arc.length" "Gram" "beta" "mu" "actions" "normx" "meanx" > object$beta Age Wt Ht Neck Chest Abd Hip Thigh Knee Ankle 0 0.00000000 0.00000000 0.00000000 0.00000000 0.00000000 0.0000000 0.000000000 0.00000000 0.00000000 0.0000000 1 0.00000000 0.00000000 0.00000000 0.00000000 0.00000000 0.5164924 0.000000000 0.00000000 0.00000000 0.0000000 2 0.00000000 0.00000000 -0.04395065 0.00000000 0.00000000 0.5314218 0.000000000 0.00000000 0.00000000 0.0000000 3 0.01710504 0.00000000 -0.13752803 0.00000000 0.00000000 0.5621288 0.000000000 0.00000000 0.00000000 0.0000000 4 0.04880181 0.00000000 -0.15894236 0.00000000 0.00000000 0.6550929 0.000000000 0.00000000 0.00000000 0.0000000 5 0.04994577 0.00000000 -0.15905246 -0.02624509 0.00000000 0.6626603 0.000000000 0.00000000 0.00000000 0.0000000 6 0.06499276 0.00000000 -0.15911969 -0.25799496 0.00000000 0.7079872 0.000000000 0.00000000 0.00000000 0.0000000 7 0.06467180 0.00000000 -0.15921694 -0.26404701 0.00000000 0.7118167 -0.004720494 0.00000000 0.00000000 0.0000000 8 0.06022586 -0.01117359 -0.14998300 -0.29599536 0.00000000 0.7527298 -0.022557736 0.00000000 0.00000000 0.0000000 9 0.05710956 -0.02219531 -0.14039586 -0.32675736 0.00000000 0.7842966 -0.035675017 0.00000000 0.00000000 0.0000000 10 0.05853733 -0.04577935 -0.11203059 -0.39386199 0.00000000 0.8425758 -0.101022340 0.09657784 0.00000000 0.0000000 11 0.06132775 -0.07889636 -0.07798153 -0.45141574 0.00000000 0.9142944 -0.171178163 0.20141924 0.00000000 0.1259630 12 0.06214695 -0.08452690 -0.07220347 -0.46528070 -0.01582661 0.9402896 -0.194491760 0.22553958 0.00000000 0.1586161 13 0.06207865 -0.08844468 -0.06959043 -0.47060001 -0.02386415 0.9547735 -0.207541123 0.23609984 0.01528121 0.1739954 Bicep Arm Wrist 0 0.00000000 0.0000000 0.000000 1 0.00000000 0.0000000 0.000000 2 0.00000000 0.0000000 0.000000 3 0.00000000 0.0000000 0.000000 4 0.00000000 0.0000000 -1.169755 5 0.00000000 0.0000000 -1.198047 6 0.00000000 0.2175660 -1.535349 7 0.00000000 0.2236663 -1.538953 8 0.00000000 0.2834326 -1.535810 9 0.04157133 0.3117864 -1.534938 10 0.09096070 0.3635421 -1.522325 11 0.15173471 0.4229317 -1.587661 12 0.17055965 0.4425212 -1.607395 13 0.18160242 0.4520249 -1.620639 Body Fat Example A closer look at the model: > names(object) [1] "call" "type" "df" "lambda" "R2" "RSS" "Cp" "actions" [9] "entry" "Gamrat" "arc.length" "Gram" "beta" "mu" "normx" "meanx" > object$df Intercept 1 12 2 13 3 4 5 6 7 8 9 10 11 14 > object$Cp 0 1 2 3 4 5 6 7 8 9 10 698.4 93.62 85.47 65.41 30.12 30.51 19.39 20.91 18.68 17.41 12.76 11 12 13 10.47 12.06 14.00 Body Fat Example Glmnet: >fit<-glmnet(x=as.matrix(bodyfat[,3:15]),y=as.vector(bodyfat[,2]), alpha=1) >fit.cv<-cv.glmnet(x=as.matrix(bodyfat[,3:15]), y=as.vector(bodyfat[,2]), alpha=1) >plot(fit.cv, sign.lambda=-1) >fit<-glmnet(x=as.matrix(bodyfat[,3:15]),y=as.vector(bodyfat[,2]), alpha=1, 0.02123575) Body Fat Example Glmnet: >fit<-glmnet(x=as.matrix(bodyfat[,3:15]),y=as.vector(bodyfat[,2]), alpha=1) >names(fit) [1] "a0" "beta" [10] "offset" "call" "df" "dim" "nobs" "lambda" "dev.ratio" "nulldev" "npasses" "jerr" > fit$lambda [1] 6.793883455 6.190333574 5.640401401 5.139323686 4.682760334 4.266756812 3.887709897 3.542336464 3.227645056 [10] 2.940909965 2.679647629 2.441595119 2.224690538 2.027055162 1.846977168 1.682896807 1.533392893 1.397170495 [19] 1.273049719 1.159955490 1.056908242 0.963015426 0.877463790 0.799512325 0.728485854 0.663769178 0.604801754 [28] 0.551072833 0.502117041 0.457510347 0.416866389 0.379833128 0.346089800 0.315344136 0.287329832 0.261804242 [37] 0.238546274 0.217354481 0.198045308 0.180451508 0.164420694 0.149814013 0.136504949 0.124378225 0.113328806 [46] 0.103260988 0.094087566 0.085729086 0.078113150 0.071173793 0.064850910 0.059089734 0.053840365 0.049057335 [55] 0.044699216 0.040728261 0.037110075 0.033813318 0.030809436 0.028072411 0.025578535 0.023306209 0.021235749 [64] 0.019349224 0.017630292 0.016064066 0.014636978 0.013336669 0.012151876 0.011072337 0.010088701 0.009192449 [73] 0.008375817 0.007631733 0.006953750 0.006335998 0.005773126 0.005260257 Body Fat Example Glmnet: >fit.cv<-cv.glmnet(x=as.matrix(bodyfat[,3:15]), y=as.vector(bodyfat[,2]), alpha=1) > names(fit.cv) [1] "lambda" "cvm" "cvsd" "cvup" "cvlo" "nzero" "name" "glmnet.fit" [9] "lambda.min" "lambda.1se" > fit.cv$lambda.min [1] 0.02123575 Ridge versus Lasso Coefficient Paths Ridge Trace Plot Lasso LARS Stagewise Stepwise Body Fat Example Variable OLS Ridge Lasso Age 0.0635 0.0743 0.0607 Weight -0.0824 -0.0668 0.0000 Height -0.2391 -0.0922 -0.2639 Neck -0.3881 -0.4667 -0.3140 Chest -0.1321 0.0071 -0.0916 Abdomen 0.9017 0.8703 0.8472 Hip -0.2129 -0.1750 -0.1408 Thigh 0.2026 0.2301 0.1499 Knee -0.0082 -0.0108 0.0000 Ankle -0.0085 0.1374 0.0000 Bicep 0.1902 0.1561 0.1792 Arm 0.1913 0.4329 0.0563 Wrist -1.6053 -1.6678 -1.5348 If we remove the outliers and clean up the data before analysis… Variable OLS Ridge Lasso Age 0.0653 0.0953 0.0473 Weight -0.0024 0.02867 0.0000 Height -0.2391 -0.3987 -0.2926 Neck -0.3881 -0.2171 -0.1379 Chest -0.1321 0.0643 0.0000 Abdomen 0.9017 0.5164 0.7368 Hip -0.2129 0.0428 0.0000 Thigh 0.2026 0.1894 0.2710 Knee -0.0082 0.0584 0.0000 Ankle -0.0085 -0.1798 0.0000 Bicep 0.1902 0.1436 0.0560 Arm 0.1913 -0.0932 0.0000 Wrist -1.6053 -1.4160 -1.4049 Body Fat Example What can we do in SAS? SAS can also do cross-validation However, it only fits linear regression Here’s the basic SAS code ods graphics on; proc glmselect data=bf plots=all; model pbf=age wt ht neck chest abd hip thigh knee ankle bicep arm wrist/selection=lasso(stop=none choose=AIC); run; ods graphics off; The GLMSELECT Procedure LASSO Selection Summary Effect Effect Number Step Entered Removed Effects In AIC 0 Intercept 1 1 1325.7477 ----------------------------------------------------------------------------------------------1 Abd 2 2 1070.4404 2 Ht 3 3 1064.8357 3 Age 4 4 1049.4793 4 Wrist 5 5 1019.1226 5 Neck 6 6 1019.6222 6 Arm 7 7 1009.0982 7 Hip 8 8 1010.6285 8 Wt 9 9 1008.4396 9 Bicep 10 10 1007.1631 10 Thigh 11 11 1002.3524 11 Ankle 12 12 999.8569* 12 Chest 13 13 1001.4229 13 Knee 14 14 1003.3574 Penalized regression methods are most useful when -high collinearity exists -when p >> n Keep in mind you still need to look at the data first Could also consider other forms of penalized regression, though in practice alternatives are not used