Windows Azure Storage


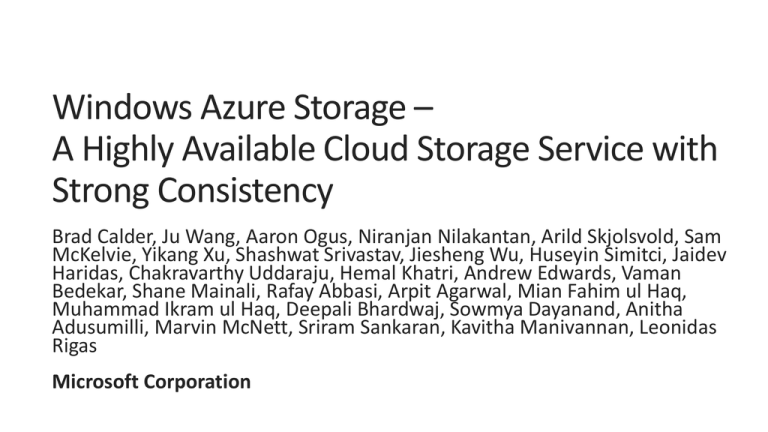

Windows Azure Storage – A Highly Available Cloud Storage Service with Strong Consistency

Brad Calder, Ju Wang, Aaron Ogus, Niranjan Nilakantan, Arild Skjolsvold, Sam McKelvie, Yikang Xu, Shashwat Srivastav, Jiesheng Wu, Huseyin Simitci, Jaidev Haridas, Chakravarthy Uddaraju, Hemal Khatri, Andrew Edwards, Vaman Bedekar, Shane Mainali, Rafay Abbasi, Arpit Agarwal, Mian Fahim ul Haq, Muhammad Ikram ul Haq, Deepali Bhardwaj, Sowmya Dayanand, Anitha Adusumilli, Marvin McNett, Sriram Sankaran, Kavitha Manivannan, Leonidas Rigas
Microsoft Corporation

Windows Azure Storage
• Blobs
• Tables
• Queues
• Drives

Design Goals

High Level Architecture
• Access blob storage via the URL: http://<account>.blob.core.windows.net/
[Figure: Storage Location Service; data access through load balancers (LB) to Storage Stamps, each with Front-Ends, a Partition Layer, and a Stream Layer with intra-stamp replication; inter-stamp (geo) replication between stamps]

Stream Layer
• Append-only distributed file system
• All data from the Partition Layer is stored in files (extents) in the Stream Layer
• Each extent is replicated 3 times across different fault and upgrade domains
  • Replica locations are selected at random, for fast MTTR
• All stored data is checksummed
  • Verified on every client read
  • Scrubbed every few days
• Data is re-replicated on disk/node/rack failure or checksum mismatch
[Figure: Stream Layer (distributed file system): Paxos-replicated masters (M) over the Extent Nodes (EN)]

Partition Layer
• Provides transaction semantics and strong consistency for Blobs, Tables and Queues
• Stores objects in, and reads them from, extents in the Stream Layer
• Provides inter-stamp (geo) replication by shipping logs to other stamps
• Provides a scalable object index via partitioning
[Figure: Partition Layer: Partition Master and Lock Service managing the Partition Servers, on top of the Stream Layer]

Front End Layer
• Stateless servers
• Authentication + authorization
• Request routing
[Figure: Front End Layer (FE servers) above the Partition Layer and the Stream Layer]
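The Stream Layer bullets on replica placement and checksums can be made concrete with a small model. This is a minimal Python sketch, assuming each Extent Node carries a fault-domain number and an upgrade-domain number, and using zlib's CRC32 as a stand-in checksum (the slides do not say which checksum WAS uses). `ExtentNode`, `place_replicas`, `StoredBlock`, `read_block`, and `ChecksumMismatch` are hypothetical names, not WAS APIs.

```python
import random
import zlib
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ExtentNode:
    name: str
    fault_domain: int    # assumed model: nodes sharing a rack/power unit share this
    upgrade_domain: int  # assumed model: nodes upgraded together share this


def place_replicas(nodes, copies=3, rng=random, attempts=10):
    """Randomly pick `copies` nodes whose fault and upgrade domains are all
    distinct (hypothetical helper). Random choice spreads the replicas of
    different extents over many nodes, so after a failure many nodes can
    re-replicate in parallel. Greedy per attempt, retried a few times."""
    for _ in range(attempts):
        candidates = list(nodes)
        rng.shuffle(candidates)
        chosen, used_fd, used_ud = [], set(), set()
        for node in candidates:
            if node.fault_domain in used_fd or node.upgrade_domain in used_ud:
                continue  # would put two replicas in the same failure/upgrade unit
            chosen.append(node)
            used_fd.add(node.fault_domain)
            used_ud.add(node.upgrade_domain)
            if len(chosen) == copies:
                return chosen
    raise RuntimeError("not enough independent fault/upgrade domains")


class ChecksumMismatch(Exception):
    """Signals a corrupt replica that should be re-replicated."""


@dataclass
class StoredBlock:
    data: bytes
    crc: int = field(init=False)

    def __post_init__(self):
        self.crc = zlib.crc32(self.data)  # checksum computed when the block is written


def read_block(block: StoredBlock) -> bytes:
    """Verify the checksum on every read; a background scrubber could call this too."""
    if zlib.crc32(block.data) != block.crc:
        raise ChecksumMismatch("checksum mismatch: re-replicate from a healthy replica")
    return block.data


# 20 nodes over 4 fault domains and 5 upgrade domains (made-up layout).
nodes = [ExtentNode(f"EN{i}", i % 4, i % 5) for i in range(20)]
replicas = place_replicas(nodes, rng=random.Random(42))
print([n.name for n in replicas])
```

The greedy pick can give up on layouts where a valid set exists but the shuffle order is unlucky; the retry loop is a cheap hedge for that, not a claim about how WAS chooses replicas.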
[Figure: Incoming Write Request passing through the Front End Layer (FE servers), the Partition Layer (Partition Master, Lock Service, Partition Servers), and the Stream Layer (Paxos-replicated masters, Extent Nodes), with the Ack returned]

Partition Layer
• Needs a scalable index for the objects that can:
  • Spread the index across 100s of servers
  • Dynamically load balance
  • Dynamically change which servers serve each part of the index, based on load

Blob Index (rows sorted by Account Name, Container Name, Blob Name):

| Account Name | Container Name | Blob Name |
|---|---|---|
| aaaa | aaaa | aaaaa |
| … | … | … |
| harry | pictures | sunrise |
| harry | pictures | sunset |
| … | … | … |
| richard | videos | soccer |
| richard | videos | tennis |
| … | … | … |
| zzzz | zzzz | zzzzz |

Partition Map (used by the Front-End servers to route requests):
• A-H: PS1
• H'-R: PS2
• R'-Z: PS3
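The Partition Map above can be read as a sorted list of key ranges, each owned by one Partition Server. The following minimal sketch shows how a Front-End server might look up the owner of a blob and how a range could be split for load balancing. It assumes keys are (account, container, blob) tuples compared lexicographically, and it translates A-H / H'-R / R'-Z into half-open ranges on lowercase names (boundaries `("i",)` and `("s",)`); that translation, `PartitionMap`, `lookup`, `split`, and the server `PS4` are all hypothetical.

```python
from bisect import bisect_left, bisect_right


class PartitionMap:
    """Hypothetical range-partitioned map for the Blob Index.

    bounds[i] is the exclusive upper end of range i and servers[i] serves it;
    the last server serves every key >= bounds[-1].
    """

    def __init__(self, bounds, servers):
        if len(servers) != len(bounds) + 1 or list(bounds) != sorted(bounds):
            raise ValueError("need sorted bounds and one more server than bounds")
        self.bounds = list(bounds)
        self.servers = list(servers)

    def lookup(self, account, container, blob):
        """Find the Partition Server for a key, as a Front-End would before routing."""
        key = (account, container, blob)
        return self.servers[bisect_right(self.bounds, key)]

    def split(self, boundary, new_server):
        """Load balancing: keys >= boundary in the range containing it move to new_server."""
        i = bisect_left(self.bounds, boundary)
        if i < len(self.bounds) and self.bounds[i] == boundary:
            raise ValueError("boundary already exists")
        self.bounds.insert(i, boundary)
        self.servers.insert(i + 1, new_server)


# Assumed translation of A-H / H'-R / R'-Z into half-open ranges.
pmap = PartitionMap([("i",), ("s",)], ["PS1", "PS2", "PS3"])
print(pmap.lookup("harry", "pictures", "sunrise"))  # PS1
print(pmap.lookup("richard", "videos", "soccer"))   # PS2
pmap.split(("r",), "PS4")  # hypothetical: offload the busy tail of H'-R
print(pmap.lookup("richard", "videos", "soccer"))   # PS4
```

In this model, rebalancing changes only the map: inserting one boundary reassigns part of a range to another server.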
[Figure: Storage Stamp: the Partition Master holds the Partition Map (A-H: PS1, H'-R: PS2, R'-Z: PS3) and assigns each range to Partition Server PS1, PS2, or PS3]

[Figure: Inside a Partition Server: Writes go to the Commit Log Stream and the Metadata Log Stream; Read/Query requests are served from the Checkpoint File, Table Data, and Blob Data streams]

Stream Layer
[Figure: Stream //foo/myfile.data is an ordered list of pointers (Ptr E1 to Ptr E4) to Extents E1 to E4; each extent is a sequence of blocks]
[Figure: Create Stream/Extent: the Partition Layer asks the Stream Master (SM, replicated with Paxos), which allocates an extent replica set: EN1 Primary, EN2 Secondary A, EN3 Secondary B]
[Figure: Append: the Partition Layer appends to EN1 (Primary), which forwards to EN2 (Secondary A) and EN3 (Secondary B); the Ack returns to the Partition Layer]
[Figure: Stream //foo/myfile.data with pointers to Extents E1 to E5]
[Figure: Seal Extent: the SM asks EN1, EN2, and EN3 for their current length; all report 120, so the extent is sealed at 120 and the replicas sync with the SM]
[Figure: Seal Extent when lengths differ: the Partition Layer's request shows 120, but the current length reported to the SM is 100, so the extent is sealed at 100 and the replicas sync with the SM]

• For data streams (Row and Blob Data Streams), the Partition Layer only reads from offsets returned from successful appends
  • Such offsets are committed on all replicas
  • So each offset is valid on any replica
[Figure: Network partition in which the PS can talk to EN3 but the SM cannot; it is still safe for the PS to read from EN3]

• Logs (the Commit and Metadata log streams) are read when a partition is loaded
  • Check the commit length first
  • Only read from:
    • An unsealed replica, if all replicas have the same commit length
    • A sealed replica
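The append and seal flows above can be approximated by a toy model. This sketch assumes the rules as we read them from the slides: an append's offset is returned only after all three replicas hold the block, and on seal the SM takes the smallest current length among the replicas it can reach. `Replica`, `Extent`, and the `fail_after` test hook are hypothetical; durability, retries, and concurrent appends are not modeled.

```python
from dataclasses import dataclass, field


@dataclass
class Replica:
    """One extent replica on an Extent Node (toy model)."""
    name: str
    data: bytearray = field(default_factory=bytearray)
    reachable: bool = True  # whether the Stream Master (SM) can reach this EN

    @property
    def length(self) -> int:
        return len(self.data)


class Extent:
    """Hypothetical model: replicas[0] is the primary, the rest are secondaries."""

    def __init__(self, replicas):
        self.replicas = replicas
        self.sealed_length = None

    def append(self, block: bytes, fail_after=None) -> int:
        """The primary applies the block, then forwards it to each secondary.
        The offset is returned only once every replica holds the block, so it
        is valid on any replica. `fail_after=k` simulates a failure after k
        replicas applied the block."""
        if self.sealed_length is not None:
            raise RuntimeError("extent is sealed")
        offset = self.replicas[0].length
        for i, replica in enumerate(self.replicas):
            if fail_after is not None and i == fail_after:
                raise ConnectionError("append not committed on all replicas")
            replica.data += block
        return offset

    def seal(self) -> int:
        """The SM asks reachable replicas for their current length, seals at the
        smallest, and trims the reachable replicas to that length."""
        reachable = [r for r in self.replicas if r.reachable]
        self.sealed_length = min(r.length for r in reachable)
        for r in reachable:
            del r.data[self.sealed_length:]
        return self.sealed_length

    def sync(self, replica: Replica) -> None:
        """A replica the SM could not reach later syncs with the SM: it is set to
        the sealed length, copying the committed prefix from a reachable replica."""
        source = next(r for r in self.replicas if r.reachable)
        replica.data = bytearray(source.data[: self.sealed_length])
        replica.reachable = True


ext = Extent([Replica("EN1"), Replica("EN2"), Replica("EN3")])
ext.append(b"a" * 100)                   # committed on all replicas at offset 0
try:
    ext.append(b"b" * 20, fail_after=2)  # EN3 never receives this block
except ConnectionError:
    pass
print([r.length for r in ext.replicas])  # [120, 120, 100]
print(ext.seal())                        # 100
```

Sealing at 100 drops only the 20 bytes whose append never returned an offset, so no offset the Partition Layer was given becomes invalid.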
[Figure: Partition load during a network partition (the PS can talk to EN3, the SM cannot): the Partition Server checks the commit length, the extent is sealed, and EN1 and EN2 are used for loading]

Design Choices and Lessons Learned
• Multi-Data Architecture
  • Uses spare resources to serve a mixed workload at incremental cost; each data abstraction stresses a different resource:
    • Blob -> storage capacity
    • Table -> IOPS
    • Queue -> memory
    • Drives -> storage capacity and IOPS
• Multiple data abstractions from a single stack
  • Improvements at lower layers help all data abstractions
  • Simplifies hardware management
  • Tradeoff: a single stack is not optimized for any specific workload pattern
• Append-only system
  • Greatly simplifies the replication protocol and failure handling
  • Replicas are consistent and identical up to the extent's commit length
  • Keeps snapshots at no extra cost
  • Benefits diagnosis and repair
  • Erasure coding
  • Tradeoff: GC overhead
• Scaling compute separately from storage
  • Allows each to be scaled separately
  • Important for a multitenant environment
  • Moving toward full bisection bandwidth between compute and storage
  • Tradeoff: latency/bandwidth to/from storage

Windows Azure Storage Summary
http://blogs.msdn.com/windowsazurestorage/
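The append-only design choice trades free snapshots for garbage-collection work. A minimal sketch of that tradeoff, assuming a toy key/value store over a single append-only list: a snapshot is just a recorded log length, and GC rewrites the log keeping only versions that the current state or some snapshot can still see. `AppendOnlyStore` and its methods are hypothetical, not the WAS implementation.

```python
from bisect import bisect_left


class AppendOnlyStore:
    """Hypothetical key/value store over an append-only log (toy model)."""

    def __init__(self):
        self.records = []    # (key, value) pairs, only ever appended to
        self.snapshots = {}  # snapshot name -> log length when it was taken

    def put(self, key, value):
        self.records.append((key, value))  # an update never overwrites in place

    def snapshot(self, name):
        self.snapshots[name] = len(self.records)  # records one integer, copies no data

    def get(self, key, snapshot=None):
        end = len(self.records) if snapshot is None else self.snapshots[snapshot]
        for k, v in reversed(self.records[:end]):
            if k == key:
                return v
        raise KeyError(key)

    def garbage_collect(self) -> int:
        """Keep only versions visible to the current state or some snapshot.
        Returns the number of records reclaimed."""
        ends = set(self.snapshots.values()) | {len(self.records)}
        keep = set()
        for end in ends:
            latest = {}
            for i in range(end):
                latest[self.records[i][0]] = i  # last index of each key before `end`
            keep.update(latest.values())
        kept = sorted(keep)
        reclaimed = len(self.records) - len(kept)
        # A snapshot's new length is the number of kept records before its old end.
        self.snapshots = {n: bisect_left(kept, e) for n, e in self.snapshots.items()}
        self.records = [self.records[i] for i in kept]
        return reclaimed


store = AppendOnlyStore()
store.put("blob1", "v1")
store.put("blob2", "x")
store.snapshot("s1")
store.put("blob1", "v2")
store.put("blob1", "v3")
reclaimed = store.garbage_collect()  # drops "v2", which no reader can see
print(reclaimed)                     # 1
```

The snapshot costs one integer, while the stale version of `blob1` occupies space until `garbage_collect` runs: the tradeoff the slide lists as GC overhead.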