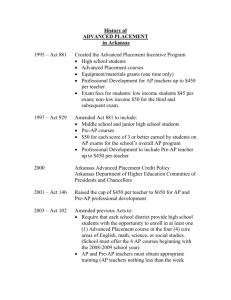

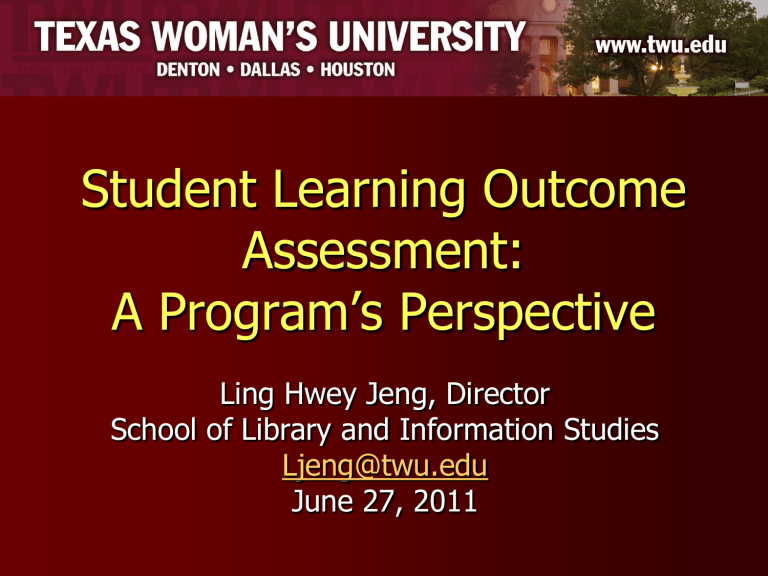

Student Learning Outcome Assessment: A Program’s Perspective

Ling Hwey Jeng, Director

School of Library and Information Studies

Ljeng@twu.edu

June 27, 2011

Operational Assumptions

Every program is unique, but it is still possible to have a common process for quality assessment.

Data used to inform decisions must be open, consistent, and continuous.

Evidence of student academic attainment must be explicit, clear and understandable.

No assessment can be good without direct measures of student learning outcomes.

Accreditation

Paradigm shift

– from “what faculty teach” to “what students learn”

Focus shift

– from structure “input, process, output” to outcomes

The Anatomy of Accreditation

Input (e.g., enrollment, faculty recruitment, facility)

Process (e.g., curriculum, services, advising)

Output (e.g., grades, graduation, placement)

Outcome

– Goal-oriented planning, support, and teaching, as shown in evidence of learning

Example: Structure and Outcomes

| | Input | Process | Outputs | Outcomes |
|---|---|---|---|---|
| Students | Backgrounds, enrollment | Student services and programs | Grades, graduation, placement | Skills gained, attitude changes |
| Faculty | Backgrounds, recruitment | Teaching assignment, class size | Work units, publications, conference presentations | Citations, impacts |
| Program | History, budget, resources allocated | Policies, procedures, governance | Participation rates, resource utilization | Program objectives achieved |

Example: Output v. Outcome

| Output | Outcome |
|---|---|
| Course grades | Skills learned |
| Faculty publications | Citation impacts |
| Enrollment growth | Objective achieved |

Two Levels of Assessment

Program level assessment

Course level assessment

Implicit in COA Standards is the expectation that assessment is:

Explicit and in writing

Integrated in the program’s planning process

Done at both program level and course level

Accessible to those affected by the assessment

Implemented and used as feedback by the program

The Guiding Principles of Assessment

Backward planning

– Start with where we want to end

Triangulation

– Multiple measures, both direct and indirect

Gap analysis

– Inventory of what has been done

Steps for Assessment

Identify standards, sources of evidence and constituent inputs

Define student learning objectives

Develop outcome measures

– Direct and indirect measures

Collect and analyze data

– Methods, frequency, patterns

Review and use data as feedback

– Impacts on decisions made

Faculty Expectations as the Basis

The really important things faculty think students should know, believe, or be able to do when they receive their degrees

Approaches to establishing faculty expectations

Top down:

– use external standards to define faculty expectations

Bottom up:

– identify recurring faculty expectations among courses, and use the list to develop overarching program level expectations

Example: A Bottom Up Approach

Take all course syllabi

Examine what expectations (i.e., course objectives) are included in individual courses

Make a list of recurring ones as the basis for program level expectations

Ask what else needs to be at the program level

State the expectations in program objectives
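
The bottom-up steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the program's actual procedure: the course numbers, the objective wording, and the `min_courses` threshold are all hypothetical, and the sketch assumes course objectives have already been normalized by hand to a shared wording so that identical expectations match exactly.

```python
from collections import Counter


def recurring_objectives(syllabi, min_courses=2):
    """Return objectives that appear in at least `min_courses` syllabi.

    `syllabi` maps a course identifier to its list of course objectives.
    `min_courses` is a hypothetical threshold for what counts as recurring.
    """
    counts = Counter()
    for objectives in syllabi.values():
        # Use a set so an objective repeated within one syllabus counts once.
        counts.update(set(objectives))
    return sorted(obj for obj, n in counts.items() if n >= min_courses)


# Hypothetical course numbers and objectives, for illustration only.
syllabi = {
    "LS 5001": ["evaluate information sources", "apply professional ethics"],
    "LS 5002": ["organize information resources", "apply professional ethics"],
    "LS 5003": ["evaluate information sources", "apply professional ethics"],
}

candidates = recurring_objectives(syllabi)
print(candidates)
```

The resulting list is only a starting point for discussion. Faculty still need to ask what else belongs at the program level and then state the agreed expectations as program objectives.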

Activities for Direct Measures

(e.g.)

written exams

oral exams

performance assessments

standardized testing

licensure exams

oral presentations

projects

demonstrations

case studies

simulation

portfolios

juried activities with outside panel

Activities for Indirect Measures

questionnaire surveys

interviews

focus groups

employer satisfaction studies

advisory board

job/placement data

(examples only)

Demonstration of Assessment

Program objectives aligned with mission and goals

Multiple inputs in developing program objectives (both constituents and disciplinary standards)

Program objectives stated in terms of student learning outcomes

Demonstration of Assessment (Cont.)

Student learning outcome assessment addressed at both course level and program level

Triangulation with both direct and indirect measures

A formal, systematic process to integrate results of assessment into continuous planning

Words for Thought

Assessment of input and process (i.e., structure) only determines capacity. It does not determine what students learn.

Don’t confuse “better teaching” with “better learning.” One is the means and the other is the outcome.

Everything we do in the classroom is about something outside the classroom.

It’s what the learners do that determines what and how much is learned.

If I taught something and no one learned it, does it count?

A Program Director’s Perspective

Map the objectives to professional standards

Make visible the invisible expectations

Make sure what we measure is what we value

Begin with what could be agreed upon

Include both program measures and course embedded measures

Make use of assessment in grading

Harness the accreditation process to make it happen
