Programming for Linguists

An Introduction to Python

22/12/2011

Feedback

Ex 1)

Read in the texts of the State of the

Union addresses, using the state_union

corpus reader. Count occurrences of

“men”, “women”, and “people” in each

document. What has happened to the

usage of these words over time?

import nltk
from nltk.corpus import state_union

# Count every word per document: the file id is the condition
cfd = nltk.ConditionalFreqDist(
    (fileid, word)
    for fileid in state_union.fileids()
    for word in state_union.words(fileids=fileid))

fileids = state_union.fileids()
search_words = ["men", "women", "people"]

# One row per address, one column per search word
cfd.tabulate(conditions=fileids, samples=search_words)
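To see what the conditional frequency distribution is doing here, the same per-document counting can be sketched with the standard library alone. The two short "documents" and their file names below are invented for illustration and are not State of the Union texts.

```python
from collections import Counter, defaultdict

# Hypothetical mini-corpus: file id -> list of tokens (made-up data)
docs = {
    "1945-Example.txt": ["the", "men", "and", "women", "of", "the", "people"],
    "2005-Example.txt": ["the", "people", "and", "the", "people", "again"],
}
search_words = ["men", "women", "people"]

# One Counter per condition (here: per file id)
counts = defaultdict(Counter)
for fileid, tokens in docs.items():
    for word in tokens:
        counts[fileid][word] += 1

# Print a small table: one row per document, one count per search word
for fileid in sorted(docs):
    row = [counts[fileid][w] for w in search_words]
    print(fileid, row)
```

A word that never occurs in a document simply gets a count of 0, which is also how the table for the real corpus should be read.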

Ex 2)

According to Strunk and White's Elements

of Style, the word “however”, used at the

start of a sentence, means "in whatever

way" or "to whatever extent", and not

"nevertheless". They give this example of

correct usage: However you advise him,

he will probably do as he thinks best.

Use the concordance tool to study actual

usage of this word in 5 NLTK texts.

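import nltk
from nltk.book import *

texts = [text1, text2, text3, text4, text5]
for text in texts:
    # concordance() prints its results itself and returns None,
    # so it should not be wrapped in a print statement
    text.concordance("however")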
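For readers curious about what a concordance view contains, here is a minimal sketch of a keyword-in-context function in plain Python. It is not NLTK's implementation; the function name, the `width` parameter and the output layout are assumptions made for this example. The test sentence is the Strunk and White example quoted in the exercise.

```python
def concordance(tokens, target, width=3):
    """Return (left context, match, right context) for each hit of target."""
    hits = []
    for i, token in enumerate(tokens):
        if token.lower() == target:
            left = tokens[max(0, i - width):i]
            right = tokens[i + 1:i + 1 + width]
            hits.append((" ".join(left), token, " ".join(right)))
    return hits

tokens = "However you advise him , he will probably do as he thinks best .".split()
for left, word, right in concordance(tokens, "however"):
    print("%20s [%s] %s" % (left, word, right))
```

An empty left context, as in this sentence, is a quick sign that the word opens the text; in a longer text one would check whether the left context ends in sentence-final punctuation.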

Ex 3)

Create a corpus of your own with a minimum of 10 files containing text fragments. You can use your own texts, texts from the internet,…

Write a program that investigates the

usage of modal verbs in this corpus using

the frequency distribution tool and plot the

10 most frequent words.

import re
import nltk
from nltk.corpus import PlaintextCorpusReader

corpus_root = "/Users/claudia/my_corpus"
#corpus_root = r"C:\Users\..."
my_corpus = PlaintextCorpusReader(corpus_root, '.*')

# Tabulate the modal verbs per file
cfd = nltk.ConditionalFreqDist(
    (fileid, word)
    for fileid in my_corpus.fileids()
    for word in my_corpus.words(fileid))
fileids = my_corpus.fileids()
modals = ['can', 'could', 'may', 'might', 'must', 'will']
cfd.tabulate(conditions=fileids, samples=modals)

# Filter out tokens that start with a non-alphanumeric character
# (punctuation) before counting; removing items from a list while
# looping over it skips elements
words = [w for w in my_corpus.words() if not re.match(r'[^a-zA-Z0-9]+', w)]
fd = nltk.FreqDist(words)

# Plot the 10 most frequent words
fd.plot(10)
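The "filter, then count, then take the top N" step can also be tried without a corpus on disk. The sketch below uses `collections.Counter` from the standard library on a made-up token list; unlike the solution above it also lowercases the tokens, which is an extra assumption of this example.

```python
import re
from collections import Counter

# Made-up token list standing in for my_corpus.words()
words = ["You", "can", "go", ",", "but", "you", "must", "not", "stay", ".",
         "You", "can", "!"]

# Keep tokens that start with a letter or digit, lowercased
clean = [w.lower() for w in words if re.match(r"[a-zA-Z0-9]", w)]

# most_common(n) returns the n most frequent (word, count) pairs
top = Counter(clean).most_common(2)
print(top)
```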

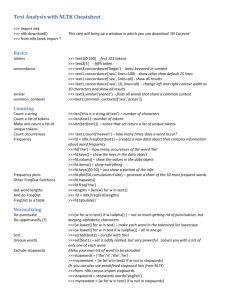

Ex 1)

Choose a website. Read it into Python using the urlopen function, remove all HTML mark-up and tokenize it. Make a frequency dictionary of all words ending in ‘ing’ and sort it by value (in decreasing order).

Ex 2)

Write the raw text of the text in the

previous exercise to an output file.

import re
import nltk
from urllib import urlopen  # Python 2 standard library
from operator import itemgetter

url = "website"
htmltext = urlopen(url).read()
rawtext = nltk.clean_html(htmltext)  # as provided by the NLTK version used in this course
rawtext2 = rawtext.lower()
tokens = nltk.wordpunct_tokenize(rawtext2)
my_text = nltk.Text(tokens)

# Keep the tokens that end in "ing"
wordlist_ing = [w for w in tokens if re.search(r'ing$', w)]

# Count how often each of them occurs
freq_dict = {}
for word in wordlist_ing:
    if word not in freq_dict:
        freq_dict[word] = 1
    else:
        freq_dict[word] = freq_dict[word] + 1

# Sort the (word, count) pairs by count, highest first
sorted_wordlist_ing = sorted(freq_dict.iteritems(), key=itemgetter(1), reverse=True)
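The counting loop and the sort can be checked on a handful of tokens without downloading a page. This is a sketch using `collections.Counter` as a shortcut for the frequency dictionary; the token list is invented.

```python
from collections import Counter

# Made-up tokens standing in for the tokenized web page
tokens = ["singing", "and", "dancing", "while", "singing",
          "king", "ring", "singing"]

# Count only the tokens that end in "ing"
ing_counts = Counter(w for w in tokens if w.endswith("ing"))

# Sort the (word, count) pairs by count, highest first
sorted_ing = sorted(ing_counts.items(), key=lambda item: item[1], reverse=True)
print(sorted_ing)
```

Note that "king" and "ring" are counted as well: matching on the ending alone cannot tell verb forms from other words that happen to end in "ing".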

Ex 2)

output_file = open("dir/output.txt", "w")
output_file.write(str(rawtext2) + "\n")
output_file.close()
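An alternative way to write the file is the `with` statement, which closes the file automatically even if an error occurs. In this sketch the text and the temporary output location are placeholders chosen so that the example runs anywhere.

```python
import os
import tempfile

rawtext2 = "some lower-cased page text"  # placeholder for the text from Ex 1

# Write to a temporary directory so the sketch does not depend on "dir/"
out_path = os.path.join(tempfile.mkdtemp(), "output.txt")
with open(out_path, "w") as output_file:
    output_file.write(rawtext2 + "\n")

# Read the file back to confirm what was written
with open(out_path) as check:
    written = check.read()
print(repr(written))
```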

Ex 3)

Write a script that performs the same

classification task as we saw today using

word bigrams as features instead of single

words.
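As a starting point, the feature extraction step might look like the sketch below. The classification task from class is not reproduced in these slides, so this only shows how word bigrams could be turned into a feature dictionary of the kind NLTK classifiers accept; the function name and the "bigram(...)" key format are assumptions.

```python
def bigram_features(tokens):
    """Map each pair of adjacent words in tokens to True."""
    features = {}
    # zip pairs every token with its right-hand neighbour
    for first, second in zip(tokens, tokens[1:]):
        features["bigram(%s %s)" % (first, second)] = True
    return features

feats = bigram_features(["this", "film", "was", "great"])
print(sorted(feats))
```

A text of n tokens yields at most n - 1 bigram features, so a one-word text produces an empty dictionary.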

Some Mentioned Issues

Loading your own corpus in NLTK with no

subcategories:

import nltk
from nltk.corpus import PlaintextCorpusReader

# Use the line that matches your system
loc = "/Users/claudia/my_corpus"  # Mac
# loc = r"C:\Users\claudia\my_corpus"  # Windows 7 (raw string keeps the backslashes literal)
my_corpus = PlaintextCorpusReader(loc, ".*")

Loading your own corpus in NLTK with

subcategories:

import nltk
from nltk.corpus import CategorizedPlaintextCorpusReader

# Use the line that matches your system
loc = "/Users/claudia/my_corpus"  # Mac
# loc = r"C:\Users\claudia\my_corpus"  # Windows 7 (raw string keeps the backslashes literal)
my_corpus = CategorizedPlaintextCorpusReader(
    loc, r'(?!\.svn).*\.txt', cat_pattern=r'(cat1|cat2)/.*')
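The cat_pattern is matched against each file id, and the first group of the match is used as the category label. The effect can be tried out with the `re` module alone; the file ids below are hypothetical and assume one subfolder per category under the corpus root.

```python
import re

cat_pattern = r'(cat1|cat2)/.*'

# Hypothetical file ids, relative to the corpus root
fileids = ["cat1/a.txt", "cat2/b.txt", "cat1/c.txt", "other/d.txt"]

categories = {}
for fileid in fileids:
    match = re.match(cat_pattern, fileid)
    if match:
        # The first group of the pattern is the category label
        categories[fileid] = match.group(1)
print(categories)
```

A file whose path does not match the pattern, such as "other/d.txt" here, ends up without a category.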

Dispersion Plot

A dispersion plot shows the location of a word in the text: for each occurrence, how many words from the beginning it appears.
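The numbers behind such a plot are simply word offsets. A minimal sketch, with a made-up token list and a hypothetical helper name:

```python
def word_offsets(tokens, target):
    """Return the 0-based token positions at which target occurs."""
    return [i for i, token in enumerate(tokens) if token == target]

tokens = "the people and the rights of the people".split()
offsets = word_offsets(tokens, "people")
print(offsets)
```

In NLTK, the dispersion_plot method of a Text object draws these positions for a list of words, one row per word.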

Exercises

Write a program that reads a file, breaks

each line into words, strips whitespace

and punctuation from the text, and

converts the words to lowercase.

You can get a list of all punctuation marks

by:

import string

print string.punctuation

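import nltk, string

def strip(filepath):
    f = open(filepath, 'r')
    text = f.read()
    f.close()
    tokens = nltk.wordpunct_tokenize(text)
    # Build a new list: removing items from a list while looping over it
    # skips elements, and reassigning the loop variable does not change the list
    result = []
    for token in tokens:
        # A token such as "..." consists of punctuation characters only
        if not all(ch in string.punctuation for ch in token):
            result.append(token.lower())
    return result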
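The exercise can also be solved line by line with string methods only, which follows its wording more literally (read a file, break each line into words, strip punctuation, lowercase). This is a sketch; the function name and the sample file content are made up.

```python
import os
import string
import tempfile

def clean_words(filepath):
    """Return the lowercased words of a file without surrounding punctuation."""
    words = []
    with open(filepath) as f:
        for line in f:
            for word in line.split():  # split() also discards whitespace
                word = word.strip(string.punctuation).lower()
                if word:  # skip tokens that were only punctuation
                    words.append(word)
    return words

# Try it on a small temporary file (made-up content)
path = os.path.join(tempfile.mkdtemp(), "sample.txt")
with open(path, "w") as f:
    f.write("Hello, World!\nIt's -- fine.\n")
result = clean_words(path)
print(result)
```

Because strip() only removes characters at the edges of a word, the apostrophe inside "It's" is kept, whereas wordpunct_tokenize would split that word into three tokens.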

If you want to analyse a text, but filter out

a stop list first (e.g. containing “the”,

“and”,…), you need to make 2 dictionaries:

1 with all words from your text and 1 with

all words from the stop list. Then you need

to subtract the 2nd from the 1st. Write a

function subtract(d1, d2) which takes

dictionaries d1 and d2 and returns a new

dictionary that contains all the keys from

d1 that are not in d2. You can set the

values to None.

def subtract(d1, d2):
    d3 = {}
    for key in d1.keys():
        if key not in d2:
            d3[key] = None
    return d3

Let’s try it out:

import nltk
from nltk.book import *
from nltk.corpus import stopwords

# Dictionary with all words from the text
d1 = {}
for word in text7:
    d1[word] = None

# Dictionary with all words from the stop list
wordlist = stopwords.words("english")
d2 = {}
for word in wordlist:
    d2[word] = None

rest_dict = subtract(d1, d2)
wordlist_min_stopwords = rest_dict.keys()
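The behaviour of subtract is easy to verify on a toy example that does not need NLTK. The compact definition below is an equivalent rewrite of the function for this sketch, and both dictionaries are invented.

```python
def subtract(d1, d2):
    """Return a dict with the keys of d1 that are not in d2 (values None)."""
    return dict((key, None) for key in d1 if key not in d2)

text_words = {"the": None, "cat": None, "and": None, "dog": None}
stop_words = {"the": None, "and": None, "of": None}

rest = subtract(text_words, stop_words)
print(sorted(rest))
```

Keys that occur only in the second dictionary ("of" here) have no effect on the result.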

Questions?

Evaluation Assignment

Deadline = 23/01/2012

Conversation in the week of 23/01/12

If you need any explanation about the

content of the assignment, feel free to email me

Further Reading

Since this was only a short introduction to

programming in Python, if you want to

expand your programming skills further:

Think Python: How to Think Like a Computer Scientist (see chapters 15-18 on object-oriented programming)

NLTK book

Official Python documentation:

http://www.python.org/doc/

There is a newer version of Python

available, but it is not (yet) compatible with

NLTK

Our research group:

CLiPS: Computational Linguistics and

Psycholinguistics Research Center

http://www.clips.ua.ac.be/

Our projects:

http://www.clips.ua.ac.be/projects

Happy holidays and good luck with your exams