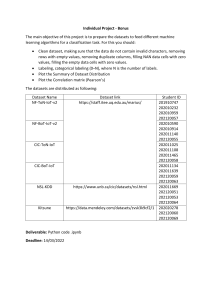

Project Implementation Plan for Concept Drift Detection in Network Intrusion Datasets Objective: This project is dedicated to identifying concept drift within network intrusion datasets from the years 2017 and 2018 and subsequently adapting machine learning models to these changes. The project will utilize Google Colab for all computational tasks, offering a flexible and powerful cloud-based environment for data analysis, model training, and evaluation. Phase 1: Data Preparation and Preprocessing Data Collection: Download the 2017 and 2018 CIC IDS datasets from specified Google Drive links. Combination and Consolidation: Aggregate the files from each year into a singular dataset to streamline the analysis process. Label Standardization: Convert the datasets' multi-class labels to binary (Benign/Malicious) to simplify the classification challenge. Data Cleaning: Identify and eliminate any records with null or infinite values to ensure the integrity of the datasets. Initial Analysis: Evaluate the distribution of malicious versus benign instances both pre- and post-cleanup to gauge data balance and cleaning impact. Phase 2: Model Training and Evaluation Classifier Retrieval: Access machine learning classifier scripts designated for each dataset year within the "Ratio Bias" folder of the provided Google Drive link. Application of Classifiers: Deploy the classifiers on the preprocessed datasets for 2017 and 2018, tailoring the models to distinguish effectively between benign and malicious network activities. Evaluation Metrics: Assess the performance of each classifier, emphasizing the accuracy, precision, recall, and F1 scores to identify the most effective models. Phase 3: Concept Drift Adaptation Detection of Drift: Train models on the 2017 dataset and evaluate them on the 2018 dataset to detect any concept drift, signified by variations in model performance metrics. Comparative Analysis: Analyze F1 scores under different conditions—training and testing on the same dataset versus cross-year evaluation—to understand the extent and impact of concept drift. Model Adaptation Strategies: Based on observed drift, modify the machine learning models through approaches such as retraining with recent data or implementing continual learning techniques to preserve or enhance model accuracy over time.