ACM 116 Problem Set 3 Solutions

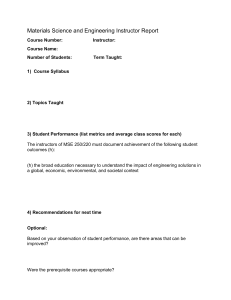

Hannes Helgason

Problem 1

(a) Since $A$ and $B$ are i.i.d. zero-mean random variables, the mean of $X(t)$ is

$$E(X(t)) = \cos(\omega t)\,E A + \sin(\omega t)\,E B = 0,$$

independent of $t$. Because $E(AB) = E(A)E(B) = 0$ and $E(A^2) = E(B^2)$, the autocorrelation is

$$\begin{aligned}
E(X(t)X(t+\tau)) &= E\big[A^2\cos(\omega t)\cos(\omega(t+\tau)) + AB\cos(\omega t)\sin(\omega(t+\tau)) \\
&\qquad + B^2\sin(\omega t)\sin(\omega(t+\tau)) + BA\sin(\omega t)\cos(\omega(t+\tau))\big] \\
&= E(A^2)\big[\cos(\omega t)\cos(\omega(t+\tau)) + \sin(\omega t)\sin(\omega(t+\tau))\big] \\
&= E(A^2)\cos(\omega\tau),
\end{aligned}$$

which depends only on the time difference $\tau$ (we assume that the variance of $A$ and $B$ is finite, and therefore so is the variance of the process). Thus, the process $X(t)$ is weakly stationary.

(b) If a process $X(t)$ is strongly stationary, all of its moments have to be independent of $t$. The third moment of $X(t)$,

$$E(X^3(t)) = (E A^3)\big(\cos^3(\omega t) + \sin^3(\omega t)\big),$$

is not constant with respect to $t$ unless $E A^3 = 0$. Clearly we can find many distributions for $A$ whose third moment is nonzero while $E(A) = 0$. For example, take $A$ to be a discrete random variable with $P(A = -1) = 1/3$ and $P(A = 1/2) = 2/3$. Then

$$E(A) = -1 \times \tfrac{1}{3} + \tfrac{1}{2} \times \tfrac{2}{3} = 0 \quad\text{and}\quad E(A^3) = -1 \times \tfrac{1}{3} + \tfrac{1}{8} \times \tfrac{2}{3} = -\tfrac{1}{4}.$$

We could just as well consider the fourth-order moment:

$$E(X^4(t)) = (E A^4)\big(\cos^4(\omega t) + \sin^4(\omega t)\big) + 6\,(E A^2)^2 \cos^2(\omega t)\sin^2(\omega t).$$

Taking $A \sim \mathrm{Unif}[-1, 1]$ we get

$$E(X^4(t)) = \tfrac{1}{5}\big(\cos^4(\omega t) + \sin^4(\omega t)\big) + \tfrac{2}{3}\cos^2(\omega t)\sin^2(\omega t),$$

so the fourth moment is in general not independent of $t$: it equals $1/5$ at $\omega t = 0$ but $4/15$ at $\omega t = \pi/4$.

The above is one way to obtain a contradiction and show that the process is in general not strongly stationary. Another way is to look at the process at the times $t = 0$ and $t = \pi/\omega$, where $X(0) = A$ and $X(\pi/\omega) = -A$. If the distribution of $A$ is not symmetric around zero, these two variables have different distributions, so the process $X(t)$ is not strictly stationary.

Problem 2

(a) Since $X(t)$ and $Y(t)$ are independent, have zero mean, and have identical covariance functions, we have

$$E(Z^2(t)) = E\big[a^2 X^2(t) + 2ab\,X(t)Y(t) + b^2 Y^2(t)\big] = (a^2 + b^2)\,E(X^2(t)) = (a^2 + b^2)\,R_X(0).$$
Thus, the second-order moment of the process is a finite constant, independent of time $t$. The cross-correlation between $X(t)$ and $Y(t)$ is

$$E(X(t+\tau)Y(t)) = E(X(t+\tau))\,E(Y(t)) = 0,$$

so we get

$$\begin{aligned}
E(Z(t)Z(t+\tau)) &= a^2 E(X(t)X(t+\tau)) + ab\,E(X(t)Y(t+\tau)) + ab\,E(Y(t)X(t+\tau)) + b^2 E(Y(t)Y(t+\tau)) \\
&= a^2 R_X(\tau) + b^2 R_Y(\tau),
\end{aligned}$$

which shows that the autocorrelation of $Z(t)$ depends only on $\tau$. Since the mean of $Z(t)$, $E(Z(t)) = 0$, is constant, we conclude that $Z(t)$ is weakly stationary.

(b) If $X(t)$ and $Y(t)$ are also jointly Gaussian, then $Z(t)$, a linear combination of these two processes, is Gaussian with mean $E(Z(t)) = 0$, variance

$$E(Z^2(t)) = (a^2 + b^2)R_X(0) = (a^2 + b^2)\sigma_X^2,$$

where $\sigma_X^2$ is the variance of $X(t)$, and autocorrelation function

$$R_Z(\tau) = E(Z(t)Z(t+\tau)) = (a^2 + b^2)R_X(\tau).$$

(Note that to get a full description of a Gaussian process, in addition to the mean, we need to know its autocorrelation, not just the variance.) For given times $t_1, t_2, \ldots, t_n$, the density of $Z := [Z(t_1), Z(t_2), \ldots, Z(t_n)]^T$ is $N(0, \Sigma)$, where the entry $(i, j)$ of the covariance matrix is

$$\Sigma_{i,j} = E[Z(t_i)Z(t_j)] = (a^2 + b^2)R_X(t_j - t_i).$$

Problem 3

(a) Let us first assume that the processes $X_t$ and $Z_t$ both have mean zero. We require the estimate $\hat X_t$ of $X_t$ to be linear:

$$\hat X_t = \sum_{k=t-p}^{t+p} h_{t-k} Y_k = \sum_{k=-p}^{p} h_k Y_{t-k}.$$

To determine the optimum filter, we need to find the $h_k$ that minimize the mean square error. Equivalently, the optimal filter must satisfy the orthogonality condition

$$0 = E\big((X_t - \hat X_t)Y_j\big) \quad \forall j \in I,$$

that is, $E(X_t Y_j) = E(\hat X_t Y_j)$ for all $j \in I$. Plugging in for $\hat X_t$ then gives

$$E(X_t Y_j) = E\Big(\sum_{k=-p}^{p} h_k Y_{t-k} Y_j\Big) = \sum_{k=-p}^{p} h_k E(Y_{t-k} Y_j) \quad \forall j \in I.$$

If we assume that $X_t$ and $Y_t$ are jointly wide-sense stationary (and each wide-sense stationary), then we can write the equation above in the form

$$\gamma_{X,Y}(t - j) = \sum_{k=-p}^{p} h_k \gamma_Y(t - k - j) \quad \forall j \in I,$$

or, by the substitution $m = t - j$,

$$\gamma_{X,Y}(m) = \sum_{k=-p}^{p} h_k \gamma_Y(m - k), \quad m = -p, \ldots, p.$$

(b) The matrix equation for the $2p + 1$ coefficients is

$$\begin{pmatrix}
\gamma_Y(0) & \gamma_Y(1) & \cdots & \gamma_Y(2p) \\
\gamma_Y(1) & \gamma_Y(0) & \cdots & \gamma_Y(2p-1) \\
\vdots & \vdots & \ddots & \vdots \\
\gamma_Y(2p) & \cdots & \cdots & \gamma_Y(0)
\end{pmatrix}
\begin{pmatrix} h_{-p} \\ h_{-p+1} \\ \vdots \\ h_p \end{pmatrix}
=
\begin{pmatrix} \gamma_{X,Y}(-p) \\ \gamma_{X,Y}(-p+1) \\ \vdots \\ \gamma_{X,Y}(p) \end{pmatrix}.$$

(c) Since $Z_t$ has mean zero and is independent of $X_t$, we have

$$E(X_t Y_j) = E(X_t X_j) + E(X_t)E(Z_j) = E(X_t X_j),$$

and therefore $\gamma_{Y,X}(\tau) = E(Y_t X_{t+\tau}) = \gamma_X(\tau) = 4 \cdot 2^{-|\tau|}$; since $\gamma_X$ is even, $\gamma_{X,Y}(\tau) = \gamma_X(\tau)$ as well. Using in addition the fact that $Z_t$ is white noise with variance $\sigma^2 = 1$, we get

$$\gamma_Y(\tau) = E(Y_t Y_{t+\tau}) = E(X_t X_{t+\tau}) + E(Z_t Z_{t+\tau}) = \gamma_X(\tau) + \delta(\tau).$$

Thus, the matrix equation for the optimal filter is

$$\begin{pmatrix}
\gamma_Y(0) & \gamma_Y(1) & \gamma_Y(2) \\
\gamma_Y(1) & \gamma_Y(0) & \gamma_Y(1) \\
\gamma_Y(2) & \gamma_Y(1) & \gamma_Y(0)
\end{pmatrix}
\begin{pmatrix} h_{-1} \\ h_0 \\ h_1 \end{pmatrix}
=
\begin{pmatrix} \gamma_{X,Y}(-1) \\ \gamma_{X,Y}(0) \\ \gamma_{X,Y}(1) \end{pmatrix}
\quad\text{or}\quad
\begin{pmatrix} 5 & 2 & 1 \\ 2 & 5 & 2 \\ 1 & 2 & 5 \end{pmatrix}
\begin{pmatrix} h_{-1} \\ h_0 \\ h_1 \end{pmatrix}
=
\begin{pmatrix} 2 \\ 4 \\ 2 \end{pmatrix},$$

which gives

$$\begin{pmatrix} h_{-1} \\ h_0 \\ h_1 \end{pmatrix} = \begin{pmatrix} 1/11 \\ 8/11 \\ 1/11 \end{pmatrix}.$$

(d) Since the error $e_t = X_t - \hat X_t$ is orthogonal to every $Y_j$, it is also orthogonal to every linear combination of the $Y_j$'s. Therefore $E(e_t \hat X_t) = 0$, and the mean squared error is

$$\begin{aligned}
E(e_t^2) &= E\big(e_t (X_t - \hat X_t)\big) = E(e_t X_t) = E(X_t^2) - E(\hat X_t X_t) \\
&= \gamma_X(0) - \sum_{k=-p}^{p} h_k E(Y_{t-k} X_t) = \gamma_X(0) - \sum_{k=-p}^{p} h_k \gamma_{Y,X}(k).
\end{aligned}$$

For the filter found in (c), with $p = 1$, this gives

$$E(e_t^2) = 4 - \sum_{k=-p}^{p} h_k \, 4 \cdot 2^{-|k|} = 4 - 4\big(\tfrac{1}{2}h_{-1} + h_0 + \tfrac{1}{2}h_1\big) = \frac{8}{11} \approx 0.727.$$

(e) The Matlab code for this problem can be found at the end of this solution set. From the plot of the autocorrelation we see that it decreases very rapidly; at $\tau = 10$ it is about 0.004. Thus, if we want to estimate the process $X_t$ at time $t = t_0$, we do not expect to gain much by using measurements taken more than 10 time steps from $t_0$, or even fewer. The figure showing the exact MSE and the numerical estimate of the MSE for different values of $p$ confirms this: increasing $p$ above, say, 2 does not improve the MSE considerably.
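The arithmetic in parts (c) and (d) can be reproduced exactly with rational numbers. The following is a minimal sketch in Python (not the Matlab of the appendix), assuming $\gamma_X(\tau) = 4 \cdot 2^{-|\tau|}$ and unit-variance white noise as in part (c); the helper names `solve` and `wiener_filter` are illustrative and do not come from the original solution.

```python
from fractions import Fraction


def gamma_x(tau):
    """Autocovariance of X from part (c): 4 * 2^(-|tau|)."""
    return Fraction(4, 2 ** abs(tau))


def gamma_y(tau):
    """Autocovariance of Y = X + Z, with Z unit-variance white noise."""
    return gamma_x(tau) + (1 if tau == 0 else 0)


def solve(a, b):
    """Solve a x = b exactly by Gauss-Jordan elimination (illustrative helper)."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        # pick a row with a nonzero pivot and move it into place
        pivot = next(r for r in range(col, n) if m[r][col] != 0)
        m[col], m[pivot] = m[pivot], m[col]
        m[col] = [v / m[col][col] for v in m[col]]
        for r in range(n):
            if r != col:
                factor = m[r][col]
                m[r] = [v - factor * w for v, w in zip(m[r], m[col])]
    return [row[n] for row in m]


def wiener_filter(p):
    """Return (h, mse) for the filter using the 2p+1 observations around t."""
    lags = range(-p, p + 1)
    a = [[gamma_y(m - k) for k in lags] for m in lags]  # Toeplitz matrix of part (b)
    b = [gamma_x(m) for m in lags]  # gamma_{X,Y}(m) = gamma_X(m) in this model
    h = solve(a, b)
    mse = gamma_x(0) - sum(hk * gamma_x(k) for hk, k in zip(h, lags))
    return h, mse


h, mse = wiener_filter(1)
print(h, mse)
```

For $p = 1$ this returns the coefficients $(1/11, 8/11, 1/11)$ and the mean squared error $8/11$ found above; calling `wiener_filter` with larger `p` gives the exact MSE values that the Matlab simulation estimates numerically.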
[Figure: left panel, $\gamma_X(\tau)$ against $\tau$ for $\tau = -10, \ldots, 10$; right panel, "Numerical and exact MSE for different values of p. - #simulations=10000", MSE against $p$ for $p = 1, \ldots, 10$, with legend "Numerical MSE" and "Exact MSE".]

Problem 4

(a) Let $X_i$ be the time to be served by server $i$. Each $X_i$ is exponentially distributed, with density

$$f_{X_i}(x) = \begin{cases} \mu_i e^{-\mu_i x}, & \text{for } x \ge 0, \\ 0, & \text{otherwise.} \end{cases}$$

By conditioning on $X_1$ we get

$$\begin{aligned}
P(X_3 > X_1) &= \int_0^\infty P(X_3 > X_1 \mid X_1 = x)\,\mu_1 e^{-\mu_1 x}\,dx \\
&= \int_0^\infty P(X_3 > x)\,\mu_1 e^{-\mu_1 x}\,dx \\
&= \int_0^\infty e^{-\mu_3 x}\,\mu_1 e^{-\mu_1 x}\,dx \\
&= \frac{\mu_1}{\mu_3 + \mu_1}.
\end{aligned}$$

(b) We wish to calculate $P(X_1 + X_2 < X_3)$. By conditioning on $X_1$ we get, for $x > 0$,

$$\begin{aligned}
P(X_1 + X_2 < x) &= \int_{x_1=0}^{x} \left[\int_{x_2=0}^{x-x_1} \mu_2 e^{-\mu_2 x_2}\,dx_2\right] \mu_1 e^{-\mu_1 x_1}\,dx_1 \\
&= \begin{cases}
\dfrac{\mu_1\big(1 - e^{-\mu_2 x}\big) - \mu_2\big(1 - e^{-\mu_1 x}\big)}{\mu_1 - \mu_2} & \text{if } \mu_1 \ne \mu_2, \\[2ex]
1 - e^{-\mu x} - \mu x e^{-\mu x} & \text{if } \mu_1 = \mu_2 = \mu.
\end{cases}
\end{aligned}$$

Now, by conditioning on $X_3$, we get

$$\begin{aligned}
P(X_1 + X_2 < X_3) &= \int_0^\infty P(X_1 + X_2 < X_3 \mid X_3 = x)\,\mu_3 e^{-\mu_3 x}\,dx \\
&= \int_0^\infty P(X_1 + X_2 < x)\,\mu_3 e^{-\mu_3 x}\,dx \\
&= \frac{\mu_1 \mu_2}{(\mu_1 + \mu_3)(\mu_2 + \mu_3)}
\end{aligned}$$

in both cases, $\mu_1 \ne \mu_2$ and $\mu_1 = \mu_2$.

(c) Let $T = X_1 + X_2 + X_3 + W$ be the total time we spend in the system, where $W$ is the time we wait for server 3 after being served by server 2. Since $W = 0$ if $X_1 + X_2 \ge X_3$, the expected waiting time for server 3 is

$$\begin{aligned}
E(W) &= E(W \mid X_1 + X_2 < X_3)\,P(X_1 + X_2 < X_3) + E(W \mid X_1 + X_2 \ge X_3)\,P(X_1 + X_2 \ge X_3) \\
&= E(W \mid X_1 + X_2 < X_3)\,P(X_1 + X_2 < X_3) \\
&= \frac{1}{\mu_3} \cdot \frac{\mu_1 \mu_2}{(\mu_1 + \mu_3)(\mu_2 + \mu_3)},
\end{aligned}$$

where we have used the memoryless property. Thus, the expected amount of time we spend in the system is

$$E(T) = E(X_1) + E(X_2) + E(X_3) + E(W) = \frac{1}{\mu_1} + \frac{1}{\mu_2} + \frac{1}{\mu_3} + \frac{1}{\mu_3} \cdot \frac{\mu_1 \mu_2}{(\mu_1 + \mu_3)(\mu_2 + \mu_3)}.$$

Another solution, which involves a few more calculations: in what follows we take $\mu_1 \ne \mu_2$; similar calculations can be done in the case $\mu_1 = \mu_2$. First we compute the density function by differentiation:

$$f_{X_1 + X_2}(x) = \frac{d}{dx} P(X_1 + X_2 < x) = \frac{\mu_1 \mu_2}{\mu_1 - \mu_2}\big(e^{-\mu_2 x} - e^{-\mu_1 x}\big).$$

After we have been served by servers 1 and 2, the original customer in server 3 either has or has not been served.
The time remaining is the sum of one or two exponential random variables, as per the memoryless property:

$$E(\text{time left} \mid \text{at server 3 at time } t) = \begin{cases}
\dfrac{1}{\mu_3} & \text{if server 3 is empty, probability } 1 - e^{-\mu_3 t}, \\[2ex]
\dfrac{2}{\mu_3} & \text{otherwise, probability } e^{-\mu_3 t},
\end{cases}$$

so that

$$E(\text{time left} \mid \text{at server 3 at time } t) = \frac{1}{\mu_3}\Big[\big(1 - e^{-\mu_3 t}\big) + 2e^{-\mu_3 t}\Big] = \frac{1 + e^{-\mu_3 t}}{\mu_3}.$$

Adding the time $t$ already elapsed and integrating over all possible times of arrival at server 3 gives the expected total time in the system:

$$\begin{aligned}
E(T) &= \int_0^\infty \Big[t + E(\text{time left} \mid \text{at server 3 at time } t)\Big] f_{X_1 + X_2}(t)\,dt \\
&= \int_0^\infty \left[t + \frac{1 + e^{-\mu_3 t}}{\mu_3}\right] \frac{\mu_1 \mu_2}{\mu_1 - \mu_2}\big(e^{-\mu_2 t} - e^{-\mu_1 t}\big)\,dt \\
&= \frac{1}{\mu_1} + \frac{1}{\mu_2} + \frac{2}{\mu_3} + \frac{\mu_2}{(\mu_1 - \mu_2)(\mu_1 + \mu_3)} - \frac{\mu_1}{(\mu_1 - \mu_2)(\mu_2 + \mu_3)},
\end{aligned}$$

which agrees with the expression for $E(T)$ obtained from the first solution.

Problem 5

(a) The number of rainfalls in the first 5 days follows a $\mathrm{Poi}(5 \cdot 0.2 = 1)$ distribution, so

$$P(\text{empty after 5 days}) = P(\text{no rain in the first 5 days}) = e^{-1} \approx 0.368.$$

(b) The only possible scenarios for the reservoir to be empty are that it does not rain in the first 5 days, or that exactly one rainfall of 5000 units occurs sometime in the first 5 days and no additional rain falls in the 10-day period. Now

$$\begin{aligned}
P(\text{no rain in the first 5 days}) &= e^{-1}, \\
P(\text{exactly 1 rain in the first 5 days}) &= e^{-1}\,\frac{1^1}{1!} = e^{-1}, \\
P(\text{no rain in days 6 to 10}) &= e^{-1},
\end{aligned}$$

giving

$$P(\text{empty sometime in first 10 days}) = e^{-1} + e^{-1} \cdot 0.8 \cdot e^{-1} \approx 0.476,$$

where the factor 0.8 is the probability that the single rainfall is one of 5000 units.

Problem 6

Consider the time between 2005 and 2050, a 45-year span. We make the following approximate assumptions and introduce notation:

$N$ = number of people who become lawyers by 2050, $N \sim \mathrm{Poi}(500 \cdot 45)$
$I_i$ = indicator for whether lawyer $i$ is still in practice in 2050
$T_i$ = starting time of the $i$-th lawyer

Assume that the time each lawyer practices follows an exponential distribution with rate $1/30$. Then we have

$$E(I_i \mid T_i = t_i) = e^{-\frac{45 - t_i}{30}}.$$

Fix $N = n$ and consider $E\big(\sum_{i=1}^{n} I_i\big)$. Even though the $T_i$'s are distributed as an ordering of $n$ uniform random variables, this expectation is the same as if the $T_i$'s were $n$ unordered uniform random variables on $[0, 45]$. For ease of calculation, we will proceed as if they were unordered.
For $U_i \sim \mathrm{Unif}[0, 45]$,

$$P(\text{a lawyer who starts at } U_i \text{ still practices in 2050}) = \int_0^{45} e^{-\frac{45 - x}{30}}\,\frac{1}{45}\,dx = \frac{30}{45}\Big(1 - e^{-\frac{45}{30}}\Big).$$

Hence,

$$E\Big(\sum_{i=1}^{n} I_i\Big) = n \int_0^{45} e^{-\frac{45 - x}{30}}\,\frac{1}{45}\,dx = n\,\frac{30}{45}\Big(1 - e^{-\frac{45}{30}}\Big).$$

For a random $N$, we have

$$\begin{aligned}
E\Big(\sum_{i=1}^{N} I_i\Big) &= \sum_{n=1}^{\infty} n\,\frac{30}{45}\Big(1 - e^{-\frac{45}{30}}\Big)\,\frac{e^{-500 \cdot 45}(500 \cdot 45)^n}{n!} \\
&= \frac{30}{45}\Big(1 - e^{-\frac{45}{30}}\Big)\,E(N) \\
&= \frac{30}{45}\Big(1 - e^{-\frac{45}{30}}\Big)(500 \cdot 45) \approx 11653.
\end{aligned}$$

This result assumes that there are no lawyers in 2005. If there are originally $N_0$ lawyers, we may simply add $N_0 e^{-\frac{45}{30}}$ to the result.

Appendix

Matlab code for Problem 3 (e)

    % ACM 116, Homework 3, fall 2005
    % numerical simulations for problem 3 (e)
    B = 10000;
    ps = 1:10;
    squaredError = zeros(B,length(ps));
    % exact MSE
    MSEexact = zeros(1,length(ps));
    for j=1:length(ps),
        p = ps(j);
        nobs = 2*p + 1; % number of observations
        gamma = 4*2.^(-abs(0:(nobs-1)));
        Sigma = toeplitz(gamma,gamma);
        % get filter coefficients
        crosscorr = 4*2.^(-abs((-p):p)).';
        h = (Sigma+eye(nobs))\crosscorr;
        % calculate the exact MSE
        MSEexact(j) = gamma(1) - crosscorr.'*h;
        % repeat procedure B times to get an
        % estimate of the MSE
        for k=1:B,
            % using a built-in function in Matlab to
            % generate samples
            X = mvnrnd(zeros(1,nobs),Sigma);
            % add noise to get observations
            Y = X + randn(1,nobs);
            Xhat = fliplr(Y)*h;
            squaredError(k,j) = (Xhat - X(p+1)).^2;
        end
    end
    % numerical MSE
    MSEnum = mean(squaredError);
    figure(1);
    tau = -10:10;
    stem(tau,4*2.^(-abs(tau)));
    xlabel('\tau');ylabel('\gamma_X(\tau)');
    figure(2);
    plot(ps,MSEnum,'o',ps,MSEexact,'x');
    xlabel('p');ylabel('MSE');
    legend('Numerical MSE','Exact MSE');
    title(strcat(...
        'Numerical and exact MSE for different values of p.',...
        '- #simulations=',num2str(B)));
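A similar numerical cross-check can be made for Problem 6. The sketch below is in Python rather than Matlab and assumes the values used in that solution (500 new lawyers per year, a 45-year span, exponential careers with mean 30 years). The names `survival_probability` and `with_initial` are illustrative; the midpoint rule is just one simple way to approximate the integral.

```python
import math

RATE = 500        # new lawyers per year (Poisson rate used in Problem 6)
SPAN = 45         # years from 2005 to 2050
MEAN_CAREER = 30  # mean of the exponential career length, in years


def survival_probability(steps=100_000):
    """Midpoint-rule estimate of the integral of exp(-(45 - x)/30) / 45 over [0, 45]."""
    dx = SPAN / steps
    total = 0.0
    for i in range(steps):
        x = (i + 0.5) * dx  # midpoint of the i-th subinterval
        total += math.exp(-(SPAN - x) / MEAN_CAREER) * dx / SPAN
    return total


# Closed form from the solution: (30/45) * (1 - exp(-45/30)).
closed_form = MEAN_CAREER / SPAN * (1 - math.exp(-SPAN / MEAN_CAREER))

# E(N) times the survival probability.
expected_lawyers = RATE * SPAN * closed_form


def with_initial(n0):
    """Expected count in 2050 if n0 lawyers are already practicing in 2005."""
    return expected_lawyers + n0 * math.exp(-SPAN / MEAN_CAREER)


print(survival_probability(), closed_form, expected_lawyers)
```

The numerical integral and the closed form should agree to many decimal places, and `expected_lawyers` rounds to the value 11653 quoted in the solution.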