15.1 Tracking

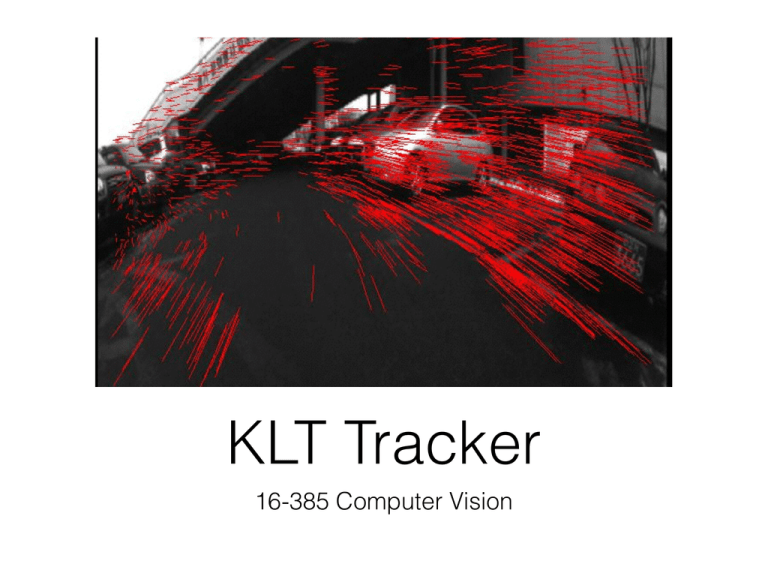

KLT Tracker
16-385 Computer Vision

Feature-based tracking

How should we select features?
How should we track them from frame to frame?

Kanade-Lucas-Tomasi (KLT) Tracker

Lucas and Kanade, An Iterative Image Registration Technique with an Application to Stereo Vision. (1981)
Tomasi and Kanade, Detection and Tracking of Feature Points. (1991) The original KLT algorithm.
Shi and Tomasi, Good Features to Track. (1994)

Kanade-Lucas-Tomasi combines two methods:

Lucas-Kanade: method for aligning (tracking) an image patch. Answers "How should we track them from frame to frame?"
Tomasi-Kanade: method for choosing the best feature (image patch) for tracking. Answers "How should we select features?"

What are good features for tracking?

Intuitively, we want to avoid smooth regions and edges. But is there a more principled way to define good features? Yes: the definition can be derived from the tracking algorithm itself. "A feature is good if it can be tracked well."

Recall the Lucas-Kanade image alignment method:

Error function (SSD):
$$\sum_{x} \left[ I(W(x; p)) - T(x) \right]^2$$

Incremental update:
$$\sum_{x} \left[ I(W(x; p + \Delta p)) - T(x) \right]^2$$

Linearize:
$$\sum_{x} \left[ I(W(x; p)) + \nabla I \frac{\partial W}{\partial p} \Delta p - T(x) \right]^2$$

Gradient update:
$$\Delta p = H^{-1} \sum_{x} \left[ \nabla I \frac{\partial W}{\partial p} \right]^{\top} \left[ T(x) - I(W(x; p)) \right]$$
$$H = \sum_{x} \left[ \nabla I \frac{\partial W}{\partial p} \right]^{\top} \left[ \nabla I \frac{\partial W}{\partial p} \right]$$

Update:
$$p \leftarrow p + \Delta p$$

Stability of the gradient descent iterations depends on inverting the Hessian $H$.

When does the inversion fail? When $H$ is singular. But what does that mean? For a stable update, $H$ should be:

Above the noise level: $\lambda_1 \gg 0$ and $\lambda_2 \gg 0$ (both eigenvalues are large).
Well-conditioned: both eigenvalues have similar magnitude.

Concrete example: consider the translation model

$$W(x; p) = \begin{bmatrix} x + p_1 \\ y + p_2 \end{bmatrix}, \qquad \frac{\partial W}{\partial p} = \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix}$$

Hessian:
$$H = \sum_{x} \left[ \nabla I \frac{\partial W}{\partial p} \right]^{\top} \left[ \nabla I \frac{\partial W}{\partial p} \right] = \sum_{x} \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix} \begin{bmatrix} I_x \\ I_y \end{bmatrix} \begin{bmatrix} I_x & I_y \end{bmatrix} \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix} = \begin{bmatrix} \sum_{x} I_x I_x & \sum_{x} I_y I_x \\ \sum_{x} I_x I_y & \sum_{x} I_y I_y \end{bmatrix}$$

How are the eigenvalues related to image content?

Interpreting eigenvalues (diagram with axes $\lambda_1$ and $\lambda_2$; what kind of image patch does each region represent?):

$\lambda_1 \sim 0$, $\lambda_2 \sim 0$: flat
$\lambda_2 \gg \lambda_1$: horizontal edge
$\lambda_1 \gg \lambda_2$: vertical edge
$\lambda_1 \sim \lambda_2$ (both large): corner

What are good features for tracking?

$$\min(\lambda_1, \lambda_2) > \lambda$$

where $\lambda$ is a threshold.

KLT algorithm

1. Find corners satisfying $\min(\lambda_1, \lambda_2) > \lambda$.
2. For each corner, compute the displacement to the next frame using the Lucas-Kanade method.
3. Store the displacement of each corner and update the corner position.
4. (Optional) Add more corner points every M frames using step 1.
5. Repeat steps 2 to 3 (and 4).
6. Return long trajectories for each corner point.
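The translation-only case above can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the course's reference implementation: the function names (`gradients`, `bilinear`, `min_eigenvalue`, `track_translation`), the 15x15 window, central-difference gradients, and bilinear sampling are all choices made here for the example. `min_eigenvalue` scores a patch by the smaller eigenvalue of the 2x2 Hessian, and `track_translation` iterates the Lucas-Kanade update for a single patch.

```python
import numpy as np

def gradients(img):
    """Central-difference gradients: Ix along columns, Iy along rows."""
    Iy, Ix = np.gradient(img.astype(float))
    return Ix, Iy

def bilinear(img, xs, ys):
    """Sample img at float coordinates (xs, ys) with bilinear interpolation."""
    x0 = np.clip(np.floor(xs).astype(int), 0, img.shape[1] - 2)
    y0 = np.clip(np.floor(ys).astype(int), 0, img.shape[0] - 2)
    ax, ay = xs - x0, ys - y0
    return ((1 - ax) * (1 - ay) * img[y0, x0]
            + ax * (1 - ay) * img[y0, x0 + 1]
            + (1 - ax) * ay * img[y0 + 1, x0]
            + ax * ay * img[y0 + 1, x0 + 1])

def hessian(gx, gy):
    """2x2 Hessian of the translation model from flattened gradients."""
    return np.array([[gx @ gx, gx @ gy],
                     [gx @ gy, gy @ gy]])

def min_eigenvalue(img, cx, cy, half=7):
    """Feature score min(lambda_1, lambda_2) for the window centred at (cx, cy)."""
    Ix, Iy = gradients(img)
    win = (slice(cy - half, cy + half + 1), slice(cx - half, cx + half + 1))
    H = hessian(Ix[win].ravel(), Iy[win].ravel())
    return np.linalg.eigvalsh(H)[0]  # eigvalsh returns eigenvalues in ascending order

def track_translation(frame0, frame1, cx, cy, half=7, iters=20, tol=1e-3):
    """Estimate p = (p1, p2) such that frame1(x + p) matches the frame0 patch."""
    ys, xs = np.mgrid[cy - half:cy + half + 1, cx - half:cx + half + 1].astype(float)
    T = frame0[cy - half:cy + half + 1, cx - half:cx + half + 1].astype(float)
    I = frame1.astype(float)
    Ix, Iy = gradients(I)
    p = np.zeros(2)
    for _ in range(iters):
        wx, wy = xs + p[0], ys + p[1]            # W(x; p) = x + p
        err = (T - bilinear(I, wx, wy)).ravel()  # T(x) - I(W(x; p))
        gx = bilinear(Ix, wx, wy).ravel()
        gy = bilinear(Iy, wx, wy).ravel()
        H = hessian(gx, gy)
        dp = np.linalg.solve(H, np.array([gx @ err, gy @ err]))  # delta p
        p += dp                                  # p <- p + delta p
        if np.linalg.norm(dp) < tol:
            break
    return p

if __name__ == "__main__":
    # Synthetic test frames: a smooth blob that moves by (1.5, -0.8) pixels.
    yy, xx = np.mgrid[0:64, 0:64].astype(float)
    def blob(cx, cy):
        return np.exp(-((xx - cx) ** 2 + (yy - cy) ** 2) / 50.0)
    frame0, frame1 = blob(32.0, 32.0), blob(33.5, 31.2)
    print("min eigenvalue:", min_eigenvalue(frame0, 32, 32))
    print("estimated displacement:", track_translation(frame0, frame1, 32, 32))
```

Note that `np.linalg.solve` raises an error when the Hessian is exactly singular (for example on a perfectly flat patch), which is the failure case the eigenvalue test in step 1 is meant to screen out. A full tracker would run `min_eigenvalue` over candidate locations, keep those above the threshold, and call `track_translation` for each kept point on every new frame.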