Kanade–Lucas–Tomasi Tracking (KLT tracker)

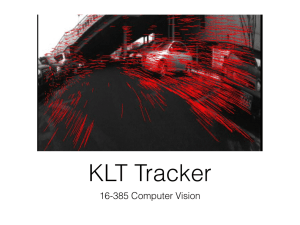

Tomáš Svoboda, svoboda@cmp.felk.cvut.cz
Czech Technical University in Prague, Center for Machine Perception
http://cmp.felk.cvut.cz
Last update: September 17, 2008

Talk Outline

- importance for Computer Vision
- gradient based optimization
- good features to track
- experiments

Importance in Computer Vision

- First published in 1981 as an image registration method [3].
- Improved many times, most importantly by Carlo Tomasi [5, 4].
- Free implementation(s) available: http://www.ces.clemson.edu/~stb/klt/
- After more than two decades, a project at CMU (http://www.ri.cmu.edu/projects/project_515.html) was dedicated to this single algorithm, and its results were published in a premium journal [1].
- Part of a plethora of computer vision algorithms.

Tracking of dense sequences: camera motion

[Figure: frames I and J]

Tracking of dense sequences: object motion

[Figure: frames I and J]

Alignment of an image (patch)

The goal is to align a template image $T(\mathbf{x})$ to an input image $I(\mathbf{x})$, where $\mathbf{x} = [x, y]^\top$ is a column vector containing image coordinates. $I(\mathbf{x})$ may also be a small subwindow within an image.

Original Lucas-Kanade algorithm I

The set of allowable warps is $W(\mathbf{x}; \mathbf{p})$, where $\mathbf{p}$ is a vector of parameters. For translations,

$$W(\mathbf{x}; \mathbf{p}) = \begin{bmatrix} x + p_1 \\ y + p_2 \end{bmatrix}$$

$W(\mathbf{x}; \mathbf{p})$ can be arbitrarily complex.

The best alignment minimizes the image dissimilarity

$$\sum_{\mathbf{x}} \left[ I(W(\mathbf{x}; \mathbf{p})) - T(\mathbf{x}) \right]^2$$

Original Lucas-Kanade algorithm II

Minimizing

$$\sum_{\mathbf{x}} \left[ I(W(\mathbf{x}; \mathbf{p})) - T(\mathbf{x}) \right]^2$$

is a nonlinear optimization problem. The warp $W(\mathbf{x}; \mathbf{p})$ may be linear, but the pixel values are, in general, a non-linear function of $\mathbf{x}$. In fact, they are essentially unrelated to $\mathbf{x}$.

It is assumed that some estimate of $\mathbf{p}$ is known and the best increment $\Delta\mathbf{p}$ is sought. The modified problem

$$\sum_{\mathbf{x}} \left[ I(W(\mathbf{x}; \mathbf{p} + \Delta\mathbf{p})) - T(\mathbf{x}) \right]^2$$

is solved with respect to $\Delta\mathbf{p}$.
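To make the dissimilarity concrete, here is a minimal sketch that evaluates the sum of squared differences for the translation warp. It assumes integer shifts only (no interpolation) and a random test image; the function name `ssd_translation` and the `top_left` parameter are illustrative choices, not part of the slides.

```python
import numpy as np

def ssd_translation(image, template, top_left, p):
    """Sum over x of [I(W(x; p)) - T(x)]^2 for an integer translation p = (p1, p2).

    top_left is the assumed (x, y) position of the window for p = (0, 0).
    """
    h, w = template.shape
    x0 = top_left[0] + p[0]  # column of the shifted window's top-left corner
    y0 = top_left[1] + p[1]  # row of the shifted window's top-left corner
    window = image[y0:y0 + h, x0:x0 + w]
    diff = window.astype(float) - template.astype(float)
    return float(np.sum(diff ** 2))

# Synthetic example: the template is cut out at (x, y) = (15, 12),
# while the initial window position is (13, 10), so the true shift is (2, 2).
rng = np.random.default_rng(0)
image = rng.random((40, 40))
template = image[12:20, 15:23].copy()

costs = {
    (p1, p2): ssd_translation(image, template, (13, 10), (p1, p2))
    for p1 in range(-3, 4)
    for p2 in range(-3, 4)
}
best_p = min(costs, key=costs.get)  # shift with the smallest dissimilarity
```

An exhaustive search like this only works for tiny parameter ranges; the incremental formulation in the slides replaces it with a gradient-based update.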
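Once the increment $\Delta\mathbf{p}$ is found, the parameters are updated:

$$\mathbf{p} \leftarrow \mathbf{p} + \Delta\mathbf{p}$$

Original Lucas-Kanade algorithm III

The objective

$$\sum_{\mathbf{x}} \left[ I(W(\mathbf{x}; \mathbf{p} + \Delta\mathbf{p})) - T(\mathbf{x}) \right]^2$$

is linearized by a first-order Taylor expansion:

$$\sum_{\mathbf{x}} \left[ I(W(\mathbf{x}; \mathbf{p})) + \nabla I \frac{\partial W}{\partial \mathbf{p}} \Delta\mathbf{p} - T(\mathbf{x}) \right]^2$$

Here $\nabla I = \left[ \frac{\partial I}{\partial x}, \frac{\partial I}{\partial y} \right]$ is the gradient image computed at $W(\mathbf{x}; \mathbf{p})$, and the term $\frac{\partial W}{\partial \mathbf{p}}$ is the Jacobian of the warp.

Note: as a vector, $\nabla I$ should be column-oriented. For the sake of clarity of the equations, it is exceptionally treated as a row vector here.

Original Lucas-Kanade algorithm IV

Differentiate

$$\sum_{\mathbf{x}} \left[ I(W(\mathbf{x}; \mathbf{p})) + \nabla I \frac{\partial W}{\partial \mathbf{p}} \Delta\mathbf{p} - T(\mathbf{x}) \right]^2$$

with respect to $\Delta\mathbf{p}$:

$$2 \sum_{\mathbf{x}} \left[ \nabla I \frac{\partial W}{\partial \mathbf{p}} \right]^\top \left[ I(W(\mathbf{x}; \mathbf{p})) + \nabla I \frac{\partial W}{\partial \mathbf{p}} \Delta\mathbf{p} - T(\mathbf{x}) \right]$$

Setting this expression to zero yields

$$\Delta\mathbf{p} = H^{-1} \sum_{\mathbf{x}} \left[ \nabla I \frac{\partial W}{\partial \mathbf{p}} \right]^\top \left[ T(\mathbf{x}) - I(W(\mathbf{x}; \mathbf{p})) \right]$$

where $H$ is the (Gauss-Newton approximation of the) Hessian matrix

$$H = \sum_{\mathbf{x}} \left[ \nabla I \frac{\partial W}{\partial \mathbf{p}} \right]^\top \left[ \nabla I \frac{\partial W}{\partial \mathbf{p}} \right]$$

The Lucas-Kanade algorithm: summary

Iterate:

1. Warp $I$ with $W(\mathbf{x}; \mathbf{p})$.
2. Warp the gradient $\nabla I$ with $W(\mathbf{x}; \mathbf{p})$.
3. Evaluate the Jacobian $\frac{\partial W}{\partial \mathbf{p}}$ at $(\mathbf{x}; \mathbf{p})$ and compute the steepest descent image $\nabla I \frac{\partial W}{\partial \mathbf{p}}$.
4. Compute the Hessian $H = \sum_{\mathbf{x}} \left[ \nabla I \frac{\partial W}{\partial \mathbf{p}} \right]^\top \left[ \nabla I \frac{\partial W}{\partial \mathbf{p}} \right]$.
5. Compute $\Delta\mathbf{p} = H^{-1} \sum_{\mathbf{x}} \left[ \nabla I \frac{\partial W}{\partial \mathbf{p}} \right]^\top \left[ T(\mathbf{x}) - I(W(\mathbf{x}; \mathbf{p})) \right]$.
6. Update the parameters $\mathbf{p} \leftarrow \mathbf{p} + \Delta\mathbf{p}$.

until $\|\Delta\mathbf{p}\| \le \epsilon$.

Example of convergence

[Video: convergence. The initial state is within the basin of attraction.]

Example of divergence

[Video: divergence. The initial state is outside the basin of attraction.]

What are good features (windows) to track?

How should good templates $T(\mathbf{x})$ be selected for image registration and object tracking? Recall the update

$$\Delta\mathbf{p} = H^{-1} \sum_{\mathbf{x}} \left[ \nabla I \frac{\partial W}{\partial \mathbf{p}} \right]^\top \left[ T(\mathbf{x}) - I(W(\mathbf{x}; \mathbf{p})) \right]$$

where $H$ is the Hessian matrix

$$H = \sum_{\mathbf{x}} \left[ \nabla I \frac{\partial W}{\partial \mathbf{p}} \right]^\top \left[ \nabla I \frac{\partial W}{\partial \mathbf{p}} \right]$$

The stability of the iteration is mainly influenced by the inverse of the Hessian, so we can study its eigenvalues $\lambda_1, \lambda_2$. Consequently, the criterion for a good feature window is $\min(\lambda_1, \lambda_2) > \lambda_{\min}$ (texturedness).

Consider the translation

$$W(\mathbf{x}; \mathbf{p}) = \begin{bmatrix} x + p_1 \\ y + p_2 \end{bmatrix}$$

The Jacobian is then

$$\frac{\partial W}{\partial \mathbf{p}} = \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix}$$

and the Hessian becomes

$$H = \sum_{\mathbf{x}} \left[ \nabla I \frac{\partial W}{\partial \mathbf{p}} \right]^\top \left[ \nabla I \frac{\partial W}{\partial \mathbf{p}} \right] = \sum_{\mathbf{x}} \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix} \begin{bmatrix} \frac{\partial I}{\partial x} \\ \frac{\partial I}{\partial y} \end{bmatrix} \left[ \frac{\partial I}{\partial x}, \frac{\partial I}{\partial y} \right] \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix} = \sum_{\mathbf{x}} \begin{bmatrix} \left( \frac{\partial I}{\partial x} \right)^2 & \frac{\partial I}{\partial x} \frac{\partial I}{\partial y} \\ \frac{\partial I}{\partial x} \frac{\partial I}{\partial y} & \left( \frac{\partial I}{\partial y} \right)^2 \end{bmatrix}$$

Good image windows have varying derivatives in both directions. Homogeneous areas are clearly not suitable. Texture oriented mostly in one direction only would cause instability for this translation.

What are the good points for translations?

The Hessian matrix

$$H = \sum_{\mathbf{x}} \begin{bmatrix} \left( \frac{\partial I}{\partial x} \right)^2 & \frac{\partial I}{\partial x} \frac{\partial I}{\partial y} \\ \frac{\partial I}{\partial x} \frac{\partial I}{\partial y} & \left( \frac{\partial I}{\partial y} \right)^2 \end{bmatrix}$$

should have large eigenvalues. We have seen this matrix already. Where? In the Harris corner detector [2]!

Experiments

- [Video: no occlusions]
- [Video: occlusions]
- [Video: occlusions with dissimilarity]
- [Video: object motion]

References

[1] Simon Baker and Iain Matthews. Lucas-Kanade 20 years on: A unifying framework. International Journal of Computer Vision, 56(3):221–255, 2004.
[2] C. Harris and M. Stephens. A combined corner and edge detector. In M. M. Matthews, editor, Proceedings of the 4th Alvey Vision Conference, pages 147–151, University of Manchester, England, September 1988. On-line copies available on the web.
[3] Bruce D. Lucas and Takeo Kanade. An iterative image registration technique with an application to stereo vision. In Proceedings of the 7th International Joint Conference on Artificial Intelligence, pages 674–679, August 1981.
[4] Jianbo Shi and Carlo Tomasi. Good features to track. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 593–600, 1994.
[5] Carlo Tomasi and Takeo Kanade. Detection and tracking of point features. Technical Report CMU-CS-91-132, Carnegie Mellon University, April 1991.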
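Supplementary sketch: the six-step Lucas-Kanade summary in the slides can be written out for the pure-translation warp, where the Jacobian is the identity and the steepest descent image is simply the warped gradient. This is a sketch under stated assumptions rather than a reference implementation: the synthetic image, the bilinear sampler `bilinear`, the function name `lk_translation`, and the parameters `eps` and `max_iter` are all illustrative choices not taken from the slides.

```python
import numpy as np

def bilinear(img, xs, ys):
    """Sample img at real-valued coordinates (xs = columns, ys = rows)."""
    x0 = np.clip(np.floor(xs).astype(int), 0, img.shape[1] - 2)
    y0 = np.clip(np.floor(ys).astype(int), 0, img.shape[0] - 2)
    ax, ay = xs - x0, ys - y0
    top = (1 - ax) * img[y0, x0] + ax * img[y0, x0 + 1]
    bottom = (1 - ax) * img[y0 + 1, x0] + ax * img[y0 + 1, x0 + 1]
    return (1 - ay) * top + ay * bottom

def lk_translation(image, template, xs, ys, eps=1e-4, max_iter=50):
    """Estimate p = (p1, p2) such that I(x + p) matches T(x) on the window (xs, ys)."""
    p = np.zeros(2)
    gy, gx = np.gradient(image)  # derivatives along rows (y) and columns (x)
    for _ in range(max_iter):
        wx, wy = xs + p[0], ys + p[1]
        warped = bilinear(image, wx, wy)              # step 1: warp I
        ix = bilinear(gx, wx, wy)                     # step 2: warp the gradient
        iy = bilinear(gy, wx, wy)
        # step 3: the Jacobian is the identity, so the steepest descent
        # images are just the warped gradient components
        sd = np.stack([ix.ravel(), iy.ravel()], axis=1)
        H = sd.T @ sd                                 # step 4: 2x2 Hessian
        error = (template - warped).ravel()           # T(x) - I(W(x; p))
        dp = np.linalg.solve(H, sd.T @ error)         # step 5: increment
        p += dp                                       # step 6: update
        if np.linalg.norm(dp) <= eps:
            break
    return p, H

# Smooth synthetic image with texture in both directions (an assumption
# made so that the start lies inside the basin of attraction).
yy, xx = np.mgrid[0:64, 0:64].astype(float)
image = np.sin(xx / 5.0) + np.cos(yy / 7.0) + np.sin((xx + yy) / 9.0)

ys, xs = np.mgrid[20:36, 20:36].astype(float)         # 16x16 window
true_p = np.array([1.5, -0.8])
template = bilinear(image, xs + true_p[0], ys + true_p[1])

p_est, H = lk_translation(image, template, xs, ys)
min_eig = np.linalg.eigvalsh(H).min()                 # texturedness measure
```

Here `p_est` should end up close to `true_p`, and `min_eig` is the quantity compared against the threshold in the good-feature criterion. Solving the 2x2 system with `np.linalg.solve` avoids forming the inverse of the Hessian explicitly.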