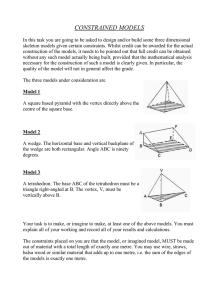

A Beginner's Guide to Measurement
