Chapter 13 ARIMA Model and the Box-Jenkins Methodology

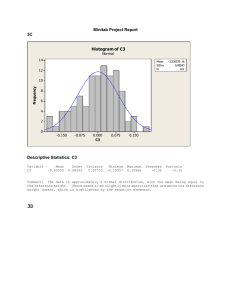

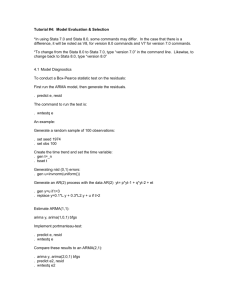

ARIMA models

Box and Jenkins (1976) first introduced ARIMA models, the term deriving from: AR = autoregressive, I = integrated, MA = moving average.

Stationarity

A key concept underlying time series processes is that of stationarity. A time series is covariance stationary when it has the following three characteristics: (a) it exhibits mean reversion, in that it fluctuates around a constant long-run mean; (b) it has a finite variance that is time-invariant; (c) it has a theoretical correlogram that diminishes as the lag length increases.

In its simplest terms, a time series $Y_t$ is said to be stationary if: (a) $E(Y_t) = \text{constant}$ for all $t$; (b) $\mathrm{Var}(Y_t) = \text{constant}$ for all $t$; (c) $\mathrm{Cov}(Y_t, Y_{t+k}) = \text{constant}$ for all $t$ and all $k \neq 0$; that is, if its mean, its variance and its covariances remain constant over time.

Stationarity is important because, if the series is non-stationary, the typical results of classical regression analysis are not valid. Regressions with non-stationary series may have no meaning and are therefore called "spurious". Long-term forecasts of a stationary series converge to the unconditional mean of the series.

Autoregressive time series models

The AR(1) model

$$Y_t = \phi Y_{t-1} + u_t \tag{13.1}$$

where, for simplicity, we do not include a constant, $|\phi| < 1$, and $u_t$ is a Gaussian (white noise) error term.

Condition for stationarity

Equation (13.1) introduces the constraint $|\phi| < 1$. If $|\phi| > 1$, then $Y_t$ will tend to get bigger and bigger each period, and so we would have an explosive series.

Example of stationarity in the AR(1) model

The following EViews commands generate and plot a stationary AR(1) series with $\phi = 0.4$:

    Smpl 1 1
    Genr yt=0
    Smpl 2 500
    Genr yt=0.4*yt(-1)+nrnd
    Smpl 1 500
    Plot yt

If we want to create a time series (say $X_t$) which has $|\phi| > 1$, we type the following commands:

    Smpl 1 1
    Genr xt=1
    Smpl 2 500
    Genr xt=1.2*xt(-1)+nrnd
    Smpl 1 200
    Plot xt

The AR(p) model

A generalization of the AR(1) model is the AR(p) model. The AR(2) model has the form:

$$Y_t = \phi_1 Y_{t-1} + \phi_2 Y_{t-2} + u_t \tag{13.2}$$

and the AR(p) model is:

$$Y_t = \phi_1 Y_{t-1} + \phi_2 Y_{t-2} + \dots + \phi_p Y_{t-p} + u_t \tag{13.3}$$

or, using the summation symbol:

$$Y_t = \sum_{i=1}^{p} \phi_i Y_{t-i} + u_t \tag{13.4}$$

Finally, using the lag operator $L$ (which has the property $L^n Y_t = Y_{t-n}$), we can write the AR(p) model as:

$$(1 - \phi_1 L - \phi_2 L^2 - \dots - \phi_p L^p)\,Y_t = u_t \tag{13.5}$$

$$\Phi(L)\,Y_t = u_t \tag{13.6}$$

where $\Phi(L)$ is a polynomial in the lag operator applied to $Y_t$.

Stationarity in the AR(p) model

Stationarity of an AR(p) process is guaranteed only if the $p$ roots of the polynomial equation $\Phi(z) = 0$ are greater than 1 in absolute value, where $z$ is a (possibly complex) variable. For the AR(1) process, the polynomial equation reduces to:

$$1 - \phi z = 0 \tag{13.7}$$

whose root must be greater than 1 in absolute value. If the root is equal to $\lambda$, the condition is:

$$|\lambda| = \left|\frac{1}{\phi}\right| > 1 \tag{13.8}$$

$$|\phi| < 1 \tag{13.9}$$

A necessary (but not sufficient) condition for the AR(p) model to be stationary is that the sum of the $p$ autoregressive coefficients be less than 1:

$$\sum_{i=1}^{p} \phi_i < 1 \tag{13.10}$$

Properties of the AR models

We start by defining the unconditional mean and the variance of the AR(1) process. The mean is given by:

$$E(Y_t) = E(Y_{t-1}) = E(Y_{t+1}) = 0$$

where $Y_{t+1} = \phi Y_t + u_{t+1}$. Substituting repeatedly for lagged $Y_t$ we have:

$$Y_{t+1} = \phi^{t+1} Y_0 + (\phi^{t} u_1 + \phi^{t-1} u_2 + \dots + \phi^{0} u_{t+1})$$

Since $|\phi| < 1$, $\phi^{t+1}$ will be close to zero for large $t$. Thus we have that:

$$E(Y_{t+1}) = 0 \tag{13.11}$$

and

$$\mathrm{Var}(Y_t) = \mathrm{Var}(\phi Y_{t-1} + u_t) = \phi^2 \sigma_Y^2 + \sigma_u^2, \qquad \text{so that} \qquad \sigma_Y^2 = \frac{\sigma_u^2}{1 - \phi^2} \tag{13.12}$$

The covariance between two random variables $X_t$ and $Z_t$ is defined to be:

$$\mathrm{Cov}(X_t, Z_t) = E\{[X_t - E(X_t)][Z_t - E(Z_t)]\} \tag{13.13}$$

Applied to $Y_t$ and its own lag, this gives

$$\mathrm{Cov}(Y_t, Y_{t-1}) = E\{[Y_t - E(Y_t)][Y_{t-1} - E(Y_{t-1})]\} \tag{13.14}$$

which is called the autocovariance function.

For the AR(1) model the autocovariance function is given by:

$$\begin{aligned}
\mathrm{Cov}(Y_t, Y_{t-1}) &= E[Y_t Y_{t-1} - Y_t E(Y_{t-1}) - E(Y_t) Y_{t-1} + E(Y_t) E(Y_{t-1})] \\
&= E(Y_t Y_{t-1}) \\
&= E[(\phi Y_{t-1} + u_t) Y_{t-1}] \\
&= E(\phi Y_{t-1} Y_{t-1}) + E(u_t Y_{t-1}) \\
&= \phi \sigma_Y^2
\end{aligned} \tag{13.15}$$

We can easily show that:

$$\begin{aligned}
\mathrm{Cov}(Y_t, Y_{t-2}) &= E(Y_t Y_{t-2}) \\
&= E[(\phi Y_{t-1} + u_t) Y_{t-2}] \\
&= E[(\phi(\phi Y_{t-2} + u_{t-1}) + u_t) Y_{t-2}] \\
&= E(\phi^2 Y_{t-2} Y_{t-2}) \\
&= \phi^2 \sigma_Y^2
\end{aligned} \tag{13.16}$$

and in general:

$$\mathrm{Cov}(Y_t, Y_{t-k}) = \phi^k \sigma_Y^2 \tag{13.17}$$

The autocorrelation function is given by:

$$\mathrm{Cor}(Y_t, Y_{t-k}) = \frac{\mathrm{Cov}(Y_t, Y_{t-k})}{\sqrt{\mathrm{Var}(Y_t)\,\mathrm{Var}(Y_{t-k})}} = \frac{\phi^k \sigma_Y^2}{\sigma_Y^2} = \phi^k \tag{13.18}$$

So, for an AR(1) series the autocorrelation function (ACF) decays exponentially as $k$ increases. The graph of the ACF, which plots $\mathrm{Cor}(Y_t, Y_{t-k})$ against $k$, is called the correlogram.

Finally, the partial autocorrelation function (PACF) is obtained by plotting, against $k$, the estimated coefficient on $Y_{t-k}$ from an OLS estimate of an AR(k) process. If the observations are generated by an AR(p) process, then the theoretical partial autocorrelations will be high and significant for up to $p$ lags and zero for lags beyond $p$.

Moving average models

The MA(1) model

$$Y_t = u_t + \theta u_{t-1} \tag{13.19}$$

The MA(q) model

$$Y_t = u_t + \theta_1 u_{t-1} + \theta_2 u_{t-2} + \dots + \theta_q u_{t-q} \tag{13.20}$$

which can be rewritten as:

$$Y_t = u_t + \sum_{j=1}^{q} \theta_j u_{t-j} \tag{13.21}$$

or, using the lag operator:

$$Y_t = (1 + \theta_1 L + \theta_2 L^2 + \dots + \theta_q L^q)\,u_t \tag{13.22}$$

$$Y_t = \Theta(L)\,u_t \tag{13.23}$$

Because any MA(q) process is, by definition, a weighted average of stationary white-noise terms, it follows that every moving average model is stationary, as long as $q$ is finite.

Invertibility in MA models

A property often discussed in connection with moving average processes is that of invertibility. A time series $Y_t$ is invertible if it can be represented by a finite-order or convergent autoregressive process. Invertibility is important because the use of the ACF and PACF for identification implicitly assumes that the $Y_t$ sequence can be well approximated by an autoregressive model.
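To make the idea of a convergent autoregressive representation concrete, here is a minimal Python sketch. It simulates an MA(1) series $Y_t = u_t + \theta u_{t-1}$ and then tries to recover the latest shock from current and past values of $Y$ alone, using the truncated sum $\sum_{j=0}^{J} (-\theta)^j Y_{t-j}$. The function names, the seed, the sample size and the choices $\theta = 0.5$, $\theta = 1.5$ and $J = 30$ are illustrative assumptions, not part of the original text.

```python
import random

def simulate_ma1(theta, n, seed=0):
    """Simulate Y_t = u_t + theta * u_{t-1}; returns the series and its shocks."""
    rng = random.Random(seed)
    u = [rng.gauss(0.0, 1.0) for _ in range(n)]
    # Assumption: the pre-sample shock is zero, so Y_0 = u_0.
    y = [u[0]] + [u[t] + theta * u[t - 1] for t in range(1, n)]
    return y, u

def recover_shock(y, t, theta, terms):
    """Approximate u_t by the truncated AR sum over Y_t, Y_{t-1}, ..., Y_{t-terms}."""
    return sum((-theta) ** j * y[t - j] for j in range(terms + 1))

def truncation_error(theta, n=200, terms=30):
    """Absolute gap between the true last shock and its truncated AR approximation."""
    y, u = simulate_ma1(theta, n)
    t = n - 1
    return abs(recover_shock(y, t, theta, terms) - u[t])

err_invertible = truncation_error(0.5)      # |theta| < 1: weights shrink geometrically
err_non_invertible = truncation_error(1.5)  # |theta| > 1: weights grow, sum diverges

print("error with theta = 0.5:", err_invertible)
print("error with theta = 1.5:", err_non_invertible)
```

With $|\theta| < 1$ the neglected terms are scaled by $\theta^{J+1}$, so the approximation error becomes negligible; with $|\theta| > 1$ the same factor blows up and the autoregressive representation does not converge.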
Consider the simple MA(1) model:

$$Y_t = u_t + \theta u_{t-1} \tag{13.24}$$

Using the lag operator this can be rewritten as:

$$Y_t = (1 + \theta L)\,u_t, \qquad u_t = \frac{Y_t}{1 + \theta L} \tag{13.25}$$

If $|\theta| < 1$, then the right-hand side of (13.25) can be considered as the sum of an infinite geometric progression:

$$u_t = Y_t\,(1 - \theta L + \theta^2 L^2 - \theta^3 L^3 + \dots) \tag{13.26}$$

To see this, take the MA(1) process and lag it by one period:

$$Y_t = u_t + \theta u_{t-1}, \qquad u_{t-1} = Y_{t-1} - \theta u_{t-2}$$

Substituting this into the original expression we have:

$$\begin{aligned}
Y_t &= u_t + \theta (Y_{t-1} - \theta u_{t-2}) \\
&= u_t + \theta Y_{t-1} - \theta^2 u_{t-2} \\
&= u_t + \theta Y_{t-1} - \theta^2 Y_{t-2} + \theta^3 u_{t-3}
\end{aligned}$$

Repeating this an infinite number of times, we finally get expression (13.26). Thus, the MA(1) process has been inverted into an infinite-order AR process with geometrically declining weights. Note that for the MA(1) process to be invertible it is necessary that $|\theta| < 1$.

In general, MA(q) processes are invertible if the roots of the polynomial

$$\Theta(z) = 0 \tag{13.27}$$

are greater than 1 in absolute value.

Properties of the MA models

The mean of the MA process is clearly equal to zero, as it is the mean of white-noise terms. The variance is given by:

$$\mathrm{Var}(Y_t) = \mathrm{Var}(u_t + \theta u_{t-1}) = \sigma_u^2 + \theta^2 \sigma_u^2 = \sigma_u^2 (1 + \theta^2) \tag{13.28}$$

The autocovariance is given by:

$$\mathrm{Cov}(Y_t, Y_{t-1}) = E[(u_t + \theta u_{t-1})(u_{t-1} + \theta u_{t-2})] \tag{13.29}$$

$$= E(u_t u_{t-1}) + \theta E(u_t u_{t-2}) + \theta E(u_{t-1}^2) + \theta^2 E(u_{t-1} u_{t-2}) \tag{13.30}$$

$$= \theta \sigma_u^2 \tag{13.31}$$

And since $u_t$ is serially uncorrelated, it is easy to see that:

$$\mathrm{Cov}(Y_t, Y_{t-k}) = 0 \quad \text{for } k > 1 \tag{13.32}$$

From this we can see that for the MA(1) process the autocorrelation function is:

$$\mathrm{Cor}(Y_t, Y_{t-k}) = \frac{\mathrm{Cov}(Y_t, Y_{t-k})}{\sqrt{\mathrm{Var}(Y_t)\,\mathrm{Var}(Y_{t-k})}} =
\begin{cases}
\dfrac{\theta \sigma_u^2}{\sigma_u^2 (1 + \theta^2)} = \dfrac{\theta}{1 + \theta^2} & \text{for } k = 1 \\[2ex]
0 & \text{for } k > 1
\end{cases} \tag{13.33}$$

So, if we have an MA(q) model, we expect the correlogram (ACF) to show spikes at lags 1 through $q$ and then drop to zero immediately. The partial autocorrelation function (PACF) of an MA process should decay slowly.

ARMA models

We can combine the two processes to give a new series of models called ARMA(p, q) models. The general form of the ARMA(p, q) model is:

$$Y_t = \phi_1 Y_{t-1} + \phi_2 Y_{t-2} + \dots + \phi_p Y_{t-p} + u_t + \theta_1 u_{t-1} + \theta_2 u_{t-2} + \dots + \theta_q u_{t-q} \tag{13.34}$$

which can be rewritten, using summations, as:

$$Y_t = \sum_{i=1}^{p} \phi_i Y_{t-i} + u_t + \sum_{j=1}^{q} \theta_j u_{t-j} \tag{13.35}$$

or, using the lag operator:

$$(1 - \phi_1 L - \phi_2 L^2 - \dots - \phi_p L^p)\,Y_t = (1 + \theta_1 L + \theta_2 L^2 + \dots + \theta_q L^q)\,u_t \tag{13.36}$$

$$\Phi(L)\,Y_t = \Theta(L)\,u_t \tag{13.37}$$

In the ARMA(p, q) model the condition for stationarity concerns the AR(p) part of the specification only.

Integrated processes and the ARIMA models

An integrated series

The ARMA(p, q) model can only be fitted to a time series $Y_t$ that is stationary. To deal with a non-stationary series, and to induce stationarity, we need to detrend the raw data through a process called differencing:

$$\Delta Y_t = Y_t - Y_{t-1} \tag{13.39}$$

As most economic and financial time series show trends to some degree, we nearly always end up taking first differences of the input series. If a series is stationary after first differencing, it is called integrated of order one, denoted I(1).

ARIMA models

If a process $Y_t$ has an ARIMA(p, d, q) representation, then $\Delta^d Y_t$ has an ARMA(p, q) representation, as given by the equation below:

$$(1 - \phi_1 L - \phi_2 L^2 - \dots - \phi_p L^p)\,\Delta^d Y_t = (1 + \theta_1 L + \theta_2 L^2 + \dots + \theta_q L^q)\,u_t \tag{13.41}$$

Box-Jenkins model selection

Box and Jenkins popularized a three-stage method aimed at selecting an appropriate (parsimonious) ARIMA model for the purpose of estimating and forecasting a univariate time series. The three stages are: (a) identification, (b) estimation, and (c) diagnostic checking.

Identification

A comparison of the sample ACF and PACF with those of various theoretical ARIMA processes may suggest several plausible models. If the series is non-stationary, the ACF of the series will not die down or show signs of decay at all. A common stationarity-inducing transformation is to take logarithms and then first differences of the series.
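The log-then-difference transformation mentioned above can be sketched in a few lines of Python. This is only an illustration: the helper names `difference` and `log_diff` and the example series (one growing at a constant 5% per period, one following a quadratic trend) are assumptions made for the example, not data from the text.

```python
import math

def difference(series, d=1):
    """Apply the first-difference operator d times: each pass maps Y_t to Y_t - Y_{t-1}."""
    out = list(series)
    for _ in range(d):
        out = [out[t] - out[t - 1] for t in range(1, len(out))]
    return out

def log_diff(series):
    """First difference of the natural logarithm: ln(Y_t) - ln(Y_{t-1})."""
    return difference([math.log(v) for v in series], d=1)

# Hypothetical series growing at a constant 5% per period.
prices = [100.0 * 1.05 ** t for t in range(6)]
growth = log_diff(prices)

# Hypothetical quadratic trend: one difference leaves a trend, two remove it.
quadratic = [float(t * t) for t in range(6)]
once = difference(quadratic, d=1)
twice = difference(quadratic, d=2)

print(growth)
print(once, twice)
```

Each pass of differencing shortens the series by one observation, which is why a series differenced $d$ times has $d$ fewer usable data points. In the example, the log difference of the exponentially growing series is constant, while the quadratic trend needs two differences before it stops trending.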
Once we have achieved stationarity, the next step is to identify the $p$ and $q$ orders of the ARIMA model, using the patterns summarized in Table 13.1.

Table 13.1

| Model | ACF | PACF |
|---|---|---|
| Pure white noise | All autocorrelations are zero | All partial autocorrelations are zero |
| MA(1) | Single positive spike at lag 1 | Damped sine wave or exponential decay |
| AR(1) | Damped sine wave or exponential decay | Single positive spike at lag 1 |
| ARMA(1,1) | Decay (exponential or sine wave) beginning at lag 1 | Decay (exponential or sine wave) beginning at lag 1 |
| ARMA(p,q) | Decay (exponential or sine wave) beginning at lag q | Decay (exponential or sine wave) beginning at lag p |

Estimation

In this second stage, the candidate models are estimated and compared using the AIC and BIC.

Diagnostic checking

In the diagnostic checking stage we examine the goodness of fit of the model. We must be careful here to avoid overfitting (the procedure of adding another coefficient inappropriately). The special statistics that we use here are the Box-Pierce statistic (BP) and the Ljung-Box (LB) Q-statistic, which serve to test for autocorrelation in the residuals.

The Box-Jenkins approach step by step
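As a rough illustration of the diagnostic-checking stage, the Python sketch below computes the Box-Pierce and Ljung-Box statistics from the sample autocorrelations $r_k$ of a series, using the standard formulas $Q_{BP} = n \sum_{k=1}^{m} r_k^2$ and $Q_{LB} = n(n+2) \sum_{k=1}^{m} r_k^2 / (n-k)$. The function names, the seed, the sample size of 500, the choice $m = 10$ and the AR coefficient 0.8 are assumptions made for the example. No model is estimated here: the white-noise series stands in for well-behaved residuals and the AR(1) series for residuals that still contain structure.

```python
import random

def sample_acf(x, max_lag):
    """Sample autocorrelations r_1, ..., r_max_lag of the series x."""
    n = len(x)
    mean = sum(x) / n
    dev = [v - mean for v in x]
    denom = sum(d * d for d in dev)
    return [sum(dev[t] * dev[t - k] for t in range(k, n)) / denom
            for k in range(1, max_lag + 1)]

def box_pierce(x, m):
    """Box-Pierce statistic: n times the sum of the first m squared autocorrelations."""
    n = len(x)
    return n * sum(r * r for r in sample_acf(x, m))

def ljung_box(x, m):
    """Ljung-Box statistic: n(n+2) times the sum of r_k^2 / (n - k) for k = 1..m."""
    n = len(x)
    r = sample_acf(x, m)
    return n * (n + 2) * sum(r[k - 1] ** 2 / (n - k) for k in range(1, m + 1))

rng = random.Random(42)
white_noise = [rng.gauss(0.0, 1.0) for _ in range(500)]

# Assumed AR(1) series built from the same shocks, standing in for "bad" residuals.
ar1 = [0.0]
for shock in white_noise[1:]:
    ar1.append(0.8 * ar1[-1] + shock)

q_noise = ljung_box(white_noise, 10)
q_ar1 = ljung_box(ar1, 10)
print("Ljung-Box Q, white noise:", q_noise)
print("Ljung-Box Q, AR(1) series:", q_ar1)
```

In practice the statistic is compared with a chi-square critical value; for residuals from an ARMA(p, q) fit the degrees of freedom are usually taken as $m - p - q$. A large Q, as for the autocorrelated series here, signals that the residuals are not white noise and the model should be respecified.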