ICE Summary Report Fall/Spring 2014-15


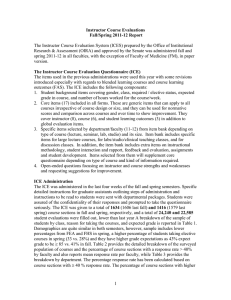

Instructor Course Evaluations Fall/Spring 2014-15 Report

The Instructor Course Evaluation System (ICES), prepared by the Office of Institutional Research & Assessment (OIRA) and approved by the Senate, was administered on-line in fall and spring 2014-15 in all faculties, with the exception of the Faculty of Medicine (FM).

The Instructor Course Evaluation Questionnaire (ICE)

The items used in previous administrations were used again this year, with some revisions, especially with regard to blended learning courses and course learning outcomes (FAS). The ICE includes the following components:

1. Student background items covering gender, class, required/elective status, expected grade in the course, and number of hours worked for the course per week.
2. Core items (17) included in all forms. These are generic items that can apply to all courses irrespective of course design or size, and they can be used for normative scores and for comparison across courses and over time to show improvement. They cover instructor (8), course (6), and student learning outcomes (3), in addition to global evaluation items.
3. Specific items selected by the department/faculty (11-12) from the item bank, depending on the type of course (lecture, seminar, lab, studio) and its size. The item bank includes specific items for large lecture courses, for labs/studio/clinical teaching classes, and for discussion classes. In addition, the item bank includes extra items on instructional methodology, student interaction and rapport, feedback and evaluation, assignments, and student development. Items selected from these supplement the core questionnaire, depending on the type of course and the kind of information required.
4. Open-ended questions focusing on instructor and course strengths and weaknesses and requesting suggestions for improvement.

ICE Administration

The ICE was administered on-line in the last three weeks of the fall and spring semesters using the new Blue on-line system.
The system is user-friendly: it can be accessed from all kinds of smart devices, supports screen scrolling, and provides extensive reporting, including graphics. Specific detailed instructions were provided to students on how to access the system, and periodic reminders were sent. To enhance the response rate, a 3-week grade hold was placed on students who did not fill out their ICEs. Faculty were also given the facility to add items of their choice and to follow up on response rates in their various course sections. Students were assured of the confidentiality of their responses and prompted to take the questionnaire seriously.

The ICE was given to a total of 2,313 (2,398 last fall) and 2,401 (2,397 last spring) course sections in fall and spring, respectively, and a total of 44,637 and 46,787 (36,478 and 33,287 last year) student evaluations were filled out. This is much higher than in previous years because of the increased response rate, around 90% in both semesters. All course sections were included in the evaluations.

A breakdown of the sample of students by reason for taking the courses and by expected grade is reported in Table 1. Demographics are quite similar in both semesters; however, the spring sample includes a higher percentage of students taking required courses and electives from outside the major (25% vs. 19% and 7% vs. 4%, respectively). In general, students have similar grade expectations in both semesters, though much lower than in previous years: around 33% expect a grade ≥ 85, compared with around 44% last year and 47% the year before. The number of hours worked is slightly lower in spring, probably because of the higher proportion of elective courses.

Table 2 provides the detailed breakdown of the surveyed population of courses and the percentage of course sections by faculty, and also reports the mean response rate per faculty, while Table 3 provides the breakdown by department.
Faculty response rates were high, ranging from 88% to 94% in fall and spring, while departmental rates ranged from 83% to 100% in fall and 82% to 100% in spring, with the exception of CAMES. SN has the highest response rate in fall, while SN and FHS have the highest in the spring term.

Table 1: ICE (Fall and Spring 2014-15) Sample Description

| Faculty | % F | % S |
|---|---|---|
| FAFS | 4 | 5 |
| FAS | 62 | 62 |
| FEA | 21 | 20 |
| FHS | 3 | 2 |
| OSB | 9 | 9 |
| SNU | 1.5 | 1 |

| Class | % F | % S |
|---|---|---|
| Freshman | 5 | 2 |
| Sophomore | 33 | 25 |
| Junior | 27 | 28 |
| Senior | 23 | 31 |
| 4th Year | 5 | 9 |
| 5th Year | 0 | 0 |
| Graduate | 4.6 | 3.6 |
| Special | 0.4 | 0.4 |

| Reason for taking Course | % F | % S |
|---|---|---|
| Required from major | 39 | 35 |
| Elective from major | 37 | 32 |
| Elective outside major | 19 | 25 |
| Required outside major | 4 | 7 |
| University required | 1 | 1 |

| Expected Grade | % F | % S |
|---|---|---|
| 1. ≥ 90 | 3 | 2 |
| 2. 85 - 89 | 29 | 30 |
| 3. 80 - 84 | 58 | 58 |
| 4. 70 - 79 | 11 | 10 |
| 5. < 70 | 0 | 0 |

| Number of hours | % F | % S |
|---|---|---|
| 1. ≤ 3 | 12 | 13 |
| 2. 4 – 6 | 71 | 74 |
| 3. 7 – 10 | 15 | 12 |
| 4. > 10 | 2 | |

Table 2: Surveyed Population of Courses & Response Rates by Faculty

| Faculty | Responses F | Responses S | Mean Resp. Rate F | Mean Resp. Rate S |
|---|---|---|---|---|
| Agricultural & Food Sciences | 1728 | 2114 | 88% | 89% |
| Arts & Sciences | 24598 | 24723 | 89% | 88% |
| Business | 3370 | 3476 | 86% | 91% |
| Engineering & Architecture | 8158 | 8039 | 89% | 91% |
| Health Sciences | 1088 | 686 | 88% | 92% |
| Nursing | 561 | 527 | 94% | 92% |
| Total | 39598 | 39768 | 89% | 89% |

Table 3: Response Rates by Department

| Department | Fall | Spring |
|---|---|---|
| Agricultural Science | 88% | 86% |
| Animal & Veterinary Sciences | 81% | 88% |
| Arabic | 90% | 92% |
| Architecture & Graphic Design | 81% | 86% |
| Biology | 91% | 91% |
| Business Administration | 86% | 91% |
| Center for American Studies | 83% | 88% |
| Center for Arab & Middle Eastern Studies | 57% | 72% |
| Chemical Engineering | 86% | 92% |
| Chemistry | 90% | 93% |
| Civil & Environmental Engineering | 91% | 90% |
| Civilization Sequence Program | 88% | 80% |
| Computer Science | 90% | 88% |
| Economics | 90% | 89% |
| Education | 89% | 94% |
| Electrical & Computer Engineering | 95% | 95% |
| Engineering Management | 90% | 93% |
| English | 91% | 91% |
| Environmental Health | 92% | 93% |
| Epidemiology & Population Health | 83% | 86% |
| Fine Arts & Art History | 87% | 90% |
| Geology | 84% | 91% |
| Health Management & Policy | 85% | 91% |
| Health Promotion & Communicative Health | 89% | 96% |
| History and Archaeology | 86% | 92% |
| Landscape Design & Eco-Management | 87% | 86% |
| Mathematics | 88% | 90% |
| Mechanical Engineering | 89% | 91% |
| Medical Imaging Sciences | 100% | 100% |
| Medical Laboratory Sciences | 97% | 87% |
| Nursing | 94% | 92% |
| Nutrition & Food Science | 91% | |
| Pathology & Lab Medicine | 86% | |
| Philosophy | 86% | |
| Physics | 90% | |
| Political Studies & Public Administration | 80% | |
| Psychology | 89% | |
| Rural Community Development | 100% | |
| Sociology, Anthropology & Media Studies | 85% | |
| Undeclared | 81% | |
| University Preparatory Program | 86% | |

The source lists eight further spring rates (94, 88, 90, 87, 82, 89, 88, 85) for the ten departments from Nutrition & Food Science onward, without indicating which two departments have no spring value.

Results

Results, including comments, were reported electronically to each faculty member, department chair, and dean. In addition to item means, averages were reported for the instructor, the course, and student learning outcome development. Category, faculty, and university means were also reported for each item and for each subgroup. Averages were computed using all course sections, not only those with response rates of 40% or more, to be comparable to the previous year’s statistics. Three additional reports were provided to the deans: one summarizing institutional performance on the 17 core items by faculty, another providing summary data for all departments within their faculty, and a third providing a summary for each department in the faculty. Department chairs also got a copy of their department summary.

Figures 1 and 2 present summary normative data for ICE subscales for the University and per faculty for fall and spring 2014-15 in comparison with 2010-15 scores. Unlike in previous administrations, students’ evaluations of teachers (A) were, in general, similar (4.2) to their evaluations of courses (B) (4.2), though higher than those of learning outcomes (C) (4.1). ICE results this year show a rise from previous years, possibly because of the higher response rate and satisfaction with the new system. Averages for fall and spring 2014-15 are: instructor (A), 4.23 and 4.22; course (B), 4.17 and 4.15; and learning outcomes (C), 4.06 and 4.07.
Ratings in spring were quite similar to those in the fall, though usually they differed. Four-year results show stability of ICE ratings on the subscales over time, except for this year, which showed higher averages. It remains to be seen whether this trend persists.

With respect to instructor effectiveness by faculty (Figure 2A), mean scores ranged between 4.1 and 4.4 in the fall and spring, with the exception of FM, which has only a few courses. FEA reports the lowest rating of 4.1, while SNU and FHS report the highest means in fall, with OSB and FAFS catching up in spring. All faculties showed improvement this year, though most had shown stable performance in the last few years, with the exception of FEA, whose ratings had been dropping in recent years.

With respect to course evaluations by faculty, ratings ranged between 4.1 and 4.4 (4.1-4.3 in spring). SNU and FHS are leading, and FEA has the lowest averages. All faculties improved this year, with FAS, HSON, and OSB showing gradual improvement on course evaluation in recent years.

Figure 1. Subscale Averages 2010-15

Figure 2A

As to learning outcomes by faculty, scores ranged from 3.9 to 4.3. FAS had the lowest score in both terms, while HSON had the highest in fall and was joined by FAFS and FHS in spring. OSB and FAS have shown gradual improvement on outcomes in recent years.

Figure 2B

Figure 2C

Additional items’ means ranged from 4.1 to 4.4. All faculties improved this year and are showing gradual improvement on additional items in recent years. FEA does not have additional items in its ICE form.

Figure 2D

Item # 8, overall effectiveness of instructor, averaged 4.2 for all faculties in both terms, compared with 4.0 last year. Item # 14, overall course effectiveness, averaged 4.1 in both terms, while it was 3.9 and 4.0 in fall and spring of last year. A breakdown of the averages of items 8 and 14 by faculty is reported in Table 4. For item 8, SNU has the highest average in fall, with FHS and FAFS leading in spring and FEA reporting the lowest average in both terms.
Figure 3 presents the 4-year trend of the overall items. It shows stability at first, then a drop in 2013, and a rise since 2014.

Table 4: Average of Overall Items by Faculty

| Faculty | Item # 8 (F) | Item # 8 (S) | Item # 14 (F) | Item # 14 (S) |
|---|---|---|---|---|
| FAFS | 4.3 | 4.2 | 4.4 | 4.3 |
| FAS | 4.2 | 4.1 | 4.1 | 4.1 |
| OSB | 4.3 | 4.2 | 4.3 | 4.2 |
| FEA | 4.1 | 4.0 | 4.1 | 4.0 |
| FHS | 4.4 | 4.3 | 4.3 | 4.3 |
| HSON | 4.4 | 4.4 | 4.3 | 4.2 |
| AUB | 4.2 | 4.1 | 4.2 | 4.1 |

Figure 3

The Appendix presents item statistics by faculty and for the whole university. Item means ranged between 4.0 and 4.4, with the highest on instructor items and some of the lowest on learning outcomes.

Table 5 presents subscale means by category of courses in every faculty. The lowest (red font) and highest (green font) categories within each faculty were highlighted to facilitate comparison for improvement.

Table 5: Subscale Means per Category per Faculty

Categories by faculty: FAFS (Graduate Lecture; Lecture & Lab; Large Lecture; Lab Teaching; Small Lecture; Seminar; Studio); FAS (Education-Method; Education-Non-Method; Humanities; Sciences; Social Sciences; Blended Learning); FEA (FEA); FHS (Discussion Lecture + Assignment; Discussion Lecture; Lecture + Assignment; Large Lecture & Lab; Large Lecture); HSON (HSON FORM A; HSON FORM B); OSB (ACCT; BUSS; FINA; Blended; MKTG & MNGT; OPIM)

Columns: Instructor Effectiveness (F, S); Course Effectiveness (F, S); Learning Outcomes (F, S)

Values as listed in the source: 4.6 4.6 4.4 4.6 4.5 4.5 4.2 4.3 4.1 4.2 4.0 4.1 4.3 4.3 4.3 4.3 4.2 4.2 4.6 4.4 4.5 4.4 4.5 4.4 4.3 4.4 4.2 4.4 4.2 4.3 4.5 4.5 4.5 4.4 4.4 4.5 3.9 4.1 3.8 4.0 3.8 4.0 4.6 4.8 4.5 4.6 4.5 4.4 4.4 4.4 4.4 4.3 4.3 4.2 4.3 4.1 4.2 4.1 4.1 3.9 4.1 4.2 4.0 4.1 3.8 3.9 4.3 4.3 4.2 4.2 4.1 4.1 4.1 4.1 4.1 4.1 4.2 4.4 4.3 4.4 4.3 4.4 4.4 4.2 4.7 4.3 4.2 4.5 4.3 4.3 4.4 4.4 4.4 4.3 4.6 4.6 3.5 4.1 4.5 4.1 4.4 4.3 4.4 4.2 4.5 4.3 4.4 4.1 4.7 4.2 4.3 4.4 4.2 4.2 4.3 4.5 4.5 3.6 4.1 4.4 4.1 4.2 4.2 4.4 4.2 4.0 4.5 4.1 4.1 4.4 3.9 4.0 4.3 4.6 4.6 3.3 3.9 4.4 4.1 4.0 4.1 4.3 4.3 4.2 4.4 4.2 4.3 4.2 4.3 4.1

Table 6 reports means by
department. The lowest (red font) and highest (green font) departments within each faculty were highlighted to facilitate comparison for improvement. Learning outcomes in science departments are the lowest in FAS, while landscape design is the lowest in FAFS. With respect to OSB, INFO and MHRM have lower averages, while in FEA, ARCH and CHEN have the lowest averages. With respect to FHS, LABM courses are consistently low, while EPHD courses have the highest evaluations.

Table 6. Subscale Means by Department per Faculty

Departments by faculty (first page): FAFS (AGSC AVSC LDEM NFSC RCOD); FAS (AMST ARAB AROL BIOL CHEM CHIN CMPS CVSP ECON EDUC ENGL FAAH FREN GEOL HIST MATH MCOM MEST PHIL PHYS PSPA PSYC SOAN STAT UPEN UPHU UPIT UPMA UPSC); OSB (ACCT BUSS DCSN ENTM FINA)

Instructor Effectiveness (F, S): 4.4 4.4 4.5 4.3 4.1 4.3 4.4 4.4 4.6 4.6 4.4 4.1 4.1 4.4 4.3 4.2 4.3 4.1 4.0 4.0 4.6 4.1 4.1 4.2 3.8 4.1 4.1 4.4 4.5 4.3 4.2 4.4 4.4 4.4 4.1 4.3 4.3 4.6 4.6 4.2 4.3 4.5 4.4 4.7 4.3 4.4 4.4 3.9 4.0 4.4 4.4 4.5 4.5 4.3 4.4 4.3 4.4 4.3 4.2 4.7 4.0 3.8 4.0 4.3 4.2 4.3 4.4 4.3 4.3 4.4 4.4 4.5 4.3 4.4 4.4

Course Effectiveness (F, S): 4.3 4.3 4.3 4.2 3.9 4.2 4.4 4.4 3.9 4.5 4.5 4.0 4.0 4.2 4.3 4.1 4.2 4.0 4.0 3.8 4.6 4.0 4.1 4.1 3.8 4.1 4.0 4.4 4.4 4.2 4.2 4.3 4.3 4.4 4.1 4.3 4.2 4.5 4.5 4.1 4.2 4.4 4.4 4.5 4.3 4.4 4.3 3.9 4.0 4.3 4.3 4.4 4.4 4.3 4.3 4.2 4.4 4.2 4.1 4.7 3.9 4.0 3.9 4.4 4.1 4.2 4.2 4.2 4.2 4.2 4.3 4.0 4.4 4.3 4.4

Learning Outcomes (F, S): 4.2 4.3 4.4 4.2 3.9 4.2 4.3 4.3 4.7 4.4 4.6 3.8 3.8 4.0 4.2 3.9 4.1 3.7 3.7 4.1 4.6 3.8 3.9 4.0 3.7 3.9 3.9 4.3 4.3 4.0 3.9 4.3 4.3 4.6 4.1 4.1 4.0 4.4 4.4 3.8 4.0 4.4 4.3 4.6 4.3 4.3 4.3 3.6 3.8 4.3 4.3 4.4 4.4 4.2 4.2 3.9 4.0 4.3 4.2 4.7 4.0 3.9 4.0 4.3 4.10 3.9 4.0 4.0 4.1 4.2 4.3 4.6 4.3 4.2 4.4

Departments by faculty (second page): OSB (INFO MFIN MHRM MKTG MNGT); FEA (ARCH CHEN CIVE EECE ENMG ENSC GRDS MECH URDS URPL); FHS (ENHL ENSC EPHD HMPD HPCH LABM MLSP PBHL); HSON (NURS)

Values as listed in the source: 4.2 4.4 4.0 4.4 4.3 3.9 3.9 4.3 4.2 4.2 4.4 4.0 4.1 3.9 4.3 4.5 4.2 4.5 4.5 4.4 3.8 4.3 4.3 4.4 4.0 4.3 4.5 4.5 3.6 3.9 4.2 4.1 4.2 4.0 3.9 4.0 3.8 4.2 4.3 4.5 4.3 4.4 3.7 4.2 4.3 4.2 4.3 3.8 4.4 4.3 4.0 3.9 4.2 4.3 4.0 4.5 4.0 4.1 4.3 4.2 4.5 4.00 4.5 4.4 4.3 3.8 4.3 4.3 4.4 3.9 4.3 4.4 4.4 3.8 3.9 4.1 4.2 4.0 4.1 3.9 4.1 4.0 4.1 4.2 4.5 4.3 4.3 3.6 4.2 4.3 4.1 4.3 3.8 4.4 4.1 3.7 4.2 4.4 3.8 4.3 4.4 4.1 3.5 4.3 4.0 4.3 4.1 4.3 4.2 4.7 4.3 4.3 3.3 4.0 4.3

Conclusion: Accomplishments and Areas of Improvement

ICE results have shown significant improvement this year after a period of stability. Response rates are increasing, and the whole process is being taken more seriously.

The fall and spring administrations went smoothly, as we have become more organized and were able to anticipate problems ahead of time. The on-line administration was a success and reduced the administrative work and paperwork, and the grade hold resulted in an increased response rate averaging around 90%. Faculty were provided with options to add questions and to view and monitor response rates in their respective sections, while students were sent reminders, and periodic reports of progress in the response rate were posted on the OIRA website and shared by email.

The reports generated followed the new Blue format, were more detailed, and identified faculty members’ strengths and challenges. In addition, summary institutional, faculty, and departmental reports were issued to deans and department chairs. These summary reports were also published on the OIRA website for possible review by faculty and students, and this step provided evidence that the evaluations are taken seriously by faculty and by the administration.

Procedures to produce the ICE reports were improved and faculty requests were met.
The OIRA ICE database was integrated with the new Blue software, which enabled us to produce trend reports by teacher, course, or department and/or by item.

Despite the above accomplishments, several problems were encountered that we hope can be overcome in future administrations:

1. The problem of getting up-to-date, accurate information regarding courses/sections offered and their enrollment has improved, though it still exists in some faculties. OIRA is being better provided with up-to-date information on courses with labs and lectures with different instructors, on courses being taught by more than one instructor, and on sections that need to be combined. We obtain the needed information directly from deans’ offices; however, these also do not always have the most up-to-date information, especially with regard to part-timers who have not signed their contracts. To overcome this problem, OIRA has resorted to automatic updating of its database, so that any changes on the system are automatically reflected in the ICE database.
2. OIRA will continue to work on meeting faculty requests for combining course sections for the same instructor, for conducting ICEs for courses with different start and end dates, and for other needs.
3. OIRA will introduce Text Analytics this fall to provide a more in-depth summary of students’ comments. These can be done for a single course, several courses, and departments.
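
The growth in completed evaluations reported in the ICE Administration section can be made concrete with a short calculation. The sketch below uses only the section and evaluation counts quoted in this report; the dictionary keys and function names are illustrative assumptions and are not part of any OIRA or Blue tooling.

```python
# Counts quoted in the ICE Administration section: (course sections, evaluations).
# Keys and function names are illustrative only.
COUNTS = {
    "fall": {"this_year": (2313, 44637), "last_year": (2398, 36478)},
    "spring": {"this_year": (2401, 46787), "last_year": (2397, 33287)},
}


def pct_change(new: float, old: float) -> float:
    """Percentage change from old to new."""
    return 100.0 * (new - old) / old


def evals_per_section(sections: int, evaluations: int) -> float:
    """Average number of completed evaluations per course section."""
    return evaluations / sections


def summarize(term: str) -> dict:
    """Compare this year's counts with last year's for one term."""
    sections_now, evals_now = COUNTS[term]["this_year"]
    sections_prev, evals_prev = COUNTS[term]["last_year"]
    return {
        "evaluations_change_pct": round(pct_change(evals_now, evals_prev), 1),
        "per_section_now": round(evals_per_section(sections_now, evals_now), 1),
        "per_section_prev": round(evals_per_section(sections_prev, evals_prev), 1),
    }


for term in COUNTS:
    print(term, summarize(term))
```

With the quoted counts, completed evaluations rose by roughly 22% in fall and 41% in spring, while the number of course sections was nearly unchanged.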
13 Appendix: ICE Item Averages by Faculty 1 Faculty FAFS FAS OSB FEA FHS HSON AUB F 4.4 4.4 4.5 4.3 4.5 4.5 4.4 Faculty FAFS FAS OSB FEA FHS HSON AUB F 4.2 4.1 4.2 4.0 4.3 4.3 4.1 Faculty FAFS FAS OSB FEA FHS HSON AUB F 4.3 4.1 4.2 4.0 4.4 4.3 4.1 2 S 4.4 4.3 4.5 4.3 4.4 4.5 4.3 F 4.5 4.4 4.5 4.4 4.6 4.5 4.4 S 4.3 4.1 4.3 4.0 4.2 4.1 4.1 F 4.3 4.1 4.3 4.1 4.4 4.4 4.2 S 4.3 4.1 4.3 4.0 4.3 4.3 4.1 F 4.3 4.1 4.2 4.0 4.3 4.4 4.1 6 3 S 4.5 4.3 4.5 4.3 4.6 4.5 4.4 F 4.4 4.2 4.4 4.2 4.4 4.4 4.3 7 12 4 S 4.4 4.2 4.4 4.2 4.4 4.4 4.3 F 4.3 4.2 4.3 4.1 4.4 4.5 4.2 8 S 4.3 4.1 4.3 4.1 4.4 4.3 4.2 F 4.3 4.2 4.3 4.1 4.4 4.4 4.2 F 4.3 4.2 4.3 4.1 4.4 4.4 4.2 14 S 4.3 4.1 4.3 4.0 4.3 4.2 4.1 F 4.2 4.1 4.2 4.0 4.3 4.4 4.1 14 F 4.2 4.0 4.2 4.0 4.3 4.3 4.1 9 S 4.4 4.1 4.3 4.1 4.3 4.3 4.2 13 5 S 4.4 4.2 4.4 4.1 4.3 4.3 4.2 10 S 4.3 4.2 4.3 4.1 4.4 4.4 4.2 F 4.3 4.2 4.3 4.1 4.4 4.4 4.2 15 S 4.3 4.1 4.2 4.0 4.3 4.2 4.1 S 4.2 4.0 4.2 4.0 4.2 4.2 4.1 11 S 4.3 4.2 4.3 4.2 4.4 4.4 4.2 F 4.2 4.1 4.2 4.0 4.3 4.3 4.1 16 S 4.3 4.1 4.2 4.0 4.3 4.1 4.1 17 F 4.2 3.9 4.0 S 4.2 3.9 4.1 F 4.3 4.0 4.2 S 4.4 4.0 4.3 F 4.1 3.9 4.1 S 4.2 3.9 4.1 4.2 4.3 4.0 4.2 4.2 4.0 4.4 4.4 4.2 4.4 4.4 4.2 4.2 4.3 4.0 4.3 4.2 4.0
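
The Results section notes that averages were computed over all course sections rather than only those with response rates of 40% or more. A minimal sketch of how that choice can change a reported mean is given below. The section data are made up for illustration, the names `Section` and `mean_rating` are hypothetical, and the unweighted mean over sections is an assumption; this is not OIRA's actual procedure.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Optional


@dataclass
class Section:
    name: str
    enrolled: int
    responses: int
    rating: float  # section mean on the 1-5 ICE scale

    @property
    def response_rate(self) -> float:
        return self.responses / self.enrolled


def mean_rating(sections: List[Section], min_rate: float = 0.0) -> Optional[float]:
    """Unweighted mean of section ratings, keeping sections at or above min_rate."""
    kept = [s.rating for s in sections if s.response_rate >= min_rate]
    return round(mean(kept), 2) if kept else None


# Hypothetical sections; section B falls below a 40% response rate.
sections = [
    Section("A", enrolled=30, responses=27, rating=4.3),
    Section("B", enrolled=25, responses=8, rating=3.6),
    Section("C", enrolled=40, responses=36, rating=4.1),
]

all_avg = mean_rating(sections)             # every section included
filtered_avg = mean_rating(sections, 0.40)  # only sections with rate >= 40%
print(all_avg, filtered_avg)
```

In this made-up example the low-response section pulls the all-sections mean below the filtered mean, which is why the inclusion rule has to stay the same from year to year for the comparison with previous statistics to hold.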