From: AAAI Technical Report SS-93-07. Compilation copyright © 1993, AAAI (www.aaai.org). All rights reserved.

Classification Learning: From Paradigm Conflicts to Engineering Choices

David L. Waltz

Thinking Machines Corporation and

Brandeis University

Abstract

Classification learning applies to a wide range of tasks, from diagnosis and troubleshooting to pattern recognition and keyword assignment. Many methods have been used to build classification systems, including artificial neural networks, rule-based expert systems (both hand-built and inductively learned), fuzzy rule systems, memory-based and case-based systems and nearest neighbor systems, generalized radial basis functions, classifier systems, and others. Research subcommunities have tended to specialize in one or another of these mechanisms, and many papers have argued for the superiority of one method vis-a-vis others. I will argue that none of these methods is universal, nor does any one method have a priori superiority over all others. To support this argument, I show that all these methods are related, and in fact can be viewed as lying at points along a continuous spectrum, with memory-based methods occupying a pivotal position. I further argue that the selection of one or another of these methods should generally be seen as an engineering choice, even when the research goal is to explore the potential of some method for explaining aspects of cognition; methods and problem areas must be considered together. Finally, a set of properties is identified that can be used to characterize each of the classification methods, and to begin to build an engineering science for classification tasks.

1. Unified Framework for Classification Learning

A wide variety of classification learning methods can be seen as related, as points on a spectrum of methods. Memory-Based Reasoning (MBR) is the key to this analysis. The idea of MBR is to use a training set without modification as the basis of a nearest neighbor classification method. Any new example to be classified is compared to each element in the training set, and the distance from the new example is computed for each training set element. The nearest neighbor (or nearest k neighbors) are found in the training set, and their classifications are used to decide on the classification for the new example. In a single nearest neighbor version of MBR, the class of the closest training set neighbor is assigned to the new example. In a k-nearest neighbor version, if all k nearest neighbors have the same class, it is assigned to the new example; if more than one class appears within the nearest k neighbors, then a voting or distance-weighted voting scheme is used to classify the new example. As stated, MBR has no learning. (It is certainly possible -- and for real world problems generally a good idea -- to include learning with MBR; we will come back to this issue later.)
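The procedure just described can be sketched in a few lines. This is only a minimal illustration, not the implementation used in any of the systems discussed here: the Euclidean distance, the inverse-distance weighting, and the names `distance` and `mbr_classify` are all assumptions made for the example.

```python
import math
from collections import defaultdict

def distance(a, b):
    """Euclidean distance between two equal-length numeric feature vectors
    (an assumed metric; the text does not fix one)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def mbr_classify(training_set, new_example, k=1, weighted=False):
    """Classify new_example from its k nearest cases.

    training_set is a list of (features, label) pairs and is used unmodified.
    """
    # Compare the new example with every element of the training set.
    scored = sorted((distance(features, new_example), label)
                    for features, label in training_set)
    votes = defaultdict(float)
    for d, label in scored[:k]:
        # Plain voting counts each neighbor once; distance-weighted voting
        # (assumed here to be inverse distance) favors closer neighbors.
        votes[label] += 1.0 / (d + 1e-9) if weighted else 1.0
    # Ties go to the label of the nearer neighbor (first one inserted).
    return max(votes, key=votes.get)

# Made-up toy cases, for illustration only.
cases = [((0.0, 0.0), "a"), ((0.0, 1.0), "a"),
         ((1.0, 1.0), "b"), ((5.0, 5.0), "b")]
print(mbr_classify(cases, (0.9, 0.9), k=1))                 # single nearest neighbor
print(mbr_classify(cases, (0.9, 0.9), k=3))                 # simple voting
print(mbr_classify(cases, (0.9, 0.9), k=3, weighted=True))  # distance-weighted voting
```

With k = 1 the single closest case decides; with k = 3 the two votes can disagree with the distance-weighted result, which is why the choice of voting scheme matters near class boundaries.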

First, we can relate MBR to rule-based systems; in particular, if looked at the right way, a single-nearest-neighbor MBR system is already a rule-based system. To see this, note that MBR cases consist of situations and actions, like production rules. There are as many "rules" as there are cases in the MBR training set database. Each "left hand side" is the conjunction of all the features of the case. Each "right hand side" is the classification. Using this observation, we can see that there is a spectrum of rule-based systems between MBR and an "ordinary" rule-based system, with a relatively small number of rules. We can move along this spectrum by using AI learning techniques: for example, we can find irrelevant features by noting that certain left-hand side variables have no correlation with classifications, and can thus be eliminated, yielding shorter rules. Also, some cases may be repeated, and as variables are eliminated, more cases will become identical, and can thus be merged. Moreover, if ranges or sets are used instead of specific variable values, more cases can be collapsed. (Relevant AI methods include ID-3, AQVAL, version spaces, COBWEB, etc.)
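A small sketch may make the move along this spectrum concrete. It treats every case as a rule, drops a feature when doing so never makes two otherwise identical rules disagree, and then merges the rules that have become identical. The consistency test is an assumed, greedy stand-in for the correlation test mentioned above, and the function names and toy data are hypothetical.

```python
def cases_to_rules(cases):
    """Each case becomes one rule: (conjunction of feature=value conditions, class)."""
    return [(frozenset(features.items()), label) for features, label in cases]

def drop_feature(conditions, feature):
    return frozenset(c for c in conditions if c[0] != feature)

def is_irrelevant(rules, feature):
    """Assumed test: the feature is irrelevant if removing it never leaves two
    rules with the same left hand side but different classes."""
    seen = {}
    for conditions, label in rules:
        if seen.setdefault(drop_feature(conditions, feature), label) != label:
            return False
    return True

def simplify(rules, features):
    """Greedily eliminate irrelevant features, then merge identical rules."""
    for feature in features:
        if is_irrelevant(rules, feature):
            rules = [(drop_feature(c, feature), label) for c, label in rules]
    return sorted(set(rules), key=lambda rule: (sorted(rule[0]), rule[1]))

# Made-up toy cases, for illustration only.
cases = [
    ({"fever": "yes", "cough": "yes", "day": "mon"}, "flu"),
    ({"fever": "yes", "cough": "yes", "day": "tue"}, "flu"),
    ({"fever": "no", "cough": "yes", "day": "mon"}, "cold"),
    ({"fever": "no", "cough": "yes", "day": "wed"}, "cold"),
]
for conditions, label in simplify(cases_to_rules(cases), ["day", "cough", "fever"]):
    print(sorted(conditions), "->", label)
```

On this toy data the four case-rules collapse to two short rules that test only one feature. With sparse real data the same greedy test would discard too much, which is one reason the induction methods named above use statistical criteria instead.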

Second, we can also relate MBR to neural nets. If looked at the right way, single-nearest-neighbor MBR systems are a kind of neural net, namely one with as many hidden units as there are examples in the training set. Each input unit codes for a feature/variable, and is fully connected to each hidden unit. There are as many output units as there are possible classifications, and each hidden unit has a single link to the appropriate output unit. All the hidden units are connected in a "winner-take-all" network configuration. It is also possible to define a spectrum of methods between MBR and "ordinary" neural nets. We can reduce the number of MBR hidden units by a number of methods: we can remove duplicate cases; we can replace similar cases with a single case, using methods similar to those of generalized radial basis functions, which yield a set of K hidden units, each with a central pseudo-case, together with radii of influence (this also has close relations to Grossberg’s ART system); we can use methods like those of Kibler and Aha, who remove cases whose neighbors all have the same classification, leaving only those cases that are on the boundaries between regions of similar categorizations; etc.
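The last of these reductions is easy to sketch. The version below is a simplified single pass written for illustration, not Kibler and Aha's actual algorithm: it keeps a case only if at least one of its k nearest other cases has a different class. The function names, the Euclidean metric, and the choice k = 2 are assumptions.

```python
import math

def nearest_others(cases, index, k):
    """Return the k cases closest to cases[index], excluding that case itself."""
    target = cases[index][0]
    others = [case for i, case in enumerate(cases) if i != index]
    return sorted(others, key=lambda case: math.dist(case[0], target))[:k]

def keep_boundary_cases(cases, k=2):
    """Drop cases whose k nearest neighbors all share their class."""
    kept = []
    for i, (features, label) in enumerate(cases):
        neighbors = nearest_others(cases, i, k)
        if any(neighbor_label != label for _, neighbor_label in neighbors):
            kept.append((features, label))
    return kept

# Made-up one-dimensional cases: class "a" on the left, class "b" on the right.
cases = [((0.0,), "a"), ((1.0,), "a"), ((2.0,), "a"),
         ((3.0,), "b"), ((4.0,), "b"), ((5.0,), "b")]
print(keep_boundary_cases(cases))
```

Here only the two cases that face each other across the class boundary survive, so the corresponding network would need two hidden units instead of six.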

2. Relative advantages and disadvantages of various methods

For the past few years, I and a number of my colleagues have been involved with several projects that have allowed us to compare various classification learning methods. I will concentrate here on examples where we have been able to compare the results of various systems quantitatively. Examples include MBRTalk and the research of Wolpert, which compared neural nets with MBR; PACE, a system that classified Census Bureau returns, and allowed direct comparison with AIOCS, an expert system; a memory-based system for assigning keywords to news articles that can be compared with CONSTRUE, an expert system for a very similar task, as well as with human indexers; and work on PHIPSI, a system for protein secondary structure prediction, which let us compare neural nets with MBR as well as with statistical techniques. Other projects have let us compare MBR with CART and various statistical regression methods.

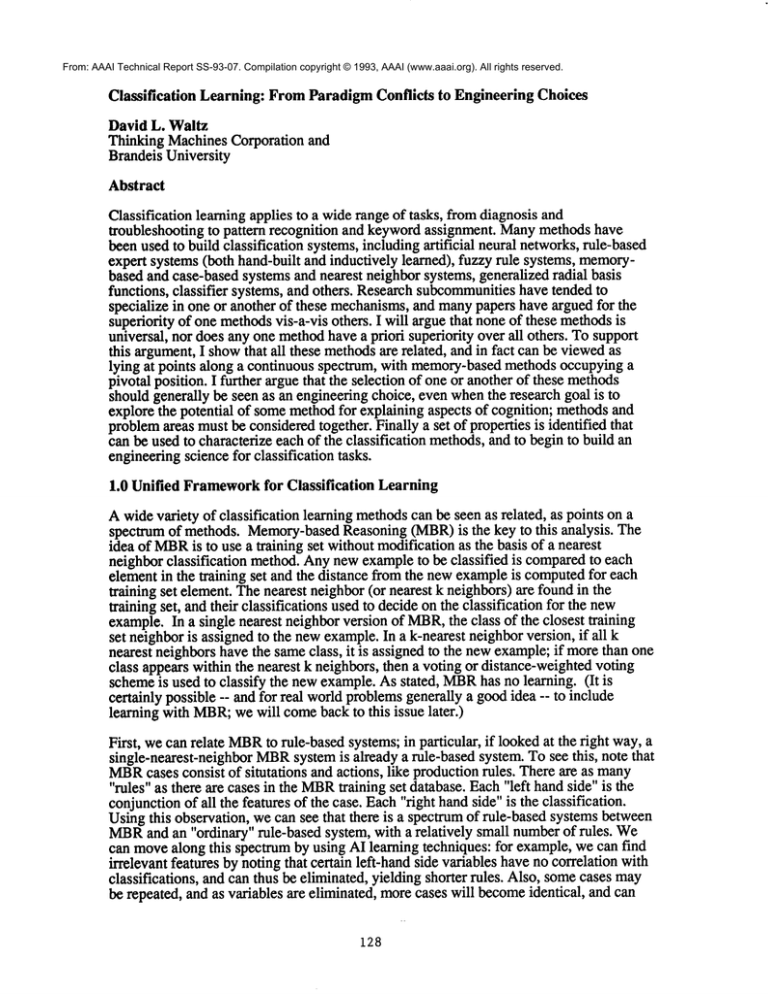

These various systems can be compared along a number of dimensions. These include 1) quality of classification decisions; 2) computational cost; 3) programmer effort required; 4) memory requirements; 5) learning cost; 6) update cost; 7) ability to generalize or extrapolate beyond a training set; 8) ability to scale to very large training sets and/or to very large numbers of categories; 9) ability to provide an explanation for classifications; 10) range of inputs handled (e.g. numbers, symbolic variables, free text, etc.); and 11) ability to provide a confidence score for classifications. The properties of each of the various classification methods are characterized, and compared with the requirements for various classification tasks.

Many of these dimensions are summarized in the following table:

        Accu-   Learn   Decsn   Progr   Updte   Mem     Expl?   Conf    Text?   Noise   Scales?
        racy    Cost    Cost    Cost    Cost    Reqts           meas?           Tol?

MBR     ++      ++      --      +       ++      --      +
ANNs    +       -       ++      ++      -       +
ID-3    +       -       ++      +       -?
GRBF    ++      -       +       ~?      +       -?
RBSs    +?      -       ++

Key to abbreviations

MBR = Memory-Based Reasoning
ANNs = Artificial Neural Nets
ID-3 = Quinlan’s system or CART
GRBF = Generalized Radial Basis Functions (Poggio)
RBSs = Rule-Based Systems (Expert Systems)
Accuracy = level of correctness of classifications
Learn Cost = computational cost of learning
Decsn Cost = cost of making a classification, once the system has been trained
Progr Cost = programmer cost, the amount of human effort required to build a system
Updte Cost = computational and/or human cost to train/reprogram system to correctly classify new cases
Mem Reqts = memory space requirements
Expl? = does the system provide an explanation of its behavior?
Conf meas? = does the system provide a measure of its confidence in its classification?
Text? = can free text be handled as an input?
Noise Tol? = is the system tolerant to noise? (or is it "brittle"?)
Scales? = can the system be scaled up to deal with really large training sets and/or high numbers of classifications per second?
++ = very strong point
+ = strong point
~ = a mixed bag
- = weak point/system not good at this
-- = very weak point/system can’t do this, or does badly
? = hard to judge

Highlights of the table

MBR has been the most accurate method -- or among the two or three most accurate -- on every domain we’ve explored, as long as the number of cases is very large (the larger the number of cases, the more likely that one of them will be an exact or nearly exact match for a new case). MBR does not require learning in its simplest form; all other methods either require considerable resources for learning, or else can’t be learned at all and must therefore be hand-coded. Updates cost little -- one simply adds new items to the case-base and removes old ones, and the system immediately begins making classifications based on the new information. MBR provides explanations for its classifications (in terms of precedents). MBR provides confidence measures (i.e. nearness of nearest neighbors), unlike any other methods. And MBR can use text as inputs (e.g. by using methods borrowed from Information Retrieval to judge the relative similarity of free text passages). An MBR system’s great drawback is that it requires large amounts of memory, and large computational power for decision-making.
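Three of these properties (cheap updates, explanations by precedent, and a confidence measure based on nearness) can be shown together in a short sketch. The `CaseBase` class, its method names, and the particular confidence formula 1 / (1 + distance) are assumptions chosen for illustration; the text says only that confidence reflects the nearness of the nearest neighbors.

```python
import math

class CaseBase:
    """A hypothetical minimal MBR case-base over numeric feature tuples."""

    def __init__(self):
        self.cases = []  # list of (features, label)

    def add(self, features, label):
        # Updating is just storing the new case; nothing is retrained.
        self.cases.append((tuple(features), label))

    def remove(self, features, label):
        self.cases.remove((tuple(features), label))

    def classify(self, features, k=1):
        """Return (label, confidence, precedents) for a non-empty case-base."""
        ranked = sorted(self.cases, key=lambda case: math.dist(case[0], features))
        counts = {}
        for _, label in ranked[:k]:
            counts[label] = counts.get(label, 0) + 1
        winner = max(counts, key=counts.get)
        # The explanation is the list of near cases that voted for the winner.
        precedents = [case for case in ranked[:k] if case[1] == winner]
        nearest = math.dist(precedents[0][0], features)
        # Assumed confidence measure: 1.0 for an exact match, falling with distance.
        return winner, 1.0 / (1.0 + nearest), precedents

cb = CaseBase()
cb.add((0, 0), "a")
cb.add((0, 1), "a")
cb.add((5, 5), "b")
print(cb.classify((2, 2)))   # decided by the precedent (0, 1)
cb.add((2, 3), "b")          # new information takes effect immediately
print(cb.classify((2, 2)))   # now decided by the precedent (2, 3)
```

The cost side of the trade-off is visible as well: every call to `classify` scans and sorts the entire case-base, which is the memory and decision-time burden noted above.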

Artificial Neural Nets are easy to program and require very little computational power in order to make classifications, once a network is trained. They are, however, expensive to train or update, provide no explanations or justifications, provide no confidence measures, and cannot deal with free text inputs.

Decision trees (ID-3, CART, ...) require only small computational power to make decisions, once the tree is learned.

Generalized Radial Basis Functions have been proven to have high accuracy.

Rule-based Systems are computationally cheap once built, can deal with free text inputs, can supply explanations (in the form of the sequence of rules applied to obtain the classification), and, at least in some forms, can give a confidence measure. However, they require large programmer ("knowledge engineer") effort to build or update, are intolerant of noise ("brittle") -- unlike the rest of the methods considered here -- and they scale poorly: few, if any, rule-based systems exceed 1,000 rules.

3. Understanding the properties of various methods -- toward an engineering science for classification tasks

In the long run, hardware costs will become negligible, designs will be completed and implemented, and systems will be fielded. The most critical issue then remaining is accuracy: can a system actually classify reliably? Can it track changes? The accuracy that can be achieved by any given system depends a great deal on the underlying nature of the classification domain.

AI has commonly assumed that a very small number of rules or principles underlie each domain. On this view, the goal of a learning system is to find the compact, concise rule set that captures the domain. The most extreme example is a physical law, e.g. Newton’s law of motion, F = ma, as found by a Bacon-like system. A similar spirit motivates MDL (Minimum Description Length) learning, AQVAL, etc. Believers in such systems point to evidence of bad performance due to "overtraining" of neural nets.

But many domains are not simple, and for these, rules may simply be inappropriate (or at best only appropriate for some fraction of the domain). The first example we stumbled on was NETtalk, Terry Sejnowski and Charlie Rosenberg’s neural net system for pronouncing English words: MBR gave dramatically higher generalization accuracy than did NETtalk. The reason is, I think, closely related to the fact that English pronunciation is patterned, but has a vast number of exceptions. Neural nets (or rule-based systems) can capture the regularities, but only a finite portion of the domain is regular, and after some point, one may need to add a new rule (or hidden unit) for each new example. The domain where the number of rules is proportional to the number of examples is, by definition, MBR. To give one concrete example: NETtalk never learned to pronounce "psychology" correctly because there was only one item (out of 4000) in the training set that had initial "psy..." and therefore no hidden unit developed to recognize this possibility. With MBR, even a single example that matches exactly dominates all others, so MBR was able to find and respond appropriately to "psy...". I suspect that many domains have this property of a few common patterns that can be captured by rules, shading into large numbers of exceptions and idiosyncratic examples: medical diagnosis, software or hardware troubleshooting, linguistic structures of actual utterances, motifs for protein structures (another domain we’ve looked at closely), etc. all have this form. This form is similar to Zipf’s law for the relative occurrence of various words in a language’s vocabulary. (Zipf’s law states that "The relative frequency of a word is inversely proportional to the rank of the word." "Rank" refers to a word’s order of frequency in the language; very common words such as "the" and "a" have ranks at or near 1, whereas very rare words have high rank.) The upshot is that, for many domains of interest, we may need MBR-like abilities to handle the residue of the domain that can’t be captured by a small number of rules. Of course, since MBR can work as a rule-based system as well, it is useful as a uniform, general method. Perhaps the best option would be an MBR that learned to find the minimum number of examples that would cover a domain, using clustering, duplicate removal, and other learning methods.
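A little arithmetic shows how large that residue can be. The sketch below assumes, purely for illustration, that the patterns in a domain follow Zipf's law exactly (frequency proportional to 1/rank) and that one rule captures one pattern; the figure of 10,000 distinct patterns and the function names are hypothetical.

```python
def harmonic(n):
    """Sum of 1/r over ranks r = 1..n."""
    return sum(1.0 / r for r in range(1, n + 1))

def coverage(num_rules, num_patterns):
    """Fraction of all occurrences accounted for by the num_rules most frequent
    patterns, assuming frequency is exactly proportional to 1/rank."""
    return harmonic(num_rules) / harmonic(num_patterns)

# Hypothetical domain with 10,000 distinct patterns.
for num_rules in (10, 100, 1000):
    print(num_rules, "rules cover", round(coverage(num_rules, 10_000), 2))
```

Under these assumptions ten rules account for roughly 30 percent of the occurrences, and even a thousand rules leave roughly a quarter of them uncovered: the first few rules pay off handsomely, but each further rule buys less, which is the pattern of diminishing returns described above.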

Until we better understand learning methods and the domains to which we want to apply them, the best methodology may well be to try a variety of learning methods, and then keep the one that gives the best results within budget limitations. I don’t want to leave the impression that MBR is a panacea: far from it. Any given learning method will be best for at least some domains. It is an important research goal to understand and generate a priori guidelines for matching a learning method with a particular domain.
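The try-several-and-keep-the-best methodology can itself be written down as a small procedure. The sketch below is one possible reading, with assumptions throughout: each candidate method is a hypothetical `(cost, fit)` pair in arbitrary cost units, accuracy is measured on a held-out test set, and the two toy learners stand in for real methods.

```python
from collections import Counter

def fit_majority(train):
    """Baseline learner: always predict the most common training label."""
    majority = Counter(label for _, label in train).most_common(1)[0][0]
    return lambda x: majority

def fit_nearest(train):
    """Single-nearest-neighbor learner on one numeric feature."""
    return lambda x: min(train, key=lambda case: abs(case[0] - x))[1]

def select_method(candidates, train, test, budget):
    """Return (name, accuracy) of the most accurate method costing <= budget."""
    best_name, best_accuracy = None, -1.0
    for name, (cost, fit) in candidates.items():
        if cost > budget:
            continue  # over budget: not considered at all
        classify = fit(train)
        accuracy = sum(1 for x, y in test if classify(x) == y) / len(test)
        if accuracy > best_accuracy:
            best_name, best_accuracy = name, accuracy
    return best_name, best_accuracy

# Made-up data and made-up costs, for illustration only.
train = [(0.0, "a"), (1.0, "a"), (2.0, "a"), (8.0, "b"), (9.0, "b")]
test = [(0.5, "a"), (8.5, "b"), (7.0, "b"), (1.5, "a")]
candidates = {"majority": (1, fit_majority), "nearest": (5, fit_nearest)}
print(select_method(candidates, train, test, budget=10))
print(select_method(candidates, train, test, budget=2))
```

With the larger budget the nearest-neighbor learner wins on this toy data; with the smaller budget only the baseline is affordable, so the answer changes even though the data do not. This is the sense in which the choice is an engineering one.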

References will be provided in a future, fuller account of these ideas.
