European Conference on Complex Systems ’09 Programme & Abstracts

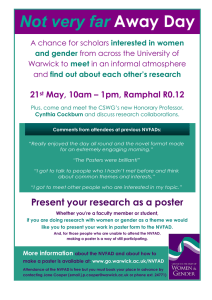
