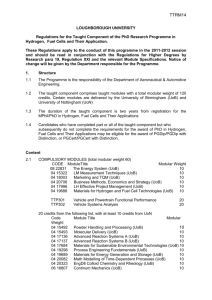

advertisement

Particle Markov Chain Monte Carlo

Christophe Andrieu

University of Bristol

(joint with A. Doucet & R. Holenstein)

UoB

16/03/2009

C.A. (UoB)

PMCMC

16/03/2009

1 / 33

Background

Aim: sample from a probability distribution π with difficult patterns of dependence of its possibly numerous components.

Sampling directly from π is rarely possible and the most popular techniques are

Markov chain Monte Carlo (MCMC),

Sequential Monte Carlo (SMC), aka particle filters.

Both classes of methods require the design of so-called proposal distributions whose aim is to propose samples which are then corrected in order to produce samples from π.

The design of such instrumental distributions is difficult since

they should be “easy” to sample from,

they should capture important features of the target distribution π (scale, dependence structure).

The success of MCMC and SMC relies on their ability to break down the difficult problem of sampling from π into simpler sub-problems.

Objectives

Given their differing strengths it seems natural to combine MCMC and SMC.

We know how to use MCMC within SMC (Gilks & Berzuini, JRSS B, 2001), but what about the reverse?

We show here how to use SMC as a proposal mechanism in MCMC in a simple and principled way.

In particular we show that this can simplify the design of proposal distributions in numerous scenarios.

Motivating Example: General State-Space Models

Let $\{X_k\}_{k \ge 1}$ be a Markov process defined by

$$X_1 \sim \mu \quad \text{and} \quad X_k \mid (X_{k-1} = x_{k-1}) \sim f(\cdot \mid x_{k-1}).$$

We only have access to a process $\{Y_k\}_{k \ge 1}$ such that, conditional upon $\{X_k\}_{k \ge 1}$, the observations are statistically independent and

$$Y_k \mid (X_k = x_k) \sim g(\cdot \mid x_k).$$

Bayesian Inference in General State-Space Models

Given a collection of observations $y_{1:T} := (y_1, \ldots, y_T)$, we are interested in inference about $X_{1:T} := (X_1, \ldots, X_T)$.

In this Bayesian framework, inference relies on

$$p(x_{1:T} \mid y_{1:T}) = \frac{p(x_{1:T}, y_{1:T})}{p(y_{1:T})}$$

where

$$p(x_{1:T}, y_{1:T}) = \underbrace{\mu(x_1) \prod_{k=2}^{T} f(x_k \mid x_{k-1})}_{\text{prior}} \; \underbrace{\prod_{k=1}^{T} g(y_k \mid x_k)}_{\text{likelihood}}.$$

Except for a few models including finite state-space HMM and linear Gaussian models, this posterior distribution does not admit a closed-form expression.

Standard MCMC Approaches

We sample an ergodic Markov chain $\{X_{1:T}(i)\}$ of invariant distribution $p(x_{1:T} \mid y_{1:T})$ using a Metropolis-Hastings (MH) algorithm; e.g. an independent MH sampler.

At iteration i

Sample $X^*_{1:T} \sim q(x_{1:T})$.

W.p. $1 \wedge \dfrac{p(X^*_{1:T}, y_{1:T}) \, q(X_{1:T}(i-1))}{q(X^*_{1:T}) \, p(X_{1:T}(i-1), y_{1:T})}$ set $X_{1:T}(i) = X^*_{1:T}$, otherwise set $X_{1:T}(i) = X_{1:T}(i-1)$.

Very simple but very inefficient.

For good performance, we need to select $q(x_{1:T}) \approx p(x_{1:T} \mid y_{1:T})$ but this is essentially impossible as soon as, say, T > 10.

Errors tend to accumulate.
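The independent MH step above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the talk's implementation: the names `independent_mh`, `log_p`, `sample_q` and `log_q` are hypothetical, and the one-dimensional Gaussian target and proposal are a toy stand-in for $p(x_{1:T}, y_{1:T})$ and $q(x_{1:T})$.

```python
import math
import random


def independent_mh(log_p, sample_q, log_q, n_iter, rng):
    """Independent MH: the proposal q ignores the current state of the chain."""
    x = sample_q(rng)
    log_w = log_p(x) - log_q(x)  # log of p(x, y) / q(x) at the current state
    chain, n_accepted = [x], 0
    for _ in range(n_iter - 1):
        x_new = sample_q(rng)
        log_w_new = log_p(x_new) - log_q(x_new)
        # Accept w.p. 1 ^ [p(x*, y) q(x)] / [q(x*) p(x, y)], computed in log space.
        if math.log(1.0 - rng.random()) < log_w_new - log_w:
            x, log_w = x_new, log_w_new
            n_accepted += 1
        chain.append(x)
    return chain, n_accepted / (n_iter - 1)


# Toy illustration (assumed): unnormalised N(0, 1) target, N(0, 2^2) proposal.
# Normalising constants are dropped because they cancel in the acceptance ratio.
def log_p(x):
    return -0.5 * x * x


def sample_q(rng):
    return rng.gauss(0.0, 2.0)


def log_q(x):
    return -0.5 * (x / 2.0) ** 2


chain, rate = independent_mh(log_p, sample_q, log_q, 5000, random.Random(0))
print(f"acceptance rate {rate:.2f}, sample mean {sum(chain) / len(chain):.3f}")
```

In one dimension a slightly over-dispersed proposal works well; the difficulty described on the slide is that no such $q$ is available once $x_{1:T}$ has more than a handful of components.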

Common Approaches and Limitations

Standard practice consists of building an MCMC kernel updating subblocks

$$p(x_{n:n+K-1} \mid y_{1:T}, x_{1:n-1}, x_{n+K:T}) \propto \prod_{k=n}^{n+K} f(x_k \mid x_{k-1}) \prod_{k=n}^{n+K-1} g(y_k \mid x_k).$$

Tradeoff between the length K of the blocks and the ease of design of good proposals.

Many complex models (e.g. volatility models, infinite HMM) are such that it is only possible to sample forward from the prior but impossible to evaluate it pointwise: how could one use MCMC for such models?

Sequential Monte Carlo aka Particle Filters

SMC methods provide an alternative way to approximate $p(x_{1:T} \mid y_{1:T})$ and to compute $p(y_{1:T})$.

To sample from $p(x_{1:T} \mid y_{1:T})$, SMC proceeds sequentially by first approximating $p(x_1 \mid y_1)$ at time 1, then $p(x_{1:2} \mid y_{1:2})$ at time 2, and so on.

SMC methods approximate the distributions of interest via a cloud of N particles which are propagated using Importance Sampling and Resampling steps.

Vanilla SMC - The Bootstrap Filter

At time 1

Sample $X_1^{(i)} \sim \mu(x_1)$ and compute $W_1^{(i)} \propto g(y_1 \mid X_1^{(i)})$, $\sum_{i=1}^{N} W_1^{(i)} = 1$.

Resample N times from $\hat{p}(x_1 \mid y_1) = \sum_{i=1}^{N} W_1^{(i)} \delta_{X_1^{(i)}}(x_1)$.

At time k, k > 1

Sample $X_k^{(i)} \sim f(x_k \mid X_{k-1}^{(i)})$, set $X_{1:k}^{(i)} = (X_{1:k-1}^{(i)}, X_k^{(i)})$ and compute $W_k^{(i)} \propto g(y_k \mid X_k^{(i)})$, $\sum_{i=1}^{N} W_k^{(i)} = 1$.

Resample N times from $\hat{p}(x_{1:k} \mid y_{1:k}) = \sum_{i=1}^{N} W_k^{(i)} \delta_{X_{1:k}^{(i)}}(x_{1:k})$.
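The two-stage recursion above (propagate and weight, then resample) can be sketched as follows. This is a hedged illustration rather than the author's code: `bootstrap_filter`, `normal_pdf` and the linear Gaussian toy model (`sample_mu`, `sample_f`, `g`) are assumptions made for the example, and resampling is multinomial. The sketch resamples at the start of each step k > 1 instead of at the end of step k - 1, which is the same operation, so the final population is returned weighted rather than resampled.

```python
import math
import random


def normal_pdf(x, mean, sd):
    return math.exp(-0.5 * ((x - mean) / sd) ** 2) / (sd * math.sqrt(2.0 * math.pi))


def bootstrap_filter(ys, sample_mu, sample_f, g, n, rng):
    """Bootstrap filter; returns paths X_{1:T}^{(i)} and normalised weights W_T^{(i)}."""
    paths = [[sample_mu(rng)] for _ in range(n)]
    weights = [g(ys[0], p[-1]) for p in paths]
    for y in ys[1:]:
        # Resample N paths according to the current weights (multinomial resampling).
        ancestors = rng.choices(range(n), weights=weights, k=n)
        # Extend every resampled path with a draw from f(. | x_{k-1}).
        paths = [paths[a] + [sample_f(paths[a][-1], rng)] for a in ancestors]
        # After resampling, the new weights are proportional to g(y_k | x_k).
        weights = [g(y, p[-1]) for p in paths]
    total = sum(weights)
    return paths, [w / total for w in weights]


# Toy model (assumed): X_1 ~ N(0, 1), X_k = 0.9 X_{k-1} + V_k, Y_k = X_k + W_k,
# with V_k and W_k standard normal.
def sample_mu(rng):
    return rng.gauss(0.0, 1.0)


def sample_f(x_prev, rng):
    return rng.gauss(0.9 * x_prev, 1.0)


def g(y, x):
    return normal_pdf(y, x, 1.0)


ys = [0.3, -0.2, 0.8, 0.5, -0.4]
paths, weights = bootstrap_filter(ys, sample_mu, sample_f, g, 500, random.Random(0))
estimate = sum(w * p[-1] for w, p in zip(weights, paths))
print(f"estimate of E[X_T | y_1:T]: {estimate:.3f}")
```

Storing whole paths makes path degeneracy visible: after a few resampling steps most particles share the same early ancestors.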

[Figures, slides 10 to 16: two stacked panels plotting state (vertical axis, 0.4 to 1.6) against time index (horizontal axis, 5 to 25). The first figure has no caption.]

Figure: $p(x_1 \mid y_1)$, $p(x_2 \mid y_{1:2})$ and $\hat{E}[X_1 \mid y_1]$, $\hat{E}[X_2 \mid y_{1:2}]$ (top) and particle approximation of $p(x_{1:2} \mid y_{1:2})$ (bottom)

Figure: $p(x_k \mid y_{1:k})$ and $\hat{E}[X_k \mid y_{1:k}]$ for k = 1, 2, 3 (top) and particle approximation of $p(x_{1:3} \mid y_{1:3})$ (bottom)

Figure: $p(x_k \mid y_{1:k})$ and $\hat{E}[X_k \mid y_{1:k}]$ for k = 1, ..., 4 (top) and particle approximation of $p(x_{1:4} \mid y_{1:4})$ (bottom)

Figure: $p(x_k \mid y_{1:k})$ and $\hat{E}[X_k \mid y_{1:k}]$ for k = 1, ..., 10 (top) and particle approximation of $p(x_{1:10} \mid y_{1:10})$ (bottom)

Figure: $p(x_k \mid y_{1:k})$ and $\hat{E}[X_k \mid y_{1:k}]$ for k = 1, ..., 20 (top) and particle approximation of $p(x_{1:20} \mid y_{1:20})$ (bottom)

Figure: $p(x_k \mid y_{1:k})$ and $\hat{E}[X_k \mid y_{1:k}]$ for k = 1, ..., 24 (top) and particle approximation of $p(x_{1:24} \mid y_{1:24})$ (bottom)

SMC Output

At time T, we obtain the following approximation of the posterior of interest $p(x_{1:T} \mid y_{1:T})$

$$\hat{p}(x_{1:T} \mid y_{1:T}) = \sum_{i=1}^{N} W_T^{(i)} \delta_{X_{1:T}^{(i)}}(x_{1:T})$$

and an approximation of $p(y_{1:T})$ given by

$$\hat{p}(y_{1:T}) = \hat{p}(y_1) \prod_{k=2}^{T} \hat{p}(y_k \mid y_{1:k-1}) = \prod_{k=1}^{T} \left( \frac{1}{N} \sum_{i=1}^{N} g(y_k \mid X_k^{(i)}) \right).$$

These approximations are asymptotically (i.e. N → ∞) consistent under very weak assumptions.
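The estimate of $p(y_{1:T})$ is a by-product of the filter: at each time step, average the unnormalised weights and multiply the averages together. The sketch below accumulates the product in log space; `log_likelihood_estimate` and the linear Gaussian toy model are assumptions chosen for illustration, because for that model the exact value is available from a Kalman filter and can serve as a check.

```python
import math
import random


def normal_pdf(x, mean, sd):
    return math.exp(-0.5 * ((x - mean) / sd) ** 2) / (sd * math.sqrt(2.0 * math.pi))


def log_likelihood_estimate(ys, sample_mu, sample_f, g, n, rng):
    """Bootstrap-filter estimate of log p(y_{1:T}), keeping only current particles."""
    particles = [sample_mu(rng) for _ in range(n)]
    log_lik = 0.0
    for k, y in enumerate(ys):
        if k > 0:
            # Resample with the previous weights, then propagate through f.
            ancestors = rng.choices(range(n), weights=w, k=n)
            particles = [sample_f(particles[a], rng) for a in ancestors]
        w = [g(y, x) for x in particles]  # unnormalised weights g(y_k | X_k^(i))
        log_lik += math.log(sum(w) / n)   # log of (1/N) sum_i g(y_k | X_k^(i))
    return log_lik


# Toy model (assumed): X_1 ~ N(0, 1), X_k = 0.9 X_{k-1} + V_k, Y_k = X_k + W_k,
# with V_k and W_k standard normal.
def sample_mu(rng):
    return rng.gauss(0.0, 1.0)


def sample_f(x_prev, rng):
    return rng.gauss(0.9 * x_prev, 1.0)


def g(y, x):
    return normal_pdf(y, x, 1.0)


ys = [0.3, -0.2, 0.8, 0.5, -0.4]
print(log_likelihood_estimate(ys, sample_mu, sample_f, g, 2000, random.Random(0)))
```

The estimate $\hat{p}(y_{1:T})$ itself is unbiased, while its logarithm is not; the sketch works in log space only for numerical stability.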

[Figure, slide 18: two stacked panels plotting state against time index.]

Figure: $p(x_k \mid y_{1:k})$ and $\hat{E}[X_k \mid y_{1:k}]$ for k = 1, ..., 24 (top) and particle approximation of $p(x_{1:24} \mid y_{1:24})$ (bottom)

Some Theoretical Results

Under mixing assumptions (Del Moral, 2004), we have

$$\left\| \mathrm{Law}(X_{1:T}) - p(x_{1:T} \mid y_{1:T}) \right\|_{tv} \le C \frac{T}{N}$$

where $X_{1:T} \sim \hat{p}(x_{1:T} \mid y_{1:T})$.

Under mixing assumptions (Chopin, 2004; Del Moral & D., 2004) we also have

$$\frac{V[\hat{p}(y_{1:T})]}{p^2(y_{1:T})} \le D \frac{T}{N}.$$

Loosely speaking, the performance of SMC only degrades linearly with time instead of exponentially for naive approaches.

Combining MCMC and SMC

‘Idea’: Use the output of our SMC algorithm to define ‘good’ high-dimensional proposal distributions for MCMC.

Problem: Consider $X_{1:T} \sim \hat{p}(x_{1:T} \mid y_{1:T})$ then

$$\mathrm{Law}(X_{1:T}) := q(x_{1:T}) = E\left( \sum_{i=1}^{N} W_T^{(i)} \delta_{X_{1:T}^{(i)}}(x_{1:T}) \right).$$

However, $q(x_{1:T})$ does not admit a closed-form expression and so we cannot directly use the MH algorithm to correct for the discrepancy between $q(x_{1:T})$ and $p(x_{1:T} \mid y_{1:T})$.

Hence the need for a new methodology...

Particle IMH Sampler

At iteration 1

Run an SMC algorithm to obtain $\hat{p}^{(1)}(x_{1:T} \mid y_{1:T})$ and $\hat{p}^{(1)}(y_{1:T})$.

Sample $X_{1:T}(1) \sim \hat{p}^{(1)}(x_{1:T} \mid y_{1:T})$.

At iteration i; i ≥ 2

Run an SMC algorithm to obtain $\hat{p}^{*}(x_{1:T} \mid y_{1:T})$ and $\hat{p}^{*}(y_{1:T})$.

Sample $X^*_{1:T} \sim \hat{p}^{*}(x_{1:T} \mid y_{1:T})$.

W.p. $1 \wedge \dfrac{\hat{p}^{*}(y_{1:T})}{\hat{p}^{(i-1)}(y_{1:T})}$ set $X_{1:T}(i) = X^*_{1:T}$ and $\hat{p}^{(i)}(y_{1:T}) = \hat{p}^{*}(y_{1:T})$, otherwise set $X_{1:T}(i) = X_{1:T}(i-1)$ and $\hat{p}^{(i)}(y_{1:T}) = \hat{p}^{(i-1)}(y_{1:T})$.
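The PIMH iteration above translates almost line by line into code. What follows is a sketch under assumptions, not a reference implementation: `smc` is a compact bootstrap filter with multinomial resampling that returns one path drawn from the particle approximation together with the log of the likelihood estimate, and the linear Gaussian toy model is hypothetical.

```python
import math
import random


def normal_pdf(x, mean, sd):
    return math.exp(-0.5 * ((x - mean) / sd) ** 2) / (sd * math.sqrt(2.0 * math.pi))


def smc(ys, sample_mu, sample_f, g, n, rng):
    """One bootstrap-filter run: a path drawn from p_hat(x | y) and log p_hat(y)."""
    paths = [[sample_mu(rng)] for _ in range(n)]
    log_lik = 0.0
    for k, y in enumerate(ys):
        if k > 0:
            ancestors = rng.choices(range(n), weights=w, k=n)
            paths = [paths[a] + [sample_f(paths[a][-1], rng)] for a in ancestors]
        w = [g(y, p[-1]) for p in paths]
        log_lik += math.log(sum(w) / n)
    # Draw one path with probability proportional to its final weight.
    return rng.choices(paths, weights=w, k=1)[0], log_lik


def pimh(ys, sample_mu, sample_f, g, n, n_iter, rng):
    """Particle independent MH: every proposal is a fresh SMC run."""
    path, log_lik = smc(ys, sample_mu, sample_f, g, n, rng)
    chain = [path]
    for _ in range(n_iter - 1):
        path_new, log_lik_new = smc(ys, sample_mu, sample_f, g, n, rng)
        # Accept w.p. 1 ^ p_hat*(y) / p_hat^(i-1)(y).
        if math.log(1.0 - rng.random()) < log_lik_new - log_lik:
            path, log_lik = path_new, log_lik_new
        chain.append(path)
    return chain


# Toy model (assumed): X_1 ~ N(0, 1), X_k = 0.9 X_{k-1} + V_k, Y_k = X_k + W_k,
# with V_k and W_k standard normal.
def sample_mu(rng):
    return rng.gauss(0.0, 1.0)


def sample_f(x_prev, rng):
    return rng.gauss(0.9 * x_prev, 1.0)


def g(y, x):
    return normal_pdf(y, x, 1.0)


ys = [0.3, -0.2, 0.8, 0.5, -0.4]
chain = pimh(ys, sample_mu, sample_f, g, 50, 400, random.Random(0))
print(sum(path[-1] for path in chain) / len(chain))  # estimate of E[X_T | y_1:T]
```

Only the likelihood estimate of the current state has to be stored between iterations, which is why the sampler tracks `log_lik` alongside the path.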

[Figures, slides 22 and 23: two stacked panels plotting state (vertical axis, 0.4 to 1.6) against time index (horizontal axis, 5 to 25).]

Figure: $p(x_1 \mid y_1)$, $p(x_2 \mid y_{1:2})$ and $\hat{E}[X_1 \mid y_1]$, $\hat{E}[X_2 \mid y_{1:2}]$ (top) and particle approximation of $p(x_{1:2} \mid y_{1:2})$ (bottom)

Figure: $p(x_k \mid y_{1:k})$ and $\hat{E}[X_k \mid y_{1:k}]$ for k = 1, 2, 3 (top) and particle approximation of $p(x_{1:3} \mid y_{1:3})$ (bottom)

Main result

Consider the distribution of all the r.v. generated by the particle filter

$$\psi\left(x_1^{1:N}, \ldots, x_T^{1:N}, a_1^{1:N}, \ldots, a_{T-1}^{1:N}\right)$$

Proposition. For any N ≥ 1 the PIMH sampler is an independent MH sampler defined on the extended space $\{1, \ldots, N\} \times X^{TN} \times \{1, \ldots, N\}^{(T-1)N}$ with target density

$$\tilde{\pi}\left(k, x_1^{1:N}, \ldots, x_T^{1:N}, a_1^{1:N}, \ldots, a_{T-1}^{1:N}\right) = \frac{1}{N^T} \, \frac{p(x_{1:T}^{k} \mid y_{1:T}) \; \psi\left(x_1^{1:N}, \ldots, x_T^{1:N}, a_{1:T-1}^{1:N}\right)}{\mu(x_1^{b_1^k}) \prod_{n=2}^{T} r(b_{n-1}^{k} \mid w_{n-1}) \, f(x_n^{b_n^k} \mid x_{n-1}^{b_{n-1}^k})}$$

which admits a conditional distribution for $x_{1:T}^{k}$ equal to $p(x_{1:T} \mid y_{1:T})$. Here $b_n^k$ is the index at time n of the ancestor of particle k at time T, so that $x_{1:T}^{k} = (x_1^{b_1^k}, \ldots, x_T^{b_T^k})$ with $b_T^k = k$, and $r(\cdot \mid w_{n-1})$ is the resampling distribution given the weights $w_{n-1}$.
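A short worked derivation shows why the PIMH acceptance ratio only involves the likelihood estimates. It is a sketch under assumptions: the bootstrap filter with multinomial resampling is used, so that $r(b_{n-1}^k \mid w_{n-1}) = W_{n-1}^{b_{n-1}^k}$ and $W_n^{i} = g(y_n \mid x_n^{i}) / \sum_{j} g(y_n \mid x_n^{j})$; the symbols $\tilde{\pi}$ (extended target), $q$ (law of one SMC run followed by the draw of $k$ with probability $W_T^{k}$) and $b_n^k$ (index at time $n$ of the ancestor of particle $k$ at time $T$, with $b_T^k = k$) are notation introduced here for illustration.

```latex
\begin{align*}
q\bigl(k, x_{1:T}^{1:N}, a_{1:T-1}^{1:N}\bigr)
  &= W_T^{k}\, \psi\bigl(x_{1:T}^{1:N}, a_{1:T-1}^{1:N}\bigr), \\
W_T^{k}\, \mu\bigl(x_1^{b_1^k}\bigr) \prod_{n=2}^{T} W_{n-1}^{b_{n-1}^k}
  f\bigl(x_n^{b_n^k} \mid x_{n-1}^{b_{n-1}^k}\bigr)
  &= \mu\bigl(x_1^{b_1^k}\bigr) \prod_{n=2}^{T} f\bigl(x_n^{b_n^k} \mid x_{n-1}^{b_{n-1}^k}\bigr)
     \prod_{n=1}^{T} \frac{g\bigl(y_n \mid x_n^{b_n^k}\bigr)}{\sum_{j=1}^{N} g\bigl(y_n \mid x_n^{j}\bigr)} \\
  &= \frac{p\bigl(x_{1:T}^{k}, y_{1:T}\bigr)}{N^{T}\, \hat{p}(y_{1:T})}, \\
\frac{\tilde{\pi}(k, \ldots)}{q(k, \ldots)}
  &= \frac{1}{N^{T}}\, p\bigl(x_{1:T}^{k} \mid y_{1:T}\bigr)\,
     \frac{N^{T}\, \hat{p}(y_{1:T})}{p\bigl(x_{1:T}^{k}, y_{1:T}\bigr)}
   = \frac{\hat{p}(y_{1:T})}{p(y_{1:T})}.
\end{align*}
```

The independent MH acceptance ratio is the ratio of $\tilde{\pi}/q$ at the proposed and current states, so the unknown constant $p(y_{1:T})$ cancels and what remains is the ratio of the two likelihood estimates $\hat{p}(y_{1:T})$.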

Waste Recycling

The ‘standard’ estimate of $\int f(x_{1:T}) \, p(x_{1:T} \mid y_{1:T}) \, dx_{1:T}$ for L MCMC iterations is

$$\frac{1}{L} \sum_{i=1}^{L} f(X_{1:T}(i)).$$

We generate N particles at each iteration i of the MCMC algorithm to decide whether to accept or reject one single candidate. This seems terribly wasteful...

It is possible to use all the particles by considering the estimate

$$\frac{1}{L} \sum_{i=1}^{L} \left( \sum_{k=1}^{N} W_T^{(k)}(i) \, f(X_{1:T}^{(k)}(i)) \right).$$

We can even recycle the populations of rejected particles...
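The two estimators differ only in what is stored per iteration: one path, or the whole weighted population attached to the current state. A minimal sketch follows, where `standard_estimate`, `recycled_estimate` and the data layout (`populations[i]` holding the normalised weights and paths of iteration i) are assumptions made for the example, and `f` is the test function of the slide, not the transition density.

```python
def standard_estimate(chain, f):
    """(1/L) sum_i f(X_{1:T}(i)) over the L states of the chain."""
    return sum(f(path) for path in chain) / len(chain)


def recycled_estimate(populations, f):
    """(1/L) sum_i sum_k W_T^{(k)}(i) f(X_{1:T}^{(k)}(i)).

    populations[i] = (weights, paths) is the particle population attached to the
    state of the chain at iteration i; the weights are assumed to sum to one.
    """
    total = 0.0
    for weights, paths in populations:
        total += sum(w * f(path) for w, path in zip(weights, paths))
    return total / len(populations)


# Tiny hand-made example with L = 2 iterations and N = 2 particles of length T = 1.
populations = [
    ([0.5, 0.5], [[1.0], [3.0]]),
    ([1.0, 0.0], [[2.0], [5.0]]),
]
last_state = lambda path: path[-1]
print(recycled_estimate(populations, last_state))
```

When a proposal is rejected, the population of the previous iteration is simply repeated in `populations`, mirroring how the chain repeats its state.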

Extensions

If T is too large compared to the number N of particles, then the SMC approximation might be poor and result in an inefficient MH algorithm.

We can use a mixture/composition of PMH transition probabilities that update subblocks of the form $X_{a:b}$ for $1 \le a < b \le T$, effectively targeting conditional distributions of the type $p(x_{a:b} \mid x_{a-1}, x_{b+1}, y_{a:b})$.

Given that we know the exact structure of the extended target distribution, we can rejuvenate some of the particles conditional upon the others for computational purposes.

Nonlinear State-Space Model

Consider the following model

$$X_k = \frac{1}{2} X_{k-1} + 25 \frac{X_{k-1}}{1 + X_{k-1}^2} + 8 \cos(1.2 k) + V_k,$$

$$Y_k = \frac{X_k^2}{20} + W_k$$

where $V_k \sim N(0, \sigma_v^2)$, $W_k \sim N(0, \sigma_w^2)$ and $X_1 \sim N(0, 5^2)$.

We investigate the evolution of the expected acceptance ratio as a function of N in two scenarios.

Use the prior as proposal distribution!
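Forward simulation of this model takes only a few lines, which is all a bootstrap filter needs from the prior. The sketch below is illustrative: `simulate` is a hypothetical helper, and the noise levels passed in the example call are placeholder values, since the slide does not state which values of $\sigma_v$ and $\sigma_w$ define the two scenarios.

```python
import math
import random


def simulate(T, sigma_v, sigma_w, rng):
    """Simulate (X_1..X_T, Y_1..Y_T); list index j holds time k = j + 1."""
    xs = [rng.gauss(0.0, 5.0)]  # X_1 ~ N(0, 5^2)
    for k in range(2, T + 1):
        x_prev = xs[-1]
        mean = (0.5 * x_prev
                + 25.0 * x_prev / (1.0 + x_prev ** 2)
                + 8.0 * math.cos(1.2 * k))
        xs.append(mean + rng.gauss(0.0, sigma_v))  # add V_k ~ N(0, sigma_v^2)
    # Y_k = X_k^2 / 20 + W_k with W_k ~ N(0, sigma_w^2)
    ys = [x ** 2 / 20.0 + rng.gauss(0.0, sigma_w) for x in xs]
    return xs, ys


# Placeholder noise levels (assumed, not taken from the slides).
xs, ys = simulate(25, math.sqrt(10.0), 1.0, random.Random(0))
print(len(xs), len(ys))
```

Because the observation depends on $X_k$ only through $X_k^2$, the sign of the state is not identified by a single observation, which is part of what makes this model a standard stress test for filters.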

A More Realistic Problem

In numerous scenarios, $\mu(x)$, $f(x' \mid x)$ and $g(y \mid x)$ are not known exactly but depend on an unknown parameter θ and we write $\mu_\theta(x)$, $f_\theta(x' \mid x)$ and $g_\theta(y \mid x)$.

We set a prior $p(\theta)$ on θ and we are interested in

$$p(\theta, x_{1:T} \mid y_{1:T}) = \frac{p(x_{1:T}, y_{1:T} \mid \theta) \, p(\theta)}{p(y_{1:T})}$$

A Marginal Metropolis-Hastings Algorithm

To sample from $p(\theta, x_{1:T} \mid y_{1:T})$ one could suggest the ideal algorithm (which effectively integrates $x_{1:T}$ out).

At iteration i

Sample $\theta^* \sim q(\theta \mid \theta(i-1))$ and $x^*_{1:T} \sim p_{\theta^*}(x_{1:T} \mid y_{1:T})$.

W.p. $1 \wedge \dfrac{q(\theta(i-1) \mid \theta^*)}{q(\theta^* \mid \theta(i-1))} \, \dfrac{p(y_{1:T} \mid \theta^*) \, p(\theta^*)}{p(y_{1:T} \mid \theta(i-1)) \, p(\theta(i-1))}$ set $\theta(i) = \theta^*$ and $x_{1:T}(i) = x^*_{1:T}$, otherwise set $\theta(i) = \theta(i-1)$ and $x_{1:T}(i) = x_{1:T}(i-1)$.

Problems: sampling $x_{1:T}$ exactly and expression for $p(y_{1:T} \mid \theta) = \int p(x_{1:T}, y_{1:T} \mid \theta) \, dx_{1:T}$?

Particle Marginal MH Sampler

At iteration 1

Run an SMC algorithm to obtain $\hat{p}(y_{1:T} \mid \theta(1))$ and sample $x_{1:T}(1) \sim \hat{p}_{\theta(1)}(x_{1:T} \mid y_{1:T})$.

At iteration i; i ≥ 2

Sample $\theta^* \sim q(\theta \mid \theta(i-1))$, run an SMC algorithm to obtain $\hat{p}(y_{1:T} \mid \theta^*)$ and a sample $x^*_{1:T} \sim \hat{p}_{\theta^*}(x_{1:T} \mid y_{1:T})$.

W.p. $1 \wedge \dfrac{q(\theta(i-1) \mid \theta^*)}{q(\theta^* \mid \theta(i-1))} \, \dfrac{\hat{p}(y_{1:T} \mid \theta^*) \, p(\theta^*)}{\hat{p}(y_{1:T} \mid \theta(i-1)) \, p(\theta(i-1))}$ set $\theta(i) = \theta^*$ and $x_{1:T}(i) = x^*_{1:T}$, otherwise set $\theta(i) = \theta(i-1)$ and $x_{1:T}(i) = x_{1:T}(i-1)$.
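The PMMH iteration above can be sketched by replacing the intractable $p(y_{1:T} \mid \theta)$ in a standard MH step with the SMC estimate. Everything specific in this example is an assumption made for illustration: the toy AR(1) model with unknown coefficient θ, the uniform prior on (-1, 1), and the Gaussian random-walk proposal, which is symmetric so that the ratio of the $q$ terms equals one and drops out.

```python
import math
import random


def normal_pdf(x, mean, sd):
    return math.exp(-0.5 * ((x - mean) / sd) ** 2) / (sd * math.sqrt(2.0 * math.pi))


def smc(theta, ys, n, rng):
    """Bootstrap filter for the toy model X_1 ~ N(0, 1), X_k = theta X_{k-1} + V_k,
    Y_k = X_k + W_k (V_k, W_k standard normal).
    Returns a path drawn from p_hat_theta(x | y) and log p_hat(y | theta)."""
    paths = [[rng.gauss(0.0, 1.0)] for _ in range(n)]
    log_lik = 0.0
    for k, y in enumerate(ys):
        if k > 0:
            anc = rng.choices(range(n), weights=w, k=n)
            paths = [paths[a] + [rng.gauss(theta * paths[a][-1], 1.0)] for a in anc]
        w = [normal_pdf(y, p[-1], 1.0) for p in paths]
        log_lik += math.log(sum(w) / n)
    return rng.choices(paths, weights=w, k=1)[0], log_lik


def log_prior(theta):
    # Uniform prior on (-1, 1), up to an additive constant.
    return 0.0 if -1.0 < theta < 1.0 else -math.inf


def pmmh(ys, theta0, n, n_iter, step, rng):
    theta = theta0
    path, log_lik = smc(theta, ys, n, rng)
    chain = [(theta, path)]
    for _ in range(n_iter - 1):
        theta_new = rng.gauss(theta, step)  # symmetric proposal: q ratio is 1
        if log_prior(theta_new) > -math.inf:  # zero prior mass means rejection
            path_new, log_lik_new = smc(theta_new, ys, n, rng)
            log_ratio = (log_lik_new + log_prior(theta_new)) - (log_lik + log_prior(theta))
            if math.log(1.0 - rng.random()) < log_ratio:
                theta, path, log_lik = theta_new, path_new, log_lik_new
        chain.append((theta, path))
    return chain


ys = [0.4, 0.9, 0.2, -0.5, -1.1, -0.3, 0.6, 1.2, 0.7, 0.1]
chain = pmmh(ys, 0.0, 50, 300, 0.3, random.Random(0))
print(sum(theta for theta, _ in chain) / len(chain))  # posterior mean estimate of theta
```

As in PIMH, the likelihood estimate of the current state is stored and reused rather than recomputed; re-estimating it at every iteration would change the algorithm.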

More in CA, AD & RH...

Identifying the structure of the underlying target distribution allows us to define a ‘Particle Gibbs sampler’: this requires one to use a ‘conditional SMC update’.

More generally, sampling is not constrained to the PIMH or the PMMH updates.

Application to Lévy-driven stochastic volatility, for which sampling from the prior is the only known possibility.

Extensions

This methodology is by no means limited to state-space models and in particular there is no need for any Markovian assumption.

Wherever SMC has been used, PMCMC can be used: self-avoiding random walks, contingency tables, mixture models, SMC samplers etc.

Sophisticated SMC algorithms can also be used including ‘clever’ importance distributions and resampling schemes.

Theoretical results of (Andrieu & Roberts, Ann. Stats, to appear) can be used in this setup.

Discussion

PMCMC allows us to design ‘good’ high dimensional proposals based only on low dimensional proposals,

PMCMC provides a simple way to perform inference for models for which only forward simulation is possible,

PMCMC is computationally expensive but can be combined with standard MCMC steps,

Connections to the EAV (Expected Auxiliary Variable) approach for simulations,

Extension to ‘dependent’ proposals in the spirit of MTM (Liu et al., JASA 2000) is feasible.