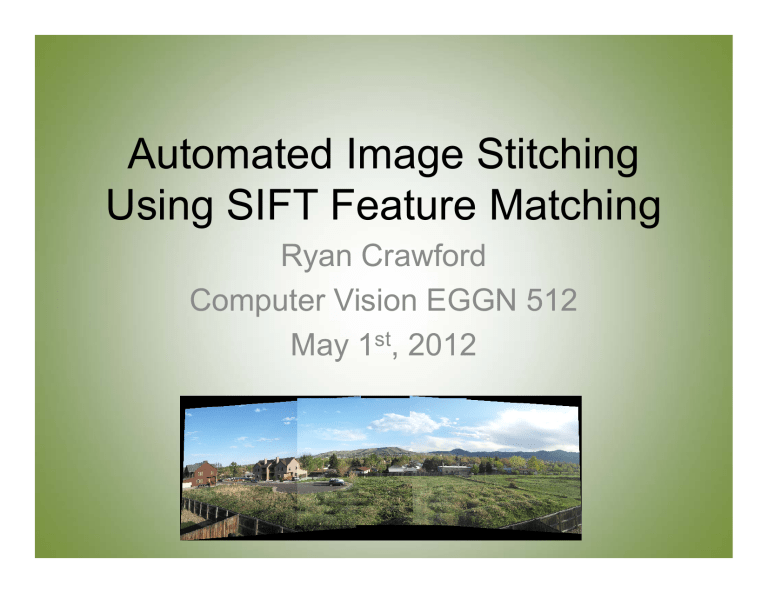


Automated Image Stitching

Using SIFT Feature Matching

Ryan Crawford

Computer Vision EGGN 512

May 1st, 2012

Outline

• Introduction

• Finding & Matching Features

• Verifying Matches

• Compositing Images

• Results

• Errors & Limitations

• Reducing Seams

• Conclusion

Introduction

• Image stitching (or “mosaicking”) is one of the oldest problems in computer vision

• Goal: Take several overlapping pictures of a scene and then let the computer determine the best way to combine them into a single image

Applications

• Image panoramas

• Wide area aerial surveillance (WAAS) imagery [1]

• Satellite imagery

• Radiology

• Microscopy

• Cell phone applications

[1] J. Prokaj and G. Medioni, “Accurate Efficient Mosaicking for Wide Area Aerial Surveillance”, University of Southern California Computer Vision, Los Angeles, CA, WACV 2012, pp. 273-280.

Process

1. Collect a set of overlapping images

2. Choose a reference image

3. For each image “x”:

   1. Find feature matches between the reference and x

   2. Determine the transform from x to the reference

   3. Transform x and place both images on a composite surface

   4. The composite becomes the new reference

4. Run aesthetic algorithms (reduce seams, adjust for lighting, etc.)
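The process above can be sketched as a Python loop. The stage functions (`find_matches`, `estimate_transform`, `warp_and_composite`, `postprocess`) are hypothetical placeholders passed in as arguments, not the author's code. The sketch only shows the control flow, in particular that the composite replaces the reference after every image.

```python
from typing import Any, Callable, Sequence


def stitch_sequence(
    images: Sequence[Any],
    ref_index: int,
    find_matches: Callable[[Any, Any], Any],
    estimate_transform: Callable[[Any], Any],
    warp_and_composite: Callable[[Any, Any, Any], Any],
    postprocess: Callable[[Any], Any] = lambda img: img,
) -> Any:
    """Incremental mosaicking: each image is registered to the current
    composite, and the result becomes the new reference."""
    reference = images[ref_index]                      # step 2: reference image
    for i, x in enumerate(images):                     # step 3: every other image
        if i == ref_index:
            continue
        matches = find_matches(reference, x)           # 3.1: feature matches
        transform = estimate_transform(matches)        # 3.2: transform x -> reference
        reference = warp_and_composite(reference, x, transform)  # 3.3 and 3.4
    return postprocess(reference)                      # step 4: seams, lighting, ...
```

With toy stand-ins (strings for images, concatenation for compositing), `stitch_sequence(["A", "B", "C"], 1, ...)` returns `"BAC"`: image B is the reference and A, then C, are added to the growing composite in order.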

SIFT

• Scale Invariant Feature Transform

• Find and match features between two images

• Works very well even under rotation and scaling changes between images

• Approach [2]:

– Create a scale space of images: progressively blur images with a Gaussian

– Take the differences between adjacent blurred images (difference of Gaussians, DoG)

– Find the local extrema in this scale space
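The first three steps (Gaussian scale space, DoG, extrema) can be illustrated with a simplified, single-octave sketch in pure Python. The defaults (`sigma0 = 1.6`, a factor of √2 between levels, the `threshold` on DoG magnitude) are illustrative assumptions. Full SIFT, including the VLFeat implementation, also uses multiple octaves, subpixel refinement and edge-response rejection, all of which are omitted here.

```python
import math


def gaussian_kernel(sigma):
    """Normalized 1-D Gaussian kernel truncated at about 3 sigma."""
    radius = max(1, math.ceil(3 * sigma))
    k = [math.exp(-(i * i) / (2 * sigma * sigma)) for i in range(-radius, radius + 1)]
    total = sum(k)
    return [v / total for v in k]


def blur(img, sigma):
    """Separable Gaussian blur of a 2-D list, clamping indices at the borders."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    h, w = len(img), len(img[0])
    rows = [[sum(k[j + r] * img[y][min(max(x + j, 0), w - 1)] for j in range(-r, r + 1))
             for x in range(w)] for y in range(h)]
    return [[sum(k[j + r] * rows[min(max(y + j, 0), h - 1)][x] for j in range(-r, r + 1))
             for x in range(w)] for y in range(h)]


def dog_extrema(img, sigma0=1.6, levels=5, threshold=0.01):
    """Return (x, y, dog_level) for pixels larger or smaller than all 26
    neighbours in the difference-of-Gaussians stack."""
    k = math.sqrt(2)
    gauss = [blur(img, sigma0 * k ** i) for i in range(levels)]   # scale space
    dog = [[[b_px - a_px for a_px, b_px in zip(a_row, b_row)]      # adjacent differences
            for a_row, b_row in zip(a, b)] for a, b in zip(gauss, gauss[1:])]
    h, w = len(img), len(img[0])
    found = []
    for s in range(1, len(dog) - 1):
        for y in range(1, h - 1):
            for x in range(1, w - 1):
                v = dog[s][y][x]
                if abs(v) < threshold:          # weak response: not a keypoint
                    continue
                neighbours = [dog[s + ds][y + dy][x + dx]
                              for ds in (-1, 0, 1) for dy in (-1, 0, 1) for dx in (-1, 0, 1)
                              if (ds, dy, dx) != (0, 0, 0)]
                if v > max(neighbours) or v < min(neighbours):
                    found.append((x, y, s))
    return found
```

For a Gaussian blob of standard deviation about 2.7 pixels centred in a 41×41 image, the centre pixel is reported as a minimum in DoG level 1: the blob is detected at the level whose blur best matches its size.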

SIFT cont.

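– Choose keypoints from these extrema, discarding weak responses that fall below a specified threshold

– For each keypoint, take a 16x16 window, split it into 4x4 cells, and compute an 8-bin histogram of gradient directions in each cell

– Concatenate these histograms into a feature vector (4 × 4 × 8 = 128 dimensions)

– Implemented with the VLFeat SIFT code for Matlab [3]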

[2] Lowe, David G. "Distinctive Image Features from Scale-Invariant Keypoints." International Journal of Computer Vision 60.2 (2004): 91-110. Print.
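The descriptor layout (a 16x16 window, 4x4 cells, 8 orientation bins) can be shown with a simplified Python sketch. It omits the Gaussian weighting, trilinear interpolation, rotation to the keypoint's dominant orientation and the clipping step of Lowe's full descriptor. The function name `sift_like_descriptor` is hypothetical.

```python
import math


def sift_like_descriptor(patch):
    """128-D descriptor from a 16x16 patch: 4x4 cells x 8 orientation bins.

    Simplified: no Gaussian weighting, no interpolation between bins,
    no rotation to the dominant orientation, no clipping/renormalization.
    """
    assert len(patch) == 16 and all(len(row) == 16 for row in patch)
    hist = [0.0] * 128
    bin_width = 2 * math.pi / 8
    for y in range(16):
        for x in range(16):
            # Central differences, clamped at the patch border.
            dx = patch[y][min(x + 1, 15)] - patch[y][max(x - 1, 0)]
            dy = patch[min(y + 1, 15)][x] - patch[max(y - 1, 0)][x]
            mag = math.hypot(dx, dy)
            if mag == 0:
                continue
            angle = math.atan2(dy, dx) % (2 * math.pi)   # direction in [0, 2*pi)
            o = int(angle / bin_width) % 8                # orientation bin 0..7
            cell = (y // 4) * 4 + (x // 4)                # which of the 16 cells
            hist[cell * 8 + o] += mag                     # magnitude-weighted vote
    norm = math.sqrt(sum(v * v for v in hist))
    return [v / norm for v in hist] if norm > 0 else hist
```

For a horizontal intensity ramp every gradient points along +x, so only the first orientation bin of each cell is non-zero and the unit-length result still has 128 entries.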

Matching Detected Features

• Use vl_sift to find features in each image

– The number of features detected can be limited with threshold parameters

• Use vl_ubcmatch to match features between two images

– Candidate matches are found by comparing the Euclidean distances between keypoint feature vectors; a match is kept only if the nearest descriptor is sufficiently closer than the second nearest

[3] Vedaldi, A., and B. Fulkerson. "VLFeat: An Open and Portable Library of Computer Vision Algorithms." 2008. Web. 1 Apr. 2012. <http://www.vlfeat.org>.
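The nearest/second-nearest test can be sketched in Python. This is Lowe's ratio test with his suggested ratio of 0.8. VLFeat's `vl_ubcmatch` applies a test of this kind controlled by its threshold argument, but the distance and threshold convention below is an assumption, not a copy of `vl_ubcmatch`.

```python
import math


def match_descriptors(desc1, desc2, ratio=0.8):
    """Nearest-neighbour matching with a nearest/second-nearest ratio test.

    Returns (i, j, distance) for each descriptor in desc1 whose nearest
    neighbour desc2[j] is clearly closer than its second-nearest one.
    """
    matches = []
    if len(desc2) < 2:
        return matches
    for i, d1 in enumerate(desc1):
        # Euclidean distance to every candidate, closest first.
        dists = sorted((math.dist(d1, d2), j) for j, d2 in enumerate(desc2))
        (best, j), (second, _) = dists[0], dists[1]
        if best < ratio * second:      # ambiguous matches are rejected
            matches.append((i, j, best))
    return matches
```

In a toy case where one query descriptor is equally close to two candidates, it is dropped, which is also why repeated similar features yield few matches.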

Example

Image 1: 4709 features detected; image 2: 8485 features detected

Example

324 matches detected, including some outliers

Verifying Matches

• Used a coarse Hough space method [4]

– 4-dimensional space: x, y, scale, θ

– Each match “votes” for a pose

– The bin with the most votes gives the most likely transformation between image 1 and image 2

– Used an affine transformation (rotation, scaling, translation), which can approximate small out-of-plane rotations

[4] Hoff, William. "SIFT-Based Object Recognition." Colorado School of Mines, Golden, Colorado. 15 Apr. 2012. Lecture.
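The voting step can be sketched in Python. Each match predicts a similarity pose (translation, scale ratio, rotation) and votes for one 4-D bin. The 30° orientation bins and the factor-of-2 scale bins follow Lowe (2004). The 100-pixel location bin is an assumption; Lowe ties it to the model image size. Lowe also votes into the two nearest bins in every dimension to soften boundary effects, which is omitted here.

```python
import math
from collections import defaultdict


def hough_vote(matches, loc_bin=100.0, ori_bin=math.radians(30)):
    """Coarse 4-D Hough voting over (tx, ty, log2 scale, rotation).

    matches: list of ((x1, y1, s1, th1), (x2, y2, s2, th2)) keypoint pairs.
    Returns the indices of the matches that fall in the most-voted bin.
    """
    votes = defaultdict(list)
    for idx, ((x1, y1, s1, th1), (x2, y2, s2, th2)) in enumerate(matches):
        scale = s2 / s1
        rot = (th2 - th1) % (2 * math.pi)
        c, s = math.cos(rot), math.sin(rot)
        # Translation of the similarity transform mapping keypoint 1 onto keypoint 2.
        tx = x2 - scale * (c * x1 - s * y1)
        ty = y2 - scale * (s * x1 + c * y1)
        key = (math.floor(tx / loc_bin), math.floor(ty / loc_bin),
               round(math.log2(scale)), math.floor(rot / ori_bin))
        votes[key].append(idx)
    return max(votes.values(), key=len) if votes else []
```

Three matches consistent with a shift of (+130, +40) share one bin, while a fourth match with a different rotation lands elsewhere and is treated as an outlier.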

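Derived affine transformation (Matlab printed it with a common scale factor of 1.0e+003; the entries below are multiplied out, to the displayed precision):

$$
A \approx \begin{bmatrix} 1.0 & -0.0 & -2175.0 \\ -0.1 & 1.0 & 263.6 \\ 0 & 0 & 1 \end{bmatrix}
$$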

The largest bin contains 223 matches

Matches outside this bin are discarded as outliers
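The slide does not say how the affine matrix was computed from the matches in the winning bin. One common approach, used in Lowe's method, is a least-squares fit over those correspondences. The sketch below assumes that approach. `fit_affine` and `solve3` are hypothetical helper names, and the normal equations are solved directly for brevity.

```python
def solve3(m, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    a = [row[:] + [rhs] for row, rhs in zip(m, b)]    # augmented copy
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, 3):
            f = a[r][col] / a[col][col]
            for c in range(col, 4):
                a[r][c] -= f * a[col][c]
    x = [0.0] * 3
    for r in (2, 1, 0):                                # back substitution
        x[r] = (a[r][3] - sum(a[r][c] * x[c] for c in range(r + 1, 3))) / a[r][r]
    return x


def fit_affine(src, dst):
    """Least-squares affine fit of dst ~ A [x, y, 1]^T from >= 3 point pairs.

    Returns the 3x3 homogeneous matrix [[a, b, c], [d, e, f], [0, 0, 1]].
    """
    ata = [[0.0] * 3 for _ in range(3)]   # sum of row^T row
    atu = [0.0] * 3                       # right-hand side for the x' equation
    atv = [0.0] * 3                       # right-hand side for the y' equation
    for (x, y), (u, v) in zip(src, dst):
        row = (x, y, 1.0)
        for i in range(3):
            atu[i] += row[i] * u
            atv[i] += row[i] * v
            for j in range(3):
                ata[i][j] += row[i] * row[j]
    return [solve3(ata, atu), solve3(ata, atv), [0.0, 0.0, 1.0]]
```

Given exact correspondences generated from a known affine matrix, the fit recovers that matrix. With noisy inliers it returns the least-squares compromise.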

Alternate Methods

• Other matching methods include:

– RANSAC

• Less suitable when there are many outliers: the number of random samples needed grows rapidly

– Correlation-Based

• Computationally expensive

– Minimum variance of intensity estimate [5]

• Uses a reference coordinate system rather than a reference image; robust to lens distortion

[5] Sawhney, H.S., and R. Kumar. "True multi-image alignment and its application to mosaicing and lens distortion correction." IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 21, no. 3, pp. 235-243, Mar. 1999.
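The RANSAC cost can be made concrete with the standard iteration-count formula. To reach confidence $p$ that at least one random sample of $s$ matches is outlier-free, when a fraction $w$ of matches are inliers, RANSAC needs $N = \lceil \log(1-p) / \log(1-w^s) \rceil$ samples. The sketch assumes $s = 3$, the minimum number of correspondences for an affine transform.

```python
import math


def ransac_iterations(inlier_ratio, sample_size, confidence=0.99):
    """Samples needed so that, with probability `confidence`, at least one
    random sample of `sample_size` matches contains only inliers."""
    p_good_sample = inlier_ratio ** sample_size
    if p_good_sample <= 0.0:
        raise ValueError("inlier_ratio must be positive")
    if p_good_sample >= 1.0:
        return 1
    return math.ceil(math.log(1 - confidence) / math.log(1 - p_good_sample))
```

With half of the matches being inliers, 35 samples suffice. With only 20% inliers, 574 are needed, which illustrates why RANSAC becomes expensive as the outlier fraction grows.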

Compositing Images

• Once we have the transformation from the first image to the reference image, we need to place the images on a composite surface

• I used a planar surface

– Affine transformation

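• Used Matlab’s imtransform function

– Can optionally return “xdata” and “ydata”

– Two-element vectors giving the x and y coordinates of the first and last columns and rows of the output image, i.e. its offset (positive or negative) from the reference origin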
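The output extent can be sketched by mapping the four image corners through the affine matrix and taking their bounding box. This approximates what imtransform reports through xdata and ydata. The pixel-centre convention (1..width, 1..height, as in Matlab) and the name `warped_extent` are assumptions for illustration.

```python
def warped_extent(affine, width, height):
    """Bounding box of a width x height image after a 3x3 affine transform.

    Returns ((x_min, x_max), (y_min, y_max)) in output coordinates, playing
    the role of imtransform's xdata/ydata. Pixel centres are assumed to lie
    at 1..width and 1..height (Matlab convention).
    """
    corners = [(1, 1), (width, 1), (1, height), (width, height)]
    xs, ys = [], []
    for x, y in corners:
        xs.append(affine[0][0] * x + affine[0][1] * y + affine[0][2])
        ys.append(affine[1][0] * x + affine[1][1] * y + affine[1][2])
    return (min(xs), max(xs)), (min(ys), max(ys))
```

For a pure translation by (-100, +50), a 640×480 image spans x from -99 to 540 and y from 51 to 530. The negative x_min is the kind of offset that decides where the image goes on the canvas.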

Compositing Images Cont.

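• 4 possible placements of the transformed image relative to the reference: upper left (UL), lower left (LL), lower right (LR), upper right (UR) [6]

• Create a blank canvas for each image that is big enough to hold both the reference and the transformed image

• Position each image on its canvas based on xdata and ydata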

• Use Matlab’s imsubtract and imadd functions to combine the two canvases

[6] Michael Carroll and Andrew Davidson. “Image Stitching with MatLab”. University of Louisiana State University. Department of Electrical and Computer Engineering.
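The canvas step can be sketched in plain Python. The deck combines the canvases with imsubtract and imadd. The sketch below is a stand-in rather than a translation of that Matlab code: overlapping pixels simply keep the reference value, and `empty` (0 by default) marks pixels with no data. Integer (rounded) offsets are assumed.

```python
def composite(ref, ref_origin, warped, warped_origin, empty=0):
    """Paste two images onto one canvas large enough to hold both.

    ref_origin / warped_origin: integer (x, y) of each image's top-left
    pixel in shared output coordinates (e.g. rounded xdata(1), ydata(1)).
    Overlapping pixels keep the reference value.
    """
    def extent(img, origin):
        x0, y0 = origin
        return x0, y0, x0 + len(img[0]), y0 + len(img)

    boxes = [extent(ref, ref_origin), extent(warped, warped_origin)]
    # Taking min/max covers all four placements (UL, LL, LR, UR) without branching.
    x_min = min(b[0] for b in boxes)
    y_min = min(b[1] for b in boxes)
    x_max = max(b[2] for b in boxes)
    y_max = max(b[3] for b in boxes)
    canvas = [[empty] * (x_max - x_min) for _ in range(y_max - y_min)]

    for img, (x0, y0), overwrite in ((ref, ref_origin, True), (warped, warped_origin, False)):
        for r, row in enumerate(img):
            for c, value in enumerate(row):
                cy, cx = y0 - y_min + r, x0 - x_min + c
                if value != empty and (overwrite or canvas[cy][cx] == empty):
                    canvas[cy][cx] = value
    return canvas
```

Because the canvas bounds come from the union of both boxes, the same code handles a transformed image that lands above, below, left or right of the reference. A real implementation would need a separate validity mask so that genuinely black pixels are not treated as empty.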

Results

Purely in-plane rotation

Errors & Limitations

• Mis-registration can cause blurring

• Repeated similar features can throw off the matching algorithm

• Moving objects or people can cause “ghosting”

• Used a planar compositing surface

– A cylindrical surface would work better for large out-of-plane camera rotations [7]

[7] Szeliski, Richard. "Image Stitching." Computer Vision: Algorithms and Applications. London: Springer, 2011. 375-406. Print.
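A cylindrical surface maps each pixel through the standard cylindrical projection (as described, for example, in Szeliski [7]). With image coordinates $(x, y)$ measured from the image centre, focal length $f$ in pixels and output scale $s$ (commonly $s = f$): $x' = s \tan^{-1}(x/f)$ and $y' = s\,y / \sqrt{x^2 + f^2}$. The sketch below only maps coordinates. Warping a whole image would apply the inverse mapping per output pixel and resample.

```python
import math


def to_cylindrical(x, y, f, s=None):
    """Map image coordinates (relative to the image centre) onto a cylinder.

    f: focal length in pixels; s: output scale, defaulting to f.
    x' = s * atan(x / f),  y' = s * y / sqrt(x**2 + f**2)
    """
    if s is None:
        s = f
    theta = math.atan2(x, f)          # equals atan(x / f) for f > 0
    h = y / math.hypot(x, f)
    return s * theta, s * h
```

The image centre stays at the origin, and a point at x = f (45° off-axis) maps to an arc length of f·π/4. On this surface, a pure pan of the camera becomes a horizontal shift.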

Repeated Similar Features

The windows on the buildings are all very similar, so it is hard to match features reliably in this image

Ghosting

The person shown appears in one frame but not in the other, resulting in a “ghost”

Other Examples

Reducing Seams

• There are several ways to reduce the visible seams in mosaics [7]:

– Feathering (weighted average)

– Laplacian Blending

– Gradient-Domain Image Stitching

– Regions of Differences (ROD)

• I tried averaging and Laplacian blending but was unable to reduce the visible seams
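Feathering can be illustrated with a one-dimensional sketch. Each pixel is weighted by its distance to the nearer end of its own image, so contributions fade out toward image borders and the blend ramps smoothly across the overlap. In 2-D the weight is usually the distance to the nearest invalid pixel (a distance transform). This 1-D version and the name `feather_rows` are simplifications for illustration, not the method tried in the deck.

```python
def feather_rows(segments):
    """Feathered (distance-weighted) blend of overlapping 1-D rows.

    segments: list of (start_x, values). A pixel's weight is its distance
    to the nearer end of its own row, so border pixels contribute little.
    Returns one blended value per x position (None where nothing covers it).
    """
    x_min = min(start for start, _ in segments)
    x_max = max(start + len(vals) for start, vals in segments)
    num = [0.0] * (x_max - x_min)
    den = [0.0] * (x_max - x_min)
    for start, vals in segments:
        n = len(vals)
        for i, v in enumerate(vals):
            w = min(i + 1, n - i)            # 1 at the ends, larger in the middle
            num[start - x_min + i] += w * v
            den[start - x_min + i] += w
    return [total / weight if weight > 0 else None for total, weight in zip(num, den)]
```

Blending a dark row (all 0) with a bright row (all 100) that overlaps it by two pixels gives 0, 0, 33.3, 66.7, 100, 100: a gradual transition instead of a hard seam. Feathering cannot fix misregistration, so ghosting from moving objects remains.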

Conclusion

• My algorithm works well as long as the out-of-plane rotation is not too large

• Similar repeated features caused the matching to fail

• Ghosting occurs when there are moving objects

• Future work: try other matching algorithms, implement seam reduction, use a cylindrical composite surface, and stitch together 10+ images

QUESTIONS?

References

[1] Prokaj, J., and G. Medioni. "Accurate Efficient Mosaicking for Wide Area Aerial Surveillance." University of Southern California Computer Vision, Los Angeles, CA. WACV, 2012, pp. 273-280. Print.

[2] Lowe, David G. "Distinctive Image Features from Scale-Invariant Keypoints." International Journal of Computer Vision 60.2, 2004, pp. 91-110. Print.

[3] Vedaldi, A., and B. Fulkerson. "VLFeat: An Open and Portable Library of Computer Vision Algorithms." 2008. Web. 1 Apr. 2012. <http://www.vlfeat.org>.

[4] Hoff, William. "SIFT-Based Object Recognition." Colorado School of Mines, Golden, Colorado. 15 Apr. 2012. Lecture.

[5] Sawhney, H.S., and R. Kumar. "True Multi-Image Alignment and Its Application to Mosaicing and Lens Distortion Correction." IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 21, no. 3, Mar. 1999, pp. 235-243. Print.

[6] Carroll, Michael, and Andrew Davidson. "Image Stitching with MatLab." University of Louisiana State University, Department of Electrical and Computer Engineering.

[7] Szeliski, Richard. "Image Stitching." Computer Vision: Algorithms and Applications. London: Springer, 2011, pp. 375-406. Print.