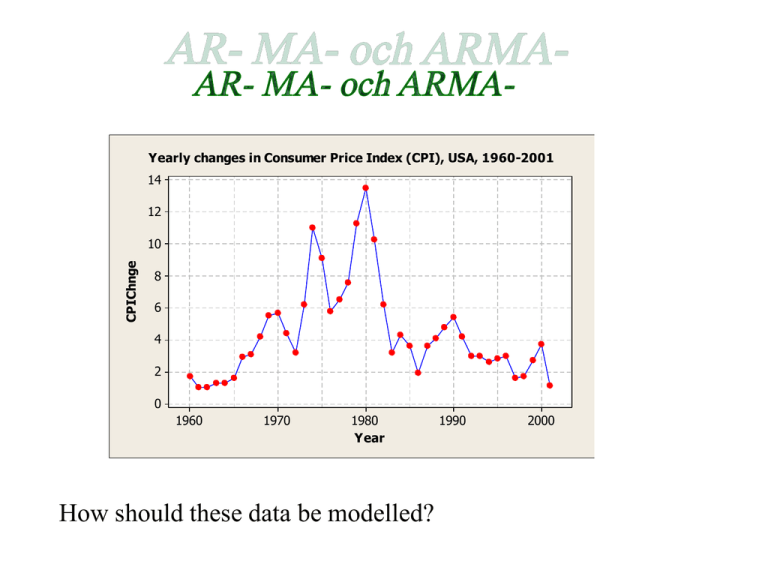

How should these data be modelled?

[Figure: Yearly changes in Consumer Price Index (CPI), USA, 1960-2001. Time series plot of CPIChnge (vertical axis) against Year (horizontal axis).]

Identification step: Look at the SAC and SPAC

[Figure: Autocorrelation Function for CPIChnge (with 5% significance limits for the autocorrelations); Autocorrelation against Lag.]

[Figure: Partial Autocorrelation Function for CPIChnge (with 5% significance limits for the partial autocorrelations); Partial Autocorrelation against Lag.]

Looks like an AR(1) process. (The spikes are clearly decreasing in the SAC, and there is maybe only one significant spike in the SPAC.)
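As a rough illustration of the identification step, the sample autocorrelations (SAC) and sample partial autocorrelations (SPAC) can be computed by hand. The sketch below is not MINITAB's implementation: the function names `sample_acf` and `sample_pacf` are hypothetical, and the partial autocorrelations are obtained with the Durbin-Levinson recursion, which is one common choice.

```python
def sample_acf(y, max_lag):
    """Sample autocorrelations r_1, ..., r_max_lag of the series y."""
    n = len(y)
    mean = sum(y) / n
    dev = [v - mean for v in y]
    c0 = sum(d * d for d in dev)  # lag-0 sum of squares
    return [
        sum(dev[t] * dev[t + k] for t in range(n - k)) / c0
        for k in range(1, max_lag + 1)
    ]


def sample_pacf(r):
    """Partial autocorrelations from r = [r_1, r_2, ...] (Durbin-Levinson)."""
    pacf = []
    prev = []  # coefficients phi_{k-1,1}, ..., phi_{k-1,k-1}
    for k in range(1, len(r) + 1):
        num = r[k - 1] - sum(prev[j] * r[k - 2 - j] for j in range(k - 1))
        den = 1.0 - sum(prev[j] * r[j] for j in range(k - 1))
        phi_kk = num / den
        # Update the lower-order coefficients, then append phi_kk itself.
        prev = [prev[j] - phi_kk * prev[k - 2 - j] for j in range(k - 1)] + [phi_kk]
        pacf.append(phi_kk)
    return pacf


# Toy series (illustrative only, not the CPI data).
toy = [1.0, 2.0, 3.0, 4.0, 5.0]
r = sample_acf(toy, 2)
p = sample_pacf(r)
```

For an AR(1)-like series one would expect the values in `r` to die out gradually while only the first entry of `p` is clearly different from zero.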

Then we should try to fit the model

$$y_t = \delta + \phi\, y_{t-1} + a_t$$

The parameters to be estimated are $\delta$ and $\phi$.

One possibility might be to use Least-Squares estimation (as in ordinary regression analysis).

This is not so wise, as both the response and the explanatory variable are randomly varying.

Maximum Likelihood is better. A so-called Conditional Least-Squares method can also be derived.

Use MINITAB's ARIMA procedure!
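To make the idea of conditional least squares concrete for an AR(1) model with constant, here is a minimal sketch. It assumes the first observation is treated as fixed, so that the criterion is the sum of squared one-step errors from the second observation onward. The function name `cls_ar1` is hypothetical, and MINITAB's own algorithm (iterative, with backforecasting) will generally give somewhat different numbers.

```python
def cls_ar1(y):
    """Conditional least squares for an AR(1) model with constant.

    Minimises the sum over t = 2..n of (y_t - delta - phi * y_{t-1})^2,
    treating y_1 as given. Returns (delta, phi, sse).
    """
    x = y[:-1]  # lagged values y_{t-1}
    z = y[1:]   # current values y_t
    m = len(x)
    xbar = sum(x) / m
    zbar = sum(z) / m
    sxx = sum((a - xbar) ** 2 for a in x)
    sxz = sum((a - xbar) * (b - zbar) for a, b in zip(x, z))
    phi = sxz / sxx
    delta = zbar - phi * xbar
    sse = sum((b - delta - phi * a) ** 2 for a, b in zip(x, z))
    return delta, phi, sse


# Noise-free toy series generated by y_t = 1 + 0.5 * y_{t-1} (illustrative only).
toy = [0.0, 1.0, 1.5, 1.75, 1.875]
delta_hat, phi_hat, sse = cls_ar1(toy)
```

On the noise-free toy series the sketch recovers the generating values (constant 1, coefficient 0.5) with an SSE of essentially zero; on real data the estimates carry sampling error.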

MINITAB session commands for fitting the AR(1) model (order 1 0 0). We can always ask for forecasts (here 2 periods ahead):

    MTB > ARIMA 1 0 0 'CPIChnge';
    SUBC>   Constant;
    SUBC>   Forecast 2 ;
    SUBC>   GSeries;
    SUBC>   GACF;
    SUBC>   GPACF;
    SUBC>   Brief 2.

ARIMA Model: CPIChnge

Estimates at each iteration

    Iteration      SSE    Parameters
            0  316.054  0.100  4.048
            1  245.915  0.250  3.358
            2  191.627  0.400  2.669
            3  153.195  0.550  1.980
            4  130.623  0.700  1.292
            5  123.976  0.820  0.739
            6  123.786  0.833  0.645
            7  123.779  0.836  0.626
            8  123.778  0.837  0.622
            9  123.778  0.837  0.621

Relative change in each estimate less than 0.0010

Final Estimates of Parameters

    Type        Coef  SE Coef     T      P
    AR   1    0.8369   0.0916  9.13  0.000
    Constant  0.6211   0.2761  2.25  0.030
    Mean      3.809    1.693

    Number of observations:  42
    Residuals:    SS = 122.845 (backforecasts excluded)
                  MS = 3.071  DF = 40
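The quantities in the AR(1) output are linked by simple arithmetic, which can be checked as a sanity test. The sketch below assumes the usual relations: T is the coefficient divided by its standard error, the mean of a stationary AR(1) process is the constant divided by one minus the AR coefficient, and MS is SS divided by DF. The dictionary keys are hypothetical names chosen for this example.

```python
# Values copied from the AR(1) output above.
ar1 = {
    "phi": 0.8369, "se_phi": 0.0916,
    "constant": 0.6211, "se_constant": 0.2761,
    "ss": 122.845, "df": 40,
}

t_phi = ar1["phi"] / ar1["se_phi"]                  # T for the AR coefficient
t_constant = ar1["constant"] / ar1["se_constant"]   # T for the constant
mean = ar1["constant"] / (1.0 - ar1["phi"])         # process mean
ms = ar1["ss"] / ar1["df"]                          # residual mean square

print(round(t_phi, 2), round(t_constant, 2), round(mean, 3), round(ms, 3))
```

The results agree with the reported T, Mean and MS values up to the rounding of the printed coefficients.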

[Figure: Time Series Plot for CPIChnge (with forecasts and their 95% confidence limits); CPIChnge against Time.]

[Figure: ACF of Residuals for CPIChnge (with 5% significance limits for the autocorrelations); Autocorrelation against Lag.]

[Figure: PACF of Residuals for CPIChnge (with 5% significance limits for the partial autocorrelations); Partial Autocorrelation against Lag.]

All spikes should be within the red limits here, i.e. no correlation should be left in the residuals!

Modified Box-Pierce (Ljung-Box) Chi-Square statistic

    Lag            12     24     36     48
    Chi-Square   26.0   35.3   39.8      *
    DF             10     22     34      *
    P-Value     0.004  0.036  0.227      *

Forecasts from period 42

                          95% Limits
    Period  Forecast     Lower    Upper  Actual
    43       1.54176  -1.89376  4.97727
    44       1.91148  -2.56850  6.39146

Ljung-Box statistic:

$$Q^*(K) = (n-d)\,(n-d+2)\sum_{l=1}^{K}\frac{r_l^2(\hat{a})}{n-d-l}$$

where

n is the sample size

d is the degree of non-seasonal differencing used to transform the original series to be stationary. Non-seasonal means taking differences between observations at nearby lags.

$r_l(\hat{a})$ is the sample autocorrelation at lag l for the residuals of the estimated model, and $r_l^2(\hat{a})$ is its square.

K is a number of lags covering multiples of seasonal cycles, e.g. 12, 24, 36, … for monthly data

Under the assumption of no correlation left in the residuals, the Ljung-Box statistic is approximately chi-square distributed with $K - n_C$ degrees of freedom, where $n_C$ is the number of estimated parameters in the model except for the constant. (Note that the DF column in the MINITAB output for the AR(1) fit shows 10 at K = 12, so there the constant is counted as well.)

A low P-value for any K should be taken as evidence of correlated residuals, and thus the estimated model must be revised.
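A minimal sketch of how the Ljung-Box statistic could be computed from a list of residuals follows. It assumes that the number of residuals equals n - d (the length of the series after differencing) and that the residual autocorrelations are computed around the residual mean. The function name `ljung_box` is hypothetical, and the result is not guaranteed to match MINITAB's output digit for digit.

```python
def ljung_box(residuals, K):
    """Ljung-Box statistic Q*(K) for a list of model residuals.

    Assumes len(residuals) == n - d, the effective sample size.
    """
    m = len(residuals)  # plays the role of n - d
    mean = sum(residuals) / m
    dev = [a - mean for a in residuals]
    c0 = sum(v * v for v in dev)
    total = 0.0
    for lag in range(1, K + 1):
        # Sample autocorrelation of the residuals at this lag.
        r = sum(dev[t] * dev[t + lag] for t in range(m - lag)) / c0
        total += r * r / (m - lag)
    return m * (m + 2) * total


# Toy residuals (illustrative only, not from the CPI model).
q = ljung_box([1.0, 2.0, 3.0, 4.0, 5.0], K=2)
```

A large value of the statistic relative to the chi-square distribution points to correlation left in the residuals.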

In this example:

Modified Box-Pierce (Ljung-Box) Chi-Square statistic

    Lag (K)        12     24     36     48
    Chi-Square   26.0   35.3   39.8      *
    DF             10     22     34      *
    P-Value     0.004  0.036  0.227      *

Here, the data are not supposed to possess seasonal variation, so interest is mostly paid to K = 12.

The P-value for K = 12 is lower than 0.05.

Model needs revision!
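The P-values in the table can be reproduced approximately from the Chi-Square and DF rows. The sketch below uses a closed-form expression for the upper tail of the chi-square distribution that holds only when the degrees of freedom are even, which happens to be the case here (10, 22, 34). The function name `chi2_sf_even_df` is hypothetical; a statistics library would normally be used instead.

```python
import math


def chi2_sf_even_df(x, df):
    """Upper-tail probability P(X > x) for a chi-square variable.

    Valid only for even, positive degrees of freedom:
    P(X > x) = exp(-x/2) * sum_{j=0}^{df/2 - 1} (x/2)^j / j!
    """
    if df <= 0 or df % 2 != 0:
        raise ValueError("this closed form needs even, positive df")
    half = x / 2.0
    term = 1.0   # (x/2)^0 / 0!
    total = 1.0
    for j in range(1, df // 2):
        term *= half / j
        total += term
    return math.exp(-half) * total


# Chi-Square and DF values copied from the AR(1) output.
p12 = chi2_sf_even_df(26.0, 10)
p24 = chi2_sf_even_df(35.3, 22)
p36 = chi2_sf_even_df(39.8, 34)
needs_revision = p12 < 0.05
```

Small differences from the printed P-values are to be expected, since the Chi-Square values in the output are rounded to one decimal.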

A new look at the SAC and SPAC of original data:

[Figure: Autocorrelation Function for CPIChnge (with 5% significance limits for the autocorrelations); Autocorrelation against Lag.]

[Figure: Partial Autocorrelation Function for CPIChnge (with 5% significance limits for the partial autocorrelations); Partial Autocorrelation against Lag.]

The second spike in the SPAC might be considered crucial!

If an AR(p) model is correct, the ACF should decrease exponentially (monotonically or oscillating) and the PACF should have exactly p significant spikes.

Try an AR(2), i.e.

$$y_t = \delta + \phi_1 y_{t-1} + \phi_2 y_{t-2} + a_t$$

    Type        Coef  SE Coef      T      P
    AR   1    1.1684   0.1509   7.74  0.000
    AR   2   -0.4120   0.1508  -2.73  0.009
    Constant  1.0079   0.2531   3.98  0.000
    Mean      4.137    1.039

    Number of observations:  42
    Residuals:    SS = 103.852 (backforecasts excluded)
                  MS = 2.663  DF = 39

AR(2) MODEL:

Modified Box-Pierce (Ljung-Box) Chi-Square statistic

    Lag            12     24     36     48
    Chi-Square   18.6   30.6   36.8      *
    DF              9     21     33      *
    P-Value     0.029  0.081  0.297      *

Forecasts from period 42

                          95% Limits
    Period  Forecast     Lower    Upper  Actual
    43       0.76866  -2.43037  3.96769
    44       1.45276  -3.46705  6.37257

PREVIOUS MODEL (AR(1)):

    Residuals:    SS = 122.845 (backforecasts excluded)
                  MS = 3.071  DF = 40

Modified Box-Pierce (Ljung-Box) Chi-Square statistic

    Lag            12     24     36     48
    Chi-Square   26.0   35.3   39.8      *
    DF             10     22     34      *
    P-Value     0.004  0.036  0.227      *

Forecasts from period 42

                          95% Limits
    Period  Forecast     Lower    Upper  Actual
    43       1.54176  -1.89376  4.97727
    44       1.91148  -2.56850  6.39146

[Figure: ACF of Residuals for CPIChnge (with 5% significance limits for the autocorrelations); Autocorrelation against Lag.]

[Figure: PACF of Residuals for CPIChnge (with 5% significance limits for the partial autocorrelations); Partial Autocorrelation against Lag.]

Might still be problematic!

Could it be the case of a Moving Average (MA) model?

MA(1):

$$y_t = \mu + a_t - \theta\, a_{t-1}$$

$\{a_t\}$ are still assumed to be uncorrelated and identically distributed with mean zero and constant variance.

MA(q):

$$y_t = \mu + a_t - \theta_1 a_{t-1} - \dots - \theta_q a_{t-q}$$

• always stationary

• mean = $\mu$

• is in effect a moving average with weights $1, -\theta_1, -\theta_2, \dots, -\theta_q$ for the (unobserved) values $a_t, a_{t-1}, \dots, a_{t-q}$
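To see the "moving average of shocks" reading of an MA(q) model in action, the sketch below builds a series from a given list of shocks. It assumes the sign convention in which the MA terms enter with minus signs and treats shocks before the start of the list as zero. The function name `ma_series` is hypothetical.

```python
def ma_series(shocks, mu, thetas):
    """Build y_t = mu + a_t - theta_1 * a_{t-1} - ... - theta_q * a_{t-q}.

    shocks : list of a_t values; shocks before the start are taken as 0
    thetas : [theta_1, ..., theta_q]
    """
    y = []
    for t in range(len(shocks)):
        value = mu + shocks[t]
        for i, theta in enumerate(thetas, start=1):
            if t - i >= 0:
                value -= theta * shocks[t - i]
        y.append(value)
    return y


# A single unit shock shows the weights 1, -theta_1 directly (toy input).
impulse = ma_series([1.0, 0.0, 0.0, 0.0], mu=0.0, thetas=[0.5])
```

With a single unit shock, the output traces out the weights 1 and -0.5 and then returns to the mean, which illustrates why the effect of a shock in an MA(q) model lasts only q periods.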

[Figures: Time Series Plot of AR(1)_0.2 and Time Series Plot of MA(1)_0.2; each series plotted against Index.]

[Figures: Time Series Plot of AR(1)_0.8 and Time Series Plot of MA(1)_0.8; each series plotted against Index.]

[Figures: Time Series Plot of AR(1)_(-0.5) and Time Series Plot of MA(1)_(-0.5); each series plotted against Index.]

Try an MA(1):

Final Estimates of Parameters

    Type         Coef  SE Coef       T      P
    MA   1    -1.0459   0.0205  -51.08  0.000
    Constant   4.5995   0.3438   13.38  0.000
    Mean       4.5995   0.3438

    Number of observations:  42
    Residuals:    SS = 115.337 (backforecasts excluded)
                  MS = 2.883  DF = 40

Modified Box-Pierce (Ljung-Box) Chi-Square statistic

    Lag            12     24     36     48
    Chi-Square   38.3   92.0  102.2      *
    DF             10     22     34      *
    P-Value     0.000  0.000  0.000      *

Not at all good!

Forecasts from period 42

                          95% Limits
    Period  Forecast     Lower    Upper  Actual
    43       1.27305  -2.05583  4.60194
    44       4.59948  -0.21761  9.41656

Much wider!

[Figure: Time Series Plot for CPIChnge (with forecasts and their 95% confidence limits); CPIChnge against Time.]

[Figure: ACF of Residuals for CPIChnge (with 5% significance limits for the autocorrelations); Autocorrelation against Lag.]

[Figure: PACF of Residuals for CPIChnge (with 5% significance limits for the partial autocorrelations); Partial Autocorrelation against Lag.]

There still seem to be problems with the residuals.

Look again at ACF and PACF of original series:

[Figure: Autocorrelation Function for CPIChnge (with 5% significance limits for the autocorrelations); Autocorrelation against Lag.]

[Figure: Partial Autocorrelation Function for CPIChnge (with 5% significance limits for the partial autocorrelations); Partial Autocorrelation against Lag.]

The pattern corresponds neither with a pure AR(p), nor with a pure MA(q).

Could it be a combination of these two?

Auto Regressive Moving Average (ARMA) model

ARMA(p,q):

$$y_t = \delta + \phi_1 y_{t-1} + \dots + \phi_p y_{t-p} + a_t - \theta_1 a_{t-1} - \dots - \theta_q a_{t-q}$$

• stationarity conditions harder to define

• mean value calculations more difficult

• identification patterns exist, but might be complex:

– exponentially decreasing patterns or

– sinusoidal decreasing patterns

in both ACF and PACF (no cutting off at a certain lag)
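The ARMA(p,q) recursion can be turned into a small generator of example series. The sketch below is an illustration under stated assumptions: MA terms enter with minus signs, shocks before the start are zero, and values of the series before the start are set to the stationary mean, taken here as the constant divided by one minus the sum of the AR coefficients (which presumes a stationary model). The function name `arma_series` and the seed are hypothetical choices.

```python
import random


def arma_series(shocks, delta, phis, thetas):
    """Generate an ARMA(p, q) series from a given list of shocks a_t."""
    mean = delta / (1.0 - sum(phis))  # assumed stationary mean
    y = []
    for t, a in enumerate(shocks):
        value = delta + a
        for i, phi in enumerate(phis, start=1):
            # Use the mean for values before the start of the series.
            value += phi * (y[t - i] if t - i >= 0 else mean)
        for j, theta in enumerate(thetas, start=1):
            if t - j >= 0:
                value -= theta * shocks[t - j]
        y.append(value)
    return y


# Deterministic check: one unit shock through an ARMA(1,1) with zero constant.
impulse = arma_series([1.0, 0.0, 0.0], delta=0.0, phis=[0.5], thetas=[0.2])

# A random example series of length 300 (seed chosen arbitrarily).
rng = random.Random(1)
example = arma_series([rng.gauss(0.0, 1.0) for _ in range(300)],
                      delta=0.0, phis=[0.2], thetas=[0.2])
```

With all shocks equal to zero, the series generated this way stays at the assumed mean, which is a quick way to check a fitted constant against a reported mean.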

[Figures: Time Series Plot of ARMA(1,1)_(-0.2)(-0.2), Time Series Plot of ARMA(1,1)_(0.2)(0.2) and Time Series Plot of ARMA(2,1)_(0.1)(0.1)_(-0.1); each series plotted against Index.]

Always try to keep p and q small.

Try an ARMA(1,1):

    Type         Coef  SE Coef       T      P
    AR   1     0.6558   0.1330    4.93  0.000
    MA   1    -0.9324   0.0878  -10.62  0.000
    Constant   1.3778   0.4232    3.26  0.002
    Mean       4.003    1.230

    Number of observations:  42
    Residuals:    SS = 77.6457 (backforecasts excluded)
                  MS = 1.9909  DF = 39

Modified Box-Pierce (Ljung-Box) Chi-Square statistic

    Lag            12     24     36     48
    Chi-Square    8.4   21.5   28.3      *
    DF              9     21     33      *
    P-Value     0.492  0.429  0.699      *

Much better!

Forecasts from period 42

                          95% Limits
    Period  Forecast     Lower    Upper  Actual
    43      -1.01290  -3.77902  1.75321
    44       0.71356  -4.47782  5.90494
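The four fits tried so far can be put side by side. The sketch below simply collects the residual mean squares and the Ljung-Box P-values at K = 12 reported in the outputs, and flags the models whose P-value exceeds 0.05. The 0.05 cut-off follows the rule used earlier in these slides; the variable names are hypothetical.

```python
# (model, residual MS, Ljung-Box P-value at K = 12), copied from the outputs.
fits = [
    ("AR(1)", 3.071, 0.004),
    ("AR(2)", 2.663, 0.029),
    ("MA(1)", 2.883, 0.000),
    ("ARMA(1,1)", 1.9909, 0.492),
]

# Models whose residuals show no significant correlation at K = 12.
acceptable = [name for name, ms, p in fits if p > 0.05]

# Model with the smallest residual mean square.
smallest_ms = min(fits, key=lambda fit: fit[1])[0]

print(acceptable, smallest_ms)
```

Both criteria point to the same model here, but in general the residual check and the size of MS need not agree.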

[Figure: Time Series Plot for CPIChnge (with forecasts and their 95% confidence limits); CPIChnge against Time.]

[Figure: ACF of Residuals for CPIChnge (with 5% significance limits for the autocorrelations); Autocorrelation against Lag.]

[Figure: PACF of Residuals for CPIChnge (with 5% significance limits for the partial autocorrelations); Partial Autocorrelation against Lag.]

Now OK!

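Calculating forecasts

For AR(p) models quite simple:

$$
\begin{aligned}
\hat{y}_{t+1} &= \hat{\delta} + \hat{\phi}_1 y_t + \hat{\phi}_2 y_{t-1} + \dots + \hat{\phi}_p y_{t-(p-1)} \\
\hat{y}_{t+2} &= \hat{\delta} + \hat{\phi}_1 \hat{y}_{t+1} + \hat{\phi}_2 y_t + \dots + \hat{\phi}_p y_{t-(p-2)} \\
&\;\;\vdots \\
\hat{y}_{t+p} &= \hat{\delta} + \hat{\phi}_1 \hat{y}_{t+(p-1)} + \hat{\phi}_2 \hat{y}_{t+(p-2)} + \dots + \hat{\phi}_p y_t \\
\hat{y}_{t+p+1} &= \hat{\delta} + \hat{\phi}_1 \hat{y}_{t+p} + \hat{\phi}_2 \hat{y}_{t+(p-1)} + \dots + \hat{\phi}_p \hat{y}_{t+1}
\end{aligned}
$$

$a_{t+k}$ is set to 0 for all values of k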
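The AR(p) forecast recursion is easy to sketch in code: each new forecast is fed back in as if it were an observation, and future shocks are set to zero. The function name `forecast_ar` is hypothetical. As a check, applying one step of the recursion to the AR(1) estimates and the reported period-43 forecast should give roughly the reported period-44 forecast.

```python
def forecast_ar(history, delta, phis, steps):
    """Forecast an AR(p) model `steps` periods ahead.

    history : observed values, most recent last (at least p of them)
    phis    : [phi_1, ..., phi_p]
    Future shocks a_{t+k} are set to 0.
    """
    values = list(history)
    forecasts = []
    for _ in range(steps):
        nxt = delta + sum(phi * values[-i] for i, phi in enumerate(phis, start=1))
        forecasts.append(nxt)
        values.append(nxt)  # the forecast takes the place of the unknown value
    return forecasts


# AR(1) estimates from the output; start from the reported period-43 forecast.
step = forecast_ar([1.54176], delta=0.6211, phis=[0.8369], steps=1)[0]
```

The result differs from the printed period-44 forecast of 1.91148 only in the fourth decimal, which is consistent with the coefficients being rounded in the output.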

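For MA(q)??

MA(1):

$$\hat{y}_t = \hat{\mu} + a_t - \hat{\theta}\, a_{t-1}$$

If we, for example, set $a_t$ and $a_{t-1}$ equal to 0, the forecast would constantly be $\hat{\mu}$, which is not desirable.

Note that

$$y_{t-1} = \mu + a_{t-1} - \theta\, a_{t-2}$$

$$y_t = \mu + a_t - \theta\, a_{t-1}$$

Setting $a_{t-2} = 0$ gives $a_{t-1} = y_{t-1} - \mu$, and therefore

$$a_t = y_t + \theta\, y_{t-1} - (1+\theta)\,\mu$$

$$\hat{a}_t = y_t + \hat{\theta}\, y_{t-1} - (1+\hat{\theta})\,\hat{\mu}$$

Similar investigations can be made for ARMA models.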
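The idea of recovering the unobserved shocks from the data can be sketched for an MA(1) model as follows. The sketch assumes the sign convention with a minus in front of the MA term, sets the shock before the first observation to zero, and then rebuilds the shocks recursively. The one-step forecast uses the last recovered shock, while forecasts two or more steps ahead fall back to the mean. The function names are hypothetical.

```python
def ma1_residuals(y, mu, theta):
    """Recover shocks recursively: a_t = y_t - mu + theta * a_{t-1}, a_0 = 0."""
    a_prev = 0.0
    residuals = []
    for value in y:
        a = value - mu + theta * a_prev
        residuals.append(a)
        a_prev = a
    return residuals


def ma1_forecast(y, mu, theta, steps):
    """Forecasts from the end of y; future shocks are set to 0."""
    a_last = ma1_residuals(y, mu, theta)[-1]
    # One step ahead uses the last shock; further ahead only the mean remains.
    return [mu - theta * a_last] + [mu] * (steps - 1)


# Toy data (illustrative only, not the CPI series).
res = ma1_residuals([5.0, 4.0, 6.0], mu=5.0, theta=0.5)
fc = ma1_forecast([5.0, 4.0, 6.0], mu=5.0, theta=0.5, steps=2)
```

This matches the pattern in the MA(1) output, where the forecast for period 44 (4.59948) is essentially the estimated mean (4.5995).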