
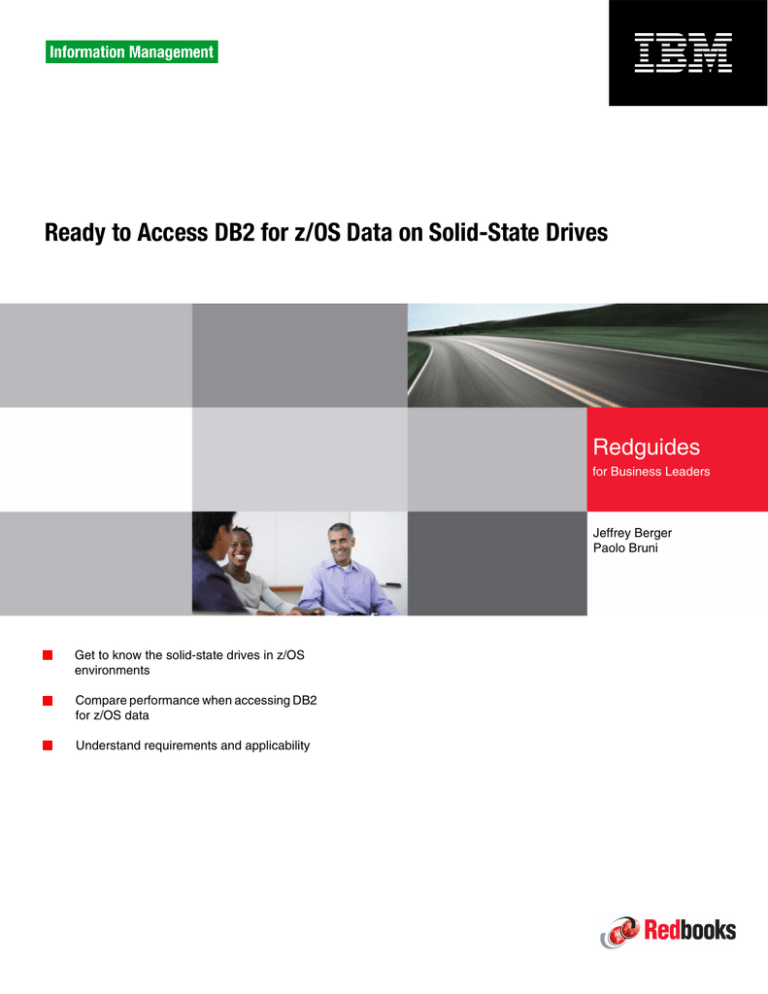

Ready to Access DB2 for z/OS Data on Solid-State Drives

Redguides for Business Leaders

Jeffrey Berger
Paolo Bruni

Get to know the solid-state drives in z/OS environments
Compare performance when accessing DB2 for z/OS data
Understand requirements and applicability

Executive overview

The dollar-to-data ratio of solid-state drives (SSDs) is still high when compared with the dollar-to-data ratio of traditional spinning disks in the enterprise storage market; however, the SSD ratio is expected to improve with continued investment in the not too distant future. By avoiding seeks and rotational delays, SSDs have the potential to improve the performance of disk storage.

This IBM® Redguide™ publication provides a broad understanding of SSDs and shares several early experiences in a laboratory setting using SSDs to improve the performance when accessing DB2® for z/OS® data.

Disclaimer

The performance data contained in this document was obtained in various controlled laboratory environments and is for reference purposes only. Do not adapt these performance numbers to your own environments as system performance standards. The results that you might obtain in other operating environments can vary significantly. Users of this information must verify the applicability for their specific environment.

© Copyright IBM Corp. 2009. All rights reserved.

Executive summary

The traditional storage technology for most large computer systems consists of spinning disks, which are known today as hard disk drives (HDDs), but solid-state drives (SSDs) have been gaining momentum lately. SSDs are much more expensive than HDDs, but their price is expected to drop, and SSDs might in the future compete against spinning disks.

In February 2009, IBM announced the IBM System Storage™ DS8000® Turbo series with the enhancements of full disk encryption and key management and a solid-state drive option. Laboratory measurements are confirming the high potential to improve the performance of DB2 for z/OS.
We present several of these measurements and the lessons that were learned from the study in this paper.

Rotating disks that spin at rates of 15 000 rotations per minute typically achieve response times in the range of 4 to 8 milliseconds (for cache misses). In contrast, SSDs provide access times in tens of microseconds, rendering data access times that are more like dynamic random access memory (DRAM). However, the access time of the SSD itself ignores the functionality required of an enterprise storage subsystem and the effects of such function on response time. An enterprise storage subsystem provides layers of virtualization in order to shield the software from dependencies on hardware geometry. An enterprise storage subsystem needs to provide continuous availability, and it needs to enable multiple hosts to share the storage. This functionality, which is provided by the DS8000 storage server, adds to the response time of SSDs in an enterprise system, bringing it to the level of hundreds of microseconds. Furthermore, for predominantly sequential accesses (cache hits), HDDs and SSDs show similar performance. Nevertheless, we must not underestimate the value of eliminating the seek and rotational delays of HDDs.

Among the lessons learned in the measurement study of DB2 for z/OS is that solid-state drives appear to cause greater stress on the channel subsystem, because SSDs enable higher levels of throughput. More improvements in the system as a whole enable solid-state drives to further realize their full potential. IBM has delivered High Performance FICON® (zHPF) to the z/OS environment to help make this happen. IBM recommends zHPF for an SSD environment. Even when the channel subsystem is not stressed, zHPF provides lower response times when accessing SSDs.

Another lesson is that disk drives are just one aspect of the I/O subsystem. I/O improvements, such as those improvements made by IBM for the z/OS environment in recent years, continue to be important.
Among these improvements are high speed channels, the Modified Indirect Data Address Word (MIDAW) [1] facility, Hyper Parallel Access Volumes (HyperPAVs), and Adaptive Multistream Prefetch (AMP) [2]. DB2 buffer pools and cache in the storage server continue to be important.

It is not uncommon for an enterprise system to experience average DB2 synchronous I/O time of two milliseconds or less, and that does not count the zero I/O time for DB2 buffer hits. So, it is simplistic to argue that the busiest data sets belong on expensive fast-performing devices, such as SSD. However, data sets with low access rates do not belong on SSD either. Rather, databases with a large working set and high access rates have the most to gain from SSD performance. But large databases require large disk capacity; consequently, you cannot purchase just a small amount of SSD storage and expect to realize a large performance benefit.

[1] Refer to How does the MIDAW facility improve the performance of FICON channels with DB2 and other workloads, IBM REDP-4201.
[2] The Adaptive Multi-stream Prefetching (AMP) technology was developed by IBM Research. AMP introduces an autonomic, workload-responsive, self-optimizing prefetching technology that adapts both the amount of prefetch and the timing of prefetch on a per-application basis in order to maximize the performance of the system.

A special case to consider is one where the type of workload suddenly changes. A classic example is the daily stock market opening, following on the heels of a batch workload. If the nightly batch workload has caused important data from the previous day’s work to be flushed from the cache, the stock market opening might result in a lower cache hit ratio than what will transpire later in the day.
If the performance during the opening is critical enough, it is helpful to use solid-state drives, even though hard disk drives probably suffice to maintain good performance later in the day. SSD is also useful to smooth out other “rough spots” during the day.

Solid-state drives

Recent trends in direct access storage devices have introduced the use of NAND flash semiconductor memory technology for solid-state drives (SSDs).

Flash memory is a nonvolatile computer memory that can be electrically erased and reprogrammed. It is a technology that is primarily used in memory cards and USB flash drives for the general storage and transfer of data between computers and other digital products. Flash memory offers good read access times and better kinetic shock resistance than hard disks. Flash memory, once packaged in a memory card, is durable and can withstand intense pressure, extremes of temperature, and even immersion in water.

Because SSDs have no moving parts, they have better performance for random accesses than spinning disks (hard disk drives (HDDs)), and require less energy to operate. SSDs are currently priced more per unit of storage than HDDs but less than previous semiconductor storage. The industry expects these relationships to remain that way for a number of years although the gap in price will likely narrow over time. As a result, both technologies are likely to coexist for a while.

In February 2009, IBM announced the IBM System Storage DS8000 Turbo series with solid-state drives (SSDs) [3]. Earlier, in October 2008, IBM had announced a complementary feature called High Performance FICON for System z® (zHPF) [4]. zHPF exploits a new channel protocol especially designed for more efficient I/O operations. IBM recommends zHPF for an SSD environment. zHPF requires a z10 processor and z/OS 1.10 (or an SPE [5] retrofitted to z/OS 1.8 or 1.9), as well as a storage server, such as the DS8000, that supports it. zHPF provides lower response times when accessing SSDs.
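The zHPF prerequisites stated above can be written as a small check. This is a minimal sketch that encodes only the requirements listed in this paper; the function name, its parameters, and the tuple form of the z/OS release are illustrative assumptions, not part of any IBM tool.

```python
def zhpf_prerequisites_met(processor, zos_release, spe_applied, storage_supports_zhpf):
    """Return True if the zHPF prerequisites listed in the text are satisfied.

    processor             -- processor family name, for example "z10"
    zos_release           -- (version, release) tuple, for example (1, 10)
    spe_applied           -- True if the zHPF SPE has been applied
    storage_supports_zhpf -- True if the storage server supports zHPF
    """
    # The text names a z10 processor and a storage server that supports zHPF.
    if processor != "z10" or not storage_supports_zhpf:
        return False
    # z/OS 1.10 qualifies as is.
    if zos_release >= (1, 10):
        return True
    # z/OS 1.8 and 1.9 qualify only with the retrofitted SPE.
    return zos_release in {(1, 8), (1, 9)} and spe_applied


print(zhpf_prerequisites_met("z10", (1, 10), False, True))
print(zhpf_prerequisites_met("z10", (1, 9), False, True))
```

The first call describes a z/OS 1.10 system and the second a z/OS 1.9 system without the SPE, so only the first satisfies the stated prerequisites.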
[3] IBM Corp. 2009. US Announcement Letter 109-120: IBM System Storage DS8000 series (Machine types 2421, 2422, 2423, and 2424) delivers new security, scalability, and business continuity capabilities.
[4] IBM United States Hardware Announcement 108-870: IBM System Storage DS8000 series (Machine types 2421, 2422, 2423, and 2424) delivers new functional capabilities (zHPF and RMZ resync).
[5] When new function is delivered as service, it is called a small programming enhancement (SPE). SPEs are delivered and tracked the same way as problems. An APAR is assigned to the SPE, and it is delivered as a program temporary fix (PTF).

The DS8000 storage subsystem with SSDs

Solid-state drives are plug-compatible in a DS8000 and are configured using RAID in exactly the same fashion as hard disks. A RAID “rank” always consists of 8 disks. The measurements that are described in this study used RAID 5, which offers the best combination of space capacity, performance, and reliability.

Let us first review how the device adapters are configured in a DS8000. A DS8000 configuration normally consists of one device adapter pair for each set of 64 disks, or eight ranks. In other words, there is one device adapter for each set of four ranks. As more ranks are added to the DS8000 subsystem, the number of device adapters is increased up to a maximum of sixteen device adapters (for 64 ranks).

Next, let us review the channel subsystem. A DS8100 supports up to 64 external ports, and a DS8300 supports up to 128 external ports, which can be used either for host channel connections (known as host adapters) or to connect to another DS8000 in order to replicate data on another storage server.

For a small number of SSD ranks, if the ranks are limited to one or two device adapters, the device adapters will limit the performance capacity of the subsystem unless the cache hit ratio is good.
However, if a few SSD ranks are spread across four or more device adapters, the performance limitations of the channel subsystem will outweigh the limitations of the device adapters, no matter what the hit ratio is. Thus, if it is necessary to sustain very high I/O rates and there are more than just two or three SSD ranks, the performance capacity of the channel subsystem is critical to the performance capacity of the I/O subsystem as a whole.

DB2 accesses

We examine the performance characteristics of the following typical DB2 access types [6]:
- DB2 synchronous I/Os
- DB2 sequential I/O
- DB2 list prefetch
- DB2 list prefetch with striping
- DB2 synchronous I/O using one or two RAID ranks

DB2 synchronous I/Os

The best and most obvious benefit of the SSDs in the DS8000 is the improvement for DB2 synchronous read I/Os. The metric used in Figure 1 is the response time for synchronous reads measured by DB2 itself (which includes overhead in z/OS to process the I/O) and adding channel overhead as measured on the z10 processor. The z/OS processing overhead on the z10 was measured to be 12 microseconds elapsed time.

As can be seen in Figure 1, zHPF affects the performance of cache hits as well as SSD accesses. For cache hits, zHPF lowers the response time from 290 microseconds to 229 microseconds. For SSD accesses, zHPF lowers the response time from 838 microseconds to 739 microseconds. HDD accesses were not re-evaluated using zHPF, because a delta of 100 microseconds is considered insignificant to HDD.

In comparison, the response times for hard disks (spinning at 15 000 RPM) range from about 4 000 microseconds for “short seeks” to 8 000 microseconds for “long seeks”. “Short seeks” are indicative of an individual data set or an individual volume being a hot spot. “Long seeks” are what you might observe when seeking between the extreme inner and outer cylinders of a hard disk, but are extremely atypical.
When the I/O is uniformly spread across the disks, we can expect the average response time to be about 6 000 microseconds, which is about seven times higher than the DS8000 can achieve using SSDs without zHPF and about eight times higher than if zHPF is used.

[6] Except where specifically stated otherwise, all SSD measurements were performed on a z10 processor with 8 FICON Express 4 channels using a DS8000 storage server. The SSDs used for the study were manufactured by STEC. Both the z10 and the DS8000 contained pre-release microcode that supported SSDs and zHPF. HDDs were measured on a z9® processor and were not remeasured on a z10 due to time constraints, but seeks and rotational delays are unaffected by the processor.

Figure 1 DB2 synchronous I/O response time (microseconds: cache hit with zHPF 229, cache hit 290, SSD with zHPF 739, SSD 838, HDD short seek 3860, HDD long seek 8000)

DB2 sequential I/O

Sequential I/O performance has been steadily improving for many years. High-speed FICON channels, with fast host adapters in the storage server, are crucial to providing good sequential performance. RAID 5 architecture eliminates the effect of hard disk drives on sequential performance, because the data is striped across eight drives, enabling the server to exploit the bandwidth of several drives in parallel. The sequential bandwidth of the disks in a RAID 5 rank exceeds the sequential bandwidth of a device adapter in the DS8000, but the channel subsystem is not yet fast enough to enable one sequential stream to absorb the bandwidth of a device adapter.

Besides high-speed channels, two other recent enhancements have contributed to faster sequential I/O. The first, the Modified Indirect Data Address Word (MIDAW) facility, was introduced in 2005 in the IBM z9 processor to improve FICON performance, especially when accessing IBM DB2 databases.
This facility is a new method of gathering data into and scattering data from discontinuous storage locations during an I/O operation. MIDAW requires a z9 or z10 processor, with z/OS 1.7 or a PTF to z/OS 1.6. MIDAW cuts FICON channel utilization for DB2 sequential I/O streams by half or more and improves the sequential throughput of extended format data sets by about 30%. In a sense, zHPF is a complement to MIDAW. They both address the issue of channel efficiency, but whereas MIDAW affects sequential I/O, zHPF affects random I/O.

The other new feature that improves sequential performance is Adaptive Multistream Prefetch (AMP). AMP was first introduced in Release 2.4G of the DS8000. It does not require any z/OS changes. AMP typically improves the sequential read throughput by another 30%. AMP achieves this improvement by increasing the prefetch quantity sufficiently to meet the needs of the application. For example, if the application is CPU bound, there is no need to prefetch a lot of data from the disk. At the opposite extreme, if the application is I/O bound and the channels are extremely fast, AMP will prefetch enough data to enable the “back-end” operations to keep up with the channel.

As more data is prefetched, more disks are employed in parallel. Therefore, high throughput is achieved by employing parallelism at the disk level. Besides enabling one sequential stream to be faster, AMP also reduces disk thrashing when there is disk contention. Disk thrashing is not an issue with SSD, because SSD does not have to seek from one cylinder to another cylinder, but parallelism is still important to SSD.

Dynamic prefetch

Figure 2 and Figure 3 show the performance of DB2’s dynamic prefetch as a function of DB2 page size when the pages are clustered. These measurements were done using the DS8000 storage server and were achievable because of high speed channels, MIDAW, and AMP. Figure 2 shows the throughput in megabytes per second.
Figure 2 Dynamic prefetch throughput (MB/sec by DB2 page size: 4K 238, 8K 250, 16K 280, 32K 280)

Figure 3 shows the reciprocal, which is the time per page.

Figure 3 Dynamic prefetch page time (microseconds by DB2 page size; data labels 17.6, 32.7, 58.5, and 58.5 for 4K, 8K, 16K, and 32K)

The throughput of dynamic prefetch ranges from 238 to 280 MB/sec as the page size increases, and the time per page ranges from 17.6 microseconds to 58.5 microseconds.

The performance of dynamic prefetch is about the same for hard disks and solid-state drives, because sequential performance is gated not by the drives, but rather by the channels and host adapters.

Sequential writes

Another question about SSD is how it performs sequential writes. This question is examined by considering the Basic Sequential Access Method (BSAM). Figure 4 shows the performance of BSAM reads and writes as the number of buffers varies. The results were the same for HDDs and SSDs. Extended Format (EF) data sets were used for the measurements, because EF is recommended for optimal BSAM performance.

Figure 4 BSAM (MB/sec by buffers per I/O: 3 tracks, reads 174 and writes 136; 5 tracks, reads 226 and writes 151)

The DB2 utilities and high-level languages, such as COBOL and PL/I, all read and write three tracks per I/O, but this chart also shows how five tracks of buffers resulted in slightly better performance. With three tracks per I/O, we get 174 MB/sec for reads and 136 MB/sec for writes.

Again, SSD does not alter the performance of sequential I/O, because sequential performance is insensitive to disk performance. As with dynamic prefetch, you might not achieve this kind of BSAM performance without a DS8000, without fast channels, without MIDAWs, or without AMP.

DB2 list prefetch

Now, let us look at poorly clustered data or sparse data.
When DB2 uses an index to select the pages, DB2 can elect to sort the record identifiers (RIDs) of the pages and fetch them using list prefetch. Because the pages are sorted, the seek times for HDD are much less than had the pages not been sorted. As the fetched pages become denser, the time per page goes down, although DB2 has to read more pages. The DB2 buffer manager further cuts the time by scheduling up to two dynamic prefetch engines in parallel.

Figure 5 shows the list prefetch time per page as a function of the page density. With SSD, the time per page is independent of the number of pages fetched. That time is only 327 microseconds, which is 40% of the time for random access. Part of the reason is the parallelism of list prefetch. The other reason is that list prefetch reading 32 pages per I/O reduces the number of I/O operations.

As we can see from Figure 5, hard disks actually do quite well when the density of pages is high. In fact, HDD and SSD perform exactly the same when DB2 fetches at least 14% of the pages. When DB2 fetches only 1% of the pages, SSD is almost four times faster than HDD, but even then SSDs are not as beneficial as they are for random I/Os. The reason is that even when DB2 fetches one page out of a hundred on HDDs, the seek distance is relatively small and part of the pages requires no physical seek.

Figure 5 List prefetch time per page (microseconds versus % of pages read, for HDD, SSD, and cache)

Another observation from Figure 5 is that, if the data is entirely in cache, the time per page is only 47 microseconds, which is still seven times faster than SSD. Thus, the value of cache hits when using list prefetch is not as high as it is for synchronous I/Os. The reason for this difference is that list prefetch relieves part of the channel overhead incurred by synchronous I/Os.

Figure 6 illustrates what these times per page mean in terms of elapsed time.
A table was created with five million pages, and an index was used to qualify a percentage of the pages. As the percentage increases, the elapsed time increases. The elapsed time increases linearly for SSD and for cache, but not so with hard disks. Refer to Figure 6.

Figure 6 List prefetch elapsed time (seconds versus % of pages read, for HDD, SSD, and cache)

DB2 list prefetch with striping

DB2 uses two engines for list prefetch. Each engine executes one list prefetch I/O at a time. A list prefetch I/O serially processes a batch of 32 4K pages. Striping the table space is a way to increase the degree of parallelism.

Because a RAID 5 rank contains eight disks, it is natural to expect that a RAID 5 rank can sustain up to 8 degrees of parallelism. However, the downside of using striping to increase the parallelism of an HDD rank is that it might cause additional seeking, because striping disrupts the “skip sequential” nature of the page ordering that DB2 does. SSD has no such problem. Thus, SSD makes striping within a rank more effective. Striping with HDDs is more effective when the stripes are spread across ranks (with at most one stripe per rank), but it is not practical except in a benchmark situation to dedicate a rank to a stripe.

Figure 7 illustrates the effect of striping where 2% of the 4K pages are fetched. Because the outer part of the HDD disks was used for the non-striped (that is, one stripe) case, the HDD times represent the best case. The HDD time per page was 1041 microseconds, the same as shown in Figure 5. The next HDD measurement used eight stripes, and the stripes were allocated on the first eight consecutive volumes on the outer part of the disks; the result was 632 microseconds, resulting in a 41% reduction.
However, when the first four volumes (outer) and last four volumes (inner) were used, the seek times were so poor that the response time increased to 1019 microseconds, just 2% less than when striping was not used. Had the stripes been randomly placed, we can expect a response time in the neighborhood of 800 microseconds per page.

Figure 7 List prefetch with striping (microseconds per page for HDD short seeks, HDD long seeks, and SSD, with 1 stripe, 8 stripes on 1 rank, 8 stripes on 2 ranks, and 8 stripes on 8 ranks)

Next, we can see from Figure 7 what happens when the eight HDD stripes are spread across eight ranks. Here, the response time drops significantly to just 122 microseconds, representing about an 8.5-fold reduction. The reason the response time decreased by more than eight times is that spreading the stripes in this way reduced the seek distances. Yet, as impressive as this reduction might seem, it is impractical to dedicate a rank for each stripe.

Now, let us examine how solid-state drives improve the performance in an extremely consistent manner, without major concerns about how stripes compete with each other in a RAID rank. The response time without striping is 327 microseconds. Allocating eight stripes in one RAID rank reduces this time to 77 microseconds, a 76.5% reduction. Thus, increasing the degree of I/O parallelism from two to sixteen provides a four-fold reduction in response time. Dividing the stripes among two RAID ranks only improves the performance to 66 microseconds, an 80% reduction compared to no striping, but not much better than when the stripes were concentrated on one rank.

The fact that solid-state drives cause striping to be so effective for list prefetch is just the beginning of the benefits. As we are about to see, the same reason that makes striping more effective also enables solid-state drives to sustain much higher throughput for DB2 synchronous I/Os.
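The striping comparisons above are simple ratios of the measured times per page. The following minimal sketch recomputes a few of them from the values quoted in the text; the function and constant names are illustrative only.

```python
def percent_reduction(before_us, after_us):
    """Percentage by which the time per page drops from before_us to after_us."""
    return 100.0 * (before_us - after_us) / before_us


def fold_reduction(before_us, after_us):
    """How many times smaller after_us is than before_us."""
    return before_us / after_us


# Times per page in microseconds, as quoted in the text for 2% of pages fetched.
SSD_NO_STRIPING = 327
SSD_8_STRIPES_1_RANK = 77
HDD_NO_STRIPING = 1041
HDD_8_STRIPES_INNER_OUTER = 1019
HDD_8_STRIPES_8_RANKS = 122

print(f"SSD, 8 stripes on 1 rank: {percent_reduction(SSD_NO_STRIPING, SSD_8_STRIPES_1_RANK):.1f}% reduction")
print(f"SSD, 8 stripes on 1 rank: {fold_reduction(SSD_NO_STRIPING, SSD_8_STRIPES_1_RANK):.1f}-fold reduction")
print(f"HDD, inner and outer volumes: {percent_reduction(HDD_NO_STRIPING, HDD_8_STRIPES_INNER_OUTER):.1f}% reduction")
print(f"HDD, 8 stripes on 8 ranks: {fold_reduction(HDD_NO_STRIPING, HDD_8_STRIPES_8_RANKS):.1f}-fold reduction")
```

Expressing the results both as a percentage and as a fold reduction makes it easier to compare the SSD case, where one rank already captures most of the gain, with the HDD case, where the gain depends heavily on where the stripes are placed.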
DB2 synchronous I/O using one or two RAID ranks

The next question concerns how the performance of one RAID rank scales as the I/O rate for one RAID rank increases. The measurement was conducted in a way that cache hits were avoided.

The workload used for this study is called DB2IO. Each thread in DB2IO accesses a separate table space consisting of one row per 4K page. An index is scanned to select qualifying pages, and the query was designed so that no two qualifying pages reside on the same track to prevent track-level caching from producing cache hits. The cache was flushed prior to each run.

For “short seeks”, the table spaces and indexes were forced into a small number of contiguous volumes on the outer cylinders of the hard disks. For “long seeks”, half of the table spaces and indexes were placed on the inner volumes, and the other half of the table spaces and indexes were placed on the outer volumes. When using SSDs, volume placement does not affect response time, but because SSD space was limited, there can only be 64 table spaces per SSD rank, thereby limiting the number of DB2IO threads to 64 per rank. Sixty-four threads were sufficient to determine the knee of the response time curve.

The results of these tests of DB2IO without zHPF are shown in Figure 8. (This set of tests was done on a z9 processor with only 4 FICON Express 4 channels.) The performance for both short and long HDD seeks is shown along with SSD. You can interpolate the typical HDD response time somewhere between short and long seeks. As stated earlier, when the I/O is uniformly spread across the disks, we can expect the average response time to be about 6 000 microseconds. Figure 8 suggests that the knee of the HDD curve is somewhere between 1 000 and 2 000 I/O operations per second (IO/sec).
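A rough back-of-the-envelope check is consistent with that range. The sketch below is not the method used in the study; it assumes that all eight drives of a rank serve cache-miss reads in parallel, that each drive handles one I/O at a time, and that the service time equals the HDD response times quoted earlier (about 4 000, 6 000, and 8 000 microseconds). The function name is illustrative.

```python
def rank_saturation_rate(drives_per_rank, service_time_us):
    """Rough upper bound on IO/sec for one rank.

    Assumes every drive in the rank is kept busy with exactly one
    I/O at a time and that each I/O takes service_time_us microseconds.
    """
    return drives_per_rank * 1_000_000 / service_time_us


DRIVES_PER_RANK = 8  # the text states that a RAID rank always consists of 8 disks

for label, service_time_us in [("short seeks", 4000), ("uniform spread", 6000), ("long seeks", 8000)]:
    rate = rank_saturation_rate(DRIVES_PER_RANK, service_time_us)
    print(f"{label}: about {rate:.0f} IO/sec")
```

Under these assumptions, the bound falls between 1 000 and 2 000 IO/sec for the quoted HDD response times, which is the same range in which the measured response time begins to climb.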
Figure 8 One RAID rank: 100% cache miss (response time in milliseconds versus I/O rate for HDD short seeks, HDD long seeks, and SSD)

The SSD response time starts out at 838 microseconds for low rates, and it remains below 1 millisecond up to about 10 000 IO/sec. The response time continues to climb as the number of threads increases. The response time with 64 threads was about 4 milliseconds with 18 600 IO/sec. One SSD rank can achieve about ten times as much I/O as one HDD rank. The limitation of the rank is not the SSDs, but rather the device adapter in the DS8000.

Considerations specific to SSDs

We now examine several of the key new aspects of utilizing SSDs for DB2 data:
- How does performance scale for a large number of drives
- What is the importance of HyperPAV
- SSD migration
- Does clustering still matter

How does performance scale for a large number of drives

Because SSDs provide extremely high throughput on the “back end”, increased channel utilization can cause more queuing on the front end. When the channel utilization increases beyond 50%, queuing begins to affect response times significantly. To minimize channel utilization, it is good to configure many channels and it is important to employ zHPF to achieve good channel efficiency. A good cache hit ratio avoids contention on the back end, but does nothing to relieve the contention on the front end. Thus, it is quite possible for the back end performance capacity to far exceed the performance capabilities of the channel subsystem.

Channel efficiency is defined as the relationship between throughput and channel utilization. zHPF improves the channel efficiency in much the same way that MIDAW does, except that where MIDAW affects sequential operations, such as DB2 dynamic and sequential prefetch, zHPF affects random I/Os, such as DB2 synchronous I/Os.
zHPF is a new channel program architecture that replaces the old Channel Command Word (CCW) architecture that has existed since the 1950s. The same physical channels support a mixture of the new and old channel programs. The Channel Activity Report in the Resource Measurement Facility (RMF) now shows a count of how many of each type of I/O were executed.

We examine a set of measurements in order to evaluate the constraints of the critical components.

Figure 9 illustrates the case where there are two SSD ranks configured behind one Device Adapter (DA) pair, that is, two Device Adapters. This example considers a workload without any cache hits using Extended Format (EF) data sets.

Figure 9 One DA pair: 16 SSDs: 100% cache miss (response time in milliseconds versus thousand IO/sec for 8 FICON, 8 zHPF, 4 FICON, 4 zHPF, and 2 zHPF channels)

We start by comparing FICON to zHPF with eight channels. As the throughput increases beyond 18 000 IO/sec, the channel utilization without zHPF is such that queuing on the channel and host adapter begins to add to the I/O response time. Without zHPF, the I/O response time reaches 4 milliseconds at 31 000 IO/sec, but with zHPF, the I/O response time does not reach 4 milliseconds until the throughput reaches 38 000 IO/sec.

To accentuate the effects of high channel utilization, we now examine what happens if the number of channels is reduced to four channels. With zHPF, there is extremely little reduction in performance, but with FICON, the maximum throughput drops from 31 000 to only 22 000 IO/sec.

Finally, if the number of channels is reduced to two channels, even zHPF cannot achieve more than 24 000 IO/sec, which is still slightly better than FICON does with four channels. The conclusion is that zHPF is about twice as efficient as FICON.

We can see this improvement more clearly and in more detail in Figure 10, which plots the relationship between throughput and channel utilization for both EF and non-EF data sets.
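The "about twice as efficient" conclusion can be checked by dividing the maximum throughput by the number of channels. This minimal sketch uses only the two reduced-channel measurements quoted above and assumes, as an interpretation rather than a stated fact, that both configurations were limited by the channels and not by the device adapters. The names are illustrative.

```python
# Maximum throughput (IO/sec) quoted in the text for 100% cache misses,
# keyed by (protocol, number of channels).
MAX_IO_PER_SEC = {
    ("FICON", 4): 22_000,
    ("zHPF", 2): 24_000,
}


def per_channel_rate(protocol, channels):
    """Maximum IO/sec per channel for one measured configuration."""
    return MAX_IO_PER_SEC[(protocol, channels)] / channels


ficon_rate = per_channel_rate("FICON", 4)
zhpf_rate = per_channel_rate("zHPF", 2)

print(f"FICON: {ficon_rate:.0f} IO/sec per channel")
print(f"zHPF:  {zhpf_rate:.0f} IO/sec per channel")
print(f"zHPF / FICON: {zhpf_rate / ficon_rate:.2f}")
```

The eight-channel measurements are left out on purpose, because with that many channels the throughput may be gated by something other than the channels.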
Because media manager appends a 32-byte suffix to the end of each VSAM Control Interval of an EF data set, EF reduces FICON efficiency by about 20%. However, EF has no effect on the efficiency of zHPF. Both FICON and zHPF achieve slightly higher efficiency with cache hits compared to cache misses.

Figure 10 shows that one channel using zHPF can achieve slightly over 23 000 cache misses per second. In theory, eight of these channels can achieve 184 000 cache misses per second. If the channel efficiency were really constant, we would expect eight channels at 38 000 IO/sec to be 20.6% busy. However, the actual channel utilization at 38 000 IO/sec with eight channels is 25.6%. So, it is not clear how well we can extrapolate from one channel to many channels, or if the channel utilization metric is precise enough to do this extrapolation.

Figure 10 Channel efficiency: DB2 synchronous reads (channel utilization in % versus thousand IO/sec for FICON non-EF misses, FICON EF misses, FICON non-EF hits, FICON EF hits, zHPF misses, and zHPF hits)

Another factor to consider is the effect of cache hits. The relationship between the utilization of the channels and the utilization of the device adapters is determined by the cache hit ratio using the following formula:

DA IO/sec = cache miss rate × channel IO/sec

For example, if the channel IO/sec is 40 000 and the cache hit ratio is 50%, the DA IO/sec is only 20 000. The more cache hits there are, the less stress there is on the device adapters, and the more likely it is that channel performance characteristics will dominate and limit the throughput capacity of the I/O subsystem. That is why High Performance FICON is so important for solid-state drives.

Figure 11 shows SSD compared to HDD performance with a workload of random reads without any cache hits in the following configurations [7]:
- Sixteen HPF, 96 x 146 GB HDDs on 6 DA pairs in 12 x 6+P RAID5 arrays.
  Eighty-four HDDs are active and 12 HDDs are hot spares. The throughput of this configuration is limited to 20 000 IO/sec, at which point the HDDs have become a bottleneck.
- Thirty-two HPF, 384 x 146 GB HDDs on 6 DA pairs in 48 x 6+P RAID5 arrays. Three hundred and sixty HDDs are active and 24 HDDs are hot spares. Quadrupling the HDDs and doubling the channels improve the HDD throughput to 90 000 IO/sec.
- Sixteen HPF, 96 x 146 GB SSDs on 6 DA pairs in 12 x 6+P RAID5 arrays. Eighty-four SSDs are active and 12 are hot spares. The SSDs dramatically improve the response times at lower IO/sec and raise the maximum throughput to 120 000 IO/sec.

These results show extremely good SSD response times with zHPF all the way to 120 000 IO/sec, where the DS8300 controllers become the performance bottleneck.

Figure 11 SSD compared to HDD in large configuration: Random read (4 KB random read, large configuration; response time in milliseconds versus thousand IO/sec for 16-HPF-96SSD, 16-HPF-96HDD, and 32-HPF-384HDD)

Looking ahead to the future, it is almost certain that both channels and device adapters will become faster, and solid-state drives provide the motivation for such improvements. However, by improving the efficiency of the channels, High Performance FICON can be used to reduce the number of channels needed to take full advantage of the performance capacity of solid-state drives or to enable a given set of channels to exploit a larger disk configuration.

What is the importance of HyperPAV

HyperPAV has been available since Release 2.4 of the DS8000 and z/OS 1.8, with PTFs retrofit to z/OS 1.6 and 1.7. HyperPAV is an enhancement to the old style of managing Parallel Access Volume (PAV) aliases. Without HyperPAV, alias unit control blocks (UCBs) are “bound” to a specific base address, and the overhead of rebinding an alias to a separate base address is not insignificant.
Also, the concept of binding an alias has the potential to waste part of the addresses when two or more logical partitions (LPARs) share the storage.

Footnote 7: Chart extracted with permission from IBM System Storage DS8000 with SSDs: An In-Depth Look at SSD Performance in DS8000, white paper, by Lee LaFrese, Leslie Sutton, David Whitworth, Storage Systems Performance, Storage Systems Group, IBM.

HyperPAV eliminates the binding of aliases to a base address. Instead, an alias is assigned to a base address only for the duration of an I/O. Furthermore, at a given point in time, various LPARs can use the same alias address for separate base addresses.

SSD has the potential to increase the I/O rate for a system. As the I/O rate increases, there is a potential for faster changes in the I/O distribution with respect to volumes. Bigger swings can create more alias rebinds. Hence, HyperPAV is needed to combat the problems associated with alias rebinds.

HyperPAV helps eliminate IOSQ time by pushing the I/O queuing down to a lower level of the I/O subsystem, which is usually the disks. Assuming that disk performance is the biggest physical limitation of throughput, the number of UCB addresses must be chosen so that there can be enough I/O parallelism to enable high disk utilization. Allocating more UCB addresses than what is needed to achieve high disk utilization is useless (except for those cases where the physical disks are not the primary limitation of throughput). Doing so only shifts the queuing from the IOS queues to the disk queues. In other words, the value of further IOSQ reduction is largely offset by much higher disk contention.

How does SSD change this phenomenon? First, let us consider that out of a typical HDD response time of 6 milliseconds, most of the time occupies both a UCB address and a disk.
However, with SSDs, a much lower percentage of the response time is actually spent using a disk, and a greater percentage is spent occupying the channels, which is also true of cache hits. Rather than needing a sufficient number of UCB addresses to drive the HDD utilization above a desired level, it is more interesting to consider how many UCB addresses are needed to drive the channel utilization to a certain desired level, such as 50%. The value of further IOSQ reduction is largely offset by higher channel contention. So, if you observe a little IOSQ time, but you do not want to see higher channel utilizations, you might prefer to allow the IOSQ time to remain until you can do something to relieve the channel contention, such as employing zHPF or increasing the number of channels.

SSD migration

Identifying good data candidates to move to SSD is beyond the scope of this paper, but we can discuss it briefly. In general, disconnect time is the component of I/O response time that is often a good indicator of disk seek and rotational delay. However, disconnect time is also an indicator of write delays due to synchronous remote data replication. For this reason, IBM is enhancing the SMF type 42 subtype 6 records to distinguish the disconnect time of reads and writes.

The DS8000 provides the ability to obtain cache statistics for every volume in the storage subsystem. These measurements include the number of operations from DASD cache to the back-end storage, the number of random operations, the number of sequential reads and sequential writes, the time to execute those operations, and the number of bytes transferred. These statistics are placed in the SMF 74 subtype 5 record.

New z/OS SAS-based tooling analyzes the SMF 42-6 and SMF 74-5 records to produce a report that identifies data sets and volumes that can benefit from residing on SSD technology.
These new tools are available for download from the z/OS downloads Web page:

http://www.ibm.com/systems/z/os/zos/downloads/flashda.html

You can obtain reports to:

Identify page sets with high amounts of READ_ONLY_DISC time
Analyze DASD cache statistics to identify volumes with high I/O rates
Identify the data sets with the highest amount of write activity (number of write requests)

You can use IBM Data Mobility Services – Softek z/OS Dataset Mobility Facility (zDMF) host-based software to move allocated mainframe data sets. The DB2 REORG utility can move table spaces to volumes that reside on SSDs.

Does clustering still matter

There is still debate about whether clustering matters to DB2 performance when solid-state drives are used. Certainly SSDs enable list prefetch to perform much better, but list prefetch still does not perform as well as dynamic prefetch. The reason is that, although the disk itself is insensitive to clustering, other parts of the I/O subsystem are still sensitive to clustering.

DB2 for z/OS evolves to take into account hardware changes (CPU speed and disk drive characteristics) that affect access path selection. The DB2 optimizer adjusts its cost modeling as needed. DB2 9 already has a statistic called DATAREPEATFACTOR, which indicates the density of data and helps in deciding whether RIDs need to be sorted for list prefetch. We think that clustering is likely to become less important as time goes on.

If clustering matters less, a corollary question is whether REORG still matters. The answer is yes. The reason most often given for doing REORGs against a DB2 table space is to reclaim space. Another reason is to rebuild compression dictionaries. A performance reason is to avoid page overflows, which occur when a row becomes larger than its original size, a situation that happens often with compressed table spaces.
When such a row is selected, two synchronous I/Os can occur instead of one. Although SSD might reduce the time for each I/O, two I/Os are still slower than one.

Conclusion

From the performance perspective, solid-state drives and High Performance FICON are both exciting new technologies that might change the landscape of enterprise storage in the future. The traditional concerns about seek and rotational delays might disappear. This study indicates that the response time for DB2 synchronous I/Os is typically between 250 (cache hit) and 800 microseconds using SSDs, depending on the cache hit ratio, and that zHPF also contributes to lower response time.

SSDs are another enhancement in a continuing series of major performance enhancements to the DS8000 I/O subsystem that have included high-speed channels, MIDAWs, HyperPAV, and AMP. To complement the higher throughput capability of the disk subsystem, IBM recommends High Performance FICON to increase the throughput capability of the channel subsystem.

Because SSDs are a new storage technology, the industry will need more experience regarding their reliability and failure modes, but their performance for synchronous I/O shows an opportunity for all but the sequentially dominant workloads.
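
The channel arithmetic discussed with Figure 10 and the device adapter (DA) formula can be reproduced with a few lines of Python. The following is a minimal sketch and not part of the original measurements. The function names extrapolated_utilization and da_io_per_sec are hypothetical, the per-channel limit of 23 000 IO/sec is the approximate figure quoted in the text ("slightly over 23 000"), and the model assumes that channel efficiency is constant, which the measured utilization of 25.6% calls into question.

```python
# Illustrative sketch only: reproduces the arithmetic quoted in the text.
# Function names are hypothetical; the input numbers come from the text.


def extrapolated_utilization(io_per_sec, per_channel_max, channels):
    """Channel utilization (%) expected if channel efficiency were constant.

    per_channel_max is the IO/sec that one channel sustains at 100% busy.
    """
    return 100.0 * io_per_sec / (per_channel_max * channels)


def da_io_per_sec(channel_io_per_sec, cache_hit_ratio):
    """DA IO/sec = cache miss ratio x channel IO/sec.

    cache_hit_ratio is a fraction between 0.0 and 1.0.
    """
    cache_miss_ratio = 1.0 - cache_hit_ratio
    return cache_miss_ratio * channel_io_per_sec


if __name__ == "__main__":
    per_channel_max = 23_000   # approximate zHPF cache misses/sec per channel
    channels = 8
    measured_pct = 25.6        # measured utilization reported in the text

    predicted_pct = extrapolated_utilization(38_000, per_channel_max, channels)
    print(f"theoretical limit: {per_channel_max * channels} misses/sec")
    print(f"predicted utilization at 38 000 IO/sec: {predicted_pct:.2f}%")
    print(f"measured minus predicted: {measured_pct - predicted_pct:.2f} points")

    # Example from the text: 40 000 channel IO/sec with a 50% cache hit ratio.
    print(f"DA IO/sec: {da_io_per_sec(40_000, 0.5):.0f}")
```

The predicted utilization works out to about 20.65%, which the text quotes as 20.6%. The difference of roughly five points from the measured 25.6% is the reason that the text cautions against extrapolating from one channel to many.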
References

For more information about accessing DB2 for z/OS data on solid-state drives:

IBM United States Announcement 109-120: IBM System Storage DS8000 series (Machine types 2421, 2422, 2423, and 2424) delivers new security, scalability, and business continuity capabilities:
http://www.ibm.com/common/ssi/cgi-bin/ssialias?infotype=AN&subtype=CA&htmlfid=897/ENUS109-120&appname=USN

IBM United States Hardware Announcement 108-870: IBM System Storage DS8000 series (Machine types 2421, 2422, 2423, and 2424) delivers new functional capabilities (zHPF and RMZ resync):
http://www.ibm.com/common/ssi/cgi-bin/ssialias?infotype=AN&subtype=CA&htmlfid=897/ENUS108-870&appname=USN

Mark Moshayedi and Patrick Wilkison, STEC, Enterprise SSDs, ACM Queue:
http://mags.acm.org/queue/20080708/

Jeffrey Berger and Paolo Bruni, IBM, How does the MIDAW facility improve the performance of FICON channels with DB2 and other workloads, IBM REDP-4201, available at:
http://www.redbooks.ibm.com/redpapers/pdfs/redp4201.pdf

IBM System z and System Storage DS8000: Accelerating the SAP Deposits Management Workload With Solid State Drives, white paper available at:
http://www.ibm.com/support/techdocs/atsmastr.nsf/WebIndex/WP101442

Lee LaFrese, Leslie Sutton, David Whitworth, Storage Systems Performance, Storage Systems Group, IBM, IBM System Storage DS8000 with SSDs: An In-Depth Look at SSD Performance in DS8000, white paper, currently in draft version.

Notices

This information was developed for products and services offered in the U.S.A.

IBM may not offer the products, services, or features discussed in this document in other countries. Consult your local IBM representative for information on the products and services currently available in your area. Any reference to an IBM product, program, or service is not intended to state or imply that only that IBM product, program, or service may be used.
Any functionally equivalent product, program, or service that does not infringe any IBM intellectual property right may be used instead. However, it is the user's responsibility to evaluate and verify the operation of any non-IBM product, program, or service.

IBM may have patents or pending patent applications covering subject matter described in this document. The furnishing of this document does not give you any license to these patents. You can send license inquiries, in writing, to:

IBM Director of Licensing, IBM Corporation, North Castle Drive, Armonk, NY 10504-1785 U.S.A.

The following paragraph does not apply to the United Kingdom or any other country where such provisions are inconsistent with local law: INTERNATIONAL BUSINESS MACHINES CORPORATION PROVIDES THIS PUBLICATION "AS IS" WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESS OR IMPLIED, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF NON-INFRINGEMENT, MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. Some states do not allow disclaimer of express or implied warranties in certain transactions, therefore, this statement may not apply to you.

This information could include technical inaccuracies or typographical errors. Changes are periodically made to the information herein; these changes will be incorporated in new editions of the publication. IBM may make improvements and/or changes in the product(s) and/or the program(s) described in this publication at any time without notice.

Any references in this information to non-IBM Web sites are provided for convenience only and do not in any manner serve as an endorsement of those Web sites. The materials at those Web sites are not part of the materials for this IBM product and use of those Web sites is at your own risk.

IBM may use or distribute any of the information you supply in any way it believes appropriate without incurring any obligation to you.
Information concerning non-IBM products was obtained from the suppliers of those products, their published announcements or other publicly available sources. IBM has not tested those products and cannot confirm the accuracy of performance, compatibility or any other claims related to non-IBM products. Questions on the capabilities of non-IBM products should be addressed to the suppliers of those products.

This information contains examples of data and reports used in daily business operations. To illustrate them as completely as possible, the examples include the names of individuals, companies, brands, and products. All of these names are fictitious and any similarity to the names and addresses used by an actual business enterprise is entirely coincidental.

COPYRIGHT LICENSE:

This information contains sample application programs in source language, which illustrate programming techniques on various operating platforms. You may copy, modify, and distribute these sample programs in any form without payment to IBM, for the purposes of developing, using, marketing or distributing application programs conforming to the application programming interface for the operating platform for which the sample programs are written. These examples have not been thoroughly tested under all conditions. IBM, therefore, cannot guarantee or imply reliability, serviceability, or function of these programs.

This document REDP-4537-00 was created or updated on December 7, 2009.

© Copyright International Business Machines Corporation 2009. All rights reserved. Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.

Trademarks

IBM, the IBM logo, and ibm.com are trademarks or registered trademarks of International Business Machines Corporation in the United States, other countries, or both.
These and other IBM trademarked terms are marked on their first occurrence in this information with the appropriate symbol (® or ™), indicating US registered or common law trademarks owned by IBM at the time this information was published. Such trademarks may also be registered or common law trademarks in other countries. A current list of IBM trademarks is available on the Web at http://www.ibm.com/legal/copytrade.shtml

The following terms are trademarks of the International Business Machines Corporation in the United States, other countries, or both:

DB2®, DS8000®, FICON®, IBM®, Redbooks (logo)®, Solid®, System Storage™, System z®, z/OS®, z9®

SAP, and SAP logos are trademarks or registered trademarks of SAP AG in Germany and in several other countries.

Other company, product, or service names may be trademarks or service marks of others.