A Proposed Approach to Assess Supply Chain Risks to Meet the New Challenges in the Defense Industry

by

Cheng-Lung Chou

B.S. Mechanical Engineering, Columbia University, 1998

M.S. Mechanical Engineering, Johns Hopkins University, 2000

SUBMITTED TO THE MIT SLOAN SCHOOL OF MANAGEMENT AND THE ENGINEERING

SYSTEMS DIVISION IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE

DEGREES OF

MASTER OF BUSINESS ADMINISTRATION

AND

MASTER OF SCIENCE IN ENGINEERING SYSTEMS

IN CONJUNCTION WITH THE LEADERS FOR GLOBAL OPERATIONS PROGRAM AT THE

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

June 2012

© 2012 Cheng-Lung Chou. All rights reserved.

The author hereby grants to MIT permission to reproduce and to distribute publicly paper and electronic copies of this thesis document in whole or in part in any medium now known or hereafter created.

Signature of Author

Engineering Systems Division, MIT Sloan School of Management

May 11th, 2012

Certified by

Roy E. Welsch, Ph.D.

Eastman Kodak Leaders for Global Operations Professor of Management

Professor of Statistics and Engineering Systems

Certified by

Daniel E. Whitney, Ph.D.

Senior Lecturer, Emeritus

MIT Engineering Systems Division

Thesis Supervisor

Accepted by

Oli de Weck, Chair, Engineering Systems Education Committee

Associate Professor of Aeronautics and Astronautics and Engineering Systems

Accepted by

Maura M. Herson, Director, MBA Program

MIT Sloan School of Management

A Proposed Approach to Assess Supply Chain Risks to Meet the New Challenges in the Defense Industry

by

Cheng-Lung Chou

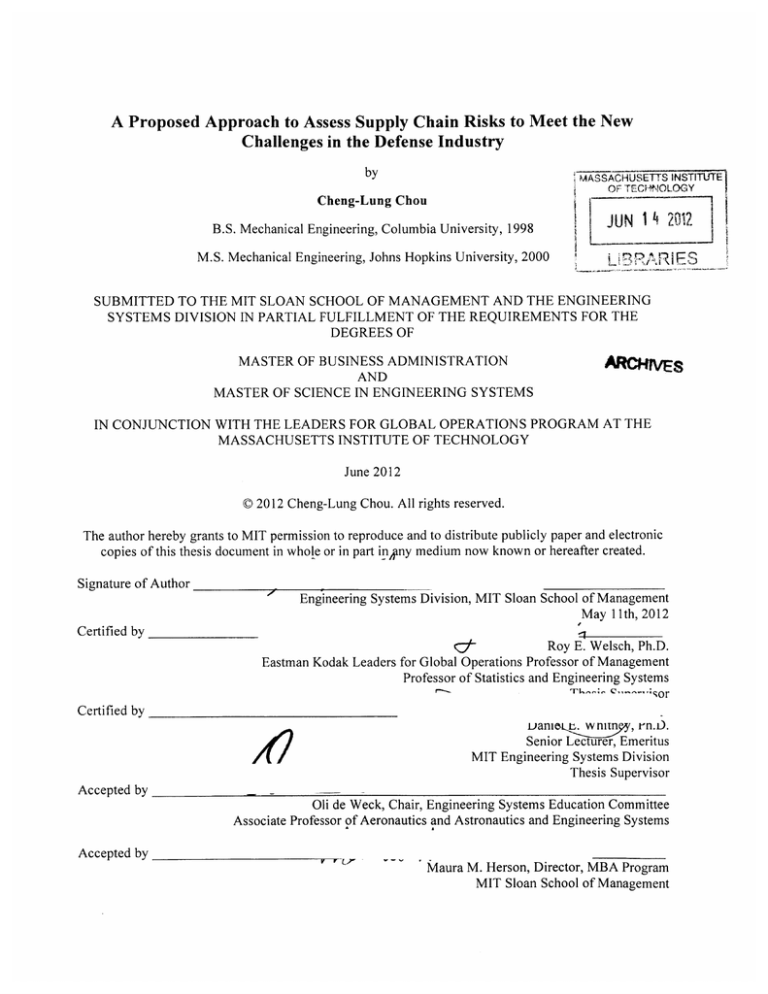

Submitted to the MIT Sloan School of Management and the Engineering Systems Division on May 11,

2012 in Partial Fulfillment of the Requirements for the Degrees of Master of Business Administration and

Master of Science in Engineering Systems

ABSTRACT

The Department of Defense (DoD) doubled its planned investments in new weapon systems from about $700 billion in 2001 to nearly $1.4 trillion in 2006. Despite the technical superiority of its weapon systems, DoD's weapon systems acquisition process has been plagued by cost increases, schedule delays, and performance shortfalls¹.

To address the maturity gaps, DoD mandated in 2008 that all prime contractors (including Raytheon) on new US government funded defense programs evaluate and document the technology and manufacturing readiness levels (T/MRL) of their supply base. There are 10 manufacturing and 9 technology readiness levels, and specific levels must be met at certain program milestones. DoD has released a set of questionnaires (Deskbooks) designed to evaluate a supplier's maturity level in areas such as engineering design, operations, manufacturing, and facilities.

The goal of this thesis is to develop an assessment method, using the Deskbooks as a reference, to

address the core issues in the defense acquisition process. The thesis will also take a deep dive into

Raytheon's supply chain management philosophy and analyze how Raytheon's strategic sourcing

initiatives align with the new challenges in the defense industry.

Thesis Supervisor: Roy E. Welsch

Title: Professor of Statistics and Engineering Systems

Thesis Supervisor: Daniel E. Whitney

Title: Senior Lecturer, Emeritus

1 Government Accountability Office. GAO-06-391 Defense Acquisitions: Assessments of Selected Major Weapon Programs. March 2006

ACKNOWLEDGEMENT

I want to thank the Leaders for Global Operations (LGO) program at MIT Sloan for giving me this

amazing opportunity to not only spend two years at this prestigious institution but also work on this

meaningful and impactful project with Raytheon.

I want to thank my Raytheon supervisors John O'Sullivan and James Tiernan, project champion Charles

Mullins, engineering director Alan Glickman, and many others (Mark Yun, Hans Borchardt, John

Baranauskus, Carl Bender, John Stranberg, and countless others) within the Supplier Engineering

organization at Raytheon Integrated Defense Systems for not only their support but also their knowledge

and mentorship.

I also want to thank my advisors, Roy Welsch and Dan Whitney, for their invaluable insights provided

throughout my internship and during my thesis writing.

I want to especially thank my family for the moral and financial support throughout these two very

challenging years. Without them, I would not have been able to make it through the program.


TABLE OF CONTENTS

ABSTRACT
ACKNOWLEDGEMENT
TABLE OF CONTENTS
LIST OF FIGURES
1 INTRODUCTION
1.1 Project Motivation
1.2 Manufacturing Readiness Levels (MRL) Overview
1.3 Thesis Overview
2 RAYTHEON COMPANY OVERVIEW
2.1 Raytheon Integrated Defense Systems (IDS)
2.2 Supplier Engineering Organization
2.3 Unique Challenges
2.4 Supply Chain as a Competitive Advantage
3 LITERATURE REVIEW
3.1 Trust in Supply Chain
3.2 Supplier Evaluation
3.3 Applications of MRL
3.4 Current/Published MRL Assessment Methods
4 ASSESSMENT TOOL DEVELOPMENT
4.1 Forming a Comprehensive Data Set
4.2 Artifact-Based Approach
4.3 Supplier Engagement
4.4 Method of Data Gathering
4.5 Tool Enhancement
5 METRICS GENERATION & ALGORITHM CREATION
5.1 Risk Factor Calculation
5.2 Scorecard Metrics
5.3 Percentage to Goal
5.4 Risk Mapping
5.5 Summary Report
6 FUTURE ENHANCEMENT & CONCLUSIONS
7 APPENDIX (MRL Maturation Matrix)
8 REFERENCES
9 ACRONYMS

LIST OF FIGURES

Figure 1 Total Projected Cost of DoD's Top Five Programs in Fiscal Years 2001 and 2006
Figure 2 Cost and cycle time growth for 26 weapon systems
Figure 3 Examples of DoD programs with reduced buying power
Figure 4 Analysis of DoD Major Defense Acquisition Program Portfolios
Figure 5 Life-Cycle Cost Determination
Figure 6 Cost & Schedule Comparison of Programs with Mature vs. Immature Technologies
Figure 7 MRL/TRL Relations to Milestones and Technical Reviews
Figure 8 MRL Progression
Figure 9 MRL Sub-Threads Definitions
Figure 10 Distribution of Average Procurement Unit-Cost Growth after a Production Decision for Major Defense Acquisition Programs (For All Major Defense Acquisition Programs Entering Production in Fiscal Year 2000 or Later)
Figure 11 Honeywell's MRL Assessment Model
Figure 12 MRL Assist Beta
Figure 13 Examples of MRL Assist Beta Questions/Answers
Figure 14 Sample Scorecard
Figure 15 Example of Answers for MRL Assist Beta
Figure 16 Samples of Process-Related Questions
Figure 17 Summary of MRL Questions
Figure 18 MRL Assessment Flowchart
Figure 19 Scoring for Product- & Business-Level Assessments
Figure 20 Inputs on Quality of Closure Path
Figure 21 Guideline for Ranking
Figure 22 Artifacts Filtering Criteria Utilizing VBA
Figure 23 Sample Screenshot for VBA-Assisted MRL Tool
Figure 24 Sample MRL Scorecard (Threads with ratings 2 or more levels below desired state are shown in red)
Figure 25 Example of Percentage-to-Goal Calculation
Figure 26 Sample Risk Map
Figure 27 Assessment Summary
Figure 28 Illustration of Integer Programming

1 INTRODUCTION

The risks to the profit margins of major defense contractors, such as Raytheon and Lockheed Martin, had often been negated by the cost-plus contracts used by the Department of Defense (DoD). On development programs, CPFF (cost plus fixed fee, one type of cost-plus contract) helped to reduce the risks to the companies' margins (although there were still significant risks to the companies' reputations and to the extension of a project if overruns became commonplace). However, the cost-plus model placed a heavy burden on the defense budget and the DoD.

The DoD doubled its planned investments in new weapon systems from about $700 billion in 2001 to nearly $1.4 trillion in 2006². While some of this increase was due to increased spending on the war against terror, deficiencies in the DoD weapon acquisition process were the main contributors. In a detailed report (GAO-06-391), the Government Accountability Office (GAO) independently assessed 52 weapon systems in 2006 and found gaps in technology, design, and production readiness. Some of the financial and schedule impacts are highlighted below:

Figure 1 Total Projected Cost of DoD's Top Five Programs in Fiscal Years 2001 and 2006³

Billions of constant 2006 dollars

| 2001 Program | 2001 Cost | 2006 Program | 2006 Cost |
|---|---|---|---|
| F-22A Raptor aircraft | $65.0 | Joint Strike Fighter aircraft | $206.3 |
| DDG-51 class destroyer ship | $64.4 | Future Combat Systems | $127.5 |
| Virginia class submarine | $62.1 | Virginia class submarine | $80.4 |
| C-17 Globemaster airlift aircraft | $51.1 | DDG-51 class destroyer ship | $70.4 |
| F/A-18E/F Super Hornet fighter aircraft | $48.2 | F-22A Raptor aircraft | $65.4 |
| Total | $290.8 | Total | $550.0 |

Figure 2 Cost and cycle time growth for 26 weapon systems⁴

Billions of constant 2006 dollars

| | First full estimate | Latest estimate | Percentage change |
|---|---|---|---|
| Total cost | $547.7 | $627.4 | 14.6 |
| RDT&E cost | $120.4 | $164.9 | 37.0 |
| Weighted average acquisition cycle time | 154.5 months | 180.2 months | 16.7 |

2 Government Accountability Office. GAO-06-391 Defense Acquisitions: Assessments of Selected Major Weapon Programs. March 2006

3 Government Accountability Office. GAO-06-391 Defense Acquisitions: Assessments of Selected Major Weapon Programs, Page 5. March 2006

4 Government Accountability Office. GAO-06-391 Defense Acquisitions: Assessments of Selected Major Weapon Programs, Page 6. March 2006

The consequences of cost and cycle-time growth are particularly serious because they reduce the buying power of the defense dollar⁵. This is a problem not only from a financial and budgetary standpoint but also, potentially, for national security. The GAO report highlighted six programs in particular; the reduction in defense buying power is shown below:

Figure 3 Examples of DoD programs with reduced buying power⁶

| Program | Initial estimate | Initial quantity | Latest estimate | Latest quantity | Percent of unit cost increase |
|---|---|---|---|---|---|
| Joint Strike Fighter | $189.8 billion | 2,866 aircraft | $206.3 billion | 2,458 aircraft | 26.7 |
| Future Combat Systems | $82.6 billion | 15 systems | $127.5 billion | 15 systems | 54.4 |
| F-22A Raptor | $81.1 billion | 648 aircraft | $65.4 billion | 181 aircraft | 188.7 |
| Evolved Expendable Launch Vehicle | $15.4 billion | 181 vehicles | $28.0 billion | 138 vehicles | 137.8 |
| Space Based Infrared System High | $4.1 billion | 5 satellites | $10.2 billion | 3 satellites | 315.4 |
| Expeditionary Fighting Vehicle | $8.1 billion | 1,025 vehicles | $11.1 billion | 1,025 vehicles | 35.9 |
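The unit-cost percentages in Figure 3 follow from dividing each total estimate by its quantity and comparing the initial and latest results. The sketch below reproduces that arithmetic for three of the programs, assuming the rounded totals shown in the figure are the inputs; the function name `unit_cost_increase_pct` is illustrative and does not come from the GAO report. Because the published totals are rounded, values recomputed this way can differ slightly from the GAO's.

```python
def unit_cost_increase_pct(initial_cost, initial_qty, latest_cost, latest_qty):
    """Percent change in cost per unit between the initial and latest estimates."""
    initial_unit = initial_cost / initial_qty
    latest_unit = latest_cost / latest_qty
    return (latest_unit / initial_unit - 1.0) * 100.0


# (initial estimate in $B, initial quantity, latest estimate in $B, latest quantity),
# taken from the rounded values listed in Figure 3.
programs = {
    "Joint Strike Fighter": (189.8, 2866, 206.3, 2458),
    "Future Combat Systems": (82.6, 15, 127.5, 15),
    "F-22A Raptor": (81.1, 648, 65.4, 181),
}

results = {name: unit_cost_increase_pct(*vals) for name, vals in programs.items()}

for name, pct in results.items():
    print(f"{name}: {pct:.1f}% unit cost increase")
```

The F-22A row shows why unit cost is the more telling measure: the total estimate fell, yet the cost per aircraft nearly tripled because the quantity dropped from 648 to 181.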

As pointed out in the article from the Center for Strategic and International Studies⁷, there are several types of cost-plus contracts:

* Award-fee contracts ($38B in FY'07) - Tie the contractor fee to the quality of the end product
* Incentive-fee contracts ($8B in FY'07) - Provide a larger fee for contracts that meet or exceed performance targets such as cost savings
* Fixed-fee contracts ($32B in FY'07) - Entail a pre-negotiated fee for the contractor, providing no incentive for performance or cost savings

5 Government Accountability Office. GAO-06-391 Defense Acquisitions: Assessments of Selected Major Weapon Programs. March 2006

6 Government Accountability Office. GAO-06-391 Defense Acquisitions: Assessments of Selected Major Weapon Programs, Page 9. March 2006

7 Joachim Hofbauner & Greg Sanders. Defense Industrial Initiatives Current Issue Cost-Plus Contracts.

Some argue that cost-plus contracts, particularly those with fixed fees, may provide insufficient incentives to reduce cost⁸. The problem only worsened after 2006, as an updated GAO report shows.

Figure 4 Analysis of DoD Major Defense Acquisition Program Portfolios⁹

Fiscal year 2009 dollars

| | Fiscal year 2003 | Fiscal year 2007 | Fiscal year 2008 |
|---|---|---|---|
| Portfolio size | | | |
| Number of programs | 77 | 95 | 96 |
| Total planned commitments | $1.2 trillion | $1.6 trillion | $1.6 trillion |
| Commitments outstanding | $724.2 billion | $875.2 billion | $786.3 billion |
| Portfolio indicators | | | |
| Change to total RDT&E costs from first estimate | 37 percent | 40 percent | 42 percent |
| Change to total acquisition cost from first estimate | 19 percent | 26 percent | 25 percent |
| Total acquisition cost growth | $183 billion | $301.3 billion | $296.4 billion |
| Share of programs with 25 percent increase in program acquisition unit cost growth | 41 percent | 44 percent | 42 percent |
| Average schedule delay in delivering initial capabilities | 18 months | 21 months | 22 months |

As part of the effort to address this issue, DoD has begun to shift from the cost-plus model to the firm-fixed-price model. This shift is unavoidable now that the defense budget has become a hot political topic. In the televised 2008 presidential debates, the candidates named over-spending of the defense budget under the cost-plus model as one of the causes of the government budget crisis¹⁰.

8 Joachim Hofbauner & Greg Sanders. Defense Industrial Initiatives Current Issue Cost-Plus Contracts.

9 Government Accountability Office. GAO-09-326 Defense Acquisitions: Assessments of Selected Major Weapon Programs, Page 1. March 2009.

10 New York Times. The First Presidential Debate between Senator John McCain and Barack Obama in Oxford, Miss. September 26, 2008.

1.1 Project Motivation

Shifting to a firm-fixed-price model is only a starting point. The other important insight gathered from the GAO reports over the years is that mature technologies, stable designs, and production processes in control are the characteristics of a successful DoD program¹¹. All three components must be executed in unison; succeeding at only one, or at any two, of them is not sufficient. A mature technology that cannot be manufactured is just as damaging to the success of a weapon program as an immature technology in a tightly controlled manufacturing process.

Therefore it is critical to have a way to assess how mature a technology is, how tightly controlled a manufacturing environment is, and, most importantly, how to transition a technology into production. As Mark Gordon, Director of Defense Programs for the National Center for Advanced Technologies, argued in his presentation dated April 17th, 2008, manufacturing is the key to technology transition¹². The ability to "manufacture a component is not subservient to technology development cycle, but central to it"¹³. He went on to argue that "The capability to produce a technology/material is often not seen as part of technology transition or innovation, and may be ignored by the Science and Technology community. However, it is a core focus in highly competitive commercial markets"¹⁴. The maturation of technology must be synchronized with the ability to manufacture, support, and test.

This is not to understate the importance of mature technology and design, as up to 90% of the total life-cycle cost can be locked in even before a program enters production.

11 Jim Morgan. Manufacturing Readiness Levels (MRLs) for Multi-Dimensional Assessment of Technology Maturity. May 10th, 2006

12 Mark Gordon. The Need for Manufacturing Innovation and Readiness. April 17, 2008

13 Mark Gordon. The Need for Manufacturing Innovation and Readiness, Slide 3. April 17, 2008

14 Mark Gordon. The Need for Manufacturing Innovation and Readiness, Slide 3. April 17, 2008

Figure 5 Life-Cycle Cost Determination¹⁵

[Chart: life-cycle cost determination and cost-reduction opportunities across the program phases Idea, Validation, Design, Development, Production, and Support. Up to 85% of costs are committed during design and development; at Milestone B, up to 90% of costs could be locked in. Source: DARPA Rapid Design Exploration and Optimization (RaDEO) Project.]

In his presentation, Mark Gordon compared the schedule delays and cost overruns of 62 selected major weapon programs. In addition to the chart below, several startling facts were revealed:

* Only 16% of programs achieved mature technology at Milestone B (entering system development and demonstration)
* At critical design review, only 44% achieved technology maturity and 27% demonstrated design stability (90% of drawings releasable)
* At Milestone C (the start of production), 10% of programs were collecting data on process control (0% in control). 47% reported they had already conducted or planned to conduct a developmental test of a production-representative article (i.e. prototype)

15 Mark Gordon. The Need for Manufacturing Innovation and Readiness, Slide 3. April 17, 2008

Figure 6 Cost & Schedule Comparison of Programs with Mature vs. Immature Technologies¹⁶

Based on 62 programs; technology status at beginning of development

| | Mature | Immature |
|---|---|---|
| RDT&E cost increase | 2.6% | 32.3% |
| Acquisition unit cost increase | <1% | >30% |
| Average schedule delay | 1 month | 20 months |

With this in mind, along with the increasing focus and political pressure to address the defense budget issue, John Young, Under Secretary of Defense for Acquisition, Technology and Logistics, signed off on the update to DoD 5000.2 Acquisition Reform on December 8th, 2008, requiring that manufacturing readiness level (MRL) and technology readiness level (TRL) assessments of critical technology elements (CTE) and key manufacturing technologies (KMT) be performed and documented on US government funded defense programs. The assessments are to be executed by prime DoD contractors on their internal processes and technologies as well as on their supply base.

With the official release of the Technology Readiness Assessment Deskbook in July 2009 and the Manufacturing Readiness Level Deskbook in July 2010, MRL and TRL assessments (T/MRA) are now contractual requirements for defense contracts, for example Raytheon's $214 million preliminary design contracts awarded in February 2011. In addition to being contractual requirements, T/MRA will also be used to down-select among competing contractors for further and future contract awards.

In the Deskbooks, the different levels of MRLs and TRLs are shown in relation to system milestones and technical reviews (Figure 7). The Deskbooks essentially comprise a set of questions designed as a guide to evaluate a supplier's manufacturing and technology maturity levels across areas such as engineering, production, supply chain, and cost control. As shown in Figure 7, there are 9 levels for TRA and 10 levels for MRA. As mentioned in the paragraph above, Raytheon will need to assess, document, report, and achieve a specified MRL "X" and TRL "X" for several CTEs and KMTs at both the system integrator level (Raytheon) and the supply chain level (1st-tier supplier) as part of the contractual requirements to be delivered at major program milestone reviews.

16 Mark Gordon. The Need for Manufacturing Innovation and Readiness, Slide 5. April 17, 2008

At the beginning of this project (February 2011), there was no established, objective way to perform this evaluation, since the only references were the MRL and TRL Deskbooks. As a source of competitive advantage, it was critical for Raytheon to take a leading role in interpreting these new requirements from its main customer (U.S. Government & DoD) and to design and implement a process not only as a deliverable to its customer but also as an attempt to shape and guide the direction of T/MRL assessments.

Due to resource constraints, and to better manage the scope, the project focused primarily on the interpretation and implementation of the MRL, as will this thesis. Furthermore, the project focused on the 1st-tier supplier level in order to better understand the technology and manufacturing risks embedded in the supply chain and to formulate a mitigation strategy to address these risks by performing an MRL assessment (MRA).

Figure 7 MRL/TRL Relations to Milestones and Technical Reviews¹⁷

[Figure: the DoD acquisition life cycle (Pre-Systems Acquisition, Systems Acquisition, Sustainment) with its decision points and technical reviews (ITR, ASR, SRR, SFR, PDR, CDR, TRR, SVR (FCA/PRR)), the TRAs and post-PDR/post-CDR assessments, and the corresponding Technology Readiness Levels and Manufacturing Readiness Levels.]

17 OSD Manufacturing Technology Program in collaboration with the joint Service/Industry MRL Working Group. Manufacturing Readiness Level Deskbook, Page 3-2. July 30th, 2010

1.2 Manufacturing Readiness Levels (MRL) Overview

As previously mentioned, there are 10 MRLs. Each MRL establishes expectations for manufacturing maturity that should result in minimum manufacturing risk for a specific product and build phase (e.g. prototyping, pilot production, Low Rate Initial Production, Full Rate Production). The matrix below demonstrates the progression in maturity.

Figure 8 MRL Progression

[Figure: matrix of MRL progression (levels shown include MRL 4, MRL 6, and MRL 10), describing for each level what has been demonstrated, what the environment was, and what preparation is completed for.]

There are 9 major areas (threads) to focus on in order to determine the appropriate MRL: Technology & Industrial Base, Design, Cost & Funds, Materials, Process, Quality, Manufacturing Personnel, Facilities, and Manufacturing Management (Figure 9). Most threads are divided into sub-threads that examine a particular topic in more depth. The Appendix¹⁸ gives the detailed breakdown and explains the criteria and requirements for each sub-thread.

18 OSD Manufacturing Technology Program in collaboration with the joint Service/Industry MRL Working Group. Manufacturing Readiness Level Deskbook, Page A-1 to A-17. July 30th, 2010

With the Appendix as a reference, a questionnaire can be constructed and sent to the targeted supplier(s) for evaluation. By analyzing their responses to the questions, an MRL rating may be generated and assigned to the particular supplier for the specific CTE.
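One way to picture how questionnaire responses might roll up into a rating is sketched below. It is an illustration only: the thread scores are invented, the rule that the overall MRL equals the lowest thread score is an assumption rather than the method developed in this thesis, and the names `overall_mrl` and `threads_at_risk` are hypothetical. The two-level gap used for flagging mirrors the scorecard convention named in the list of figures (Figure 24).

```python
# Illustrative thread scores for one supplier and one CTE (made-up values).
thread_scores = {
    "Technology & Industrial Base": 6,
    "Design": 5,
    "Cost & Funds": 6,
    "Materials": 4,
    "Process": 6,
    "Quality": 7,
    "Manufacturing Personnel": 6,
    "Facilities": 6,
    "Manufacturing Management": 5,
}


def overall_mrl(scores):
    """Assumed roll-up rule: the weakest thread sets the overall rating."""
    return min(scores.values())


def threads_at_risk(scores, target, gap=2):
    """Names of threads rated `gap` or more levels below the target MRL."""
    return sorted(name for name, score in scores.items() if target - score >= gap)


target_mrl = 6  # hypothetical level required at the next milestone
print("Overall MRL:", overall_mrl(thread_scores))
print("Threads at risk:", threads_at_risk(thread_scores, target_mrl))
```

Taking the minimum is a deliberately conservative choice for the sketch: a single immature thread, such as materials, is treated as enough to hold back the overall rating.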

Figure 9 MRL Sub-Threads Definitions

Description
A. Technology & Industrial Base
   Tech Maturity
   A.1 Transition to Production
   A.2 Manufacturing Technology Development
B. Design
   B.1 Producibility Program
   B.2 Design Maturity
C. Cost & Funds
   C.1 Cost Modeling
   C.2 Cost Analysis
   C.3 Manufacturing Investment Bucket
D. Materials
   D.1 Material Maturity
   D.2 Material Availability
   D.3 Material Supply Chain
   D.4 Special Handling
E. Process
   E.1 Modeling & Simulation
   E.2 Process Maturity
   E.3 Yields & Rates
F. Quality
G. Manufacturing Personnel
H. Facilities
   H.1 Tooling/STE/SIE
   H.2 Facilities
I. Mfg. Mgmt
   I.1 Mfg. Planning & Scheduling
   I.2 Mfg. Material Planning

1.3 Thesis Overview

In chapter one, the motivation and an overview of MRL have been provided. Chapter two gives a brief overview of Raytheon Integrated Defense Systems and the Supplier Engineering team that is responsible for carrying out this MRL initiative.

Chapter three reviews past work on supply chain risk and supplier evaluation and examines current supply chain risk assessment methods.

Chapter four discusses the methodology used to construct the MRA process: what resources were required, what assumptions were made, and what attempts were made to simplify the process without sacrificing its robustness.

Chapter five discusses the development of metrics, focusing on how the team at Raytheon uses the answers gathered from suppliers to generate a set of metrics both for reporting externally to the customer and for generating an internal plan to address the risks associated with a particular supplier.

Finally, chapter six summarizes the main points of this thesis and offers recommendations to Raytheon on how the MRA process can be improved.

2 RAYTHEON COMPANY OVERVIEW

Raytheon Company is a technology and innovation leader specializing in defense, homeland security, and other government markets throughout the world. Raytheon specializes in manufacturing radars and electro-optical sensors (airborne, naval and ground based), satellite sensors, radios and digital communication systems, and missile defense. Company revenue in 2011 was $25B, with 71,000 employees worldwide. Raytheon serves customers in over 80 countries. Raytheon's 10-K, filed on February 23rd, 2011, indicated that 23% of sales were international, up from 20% just over two years earlier.

Raytheon operates in six different business segments: Integrated Defense Systems, Intelligence and

Information Systems, Missile Systems, Network Centric Systems, Space and Airborne Systems, and

Technical Services. The development of the MRA process took place at Raytheon Integrated Defense

Systems.

2.1 Raytheon Integrated Defense Systems (IDS)

Headquartered in Tewksbury, Massachusetts, Raytheon Integrated Defense Systems is a leader in

global capabilities integration, providing affordable, integrated solutions to a broad international and

domestic customer base. IDS leverages its core domain knowledge and capabilities in sensors, command,

control and communication (C3), persistent surveillance/intelligence, surveillance and reconnaissance

(ISR), effects and mission support, to provide integrated naval, air and missile defense and civil security

response solutions. Key customers include the U.S. Navy, Army and Air Force, and the U.S. Missile

Defense Agency (MDA), and numerous international customers19. The Patriot Air & Missile Defense

System for the U.S. Army is one of the best-known products from Raytheon IDS. The three main

business segments for Raytheon IDS are Global Integrated Sensors, Integrated Air & Missile Defense,

and Seapower Capability Systems. As of 2011, Raytheon IDS generated $5B with 13,900 employees

worldwide.

19 Raytheon Company. Form 10-K. February 23rd, 2011

2.2 Supplier Engineering Organization

The Supplier Engineering (SE) team is organized under Integrated Supply Chain. The primary

function of the team is to act as liaisons between design engineering and Raytheon's supply base. The

task of interpreting, designing, and implementing the MRA process fell under the jurisdiction of this

department.

2.3 Unique Challenges

The defense industry is driven by engineering and programs while bound by stringent regulatory

compliance, and it has a massive supply base and demanding customers20. With the Pentagon, the world's largest

weapons buyer, looking to cut defense expenditures by nearly $500B over the next decade21, defense

contractors such as Raytheon must look to international sales to diversify their revenue portfolio and to

mitigate risks. As of 2012, foreign orders account for more than one third of Raytheon's backlog.

While this international expansion plan will give defense contractors such as Raytheon the

necessary reserve to weather the federal budget deficit storm, foreign arms sales need to be compliant

with ITAR (International Traffic in Arms Regulations). In addition, the sales need to be approved by the

U.S. Congress, as was the case with the 2011 sale of Patriot Missiles to Taiwan worth over $1B.

In the context of MRL assessment, international sales present another challenge. Often, as

contractors such as Raytheon and Lockheed Martin bid for international contracts, the foreign government

requests that a certain portion of the contract go to local manufacturers, as was the case with Raytheon's

Patriot Missile deal with Turkey22. Since Raytheon must work with pre-selected Turkish suppliers, the

MRL assessment is now more of a risk mitigation method than a contractual requirement. Raytheon

now must face risks in technology maturity, transition to production, production readiness, cost, and most

important of all, system integration.

20 Gartner. Supply Chain Transformation in the Manufacturing Sector: Raytheon Company. February 24th, 2011

21 Karen Jacobs and Andrea Shalal-Esa. U.S. Arms Makers See Flat Sales in Face of Cuts. Reuters. January 26th, 2012

22 Hurriyet Daily News. US Raytheon announces missile project worth nearly $4B for Turkey. May 26th, 2011

In another scenario, Raytheon often is exposed to a sole-source risk because a specific supplier

might have a proprietary technology (CTE) that is crucial to the particular weapon system. The CTE

might have been requested by the customer or identified by Raytheon engineering. The technology might

be mature enough but not yet at the stage where it can be manufactured at scale and efficiently. In this case

the MRL assessment is even more critical as it is now both a contractual requirement to the customer and

a basis to form a risk mitigation plan in order for the weapon program to succeed.

2.4 Supply Chain as a Competitive Advantage

With a supply chain as complex as one that Raytheon has, it is critical for Raytheon to manage its

supply chain diligently. Excellence in supply chain is not only a prerequisite to ensure program/mission

success but also a financial incentive, as between 65 and 70 cents of every dollar of Raytheon's

$25B revenue is in the supply chain23. With an efficient supply chain, Raytheon can obviously be

more competitive as it is able to provide better solutions to customers and a level of service that

customers expect.

Efficiency in supply chain does not equate to lowest cost. Cost is important, but so are best value

and speed. To achieve this, Raytheon started to rationalize its supply chain. Raytheon set out to

reduce the number of suppliers to an optimal level where it is now possible to "sit with them, share with

them a business plan, align with their business plan, and have discussions around their capacity and their

capability"24. Raytheon's goal is to pull the suppliers into the design phase early on to avoid problems

later. With fewer suppliers, Raytheon no longer has to deal with thousands of them.

The suppliers are chosen "purposefully, based on their technology, their capability and their alignment to

Raytheon's business model"25. With this, Raytheon seeks to partner with the suppliers not just for today's

need but for tomorrow's as well.

23 SupplyChainBrain. How Raytheon Transformed Its Supply Chain. October 20th, 2011

24 SupplyChainBrain. How Raytheon Transformed Its Supply Chain. October 20th, 2011

The criticality of supply chain management is echoed by a report published by Apogee Consulting

where it was pointed out that "Supply chain management may well be the key to program execution

success"26. The article analyzed two recent DoD programs where Raytheon was responsible for

one and a competing defense contractor was responsible for the other. Raytheon's program was

successful because "Raytheon invested in its supply chain and worked with its suppliers in a partnership

to develop common processes and align approaches"27. In other words, Raytheon took the initiative and

the responsibility to share the risks with the suppliers.

On the other hand, the other defense contractor encountered many problems because it transferred

program quality and execution risks to the suppliers. It had the mentality that when the subcontract

manager, buyer, or procurement manager said cost/schedule/quality/performance risks have been pushed

downward in the supply chain, the risks were mitigated.

Digging a little deeper into Raytheon's strategic supply chain initiatives, we can see that Raytheon

adopted common sourcing practices for a small number of commodities. The idea behind this was to

provide engineers in selected programs with a common language when purchasing materials across the

company28. With the key suppliers chosen, Raytheon can "share its technology roadmaps with them so

all parties are aligned on systems and business plans. Raytheon will provide suppliers with extended

inventory visibility by allowing them access to its material requirements planning system. In turn,

Raytheon will gain improved visibility to supplier risk issues so it can proactively mitigate potential

snags"29.

25 SupplyChainBrain. How Raytheon Transformed Its Supply Chain. October 20th, 2011

26 Apogee Consulting. Raytheon Builds on Supply Chain Management Success. April 4th, 2011

27 Apogee Consulting. Raytheon Builds on Supply Chain Management Success. April 4th, 2011

28 Industry Week. Raytheon Shores Up Its Supply Chain. March 16th, 2011

29 Industry Week. Raytheon Shores Up Its Supply Chain. March 16th, 2011

How can other companies in the defense industry learn from Raytheon? Raytheon's supply chain

leadership team has identified six initiatives against which other manufacturers can benchmark30: supply

chain span of control, cross-function alignment, performance management, sourcing and procurement

organization redesign, integration with program acquisition and engineering, and finally talent

management.

With a supply chain management philosophy such as this, Raytheon does not see the

customer's MRL requirement as a hindrance. Rather, Raytheon sees this as an opportunity to differentiate

itself from competition, showcase its unique value proposition to the customer base, and take a leadership

role in re-shaping supply chain management to better meet the challenges in the new defense acquisition

environment.

30 Gartner. Supply Chain Transformation in the Manufacturing Sector: Raytheon Company. February 24th, 2011

3 LITERATURE REVIEW

In this chapter, the literature on several topics is reviewed. The reviews focus on past

research on trust in supply chain, supplier evaluation, applications of MRL, and current/published

methods for assessing MRLs.

3.1 Trust in Supply Chain

In order for Raytheon to carry out the strategic supply chain initiatives discussed in the previous

chapter, it is critical to build trust, but it is not easy to do so. As argued in the thesis of Andrew Corum31,

"although the engineering functions may involve a great deal of collaboration in manufacturing and

product design, this is shadowed by the governance functions who, in selecting and writing contracts with

suppliers, are distrustful and even adversarial"32. This is very true in the defense and aerospace industry

where many of the risks are simply passed down to the first-tier suppliers and blame games ensue when

things do go wrong. Because of this adversarial nature, how would suppliers view Raytheon as Raytheon

approaches them with this MRL initiative?

As Corum mentioned in his thesis, the nature of the supplier-buyer transactions will not be as

adversarial if the transaction is not a one-time deal. If the supplier can understand the buyer's long-term

interest, then the supplier may trust the buyer not to yield to short-run temptations33. This is exactly the

approach Raytheon is taking with its key suppliers. With the number of suppliers reduced, Raytheon can

now focus on the key suppliers to build a relation, one where mutual understanding and risk sharing are

the main themes.

And this is how Raytheon intends to market the MRL assessment to key suppliers. The message

being sent is loud and clear: the MRL assessment is a way to help both Raytheon and the supplier,

and it is not a one-time purchase. By demonstrating competency in MRL assessment, Raytheon can be

more competitive in bidding for government contracts. In return, as suppliers are put through the

MRL assessments by Raytheon, the suppliers get an in-depth look at how Raytheon's internal processes

work, how Raytheon's technology roadmaps align with those of the suppliers, and how the suppliers

can improve their performance not only on the current program but also on processes across the

organization to make themselves more competitive and profitable.

31 Andrew Corum. Design and Development of a Supplier Evaluation Process. May 8th, 2009

32 Andrew Corum. Design and Development of a Supplier Evaluation Process, Page 19. May 8th, 2009

33 Andrew Corum. Design and Development of a Supplier Evaluation Process. May 8th, 2009

3.2 Supplier Evaluation

Companies such as Dun & Bradstreet and ThomasNet provide some good overviews of domestic

suppliers. Unfortunately this information is of little use in the context of the MRL assessments. Raytheon

as a whole keeps Dun & Bradstreet data on all of its suppliers as an overview of its supply base with

regard to finance and risk management.

As Corum further pointed out in his work, there is no one right way to evaluate a supplier. In fact,

"there are virtually infinite possibilities in how to evaluate a supplier"34.

It is often not wise to focus on a

single aspect of a supplier, but too broad a scope will also prove cumbersome and inefficient. In

Corum's work, the assessment focused on experience, EHS (environment, health, and safety), logistics &

delivery, quality, operations, communication, financial records and technical competence35.

In a similar work by Steven Croce, the risk assessment focused on36:

* Design Risk
    o Design Maturity
    o Knowledge Transfer
        - Language Barriers
        - CAD Data Compatibility
* Financial Risk
    o Supplier Financial Standing
        - Financial Ratios
        - Debt Rating
        - Payables
    o Exchange Rates
    o Labor Rates
    o Proposed Price
        - Cost Audits
        - Comparisons of Other Offers
* Operation Risk
    o Material Purchasing
    o Quality Standards
    o Shipping Mechanism
    o Capabilities
        - Capacity
        - Lead Time
        - Previously Delivered Metrics
* Business Risk
    o Geopolitical Risks
    o Worker Risks
        - Unions
        - Strikes
    o Demand Stability
    o Uncategorized Ratings from Online Database
    o Licensing

34 Andrew Corum. Design and Development of a Supplier Evaluation Process, Page 29. May 8th, 2009

35 Andrew Corum. Design and Development of a Supplier Evaluation Process. May 8th, 2009

36 Steven Croce. Risk Management Framework for Evaluating Suppliers. May 7th, 2007

Looking at some of these past works on supplier evaluation, it becomes evident that MRL

assessment covers a large portion of these areas. MRL focuses on determining what the current state of

the supplier is, what the desired state of the supplier is, and most importantly what needs to be done, with

both Raytheon and the supplier bearing responsibilities, in order for the supplier to get to the desired state.

With that in mind, the only topics the MRL does not cover in the two above studies are experience,

communication, financial records/standings, geopolitical risks, and licensing. However, Raytheon already

has established processes in place that address most of these issues, so the MRL assessment

dovetails quite nicely with Raytheon's overall supplier risk management philosophy.

The other issue that often arises from supplier evaluation is consistency. As Corum pointed out,

"the best practice for the question and response format was determined to be a direct question that has

pre-defined specific and objective responses. By phrasing questions in this manner and restricting the

responses to a pre-defined answer, the results of the evaluation would be consistent both across suppliers

as well as the evaluators"37. In the next chapter, details will be given on how the pre-determined answers

were created and how objectivity and consistency were achieved.

3.3 Applications of MRL

Although MRL became a requirement only recently, the concept has been around

since 2005, when it was adopted by the DoD. MRLs were developed by a joint DoD/industry

working group under the sponsorship of the Joint Defense Manufacturing Technology Panel (JDMTP)38.

The intent was to provide a common metric and vocabulary for assessing and discussing manufacturing

maturity, risk, and readiness.

The GAO has argued that the MRL is the best practice for DoD program acquisition outcomes39,

but at the same time the GAO has continuously noted inconsistent application across DoD components40.

In the report GAO-10-439, the argument for adopting MRLs as a best practice is clearly illustrated in

Figure 10. If MRL assessments can be performed consistently and accurately, the cost overrun that

happens after a production decision should be minimized.

37 Andrew Corum. Design and Development of a Supplier Evaluation Process, Page 31. May 8th, 2009

38 Wikipedia. Manufacturing Readiness Level. January 14th, 2012

39 Government Accountability Office. GAO-02-701 Capturing Design and Manufacturing Knowledge Early Improves Acquisition Outcomes. July 2002

40 Government Accountability Office. GAO-10-439 DoD Can Achieve Better Outcomes by Standardizing the Way Manufacturing Risks Are Managed. April 2010

Figure 10 Distribution of Average Procurement Unit-Cost Growth after a Production Decision for Major Defense Acquisition Programs (For All Major Defense Acquisition Programs Entering Production in Fiscal Year 2000 or Later)41

Programs with less than 5% growth

29%

Programs with 5% to 10% growth

16%

Programs with 11% to 15% growth

39%

16%

Programs with more than 15% growth

Even before the official release of the MRL Deskbook in 2010, several firms had started applying

the MRL frameworks/principles in their programs. GE Healthcare and Honeywell were two such

companies.

Although GE Healthcare did not explicitly use the MRL framework for the development of its

Gemstone Scintillator42, the product spent years in small-scale laboratory development until GE

was convinced that this technology was ready to enter into the production phase. In order for the

Gemstone Scintillator to work, a mineral composite used for optical component was needed. GE tested

thousands of alternatives to find what could meet the technical requirements and be produced in the

quantities needed. Once the material was tested and identified, GE began to determine its suppliers and

what equipment was needed. GE built a pilot plant to produce the material and the scintillator 2 years

before the scintillator entered the firm's gated process43.

41 Government Accountability Office. GAO-10-439 DoD Can Achieve Better Outcomes by Standardizing the Way Manufacturing Risks Are Managed, Page 11. April 2010

42 Government Accountability Office. GAO-10-439 DoD Can Achieve Better Outcomes by Standardizing the Way Manufacturing Risks Are Managed. April 2010

43 Government Accountability Office. GAO-10-439 DoD Can Achieve Better Outcomes by Standardizing the Way Manufacturing Risks Are Managed. April 2010

The MRL case for Honeywell is more conventional. In 2005, Honeywell determined that it

needed analytical tools and models to support evaluations of manufacturing and risk throughout the

product-development life cycle44. After a literature search, Honeywell engineers found the

DoD's MRLs and realized that they could provide the type of metric needed for a quantitative assessment.

Honeywell modified the MRL criteria to meet its needs. Honeywell's MRL Maturity Model

assessment tool comprises three main components: Manufacturing Complexity Model, Yield

Prediction Model, and Design for Manufacturing Scorecard Analysis. The output of the tool is an MRL

assessment score that can identify gaps and risks. Honeywell's MRL assessment model is illustrated

in the figure below:

Figure 11 Honeywell's MRL Assessment Model45

[Diagram: three producibility analysis tools and an MRL workshop feed the MRL Assessment Tool, whose output is an MRL score.]

* Manufacturing Complexity Model: identifies design attributes driving manufacturing complexity
* Yield Prediction Model: quantifies anticipated yield of proposed design concepts
* Design for Manufacturing Scorecard Analysis: quantifies the impact of design for manufacturing violations
* MRL Workshop: review manufacturing maturity artifacts against evaluation criteria to gain consensus on ratings for each category
* MRL Assessment Tool (MRL Maturity Model): built on inputs from three producibility analysis tools and MRL workshop to evaluate manufacturing maturity
* Output: MRL score

44 Government Accountability Office. GAO-10-439 DoD Can Achieve Better Outcomes by Standardizing the Way Manufacturing Risks Are Managed. April 2010

45 Government Accountability Office. GAO-10-439 DoD Can Achieve Better Outcomes by Standardizing the Way Manufacturing Risks Are Managed, Page 30. April 2010

3.4 Current/Published MRL Assessment Methods

As the project at Raytheon was underway, consideration was given to whether currently available

templates should be used instead of creating a brand new tool at Raytheon. One such tool was the MRL

Assist Tool Beta 1.3 from the BMP (best manufacturing practice) website46. The Beta 1.3 is a web-based

tool with input options such as those below:

Figure 12 MRL Assist Beta47

MRL Repository Questions
Select Questions: 3 / Pre-Concept Refinement
TECHNOLOGY & INDUSTRIAL BASE
1.1 Has the Program achieved an acceptable level of technology maturity?
1.1.1 Has the Program attained a minimum of TRL 3?
1.1.2 Were technology risks correctly identified in earlier S&T activity efforts?

Based on the MRL criteria (MRL 1-10) and threads (1 out of 9) desired, a list of questions will be

automatically generated. An example of questions and answers is illustrated below:

46 https://www.mrlassist.bmpcoe.org

47 Best Manufacturing Practices/Center of Excellence. MRL Assist Tool User Manual (Version 1.1). February 2007

Figure 13 Examples of MRL Assist Beta Questions/Answers48

[Screenshot of the question list. Callouts: "Click here to assign selected questions to a team member"; "Use this filter mechanism to arrange survey questions"; "Click here to select a question".]

As the suppliers fill out the questionnaire, program status and scores will be calculated and presented:

Figure 14 Sample Scorecard49

[Screenshot of the scorecard showing questions answered and a detailed breakdown.]

48 Best Manufacturing Practices/Center of Excellence. MRL Assist Tool User Manual (Version 1.1). February 2007

49 Best Manufacturing Practices/Center of Excellence. MRL Assist Tool User Manual (Version 1.1). February 2007

While tools like these seem like good candidates for Raytheon to adopt and use, several

factors drove Raytheon toward developing its own version of the MRL assessment. First, the

questionnaire format is simply too long and cumbersome. Looking at the example from Figure 14 above,

for an MRL5 assessment, there are almost 270 questions to be answered in a YES/NO fashion. For each

question answered, a justification must be entered as below:

Figure 15 Example of Answers for MRL Assist Beta50

[Screenshot of the answer-entry screen. Legible columns: Type, Number, Question, Assignee, Answer. Sample row: question 1.1, "Has the Program achieved an acceptable level of technology maturity?", with a justification beginning "This technology is commonly found in production grade ...". Callouts: "Click here to answer the question or add a comment"; "You must be assigned to a question before you can answer it."]

Here is another example of the type of questions being asked in the process thread.

Figure 16 Samples of Process-Related Questions51

Executive-Level Question:
5.2 Does the Program have a Variation and Variability Reduction Plan?

Program Manager-Level Questions:
5.2.1 Have all critical manufacturing processes been characterized in a factory environment?
5.2.2 Have key characteristics and process capability indexes been documented?
5.2.3 Have initial Sigma levels and variation/variability efforts been documented?
5.2.4 Have yield improvements been initiated as necessary?
5.2.5 Will yield data be gathered on the pilot line build?

IPT Lead-Level Questions:
5.2.1.1 Have initial production line simulation models been developed?
5.2.1.2 Will simulation models be used to determine bottlenecks and improve processes?
5.2.1.3 Have analyses of assembly methods been performed in a relevant manufacturing environment?
5.2.1.4 Will all assembly methods be developed, documented and verified on the pilot line?
5.2.1.5 Have process requirements been proven and validated in a relevant manufacturing environment?
5.2.1.6 Have required Manufacturing Technology initiatives been developed?
5.2.1.7 Has the plan been completed to implement tooling?
5.2.1.8 Will the pilot line be developed and proven out using hard tooling?
5.2.1.9 Has the automated STE implementation plan been completed?
5.2.1.10 Will the pilot line be developed and proven out with STE?

50 Best Manufacturing Practices/Center of Excellence. MRL Assist Tool User Manual (Version 1.1). February 2007

51 Best Manufacturing Practices/Center of Excellence. MRL Assist Tool User Manual (Version 1.1). February 2007

Although this format works from a robustness and contractual standpoint, Raytheon did not believe it was

the best way to approach suppliers, because an audit like this would most likely require suppliers to devote

a significant number of man-hours to comply, thus disrupting their daily business operations.

The second reason was the scoring algorithm. From Figure 14, it can be seen that scoring is

accomplished by simply counting the number of YES and NO answers, with a bar graph showing the percentage

complete. With scoring like this, each question is weighted equally, although it should not be. A good

supplier should not be penalized for answering NO to a question that is not relevant. Conversely, a bad

supplier might score well by answering YES to many irrelevant questions.
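The weighting concern can be made concrete with a minimal Python sketch that contrasts an equal-weight count of YES answers with a relevance-weighted score. The `Answer` structure, the weights, and the sample answers are hypothetical illustrations; they are not taken from the MRL Assist tool or from Raytheon's tool.

```python
from dataclasses import dataclass


@dataclass
class Answer:
    question_id: str
    yes: bool
    weight: float  # hypothetical relevance weight; 0.0 means "not relevant"


def equal_weight_score(answers):
    """Percentage of YES answers, every question counted equally."""
    return 100.0 * sum(a.yes for a in answers) / len(answers)


def weighted_score(answers):
    """Percentage of YES answers, each question weighted by its relevance."""
    total = sum(a.weight for a in answers)
    if total == 0:
        raise ValueError("no relevant questions to score")
    return 100.0 * sum(a.weight for a in answers if a.yes) / total


# Illustrative data: Q1 and Q2 are relevant to the program, Q3 and Q4 are not.
good_supplier = [
    Answer("Q1", True, 1.0), Answer("Q2", True, 1.0),
    Answer("Q3", False, 0.0), Answer("Q4", False, 0.0),
]
weak_supplier = [
    Answer("Q1", False, 1.0), Answer("Q2", False, 1.0),
    Answer("Q3", True, 0.0), Answer("Q4", True, 0.0),
]

print(equal_weight_score(good_supplier), equal_weight_score(weak_supplier))
print(weighted_score(good_supplier), weighted_score(weak_supplier))
```

Under equal weighting the two suppliers are indistinguishable, while the relevance-weighted score separates them. How relevance weights would be assigned in practice is left open here.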

Although Raytheon went ahead and developed its own version of the MRL tool, tools like the

MRL Assist Beta provided the team with good insights and great foundations to build upon.

4 ASSESSMENT TOOL DEVELOPMENT

In this chapter, the development of the Raytheon MRL tool will be discussed. While the

methodology and the approach taken will be described in detail, it is of the utmost importance to protect

proprietary information of Raytheon. Therefore much of the data presented will be altered and screenshots

will be masked as appropriate.

One of the biggest challenges throughout the project was to find the optimal point between

assessment robustness and speed. There are schools of thought that insist on leaving no stone unturned,

but as a result supplier cooperation would be low. Even if the suppliers were forced to comply with

Raytheon's request as a contractual agreement, the quality of the assessment at the end would still be

affected and Raytheon's philosophy of working with the suppliers and sharing risks mutually would not

be realized. Also it was important for Raytheon to show the suppliers that the assessment is really an

attempt to help the suppliers to grow and to further develop their own internal processes to better meet the

challenges of tomorrow.

4.1 Forming a Comprehensive Data Set

The MRL Deskbook gave the team a great starting point on the kind of questions that need to be

answered at each level of manufacturing maturity. However, Raytheon believed that a thorough

assessment should go beyond what the Deskbook describes in the MRL Maturation Matrix52.

With this goal in mind, several additional documents were pulled in along with the original MRL

Deskbook. Since the MRL assessments have been around since 2005, Raytheon has had to perform

assessments on not only internal processes but also certain suppliers to meet program requirements from

the Air Force and the Navy. Digging deeper into the questions from the additional documents revealed

that the additional questions are very similar not only in the way they were asked but also in the

way the questions progressed as the levels increased.

52 See Appendix

Compiled by the author and another employee from the Supplier Engineering team, a total of

2,500+ questions were absorbed into Raytheon's MRL tool across all 10 MRL levels and, within each

level, the 9 main threads. With the assistance of a senior engineering director, this list was reduced to

1,300+ questions. Great care was taken to identify duplication across all the questions: if two or more

questions were worded similarly, the question originating from the DoD Deskbook was chosen over the

other questions. The chart below illustrates how the questions are distributed across the different levels.

Figure 17 Summary of MRL Questions

Level     DoD   Non-DoD   Total
MRL 1      10         0      10
MRL 2      23         0      23
MRL 3      42         0      42
MRL 4      55        97     152
MRL 5      68       104     172
MRL 6      70       129     199
MRL 7      71       121     192
MRL 8      76       253     329
MRL 9      67       137     204
MRL 10     53         2      55
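The counts in Figure 17 can be cross-checked with a few lines of Python. The sketch below only re-adds the numbers reported in the figure; the variable names are illustrative.

```python
# Question counts per MRL level (MRL 1 through MRL 10), as reported in Figure 17.
dod = [10, 23, 42, 55, 68, 70, 71, 76, 67, 53]
non_dod = [0, 0, 0, 97, 104, 129, 121, 253, 137, 2]
total = [10, 23, 42, 152, 172, 199, 192, 329, 204, 55]

# Each level's total should equal its DoD plus non-DoD count.
for level, (d, n, t) in enumerate(zip(dod, non_dod, total), start=1):
    assert d + n == t, f"MRL {level} does not add up"

grand_total = sum(total)
print(sum(dod), sum(non_dod), grand_total)  # 535 843 1378
```

The grand total of 1,378 is consistent with the "1,300+" figure quoted in the text.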

This comprehensive set of 1,300+ questions serves as the foundation of Raytheon's MRL

assessment tool. A question arose at this point as to whether this was the tool to release to the suppliers to

start the assessment programs. However, releasing this tool would not have been any different from using some

of the outside tools that were evaluated, with the exception that some of the questions originated

from Raytheon.
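The duplicate-handling rule used when trimming the question bank (keep the Deskbook wording when two or more questions are worded similarly) can be sketched as follows. This is only an illustration under stated assumptions: the thesis does not say how similarity was judged, so the use of `difflib.SequenceMatcher`, the 0.85 threshold, the `"DoD"`/`"Other"` source labels, and the sample questions are all hypothetical.

```python
from difflib import SequenceMatcher


def similar(a, b, threshold=0.85):
    """Rough wording similarity; the threshold is an assumed value."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio() >= threshold


def deduplicate(questions, threshold=0.85):
    """questions: list of (text, source) tuples.

    Keeps one question per group of similarly worded questions,
    preferring those whose source is "DoD".
    """
    kept = []
    # Visit DoD questions first so they win over similar non-DoD wording
    # (sorted() is stable, so the original order is otherwise preserved).
    for text, source in sorted(questions, key=lambda q: q[1] != "DoD"):
        if not any(similar(text, k[0], threshold) for k in kept):
            kept.append((text, source))
    return kept


bank = [
    ("Have key characteristics been documented?", "Other"),
    ("Have key characteristics been documented ?", "DoD"),
    ("Will yield data be gathered on the pilot line build?", "Other"),
]
print(deduplicate(bank))
```

In this sample the non-DoD variant of the first question is dropped in favor of the DoD wording, and the unrelated third question is kept.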

4.2 Artifact-Based Approach

One insight gained as the number of questions was being reduced was that there were primarily

two types of questions. The first type appeared at only one level, and the second type

appeared throughout a range of levels (for example, from level 4 to 6) but was asked differently at each level.

Below are some examples:

"Have producibility and manufacturing assessments of key technologies and components been

initiated as appropriate?"

This question above is asked in thread B (Design) in MRL5. There are no similar questions asked again in

the question bank.

"Have production lines to be modeled been identified?"

"Have production lines been modeled for pilot?"

These two questions, one of which appeared in MRL5 and the other in MRL6, are treated as

type 2. A further insight was that having certain artifacts (processes or documents) in place can

adequately give a question the answer of YES. For the first example question (on producibility and manufacturing assessments), an artifact that defines and reviews

a supplier's operational capability can answer the question. Therefore, if a supplier is being evaluated for

MRL5 and this operational capability artifact is deemed to be mature for the level required, then the

question can receive a rating of YES. Since this question only appeared on MRL5, if an assessment were

targeted for MRL6, then this artifact would not be required nor would the question be asked.

For the two questions on production line modeling, a common artifact such as value stream

mapping can adequately answer the questions. Since these questions appeared on MRL5 and MRL6, an

artifact similar to value stream mapping will be required for review for either a MRL5 or MRL6

assessment.
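The relationship between questions, levels, and artifacts described above can be sketched as a small lookup. The data structure and the artifact names are hypothetical, and the assignment of the two production-line questions to MRL5 and MRL6 respectively is an assumption (the text states only that one appeared at each level).

```python
# Hypothetical excerpt of a question bank: each question is tied to the MRL
# level at which it is asked and to an artifact that can answer it.
QUESTION_BANK = [
    {"text": "Have producibility and manufacturing assessments of key "
             "technologies and components been initiated as appropriate?",
     "level": 5, "artifact": "Operational capability review"},
    {"text": "Have production lines to be modeled been identified?",
     "level": 5, "artifact": "Value stream mapping"},  # level is an assumption
    {"text": "Have production lines been modeled for pilot?",
     "level": 6, "artifact": "Value stream mapping"},  # level is an assumption
]


def artifacts_for_level(bank, target_level):
    """Return the distinct artifacts needed to answer the questions asked at target_level."""
    return sorted({q["artifact"] for q in bank if q["level"] == target_level})


print(artifacts_for_level(QUESTION_BANK, 5))
print(artifacts_for_level(QUESTION_BANK, 6))
```

An MRL5 assessment would request both artifacts, while an MRL6 assessment would request only the value stream mapping artifact, matching the two cases discussed in the text.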


Furthermore, some common artifacts can be used to answer multiple questions across multiple

threads and levels. This observation essentially formed the basis for Raytheon's MRL tool. Instead of

asking questions that require YES/NO answers, the suppliers will be asked to present various artifacts to

demonstrate proven capabilities across various business, engineering, and operation areas. Here are some

additional processes/artifacts generally accepted across different industries: EVMS (cost), BOM Analysis

(material), Capacity Plan (material), Engineering-Change-Notices Processes (process), Poka-Yoke

(process), Non-Conforming Material Policy (quality), and Master Schedule (manufacturing management).

For each artifact reviewed, a numerical score (1-10) will be given. The numbers represent how

mature the artifact is according to the MRL Matrix53. The supplier will first self-evaluate, but the scores

will be verified by the Raytheon audit team on site. This scale provides an objective assessment and a common

language to be used across all users (Raytheon and suppliers). The maturation of these artifacts will

essentially form the basis for a risk mitigation plan that shows the path for a supplier to go from the

current state to the desired state. It is through the collaborative effort between Raytheon and the suppliers that

the risks and responsibilities will be shared.
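As an illustration of how artifact scores could feed a risk mitigation plan, the sketch below lists the artifacts whose verified score falls short of a target MRL, largest shortfall first. The function, the sample scores, and the choice to rank by shortfall are assumptions made for illustration; the thesis does not specify how gaps are prioritized.

```python
def maturity_gaps(scores, target_level):
    """Return {artifact: shortfall} for artifacts scored below target_level.

    scores maps artifact name to its verified maturity score (1-10).
    The result is ordered from largest shortfall to smallest.
    """
    for name, score in scores.items():
        if not 1 <= score <= 10:
            raise ValueError(f"score for {name!r} must be between 1 and 10")
    gaps = {name: target_level - score
            for name, score in scores.items() if score < target_level}
    return dict(sorted(gaps.items(), key=lambda item: item[1], reverse=True))


# Hypothetical verified scores for four of the artifacts named in the text.
verified_scores = {
    "EVMS": 6,
    "BOM Analysis": 4,
    "Capacity Plan": 5,
    "Master Schedule": 3,
}
print(maturity_gaps(verified_scores, target_level=5))
# {'Master Schedule': 2, 'BOM Analysis': 1}
```

Artifacts at or above the target level drop out of the list, leaving only the items that a joint Raytheon and supplier plan would need to address.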

With over 1,300 questions, a focus group approach was taken. There are diverse talents and

experiences across the members of the Supplier Engineering team. Eight members were selected and

paired up into four teams based on their expertise and knowledge. Each team was responsible for two

threads (one team was responsible for three threads) and for each question the team will propose an

artifact that will best answer a particular question.

At the end of this effort, a list of 250+ artifacts was created that would address all the questions

that were absorbed into the MRL question bank. It was also decided the tool be Excel-based for ease of

transmitting between Raytheon and the suppliers as well as across multiple divisions within Raytheon.

53 See Appendix

4.3 Supplier Engagement

One issue with the artifact list was that the artifacts were named using terms familiar to

Raytheon. As the team released the tool into the field, how would the suppliers react to the terminology

used? It was decided to release the tool to a supplier who would supply a critical component (one that had been

identified as a CTE) to one of Raytheon IDS's radar programs.

There were two main pieces of feedback Raytheon was particularly interested in. First, would suppliers be

familiar with the artifacts Raytheon had identified? If the supplier had an artifact similar in nature

to the one required, could the supplier submit that artifact instead? Also, were the artifacts

commonly used by the suppliers not only for program-specific purposes but also for normal company

business operations?

Second, Raytheon was interested in how the supplier viewed the time required to evaluate

itself and fill out the form. A team from Raytheon was dispatched to the supplier and worked with the

supplier management staff to run through the entire list of artifacts and the progression of the maturity

expected throughout the entire product lifecycle. At the end of two days, the first version of the MRL

assessment tool was fully filled out.

Another invaluable insight gained was how Raytheon should work with the suppliers in order to

minimize the time required to do this assessment while achieving the maximum coverage required by the

assessment. The flowchart below shows an ideal MRL assessment effort between Raytheon

and its suppliers.


Figure 18 MRL Assessment Flowchart

[Flowchart. Legible labels: sell the value proposition; prepare assessment tool; 2 weeks prior to event; week prior to event; line tour; joint assessment and artifact review; MRL baseline; supplier ready? (yes/no); RTN/supplier investments required to close gaps; manage and monitor gap closure efforts to completion.]

4.4 Method of Data Gathering

This section explains how data is gathered from suppliers. As mentioned in

the previous section, each artifact is scored on a scale of 1 to 10. However, there is additional

information that the suppliers must fill out and that Raytheon must also verify.

For each artifact, a numerical score must be given on two levels: agreed product level and business

process. This is essential because Raytheon wants visibility into the supplier's current state and its

potential for reaching the desired state. For example, on a given program, if the value stream mapping

artifact is graded at MRL6 when the desired level is MRL8, then there is a gap. The supplier is more

likely to close this gap if value stream mapping is a business process that the supplier is already

engaged in. In this case, the supplier will receive a score of 10, for example, for the company-level business

process rating. If the supplier does not currently employ value stream mapping or its current value stream

mapping process leaves a lot to be desired, then the supplier will receive a score of 8 or below for the

company-level process rating. This tells Raytheon that the supplier is less

likely to mature this artifact to the desired state than it would be if it

already engaged in value stream mapping. The altered screenshot below demonstrates how the data

would be entered:


Figure 19 Scoring for Product- & Business-Level Assessments

[Screenshot: each Supporting Artifact row has an Agreed Product Level score and a Business Process score, each selected from a drop-down list of N/A and numerical values.]

The predetermined drop-down selection also prevents users from entering values that are not numerical,

thus standardizing inputs and eliminating errors.
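The input restriction and the gap described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names `validate_score` and `maturity_gap` are hypothetical, `None` stands in for the N/A option, and nothing here reflects how the Excel tool itself is implemented.

```python
from typing import Optional

ALLOWED_SCORES = range(1, 11)  # 1..10, the scale described in the text


def validate_score(value: Optional[int]) -> Optional[int]:
    """Return the value if it is None (standing in for N/A) or an int in 1-10."""
    if value is None:
        return None
    # bool is a subclass of int, so reject it explicitly.
    if isinstance(value, bool) or not isinstance(value, int) or value not in ALLOWED_SCORES:
        raise ValueError(f"score must be N/A or an integer from 1 to 10, got {value!r}")
    return value


def maturity_gap(current_level: int, desired_level: int) -> int:
    """Levels still to mature before the artifact reaches the desired state."""
    for level in (current_level, desired_level):
        if validate_score(level) is None:
            raise ValueError("a gap needs numerical current and desired levels")
    return max(0, desired_level - current_level)


print(maturity_gap(6, 8))  # the MRL6 versus MRL8 example from the text
```

Rejecting anything outside the fixed list mirrors what the drop-down does in the spreadsheet: the only values that can reach later calculations are the ones both parties agreed on.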

There are two additional data points collected for each artifact. If an artifact was scored below the

desired state, then it is necessary for the supplier to provide a plan, i.e., a closure path, to get to the desired

state. The Raytheon audit team will give a qualitative rating of this closure path, as

illustrated below:

Figure 20 Inputs on Quality of Closure Path

[Screenshot: columns Supporting Artifact, Closure Path Exist?, and Closure Comments; the Closure Path Exist? drop-down offers Known Plan, Unknown Plan, and Known Plan w/Reservations.]

The three possible answers are known plan, unknown plan, and known plan w/reservations. If the supplier

has a clear path (known plan) to the desired state for the specific artifact, then the supplier is more likely

to mature the artifact in time than a supplier that has no plan, in which case the choice

would be unknown plan. The cell to the right provides space for the supplier or the Raytheon team to add

comments that are company or program specific.
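One way to picture the data collected per artifact is as a small record. The sketch below is an assumption-laden illustration, not the tool's actual layout: the class and field names (`ArtifactEntry`, `ClosurePath`, `needs_closure_path`, `is_complete`) are hypothetical, and only the three closure-path answers and the rule that a plan is needed when the score is below the desired state come from the text.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class ClosurePath(Enum):
    """The three answers offered for the closure path."""
    KNOWN_PLAN = "Known Plan"
    KNOWN_PLAN_WITH_RESERVATIONS = "Known Plan w/Reservations"
    UNKNOWN_PLAN = "Unknown Plan"


@dataclass
class ArtifactEntry:
    name: str
    product_score: int    # agreed product-level score (1-10)
    business_score: int   # company-level business process score (1-10)
    desired_level: int    # desired state for this artifact
    closure_path: Optional[ClosurePath] = None
    comments: str = ""    # company- or program-specific remarks

    def needs_closure_path(self) -> bool:
        """A plan is required only when the artifact is below the desired state."""
        return self.product_score < self.desired_level

    def is_complete(self) -> bool:
        """The entry is complete once any required closure path has been rated."""
        return not self.needs_closure_path() or self.closure_path is not None


entry = ArtifactEntry("Value Stream Mapping", product_score=6,
                      business_score=10, desired_level=8)
print(entry.needs_closure_path(), entry.is_complete())
```

Keeping the closure path as an enumeration rather than free text serves the same purpose as the drop-down list: the qualitative rating stays comparable across suppliers, while the comments field carries the specifics.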

4.5 Tool Enhancement

Even with buy-in from suppliers secured (another supplier was introduced to the tool and similar

reactions and feedback were received), Raytheon wanted to enhance the tool to further increase

efficiencies and minimize errors. The first thing that needed to be accomplished was the weighting of the

artifacts. In the MRL tool that was evaluated, each question received equal weighting. The problem with

this approach was that a good supplier might be unnecessarily penalized for missing some of the less

relevant artifacts while a bad supplier might fly under the radar with many irrelevant artifacts meeting the

criteria.

Using a qualitative approach commonly used in project management,54 the goal was to give a

weighting to each artifact. Again a focus group, enlisting the help of 20+ Raytheon employees, was

formed to perform this task. The artifacts were distributed among the focus group and each member

was asked to rank the artifacts in three different aspects: cost, schedule, and performance. The rankings

are low/L/1, medium/M/3, and high/H/9 for the severity of the impact to the program if the artifact was not able

to reach MRLX by the time it was due. The guideline below was given to Raytheon employees to assist

them in ranking the artifacts. The criteria have been altered for illustration purposes only:

Figure 21 Guideline for Ranking

[Table: impact rating (e.g., 9) with guideline criteria for cost, schedule, and performance.]

54 Amro Elkkad, PMP. Risk-Tolerance Based Schedule and Cost Estimates. 2008.


The 1/3/9 scale was chosen over the 1-10 scale for several reasons. First, it was simply easier to use

and to communicate to the team. Additionally, the non-linearity of the 1/3/9 scale was better at

emphasizing the severe risks if an artifact received a rating of 9. This same approach was taken for

assigning probabilities which will be discussed in Chapter 5.

Employees themselves were given weights. For example, the engineering director was given a

weight of 3 to rank the artifacts in the threads of design and technology while an engineer within the

Supplier Engineering group might only receive a weight of 2 for his/her ranking. In addition, each artifact

was reviewed and ranked by at least two different employees and the average of their rankings was taken.
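The combination of the 1/3/9 scale with rater weights can be illustrated with a short calculation. The text states only that an average of the rankings was taken; treating it as a weighted average by rater weight is an assumption made here for illustration, and the names `RANK_VALUES` and `weighted_rank` are hypothetical.

```python
RANK_VALUES = {"L": 1, "M": 3, "H": 9}  # low / medium / high, per the 1/3/9 scale


def weighted_rank(ratings):
    """Combine rankings for one artifact in one aspect (cost, schedule, or performance).

    ratings: list of (rater_weight, "L" | "M" | "H") pairs.
    Assumption: the average is weighted by the weight given to each rater.
    """
    if len(ratings) < 2:
        raise ValueError("each artifact should be ranked by at least two employees")
    total_weight = sum(weight for weight, _ in ratings)
    return sum(weight * RANK_VALUES[rank] for weight, rank in ratings) / total_weight


# A director (weight 3) rates an artifact high; an engineer (weight 2) rates it medium.
print(weighted_rank([(3, "H"), (2, "M")]))
```

For this input the result works out to (3 × 9 + 2 × 3) / 5 = 6.6. The non-linearity is visible even with equal weights: one low and one high ranking average to 5, well above medium (3), whereas a linear 1/2/3 scale would average them to exactly medium.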

The next step was to categorize the artifacts. Based on the needs of the program and the nature of

the assessment, perhaps not every single artifact needs to be assessed. Several categories were formed and

they are listed below:

• Build-to-Print vs. Build-to-Specification

• Prime vs. Sub-contractor56

• Contractual vs. Non-contractual Assessments57

Additional filters were added. One such filter is previous quality audits performed at the suppliers.

Raytheon performs regular quality audits at many of its suppliers. An analysis was done to find the

common questions between the MRL tool and the quality assessment. Several MRL artifacts were

identified that could be adequately answered if certain quality metrics were met in a separate quality audit.

Even though the goal was to reduce the number of artifacts, certain special processes (such as plating,

brazing, and painting) will often need to be evaluated as part of the contractual requirements.

Therefore, each special process will need to be added into the MRL assessment as required.
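The categorization and filtering described above can be read as a selection step that runs before an assessment. The sketch below is a rough illustration under several assumptions: the `Artifact` fields, the tag strings, the placeholder artifact names, and the `select_artifacts` function are all hypothetical, and the actual rules Raytheon applied are not given in the text.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List


@dataclass(frozen=True)
class Artifact:
    name: str
    # Hypothetical tags: the artifact applies only when the program has all of them.
    tags: FrozenSet[str] = frozenset()
    # True if a separate quality audit can adequately answer this artifact.
    covered_by_quality_audit: bool = False


def select_artifacts(artifacts: Iterable[Artifact],
                     program_tags: FrozenSet[str],
                     quality_audit_met: bool,
                     special_processes: Iterable[str] = ()) -> List[str]:
    """Return the names of the items to assess for one supplier and program."""
    selected = []
    for artifact in artifacts:
        if not artifact.tags <= program_tags:
            continue  # category does not apply to this program
        if quality_audit_met and artifact.covered_by_quality_audit:
            continue  # already answered by the quality audit
        selected.append(artifact.name)
    # Special processes are added back as required, regardless of the filters.
    selected.extend(special_processes)
    return selected


catalog = [
    Artifact("Artifact A", tags=frozenset({"build-to-specification"})),
    Artifact("Artifact B"),
    Artifact("Artifact C", covered_by_quality_audit=True),
]
print(select_artifacts(catalog, frozenset({"build-to-print", "prime"}),
                       quality_audit_met=True, special_processes=["Plating"]))
```

In this example only Artifact B survives the filters, and the plating special process is appended because it is evaluated as a contractual requirement rather than through the category filters.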