Optimal block size for variance estimation by a spatial

advertisement

Optimal block size for variance estimation by a spatial

block bootstrap method

running title: bootstrap block size

Daniel J. Nordman, Soumendra N. Lahiri

Brooke L. Fridley

Iowa State University, Ames, USA

Mayo Clinic, USA

Abstract

This paper considers the block selection problem for a block bootstrap variance estimator

applied to spatial data on a regular grid. We develop precise formulae for the optimal block

sizes that minimize the mean squared error of the bootstrap variance estimator. We then

describe practical methods for estimating these spatial block sizes and prove the consistency

of a block selection method by Hall, Horowitz and Jing (1995), originally introduced for

time series. The spatial block bootstrap method is illustrated through data examples and

its performance is investigated through several simulation studies.

AMS(2000) Subject Classification: Primary 62G09; Secondary 62M30

Key Words: block bootstrap, empirical block choice, stationary random fields.

1

Introduction

In recent years, different versions of block bootstrap methods have been proposed for spatial

data. As in the time series case, the accuracy of a block bootstrap estimator of a population

parameter critically depends on the block size employed. Although this is a very important

problem, there seems to be little information available in the literature about the (theoretical)

optimal block sizes for estimating a given parameter with a spatial block bootstrap. In this

paper, we investigate the problem of determining optimal block sizes for variance estimation

by a spatial block bootstrap method.

For times series data, expressions for theoretical optimal block sizes with different block

bootstrap methods are known (Künsch, 1989, Hall et al., 1995, Lahiri, 1999). In the time

series case, the optimal block sizes depend on the blocking mechanism (i.e., overlapping/nonoverlapping blocks) and the covariance structure of the process. In comparison, the optimal

1

block sizes in the spatial case are determined by the blocking mechanism, the covariance

structure of the spatial process, and the dimension of the spatial sampling region. For

a large class of “smooth function model” spatial statistics, the main results of this paper

give expansions for the mean squared error (MSE) of block bootstrap variance estimators

based on overlapping and non-overlapping versions of the spatial block bootstrap method.

Using these MSE expansions, we give explicit expressions for the optimal bootstrap block

sizes. It turns out that, due to the mechanics of the bootstrap, the optimal blocks in

the spatial case are a natural extension of the time series case under identical conditions

on the smooth function model. This result is surprising compared to spatial subsampling

variance estimators, studied by Politis and Romano (1994a), Sherman and Carlstein (1994)

and Sherman (1996). With subsampling, the formulae for optimal blocks may differ largely

between the spatial/temporal settings and also require much smoother functions for time

series data than for spatial data (Nordman and Lahiri, 2004).

We then develop data-based methods for estimating the optimal block size and study

their performance through simulation studies. In particular, we contribute the first proof of

consistency (to our knowledge) for a general block estimation technique of Hall et al. (1995),

which has been applied by Hall and Jing (1996), Politis and Sherman (2001) and Nordman

and Lahiri (2004) among others; the result applies to both time series and spatial block

bootstraps. We also illustrate the proposed methodology with two data examples, which

investigate spatial patterns in longleaf pine trees (Cressie, 1993) and a cancer mortality map

of the United States (Sherman and Carlstein, 1994).

The rest of the paper is organized as follows. We conclude this section with a brief

literature review. In Section 2, we describe the spatial sampling framework, the variance

estimation problem, and the spatial block bootstrap methods for variance estimation. In

Section 3, we state the assumptions and the main results on optimal block sizes. Empirical

methods for selecting the optimal block sizes are discussed in Section 4 while Section 5

summarizes a simulation study of the bootstrap estimators with empirical block choices.

Section 6 describes data examples of the bootstrap method. Proofs of all technical results

are given in the Appendix.

Block bootstrap methods for time series data and spatial data have been put forward

by Hall (1985), Künsch (1989), Liu and Singh (1992), Politis and Romano (1993, 1994b),

Politis et al. (1999), Zhu and Lahiri (2007) among others. In contrast to the spatial setting, considerable research has focused on optimal block sizes for time series block bootstrap

methods including work by Künsch (1989), Hall et al. (1995), Lahiri (1996), Bühlmann

2

and Künsch (1999), Lahiri et al. (2006), and Politis and White (2004). Nordman and

Lahiri (2004) derive expressions for optimal block sizes for a class of spatial subsampling

methods. See Lahiri (2003a, Chapter 12) for more on bootstrap methods for spatial data.

2

Block bootstrap variance estimators

2.1

Variance estimation problem with spatial data

We assume that the available spatial data are collected from a spatial sampling region Rn ⊂

Rd as follows. For a fixed vector t ∈ [−1/2, 1/2)d , identify the t-translated integer lattice

as Zd ≡ t + Zd . Suppose that a p-dimensional, stationary weakly dependent spatial process

{Z(s) : s ∈ Zd } is observed at those locations Sn ≡ {s1 , . . . , sNn } of the lattice grid Zd that

lie inside Rn , i.e., the data are Zn = {Z(s) : s ∈ Sn } for Sn = Rn ∩ Zd . For simplicity, we

let N ≡ Nn denote the spatial sample size or the number of sites in Rn .

To allow a wide variety of sampling region shapes, we suppose that Rn is obtained by

inflating a prototype set R0 by a constant λn :

Rn = λn R0 ,

(1)

where R0 ⊂ (−1/2, 1/2]d is a Borel subset containing an open neighborhood of the origin and {λn }n≥1 is a positive sequence of scaling factors such that λn ↑ ∞ as n → ∞.

Because R0 contains the origin, the shape of the sampling region Rn is preserved for different values of n as the region grows in an “increasing domain asymptotic” framework as

termed by Cressie (1993). Sherman and Carlstein (1994), Sherman (1996) and Nordman and

Lahiri (2004) use a comparable sampling framework. Observations indexed by Zd ≡ t + Zd ,

rather than the integers Zd , entail that the “center” of Rn at the origin need not be a

sampling site.

We study the spatial block bootstrap method of variance estimation for a large class

of statistics that are functions of sample means. Let H : Rp → R be a smooth function

P

and Z̄N = N −1 N

i=1 Z(si ) denote the sample mean of the N sites within Rn . Suppose

that a relevant statistic θ̂n can be represented as a function of the sample mean θ̂n =

H(Z̄N ) and estimates the population parameter θ = H(µ) involving the mean EZ(t) = µ ∈

Rp of the random field. Hall (1992) refers to this parameter/estimator framework as the

“smooth function” model which permits a wide range of spatial estimators with suitable

transformations of the Z(s)’s, including means, differences and ratios of sample moments

(e.g., spatial variograms), and other spatial test statistics.

3

√

Using a spatial block bootstrap, we wish to estimate the variance of the scaled statistic

N θ̂n , say σn2 = N Var(θ̂n ). We next describe two bootstrap variance estimators of σn2 .

2.2

Block bootstrap methods

The spatial bootstrap aims to generate bootstrap renditions of spatial data through the same

recipe used in the moving block bootstrap (MBB) for time series (Künsch, 1989, Liu and

Singh, 1992). Recall that the MBB produces a bootstrap reconstruction of a length n time

series through a sequence of block resampling steps as follows.

1. Partition the time series into k = bn/bc consecutive observational blocks of length b.

2. Develop some collection of length b observational blocks from the original time series.

Two possibilities for block collections are either the (non-overlapping) blocks in Step 1

or the size n − b + 1 set of all possible (potentially overlapping) blocks of length b.

3. For each data block in Step 1, create a bootstrap rendition of this block by independently resampling a length b block of observations from the collection in Step 2.

4. Paste the k resampled blocks together to form a bootstrap time series of length kb.

To re-create spatial data, the spatial block bootstrap involves the same essential steps as the

MBB, namely dividing the sampling region Rn into spatial data blocks, creating a bootstrap

version of each data block through block resampling, and concatenating these resampled

blocks together into a bootstrap spatial sample. Details are described next, where the time

series bootstrap is modified to accommodate the spatial data structure.

Let {bn }n≥1 be a sequence of positive integers to define the d-dimensional spatial blocks

as Bn (i) ≡ i + bn U, i ∈ Zd , using the unit cube U = (0, 1]d . We suppose b−1

n + bn /λn → 0 as

n → ∞ to keep the blocks Bn (·) small relative to the size of the sampling region Rn in (1).

To implement the block bootstrap, the sampling region Rn is first divided into disjoint

cubes or blocks of spatial observations. To this end, let Kn = {k ∈ Zd : Bn (bn k) ⊂ Rn }

represent the index set of all complete cubes Bn (bn k) = bn (k+U) lying inside Rn ; Figure 1(a)

illustrates a partition of Rn by cubes. For any A ⊂ Rd , let Zn (A) = {Z(s) : s ∈ A ∩ Sn }

denote the set of all observations corresponding to the sampling sites lying in the set A. We

define a bootstrap version of Zn (Rn ) by putting together bootstrap replicates of the process

Z(·) on each block in the partition of Rn , given by

Rn (k) ≡ Rn ∩ Bn (bn k),

4

k ∈ Kn .

(2)

That is, we consider one block subregion Rn (k), k ∈ Kn , at a time and create a bootstrap

rendition of the data Zn (Rn (k)) in that block by resampling from a suitable collection of

blocks in Rn ; piecing resampled blocks together builds a bootstrap version of the process

Z(·) on the entire region Rn . Similar to the MBB, we formulate two block bootstrap variance

estimators based on two different sources of blocks for resampling: rectangular subregions

Bn (·) within Rn that are overlapping (OL) or non-overlapping (NOL). We describe the OL

version in detail; the NOL version is similar.

2.2.1

Block bootstrap variance estimator

Define an integer index set In = {i ∈ Zd : Bn (i) ⊂ Rn } for those integer-translated blocks

bn U lying completely inside Rn ; see Figure 1(b). For each k ∈ Kn , we resample one block

at random from the collection of OL blocks {Bn (i) : i ∈ In }, independently from other

resampled blocks, to define a version of the process {Z(s) : s ∈ Rn (k) ∩ Zd } observed on

Rn (k). To make this precise, let {ik,OL : k ∈ Kn }, be a collection of iid random variables

with common distribution

P (ik,OL = j) =

1

,

|In |

j ∈ In ,

(3)

using |A| to denote the number of elements in a finite set A ⊂ Rd . For each k ∈ Kn , the OL

∗

block bootstrap version of data Zn (Rn (k)) is defined by Zn,

OL (Rn (k)) = Zn (Bn (ik,OL )). We

concatenate the resampled block observations for each k ∈ Kn into a single spatial bootstrap

∗

sample {Zn,

OL (Rn (k)) : k ∈ Kn } defined at sampling sites Sn ∩ {Rn (k) : k ∈ Kn }, which

has N1 ≡ N1n = |Kn | · bdn observations; see Figure 1(d). Here bdn = |bn U ∩ Zd | represents the

∗

number of sampling sites in a block Bn (i), i ∈ In or a subregion Rn (k), k ∈ Kn . Let Z̄n,

OL

be the average of the N1 resampled values. We define the OL block bootstrap version of θ̂n

∗

∗

2

as θ̂n,

OL = H(Z̄n,OL ) and give the corresponding variance estimator of σn as

2

∗

σ̂n,

OL (bn ) = N1 Var∗ (θ̂n,OL ),

where Var∗ denotes the conditional variance given the data.

Hence, this spatial block resampling scheme is an extension of the OL MBB for time series.

In applying the MBB to a size n time series, the bootstrap time stretch may have marginally

shorter length n1 = kb because the MBB resamples complete data blocks and ignores some

∗

boundary time values in the reconstruction. Analogously with {Zn,

OL (Rn (k)) : k ∈ Kn }, we

create a bootstrap rendition of those Rn -observations belonging to some “complete” block

Rn (k), k ∈ Kn in the partition of Rn ; see Figure 1(d). As in the time series case, some

5

boundary observations of Rn may not be used in the bootstrap reconstruction and this

occurs commonly with other spatial block methods like subsampling (Sherman, 1996).

2

Closed-form expressions for σ̂n,

OL (bn ) are obtainable for some spatial statistics θ̂n (see

2

(7)) while in other cases we may evaluate σ̂n,

OL (bn ) by Monte-Carlo simulation as follows.

Let M be a large positive integer, denoting the number of bootstrap replicates. For each

` = 1, . . . , M , generate a set {`ik,OL : k ∈ Kn }, of iid random variables according to (3)

to obtain the `th bootstrap sample replicate as {Zn (Bn (`ik,OL )) : k ∈ Kn }, whose sample

∗

∗

mean evaluated in the function H yields the `th bootstrap replicate `θ̂n,

OL of θ̂n,OL . The

Monte-Carlo approximation to the OL block bootstrap variance estimator is then given by

MC

2

σ̂n,

=

OL (bn )

M

´2

N1 X ³ ∗

MC ∗

,

θ̂

−

E

θ̂

` n,OL

∗

n,OL

M `=1

∗

EMC

∗ θ̂n,OL =

M

1 X ∗

.

`θ̂

M `=1 n,OL

For the NOL block bootstrap method, we consider resampling strictly from the NOL

collection of blocks {Rn (k) : k ∈ Kn }; see Figure 1(c). Let {ik,N OL : k ∈ Kn } denote iid

random variables with common distribution P (ik,N OL = j) = 1/|Kn |, j ∈ Kn . The NOL

∗

bootstrap version of Zn (Rn (k)), k ∈ Kn , is then Zn,

As

N OL (Rn (k)) = Zn (Rn (ik,N OL )).

before, we combine the resampled NOL block observations into a size-N1 bootstrap sample

∗

∗

{Zn,

N OL (Rn (k)) : k ∈ Kn } with an average denoted by Z̄n,N OL . The NOL block bootstrap

2

∗

variance estimator of σn2 is given by σ̂n,

N OL (bn ) = N1 Var∗ (θ̂n,N OL ) based on the NOL block

∗

∗

bootstrap version θ̂n,

N OL = H(Z̄n,N OL ) of θ̂n .

3

Main results

3.1

Assumptions

To describe the results on the spatial block bootstrap, we require some notation and assumpP

tions. For a vector x = (x1 , ..., xd )0 ∈ Rd , let kxk, kxk1 = di=1 |xi | and kxk∞ = max1≤i≤d |xi |

denote the Euclidean, l1 and l∞ norms of x, respectively. Define the distance between two

sets E1 , E2 ⊂ Rd as: dis(E1 , E2 ) = inf{kx − yk∞ : x ∈ E1 , y ∈ E2 }. For an uncountable set

A ⊂ Rd , vol(A) will refer to the volume (i.e., the Rd Lebesgue measure) of A.

The assumptions below, resembling those in Nordman and Lahiri (2004), include a

mixing/moment condition (Assumption 4r ) stated as a function of a positive argument

r ∈ Z+ = {0, 1, 2, . . .}. For ν = (ν1 , ..., νp )0 ∈ Zp+ , let Dν denote the νth order partial

ν

differential operator ∂ ν1 +...+νp /∂xν11 ...∂xpp and ∇ = (∂H(µ)/∂x1 , . . . , ∂H(µ)/∂xp )0 be the

vector of first order partial derivatives of H at µ. Let FZ (T ) denote the σ-field generated

6

by the variables {Z(s) : s ∈ T }, T ⊂ Zd and define the strong mixing coefficient for the

random field Z(·) as α(k, l) = sup{α̃(T1 , T2 ) : Ti ⊂ Zd , |Ti | ≤ l, i = 1, 2; dis(T1 , T2 ) ≥ k}

where α̃(T1 , T2 ) = sup{|P (A ∩ B) − P (A)P (B)| : A ∈ FZ (T1 ), B ∈ FZ (T2 )}.

Assumptions:

(d+1)/d

(1.) As n → ∞, b−1

n + bn

/λn → 0 for λn in (1). For any positive sequence an → 0, the

−(d−1)

d

number of cubes an (i + [0, 1) ), i ∈ Zd , intersecting both R0 and Rd \ R0 is O(an

).

p

(2.) H : R → R is twice continuously differentiable and, for some a ∈ Z+ and real C ≥ 0,

it holds that max{|Dν H(x)| : kνk1 = 2} ≤ C(1 + kxka ), x ∈ Rp .

¡ 0

¢

P

0

%(k)

∈

(0,

∞),

where

%(k)

=

Cov

∇

Z(t),

∇

Z(t

+

k)

.

(3.) σ 2 =

d

k∈Z

(4r .) There exist nonnegative functions α1 (·), g(·) such that α(k, l) ≤ α1 (k)g(l). For some

0 < δ ≤ 1, 0 < κ < (2r − 1 − 1/d)(2r + δ)/δ and C > 0, it holds that EkZ(t)k2r+δ < ∞,

P∞

(2r−1)d−1

α1 (m)δ/(2r+δ) < ∞, g(x) ≤ Cxκ , x ∈ [1, ∞).

m=1 m

Growth rates for the blocks bn and sampling region Rn = λn R0 in Assumption 1 are the

spatial analog of scaling used with the MBB for time series d = 1 (Lahiri, 1996). The condition on R0 is satisfied by most regions of practical interest and holds in the plane d = 2, for

example, if the boundary ∂R0 of R0 is delineated by a simple curve of finite length (Sherman,

1996). The R0 -condition implies that the effect of data points lying near the boundary of

Rn is negligible compared to the totality of data points and that the volume of Rn determines the number of data points and blocks (see Lemma 1(c) in Appendix). Conditions on

the smooth model function H in Assumption 2 are standard in this context. Assumption 3

implies that a positive limiting variance σ 2 = limn→∞ σn2 exists. Assumption 4r describes

a bound on the strong mixing coefficient satisfying certain growth conditions. Bounds of

this type are known to apply for many weakly dependent random fields and time series; see

Doukhan (1994) and Guyon (1995). This assumption also allows moment bounds for sample

means of the process Z(·) as well as a central limit theorem (Lahiri, 2003b).

3.2

Bias and variance expansions

To find optimal block sizes, we first provide the asymptotic bias and variance of the spatial

2

2

block bootstrap estimators σ̂n,

OL (bn ) and σ̂n,N OL (bn ).

Theorem 1 (i) Suppose that Assumptions 1 - 3 and 42+2a hold with “a” as specified under

7

Assumption 2 and B0 6= 0 in (4). Then, the bias of σ̂n2 (bn ) is

£

¤

¢

B0 ¡

E σ̂n2 (bn ) − σn2 = −

1 + o(1) ,

bn

B0 =

X

kkk1 %(k) ∈ R,

(4)

k∈Zd

2

2

where σ̂n2 (bn ) represents either σ̂n,

OL (bn ) or σ̂n,N OL (bn ).

(ii) If Assumptions 1-3 and 45+4a hold with “a” as specified under Assumption 2, then

¤

£ 2

(b

)

=

Var σ̂n,

n

OL

µ ¶d

¤¡

¢

£ 2

2

(b

)

1

+

o(1)

,

Var σ̂n,

n

N OL

3

¤

£ 2

¢

2σ 4 bdn ¡

(b

)

=

Var σ̂n,

1

+

o(1)

.

n

N OL

vol(Rn )

Because more OL blocks are generally available than NOL ones, the variance of OL block

estimator turns out to be (2/3)d times smaller than the variance of the NOL block estimator.

For d = 1, a size n time series sample is obtained from (1) by setting R0 = (−1/2, 1/2],

λn = n on the untranslated lattice Z = Z and applying this in Theorem 1 gives the wellknown bias/variance of the MBB variance estimator with time series (Künsch, 1989, Hall et

al., 1995, Lahiri, 1996).

We also note some important differences between the spatial bootstrap and spatial subsampling as an alternative for variance estimation with subregions of dependent data (Sherman and Carlstein, 1994, Sherman, 1996). While the block bootstrap aims to recreate a

“size Rn ” sampling region, subsampling considers scaled-down copies bn R0 of Rn = λn R0

that can be treated as repeated, smaller versions of Rn on which to evaluate a spatial statistic θ̂n (Politis et al., 1999, Section 3.8); the subsampling variance estimator of Var(θ̂n ) is

the sample variance of the subsample evaluations of θ̂n . Philosophical differences in the

bootstrap/subsampling mechanics translate into large differences in their bias and variance

expansions. For example, if Z̄0,n denotes the sample mean over the bdn observations in the

block bn U for the bootstrap or over the |bn R0 ∩ Zd | observations in bn R0 for subsampling, the

expected values Eσ̂ 2 of bootstrap/subsampling variance estimators of σ 2 = N Var(θˆn ) are

n

bootstrap: bdn Var(∇0 Z̄0,n ) + o(N −1/2 )

n

subsampling: |bn R0 ∩ Zd |Var{H(Z̄0,n )}(1 + o(1)).

That is, for any dimension d of sampling, the bootstrap bias (i.e, Eσ̂n2 − σn2 ) is determined

by the first (linear) term ∇0 Z̄0 in a Taylor’s expansion of H(Z̄0,n ) around µ. This is not

generally true for subsampling, which can require up to four terms in the Taylor’s expansion

of H(Z̄0,n ) to pinpoint the main O(b−1

n ) bias component, especially in lower dimensions d =

1, 2; see Nordman and Lahiri (2004). Under appropriate conditions, subsampling variance

estimators have the same variance and bias orders (i.e., bdn /λdn , b−1

n ) as the bootstrap, but the

proportionality constants for these orders depend greatly on the geometry of Rn and are not

8

as simple as the bootstrap expressions in Theorem 1. This can make plug-in estimation of

optimal block sizes (see Section 5) generally more difficult for subsampling compared to the

bootstrap.

3.3

Theoretical optimal block size

The bias and variance expansions from Theorem 1 yield an asymptotic MSE (e.g., E[σ̂n2 (bn )−

σn2 ]2 ), which the theoretically best block size bn minimizes to optimize the over-all performance of a block bootstrap variance estimator. Explicit expressions for optimal OL and

NOL block sizes for block bootstrap variance estimation are given in the following. We find

that, in large samples, OL blocks should be larger than NOL ones by a factor owing to

differing variances in Theorem 1.

Theorem 2 Under the assumptions of Theorem 1(ii), the optimal block sizes bopt

n,OL and

2

2

bopt

n,N OL for σ̂n,OL (·) and σ̂n,N OL (·) are given by

µ 2

¶1/(d+2)

µ ¶d/(d+2)

B0 · vol(Rn )

3

opt

opt

opt

(1 + o(1)).

(1 + o(1)),

bn,N OL =

bn,OL = bn,N OL

2

dσ 4

(5)

Remark: The volume vol(Rn ) may be replaced by the sample size N in Theorems 1-2.

In terms of the spatial sample size, the optimal block size for bootstrap variance estimation is O(N 1/(d+2) ). For the time series case d = 1, we set the time series length n for

vol(Rn ) in Theorem 2 to yield the optimal block expression obtained by other authors with

P

P∞

σ2 = ∞

k=−∞ %(k), B0 =

k=−∞ |k|%(k) (Künsch, 1989, Hall et al., 1995); see Section 3.2

for defining Rn with time series. Hence, there is continuity in the optimal blocks between

spatial and times series bootstrap settings. In the spatial case, the key difference is that

optimal blocks depend on the dimension d of sampling as well as the volume of the sampling

region Rn (or relatedly the spatial sample size) as determined by the geometry of Rn .

In subsequent sections, we turn to examining practical methods for estimating the optimal

block size for using the spatial bootstrap.

4

Empirical choice of the optimal block size

We describe two general approaches for estimating the optimal block size for the bootstrap

estimators. These are spatial versions of block selection procedures for time series and involve

either cross-validation (Hall et al., 1995) or “plug-in” estimators. Let σ̂n (·) denote either the

OL or NOL block-based variance estimator in the following.

9

Hall et al. (1995) proposed a method for choosing a block length with the time series MBB,

in which a type of subsampling is used to estimate the MSE incurred by the bootstrap at

various block sizes. To describe a spatial version of their procedure, let m ≡ mn be a positive

integer sequence satisfying m−1 +m/λn → 0 and define a set Mm = {i ∈ Zd : i+mU ⊂ Rn } of

all OL cubes mU (of side length m) within Rn = λn R0 . Consider the rectangular subsamples

of the data: Zn (i + mU), i ∈ Mm . (The subsampling regions here correspond to cubes

lying inside Rn , not scaled-down copies of Rn more typically associated with subsampling

2

(b), i ∈ Mm , denote the block bootstrap variance estimator

(Sherman, 1996).) Let σ̂i,m

applied to each subsample Zn (i + mU) by resampling blocks of size b. A subsampling

2

(b)), the mean squared error of the bootstrap variance estimator on a

estimator of MSE(σ̂i,m

rectangular subsample, is then given as

[ m (b) =

MSE

i2

X h

1

2

σ̂i,m

(b) − σ̂n2 (b∗n )

|Mm | i∈M

(6)

m

where b∗n is a pilot block size. For some ² > 0, let Jm,² = {b ∈ Z+ : md/(d+2)−² ≤ b ≤

©

ª

[ m (b) : b ∈ Jm,² , where b̂0m

md/(d+2)+² } and define the minimizer of (6) as b̂0m = arg min MSE

estimates the optimal block size for a sampling region of size vol(mU) = md . By Theorem 3,

we may re-scale b̂0m to obtain a block size estimator for the original sampling region Rn by

£

¤1/(d+2)

b̂HHJ

= b̂0m · vol(Rn )/md

.

n

We refer to this as HHJ method.

A second, computationally simpler estimator of the best block size is through a nonparametric plug-in (NPI) method. This involves directly substituting estimates of population quantities into the theoretical block expressions from (5).

For the time series

MBB, plug-in choices of block size have been suggested in various forms by Bühlmann and

Künsch (1999), Politis and White (2003), and Lahiri et al. (2006). Our NPI approach for

NPI

the spatial block size involves two integer sequences bNPI

n,1 and bn,2 of block sizes satisfying

d/(d+i)

NPI

1/bNPI

n,i + bn,i /λn

→ 0 as n → ∞, for i = 1, 2. Following Lahiri et al. (2006), we use

the difference of two bootstrap variance estimators to estimate the bias component B0 from

b0 = 2bNPI · [σ̂ 2 (bNPI ) − σ̂ 2 (2bNPI )]. The expectation result in Theorem 1 suggests

(5) by B

n,2

n

n,2

n

n,2

b0 as an appropriate estimator of B0 . Using the second block size bNPI

B

n,1 , we estimate the

b0 and σ̂ 2 into the theoretical

spread component σ 2 in (5) with σ̂ 2 = σ̂ 2 (bNPI ). Substituting B

n

n,1

of the optimal block size for σ̂n2 (·).

expression from Theorem 2 gives a NPI estimator b̂NPI

n

Both HHJ and NPI block selection methods are consistent under mild conditions. In

previous work, Hall et al. (1995) did not establish the consistency of their block method with

10

the time series MBB and the method’s formal consistency has remained an open problem.

We provide the first consistency result for the HHJ method (to our knowledge), which is

valid for both spatial and time series data. In the following, let bopt

n denote the theoretical

optimal block size for σ̂n2 (·) and suppose σ̂n2 (·) is used to compute b̂HHJ

or b̂NPI

n

n .

Theorem 3 Assume m2d+²(d+2) /vol(Rn ) = o(1) for ² defining Jm,² , b∗n = C ∗ vol(Rn )1/(d+2)

for some C ∗ > 0. In addition to Assumptions 1-3 and B0 6= 0, suppose Assumption 4r holds

using r = 5 + 4a for b̂NPI

or r = 15 + 12a for b̂HHJ

(with a as specified under Assumption 2).

n

n

p

p

opt

HHJ

opt

Then as n → ∞, b̂NPI

n /bn −→ 1 and b̂n /bn −→ 1.

The HHJ block estimator b̂HHJ

depends on the subsampling tuning parameter m and

n

a block size b∗n = C ∗ vol(Rn )1/(d+2) , C ∗ > 0 (e.g., C ∗ = 1, 2), of the optimal order from

Theorem 2. As in the time series case, the optimal value of m is unknown in the spatial

setting. However, to reduce the effect of the tuning parameter b∗n , we may follow the iteration

proposal of Hall et al. (1995). Upon obtaining an estimate b̂HHJ

n , this value may be set as the

pilot block b∗n in a second round of the algorithm and this iterative process may be repeated

until convergence of the block estimate. This is the approach we apply in Section 5.

NPI

For the NPI estimator b̂NPI

n requiring smoothing parameters bn,i , i = 1, 2, it can be estab-

1/(d+4)

lished that the optimal order of the block size bNPI

n,2 for estimating the bias B0 is vol(Rn )

1/(d+2)

while the optimal order of the block size bNPI

from Theorem 2. Hence, plaun,1 is vol(Rn )

1/(d+2i)

sible tuning parameters have the form bNPI

for Ci > 0, i = 1, 2.

n,i = Ci vol(Rn )

5

Simulation Study

In this section, we summarize numerical studies of the performance of spatial block bootstrap

variance estimators as well as empirical methods for choosing the block size.

5.1

Block Bootstrap MSE and Optimal Blocks

We first conducted a simulation study to compare OL and NOL block bootstrap variance

estimators of σn2 = N Var(θ̂n ) as well as a spatial subsampling estimator, where θ̂n = Z̄N

is the sample mean over a circular sampling region Rn . Two regions of different sizes were

considered Rn := {x ∈ R2 : kxk ≤ 9} and {x ∈ R2 : kxk ≤ 20}. We used the circulant

embedding method of Chan and Wood (1997) to generate real-valued mean-zero Gaussian

random fields on Z2 = Z2 (i.e., t = 0) with an Exponential covariance structure:

£

¤

Model(β1 , β2 ) : %(k) = exp − β1 |k1 | − β2 |k2 | , k = (k1 , k2 )0 ∈ Z2 , β1 , β2 > 0.

11

We considered the values (β1 , β2 ) = (1, 1) and (0.5, 0.3) to obtain covariograms exhibiting

various rates of decay. Because the smooth model function is linear here (i.e., H(x) = x), the

2

2

bootstrap estimator σ̂n,

OL (bn ) of σn has a closed form expression as a scaled sample variance

of block means

2

σ̂n,

OL (bn )

= |In |

−1

X

bdn

´2

³

Z̄i,n − Z̄n ,

Z̄n = |In |−1

X

Z̄i,n ,

(7)

i∈In

i∈In

where Z̄i,n denotes the sample mean of the b2n (d = 2) observations in an OL block Bn (i),

2

i ∈ In ; σ̂n,

N OL (bn ) is given by replacing In , Z̄i,n with NOL analogs Kn , Z̄bn i,n .

For comparison, we also evaluated the OL subsampling variance estimator of the sample

mean θ̂n = Z̄n with subsamples as translates of bn R0 , based on the template R0 = {x ∈

R2 : kxk ≤ 1/2} for Rn . Here the OL subsampling estimator of σn2 has a form similar to

2

d

σ̂n,

OL but employs OL subcircles rather than cubes (i.e., redefine In , bn in (7) by using bn R0

instead of bn U). That is, in the case of the sample mean, the only difference between the

OL block bootstrap and subsampling variance estimators is the subregion shape used and

the bootstrap employs rectangular blocks.

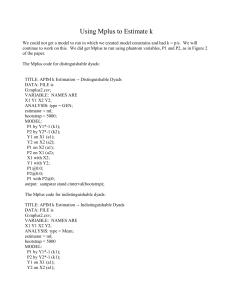

Figure 2 gives the normalized MSEs for each variance estimator over various block sizes

with optimal block sizes also denoted. We observe the following from the results:

1. The OL bootstrap estimator generally performed as good as (and often better than)

than the NOL version at any fixed block size considered.

2. At their optimal blocks, the OL block bootstrap performed slightly better than OL

subsampling in most cases. Table 1 provides a decomposition of the bias and variance

of both estimators at optimal block scaling. In the one instance where subsampling

outperformed the bootstrap (i.e., Model(0.5,0.3) on the larger Rn ), more optimal OL

circular subsamples were available than OL optimal blocks (i.e., 665 subcircles compared to 632 blocks).

3. The MSEs for the OL bootstrap exhibited more curvature than subsampling as a

function of block size, which may suggest a possible advantage for the bootstrap in

estimating/locating optimal block sizes. This topic is beyond the scope of the current

paper but may be important for future investigation.

5.2

Block Selection Methods

Using the same regions Rn and covariance models, we next studied empirical block selection for the OL block bootstrap by investigating possible values for constants (C1 , C2 )

12

1/(d+2i)

and (C ∗ , m), used to define the tuning parameters b̂NPI

, i = 1, 2 and

n,i = Ci vol(Rn )

b∗n = C ∗ vol(Rn )1/(d+2) in the NPI and HHJ methods, respectively. With time series, numerical studies for the MBB suggest that constants near one perform adequately for plug-in

block choices (Lahiri et al., 2006). Hence, we considered combinations of scalars C1 , C2 , C ∗ ∈

[1/2, 2] in increments of 1/4 and values m = {Cvol(Rn )1/6 : C = 2, 3, 4} to produce an estimator b̂n,OL of the optimal OL block size bopt

n,OL with the NPI or HHJ approach. (The m-values

in the HHJ method were chosen under Theorem 3 with ² = 1/2 and Jm,² = [1, m] ∩ Z; the

resulting values for m were typically around m = 5, 10, 15.) To initially assess a block

estimator, an MSE-criterion

2

4

2

2

opt

E{σ̂n,

OL (b̂n,OL ) − σ̂n,OL (bn,OL )} /σn

(8)

was evaluated based on 10,000 simulations for each sampling region Rn , dependence structure, and (C1 , C2 ) or (C ∗ , m) combination. The criterion assesses the discrepancy between

bootstrap variance estimators based on a block estimate and the optimal OL block choice

from Figure 2.

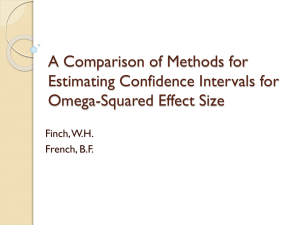

The simulation results (not included here for brevity) indicated tuning parameters values

of C1 , C2 ≤ 1 were generally best for the NPI method, especially with C2 = 1/2. For the

HHJ method, a constant C ∗ ∈ [1, 2] performed adequately as observed in Politis and Sherman (2001) while values around m = 10, 15 performed best on the larger sampling region

(especially under strongest spatial dependence with Model(0.5,0.3) where larger subsample

blocks may be expected). Figure 3 illustrates dot plots of the MSE-criterion (8) to give a

rough comparison of NPI and HHJ methods over the mentioned tuning parameter ranges

where the methods often performed well (i.e., C1 , C2 ∈ {0.5, .75, 1}, C ∗ ∈ {1, 1.5, 2}). Figure 3 indicates that both NPI and HHJ methods often performed comparably in terms of (8)

with no one method emerging as clearly superior. The NPI method often performed well and

produced the lowest MSE-realizations but the HHJ exhibited more stability (less variability)

across a larger number of tuning parameters. In this sense, the HHJ method appeared more

robust to the tuning parameter selection, which may be due to the HHJ iteration steps to

minimize the effect of C ∗ in b∗n (described at the end of Section 4). At the same time, the

HHJ method is computationally more intensive than the NPI which can be prohibitive in

some applications (see the data example of Section 6.2).

Table 2 displays the frequency distribution of OL block estimates based on both selection

methods. Both NPI and HHJ approaches performed fairly well in identifying the optimal

OL block size and both methods appear comparable. On the larger sampling regions, the

13

NPI and HHJ selections seemed to exhibit more variability but empirical block estimates

that deviate slightly from the optimal block appear less serious for these regions (since the

bootstrap MSEs in Figure 2 are similar around the optimal block size).

6

Data examples

6.1

Data Example 1: Longleaf Pines

We illustrate the spatial bootstrap method under the smooth function model with longleaf

pine data from Cressie (1993). Figure 8.4 of Cressie (1993) provides counts for the number of pines in a 32 × 32 grid of quadrats observed in a forest region of the Wade Tract,

Georgia, where each quadrat corresponds to a (0, 6.25] × [0, 6.25) m2 section partitioning a

(0, 200] × [0, 200) m2 study region. Analysis by Sherman (1996) suggests that the spatial

counts are stationary. We wish to test if the locations of pines exhibit complete spatial randomness or the tendency to cluster through the clustering index ICS≡ s2n /x̄n − 1 of David

and Moore (1954), involving the sample mean x̄n and variance s2n of the N = 1024 quadrat

counts (defining s2n here with a divisor N ). A positive ICS-value implies the trees tend to

cluster while we should expect the statistic to be zero when the tree locations are completely

spatially random; see Cressie (1993) for details.

To judge if the observed ICS =1.164 for the longleaf pine counts is significantly greater

than zero, a test statistic requires a variance estimate of σn2 = N Var(ICS) that accounts for

possible spatial dependence between the counts. The block bootstrap is applicable under

the smooth function model. To see this, let H : R2 → R for H(x, y) = x−1 y − x − 1 and

define Z̄N as the bivariate sample mean of Z(s) = (X(s), X 2 (s))0 of the tree counts X(s)

over quadrat sites s ∈ Rn ∩ Z2 , Rn = (0, 32]2 . Then, the ICS index equals θ̂n = H(Z̄N ).

2

2

Due to the nonlinearity of θ̂n , we evaluated the OL block estimator σ̂n,

OL of σn =

N Var(θ̂n ) by M = 3000 Monte-Carlo simulations. Both NPI and HHJ methods tended

to agree in their block selections. The NPI method selected an OL block b̂NPI

n,OL = 3 us1/(d+2i)

, i = 1, 2;

ing C2 = 0.5 and C1 ∈ {0.5, 1, 2} in the two pilot blocks b̂NPI

n,i = Ci N

NPI

∗

changing C2 = 2 with the same C1 range produced b̂NPI

n,OL = 3 or 5. Using bn = bn,1 (i.e.,

C∗ = C1 ∈ {0.5, 1, 2}) in the HHJ method led to block estimates b̂HHJ

n,OL = 3 for m ∈ {5, 20},

HHJ

b̂HHJ

n,OL = 5 for m = 10 and b̂n,OL = 6 for m = 15. That is, both NPI and HHJ appeared to be

fairly stable over one tuning parameter C1 = C ∗ in this example and changed slightly over

the second tuning parameter (C2 for NPI or m for HHJ); note that we may anticipate HHJ to

14

be robust to C ∗ due to iteration steps in the method (see Section 4). The resulting estimates

2

2

2

σ̂n,

OL (3) = 141.58, σ̂n,OL (5) = 126.60 and σ̂n,OL (6) = 116.53 produced estimated standard

2

1/2

errors (σ̂n,

of ICS as 0.372, 0.352 and 0.337 that indicate significant evidence of

OL /N )

clustering based on a normal approximation for the ICS index. Other findings in Chapter 8

of Cressie (1993) involving Poisson goodness-of-fit tests or distance methods support this

conclusion as well as the subsampling analysis by Sherman (1996).

6.2

Data Example 2: U.S. Cancer Mortality Map

Sherman and Carlstein (1994) provide a binary mortality map of the United States that indicates “high” or ”low” morality rates from liver and gallbladder cancer in white males during

1950-1959. The map consists of a spatial region Rn containing 2298 sites on the integer Z2

grid, of which 2012 have four nearest-neighbors available; see Sherman and Carlstein (1994)

for details. For a given site s ∈ Z2 , we code data Z(s) = 0 or 1 to indicate a low or high

mortality rate. (Note Sherman and Carlstein (1994) used coding −1/1 rather than 0/1.)

To investigate whether incidences of high cancer mortality exhibit clustering, Sherman

and Carlstein (1994) proposed fitting an autologistic model fit to the data as follows. Suppose

the data were generated by an autologistic model [Besag (1974)]:

£

¤

¡

¢

exp z(α + βN (s))

£

¤ , z = 0, 1,

P Z(s) = z | Z(i), i 6= s =

(9)

1 + exp α + βN (s)

P

where N (s) = kh−sk=1 Z(h) denotes the sum of indicators over the four nearest-neighbors

of site s. Positive values of the parameter β suggest a tendency of clustering, while β = 0

implies no clustering among sites. Maximum pseudolikelihood estimation (MPLE) with the

N = 2012 pairs Z(s), N (s) yielded β̂ = 0.419 and α̂ = −1.485. To test if the estimate β̂ is

significantly greater than zero, we require an estimate of N Var(β̂) that accounts for spatial

dependence because standard errors for MPLE statistics β̂ generally have no closed-forms.

We evaluated the OL block bootstrap estimator of N Var(β̂) based on M = 800 MonteCarlo simulations. For this variance estimation problem, a NPI estimate of OL block size

is relatively fast and easy to compute while the HHJ method is computationally prohibitive

(requiring many iterations of MPLE to be applied in the subsampling steps). Following

1/(d+2i)

, i = 1, 2,

tuning parameters from Section 5.2, we set C1 = C2 = 0.5 for b̂NPI

n,i = Ci N

in the NPI method which selected an OL block b̂NPI

n,OL = 4 with associated variance estimate

1/2

2

2

of β̂ is 0.0799,

σ̂n,

OL (4) = 12.84. The corresponding estimated standard error (σ̂n,OL (4)/N )

from which we conclude significant evidence of clustering. This agrees with other analyses

15

performed by Sherman and Carlstein (1994) accounting for their parametrization. Similar

NPI blocks b̂NPI

n,OL ∈ {2, 4, 5} with standard error estimates around 0.08 also followed from

other tuning combinations C1 , C2 ∈ {0.5, 0.75, 1} in this example.

Because the MPLE statistic β̂ does not readily fit into the smooth model framework

2

(Section 2.1), we further examined the bootstrap variance estimator σ̂n,

OL and block selection

in a small, but computationally involved, numerical study. We simulated data from an

autologistic model (9) with α = −1.49, β = 0.42 on a region of the same shape as the U.S.

mortality map. (For a single simulated data set, we generated independent Bernoulli p = 0.1

variables on the grid and applied the Gibbs Sampler with a burn-in of 10,000 iterations.)

The mean Eβ̂ and variance N Var(β̂) of the MPLE statistic were found to be 0.419 and

11.835 based on 10,000 simulated data sets. Table 3 displays the MSEs associated with the

2

OL block estimator σ̂n,

OL (based on M = 800 Monte-Carlo simulations) for various blocks

bn . The table also shows the distribution of OL block size estimates using the NPI method

with C1 = C2 = 0.5. The median and mode of the block estimates equal the optimal block

length bopt

n,OL =3 and simulations with other choices of C1 , C2 ∈ [1/2, 2] led to similar results.

7

Appendix: Proofs of Main Results

2

To save space, we consider only the OL block bootstrap estimator σ̂n,

OL (bn ) in detail. Proofs

for the NOL block version follow in an analogous manner. For i ∈ In and k ∈ Kn , let

Ui and Uk∗ respectively denote the sample averages of the bdn observations in Zn (Bn (i))

P

∗

−1

ˆ

and Zn,

OL (Rn (k)). Define µ̂n = |In |

i∈In Ui , a centered version µ̂n,cen = µ̂n − µ, ∇ =

ˆ 0 (Ui −µ) and W ∗ = ∇

ˆ 0 U ∗ . For ν = (ν1 , ..., νp )0 ∈

(∂H(µ̂n )/∂x1 , . . . , ∂H(µ̂n )/∂xp )0 , Wi,cen = ∇

k

k

Q

Q

p

p

νi

p

p

ν

ν

(Z+ ) , x ∈ R , write x = i=1 xi , ν! = i=1 (νi !), cν = D H(µ)/ν! and ĉν = Dν H(µ̂n )/ν!.

We assume that the Rd zero vector 0 ∈ Kn . Let C to denote a generic positive constant that

does not depend on n, block sizes, or any Zd , Zd points. Appearances of “a,r” refer to the

a, r-values from Assumptions 2 and 4r for the theorem under consideration. Limits in order

symbols are taken letting n tend to infinity.

We require some results in Lemma 1, where parts(a)-(b) follow from Doukhan (1994, p. 9,

26) and Jensen’s inequality and part(c) holds by the R0 -boundary condition in Assumption 1.

Lemma 1 (a) Suppose T1 , T2 ⊂ Zd are bounded with dis(T1 , T2 ) > 0. Let p, q > 0 where

1/p + 1/q < 1. If the random variable Xi is measurable with respect to FZ (Ti ), i = 1, 2, then

¡

¢1−1/p−1/q

|Cov(X1 , X2 )| ≤ 8(E|X1 |p )1/p (E|X2 |q )1/q α dis(T1 , T2 ); maxi=1,2 |Ti |

.

(b) Under Assumption 4r , r ∈ Z+ , it holds that for 1 ≤ m ≤ 2r and any T ⊂ Zd ,

16

Ek

P

s∈T

¡

¢

Z(s) − µ km ≤ C|T |m/2 and Ekµ̂n − µkm ≤ CN −m/2 .

(c) Under Assumption 1, N/vol(Rn ) → 1, |Kn |/(b−d

n vol(Rn )) → 1, |In |/vol(Rn ) → 1.

P2

2

∗

∗

∗

To prove Theorem 1, we write σ̂n,

OL (bn ) =

i=1 Var∗ (Ein ) + 2Cov∗ (E1n , E2n ) where

R

P

P

∗

∗

ν

∗

−1

∗

ν 1

(1 − ω)Dν H(µ̂n +

E1n

=

kνk1 =1 ĉν (Z̄n,OL − µ̂n ) , E2n = 2

kνk1 =2 (ν!) (Z̄n,OL − µ̂n )

0

∗

∗

∗

ω(Z̄n,

OL − µ̂n ))dω, using a Taylor expansion of θ̂n,OL = H(Z̄n,OL ) around µ̂n .

Proof of Theorem 1(i). By Assumption 1 and Lemma 1(c), it suffices to show that:

∗

E(N1 Var∗ (E1n

)) − σn2 = −

¢

B0 ¡

1 + o(1) ,

bn

∗

E(N1 Var∗ (E2n

)) = O(N −1 ),

(10)

∗

∗

from which N1 E|Cov∗ (E1n

, E2n

)| = O(N −1/2 ) = o(b−1

n ) follows by Holder’s inequality. BeginP

∗

∗

ning with Var∗ (E1n

) and writing E1n

= |Kn |−1 k∈Kn Wk∗ , it follows from the construction

∗

d

of Zn,

OL (Rn (k)), k ∈ Kn , by the distribution (3) and N1 = bn |Kn | that

µ

³

´2 ¶

Var∗ (W0∗ )

1 X 2

∗

d

0

ˆ µ̂n,cen

N1 Var∗ (E1n ) = N1

= bn

Wi,cen − ∇

,

|Kn |

|In | i∈I

(11)

n

ˆ 0 µ̂n,cen = |In |−1 P

ˆ

where ∇

i∈In Wi,cen . Expand each component ĉν , kνk1 = 1, of ∇ around µ

and write Wi,cen = S1i +S2i , i ∈ In , where S1i = ∇0 (Ui −µ) and S2i is defined by the difference.

2

By Assumption 5, Lemma 1(b) and Holder’s inequality, we find bdn E|S1i S2i |, bdn ES2i

≤ CN −1/2 ,

ˆ 0 µ̂n,cen )2 ≤ C bd N −1 = o(b−1 ) from k∇k

ˆ ≤ C(1 + kµ̂n,cen k + kµ̂n,cen k1+a ).

i ∈ In , and bd E(∇

n

Hence, (10) for

n

∗

Var∗ (E1n

)

σn2 − σ 2 = O(N −1/2 ),

n

will follow from (11) by showing

2

bdn ES1i

− σ2 = −

¢

B0 ¡

1 X bdn − Cn (k)

%(k)

=

−

1

+

o(1)

, (12)

d−1

bn

b

b

n

n

d

k∈Z

using the lattice point count Cn (k) = |Zd ∩ bn U ∩ (k + bn U)|, k ∈ Zd . The first part of

(12) holds from Nordman and Lahiri (2004, Lemma 9.1); the second part follows by the

−(d−1) ¡ d

Lebesgue dominated convergence theorem since 0 ≤ bdn − Cn (k) ≤ Ckkkd∞ bd−1

bn −

n , bn

¢

P

∗

) in (10),

Cn (k) → −kkk∞ for k ∈ Zd and k∈Zd kkkd∞ |%(k)| < ∞. Turning to Var∗ (E2n

4+2a

4

∗

∗

∗ 2

). We next note

) ≤ C(1 + kµ̂n k2a )(E∗ kZ̄n,

we bound E∗ (E2n

OL − µ̂n k + E∗ kZ̄n,OL − µ̂n k

P

−1

∗

∗

∗

∗

Z̄n,

OL = |Kn |

k∈Kn Uk and, conditioned on the data, {Uk }k∈Kn are iid with E∗ U0 = µ̂n ,

E∗ kU0∗ k4+2a < ∞ so that, for m = 2 or 2 + a,

2m

∗

≤ C|Kn |−m E∗ kU0∗ − µk2m ≤ C

E∗ kZ̄n,

OL − µ̂n k

|Kn |−m X

kUi − µk2m .

|In | i∈I

(13)

n

∗ 2

∗

) ] ≤ CN −1 . ¤

) ≤ N1 E[E∗ (E2n

By Holder’s inequality with Lemma 1, we find N1 EVar∗ (E2n

17

Proof of Theorem 1(ii). Beginning with (11), write |In |−1

P

i∈In

Wi2 ≡ T1n + 2T2n +

2

2

T3n as the sum of separate averages over terms S1i

, S1i S2i , S2i

, i ∈ In , respectively. From

Lemma 1(c) and Theorem 9.1 of Nordman and Lahiri (2004), it holds that Var(bdn T1n ) =

¡

¢

2

2σ 4 (2/3)d bdn /vol(Rn ) 1 + o(1) . To establish the expression for Var[σ̂n,

OL (bn )], it now suffices

to prove that

¡

¢

∗

Var(N1 Var∗ (E1n

)) = Var(bdn T1n ) 1 + o(1) ,

∗

Var(N1 Var∗ (E2n

)) = O(N −2 ),

∗

∗

since (10) and (14) entail Var[N1 Cov∗ (E1n

, E2n

)] = O(N −1 ).

(14)

By (13) and the bound

∗ 2

∗

on E∗ (E2n

) preceding (13), we may apply Lemma 1(b) to bound Var[N1 Var∗ (E2n

)] in

∗ 2 2

∗

(14) by N12 E[E∗ (E2n

) ] ≤ CN −2 . Then considering Var(N1 Var∗ (E1n

)) in (14), we bound

ˆ 0 µ̂n,cen )2 ] ≤ b2d E[∇

ˆ 0 µ̂n,cen ]4 = O(b2d /vol(Rn )2 ) so it becomes sufficient from (11) to

Var[bdn (∇

n

n

d

d

show Var(bn Tin ) = o(bn /vol(Rn )), i = 2, 3. We consider Var(bdn T3n ); the proof for Var(bdn T2n )

is similar.

2

2

For each k ∈ Zd , define a covariance function %n (k) = Cov(S20

, S2k

) and a distance

P

d

d

d

−2 2d

disn (k) = dis(Z ∩ Bn (0), Z ∩ Bn (k)). We bound Var(bn T3n ) = |In | bn

k∈Zd |{i ∈

P

In : i + k ∈ In }| · %n (k) ≤ V1n + V2n with sums V1n = |In |−1 b2d

n

k∈An |%n (k)| , V2n =

P

d

|In |−1 b2d

n

k∈Zd \An |%n (k)| where An = {k ∈ Z : kkk∞ ≤ bn }. By Lemma 1(b), |%n (k)| ≤

4

d

d

E(S20

) ≤ CN −2 b−2d

n , k ∈ An holds so that V1n = o(bn /vol(Rn )). For k ∈ Z \ An , it holds

(2r−1)d−1

that disn (k) ≥ 1 and |%n (k)| ≤ CN −2 b−2d

min{1, α1 [disn (k)]δ/(2r+δ) bn

n

} by Lemma 1(a)

and Assumption 4r . For ` ≥ 1 ∈ Z+ , |{k ∈ Zd : disn (k) = `}| ≤ C(bn + `)d−1 so we may

consider two sums of |%n (k)|, k ∈ Zd \ An , over distances ` ≡ disn (k) ≤ bn or > bn :

µ ¶2d(r−1)

µX

¶

∞

bn

X

¡

¢

`

1

d−1

(2r−1)d−1

d−1

δ/(2r+δ)

bn + bn

` α1 (`)

= o bdn /vol(Rn ) ,

V2n ≤ C 2

N |In | `=1

bn

`=b +1

n

2d(r−1)

substituting the additional term (`/bn )

≥ 1 in the second sum. Thus, Var(bdn T3n ) =

∗

o(bdn /vol(Rn )) follows, establishing the claim in (14) for Var∗ (E1n

). ¤

Proof of Theorem 2. Follows from Theorem 1 and optimization with calculus. ¤

b0 are MSE-consistent for σ 2

Proof of Theorem 3. By Theorem 1 and its proof, σ̂ 2 and B

then follows.

and B0 6= 0, respectively, so the consistency of b̂NPI

n

¯

¯

HHJ

[ m (b)/MSEm (b)−1¯ > η) (with

To establish the consistency of b̂n , let ∆m,η (b) = P (¯MSE

m ≡ mn ) for η > 0. It suffices to prove

¯µ

¯

µ ¶d/(d+2)

¶−1

¯ bd C

¯

m

B02

¯

¯

0

,

max ¯

MSE

(b)

−

1

max ∆m,η (b) ≤ C

+

¯ = o(1),

m

d

2

b∈Jm,² ¯

b∈Jm,²

¯

λn

m

b

18

(15)

where MSEm (b) = E[σ̂0,m (b) − σ 2 ]2 represents a MSE of a size-b block variance estimator on a sampling region mU (i.e., spatial observations Zn (mU)) with C0 /[2σ 4 ] = 1 or

[

(2/3)d for NOL or OL block cases. To see this, note b̂0m and bopt

m minimize MSEm (·) and

d 2

1/(d+2)

MSEm (·), respectively, where bopt

(1 + o(1)) is the optimal block

m = [2m B0 /(C0 d)]

size for a rectangular region mU by Theorem 2. Then, for b = b̂0m or bopt

m , it follows that

P

p

[ m (b)/MSEm (b) −→ 1 from ∆m,η (b) ≤

MSE

j∈Jm,² ∆m,η (j) = o(1) using (15), |Jm,² | =

p

O(md/(d+2)+² ) and m² (m2 /λn )d/(d+2) = o(1). By this and (15), one may argue that b̂0m /bopt

m −→

1. Consistency of b̂HHJ

= b̂0m · [vol(Rn )/md ]1/(d+2) for bopt

n

n then follows.

The second result in (15) for MSEm (b), b ∈ Jm,² , follows from the proof of Theorem 1

and using vol(mU) = md ; note σ 2 may be used in Theorem 1(i) (in place of σn2 ). For large n,

−2d/(d+2)

note that E[σ̂n2 (b∗n ) − σ 2 ]2 ≤ Cλn

d/(d+2)

(since b∗n is of order λn

) by applying Theorem 1

and that MSEm (b) ≥ Cm−2d/(d+2) (the optimal MSE order) for b ∈ Jm,² . Define MSEm (b) by

[ m (b) from (6). By adding/subtracting σ 2 inreplacing σ̂n2 (b∗n ) with σ 2 in the definition of MSE

[ m (b) and applying the Markov inequality, it holds that ∆m,η (b) ≤ C[P1 (b) + P2 (b) +

side MSE

P3 (b)] for any b ∈ Jm,² , where P1 (b) = {Var[MSEm (b)]/MSE2m (b)}1/2 , P2 (b) = E[σ̂n2 (b∗n ) −

P

2 ∗

σ 2 ]2 /MSEm (b) ≤ C(m/λn )2d/(d+2) and by Holder’s inequality P3 (b) =

i∈Mm E|σ̂n (bn ) −

1/2

2

σ 2 ||σ̂i,m

(b) − σ 2 |/(MSEm (b)|Mn |) ≤ CP2 (b) = O((m/λn )d/(d+2) ). To handle P1 (b), argud

2

2 4

ments as in proof of Theorem 1(ii) yield Var[MSEm (b)] ≤ Cλ−d

n m E[σ̂0,m (b) − σ ] , b ∈ Jm,² .

2

The bias/variance arguments from the proof of Theorem 1 give E[σ̂0,m

(b)−σ 2 ]4 /MSE2 (b) ≤ C,

for any b ∈ Jm,² when n is large, using the second part of (15). Hence, P1 (b) ≤ C(m/λn )d/2

holds and the bound on maxb∈Jm,² ∆m,η (b) in (15) follows. ¤

References

Besag, J. (1974) Spatial interaction and the statistical analysis of lattice systems (with

discussion). J. R. Stat. Sob. Ser. B 36, 192-236.

Bühlmann, P. and Künsch, H. R. (1999) Block length selection in the bootstrap for time

series. Comput. Stat. Data Anal. 31, 295-310.

Chan G. and Wood A. T. A. (1997) An algorithm for simulating stationary Gaussian

random fields. Applied Statistics 46, 171-181.

Cressie, N. (1993) Statistics for Spatial Data, 2nd Edition. John Wiley & Sons, New York.

David, F. N. and Moore, P. G. (1954) Notes on contagious distributions in plant populations. Ann. Bot. 18, 47-53.

19

Doukhan, P. (1994) Mixing: properties and examples. Lecture Notes in Statistics 85.

Springer-Verlag, New York.

Guyon, X. (1995) Random Fields on a Network. Springer-Verlag, New York.

Hall, P. (1985) Resampling a coverage pattern. Stochastic Processes and Their Applications

20, 231-246.

Hall, P. (1992) The Bootstrap and Edgeworth Expansion. Springer-Verlag, New York.

Hall, P. and Jing, B.-Y. (1996) On sample reuse methods for dependent data. J. R. Stat.

Soc. Ser. B 58, 727-737.

Hall, P., Horowitz, J. L., and Jing, B.-Y. (1995) On blocking rules for the bootstrap

with dependent data. Biometrika 82, 561-574.

Künsch, H. R. (1989) The jackknife and the bootstrap for general stationary observations.

Ann. Statist. 17, 1217-1241.

Lahiri, S. N. (1996) Empirical choice of the optimal block length for block bootstrap methods. Preprint, Department of Statistics, Iowa State University, Ames, IA.

Lahiri, S. N. (1999) Theoretical comparisons of block bootstrap methods. Ann. Statist. 27,

386-404.

Lahiri, S. N. (2003a) Resampling Methods for Dependent Data. Springer, New York.

Lahiri, S. N. (2003b) Central limit theorems for weighted sums of a spatial process under

a class of stochastic and fixed designs. Sankhya: Series A 65, 356-388.

Lahiri, S. N., Furukawa, K. and Lee, Y-D. (2006) A nonparametric plug-in rule for

selecting optimal block lengths for block bootstrap methods. Stat. Methodol. (in

press)

Liu, R.Y. and Singh, K. (1992) Moving blocks jackknife and bootstrap capture weak dependence. In Exploring the Limits of Bootstrap, 225-248, R. LePage and L. Billard

(editors). John Wiley & Sons, New York.

Nordman, D. J. and Lahiri, S. N. (2004) On optimal spatial subsample size for variance

estimation. Ann. Statist. 32, 1981-2027.

Politis, D. N. and Romano, J. P. (1993) Nonparametric resampling for homogeneous

strong mixing random fields. J. Multivariate Anal. 47, 301-328.

Politis, D. N. and Romano, J. P. (1994a) Large sample confidence regions based on

subsamples under minimal assumptions. Ann. Statist. 22, 2031-2050.

Politis, D. N. and Romano, J. P. (1994b) The stationary bootstrap. Journal of the American Statistical Association 89, 1303-1313.

20

Politis, D. N. and Sherman, M. (2001) Moment estimation for statistics from marked

point processes. J. R. Stat. Soc. Ser. B 63, 261-275.

Politis, D. N., Paparoditis, E. and Romano, J. P. (1999). Resampling marked point

processes. In Multivariate Analysis, Design of Experiments, and Survey Sampling: a

Tribute to J. N. Srivastava (Ed. - S Ghosh). Mercel Dekker, New York, 163-185.

Politis, D. N., Romano, J. P., and Wolf, M. (1999) Subsampling. Springer, New York.

Politis, D. N. and White, H. (2004) Automatic block-length selection for the dependent

bootstrap. Econometric Reviews 23 53-70.

Sherman, M. (1996) Variance estimation for statistics computed from spatial lattice data.

J. R. Stat. Soc. Ser. B 58, 509-523.

Sherman, M. and Carlstein, E. (1994) Nonparametric estimation of the moments of a

general statistic computed from spatial data. J. Amer. Statist. Assoc. 89, 496-500.

Zhu, J. and Lahiri, S.N. (2007) Weak convergence of blockwise bootstrapped empirical

processes for stationary random fields with statistical applications. Stat. Inference

Stoch. Process. 10, 107-145.

Daniel J. Nordman, Soumendra N. Lahiri

Brooke L. Fridley

Department of Statistics

Division of Biostatistics, Mayo Clinic

Iowa State University

200 First Street SW

Ames, IA 50010 USA

Rochester, MN 55905 USA

dnordman@iastate.edu, snlahiri@iastate.edu

Fridley.Brooke@mayo.edu

Figure 1: The blocking mechanism for the spatial block bootstrap method. (a) Partition of a

hexagonal sampling region Rn by subregions Rn (k), k ∈ Kn from (2); (b) Set of OL complete

blocks; (c) Set of NOL complete blocks; (d) Bootstrap version of the spatial process Z(·)

obtained by resampling and concatenating blocks from (b) or (c) in positions of complete

blocks from (a).

(a) complete block (b)

(c)

¢

¡

¡

¡

®¢¢ @

@

@

¡

¡

¡

@

¡ @

¡

@

@

@AK

@

¡

A incomplete block

@

¡

¡

(d)

@

@

@

¡

@

@

¡

¡

@

@

@

¡

¡

¡

21

¡

Table 1: Normalized bias Eσ̂n2 /σn2 − 1 and variance Var(σ̂n2 )/σn4 for OL block bootstrap

and OL subsampling estimators of σn2 = N Var(Z̄N ) when using optimal block scaling from

Figure 7). Estimates based on 100,000 simulations.

Rn = {x ∈ R2 : kxk ≤ 9}

Model(1, 1)

Bias

Rn = {x ∈ R2 : kxk ≤ 20}

Model(0.5, 0.3)

Var

Bias

Model(1, 1)

Var

Bias

Model(0.5, 0.3)

Var

Bias

Var

Bootstrap

-0.3895 0.0478

-0.6958 0.0283

-0.2629 0.0263

-0.4814 0.0543

Subsampling

-0.3855 0.0671

-0.7003 0.0328

-0.2679 0.0283

-0.4754 0.0536

Model(1,1), Circular Sampling Region Radius = 20

0.6

Model(1,1), Circular Sampling Region Radius = 9

0.5

0.4

0.2

0.1

2

4

6

8

10

12

2

4

6

8

10

12

14

16

18

block size b

Model(0.5,0.3), Circular Sampling Region Radius = 9

Model(0.5,0.3), Circular Sampling Region Radius = 20

0.7

0.8

normalized MSE

0.9

OL Block Boostrap (5)

NOL Block Bootstrap (5)

Subsampling (6)

0.5

2

4

6

8

10

12

0.3 0.4 0.5 0.6 0.7 0.8 0.9

block size b

0.6

normalized MSE

OL Block Boostrap (6)

NOL Block Bootstrap (6)

Subsampling (7)

0.3

normalized MSE

0.6

0.4

0.2

normalized MSE

0.8

OL Block Boostrap (4)

NOL Block Bootstrap (4)

Subsampling (5)

OL Block Boostrap (10)

NOL Block Bootstrap (9)

Subsampling (11)

2

4

block size b

6

8

10

12

14

16

18

block size b

Figure 2: Estimates of normalized MSE, E(σ̂n2 /σn2 − 1)2 , for OL/NOL spatial block bootstrap

variance estimators σ̂n2 (bn ) of σn2 = N Var(Z̄N ) for various block sizes bn as well as OL

subsampling variance estimator. Each estimated MSE is based on 100,000 simulations.

Values in (·) denote the optimal block sizes with minimal MSE.

22

0.04

0

0.02

MSE−criterion

0.06

Evaulations of MSE−criterion over 9 tuning parameter combinations

NPI

HHJ

_____________

region radius 9

Model(1,1)

NPI

HHJ

_____________

region radius 9

Model(0.5,0.3)

NPI

HHJ

_____________

region radius 20

Model(1,1)

NPI

HHJ

_____________

region radius 20

Model(0.5,0.3)

Figure 3: Dot plots of MSE-criterion (8) based on 9 combinations of tuning parameter

coefficients for each block selection method, circular sampling region Rn and covariance

model. The NPI method uses C1 , C2 ∈ {0.5, 0.75, 1}; the HHJ method uses C ∗ ∈ {1, 1.5, 2},

m ∈ {Cvol(Rn )1/6 : C = 2, 3, 4}.

23

Table 2: Frequency distribution of empirically chosen OL block bootstrap scaling with NPI

and HHJ methods (based on 1000 simulations). Along with C2 = 0.5, NPI1 and NPI2 use

C1 = 0.5 and 0.75, respectively. With C ∗ = 1, the HHJ ≡ HHJm method uses m = 5, 10

and 15, respectively. True optimal block sizes bopt

n,OL are determined from Figure 2.

Rn radius,

Model(·, ·)

Estimates b̂n,OL of optimal block size bopt

n,OL

Method

2

3

4

NPI1

76

924

9,

NPI2

646 354

(1,1)

HHJ5

103

HHJ10

8

5

6

7

8

9

10

11

12

4

897

288 597

104

2

NPI1

8

78

284

458

162

20,

NPI2

11

127

558

299

5

(1,1)

HHJ10

119

HHJ15

22

10

427 481

69

331

9,

NPI2

145 855

(0.5,0.3)

HHJ5

997

3

441

477

19

1

871

NPI1

HHJ10

10

6

1

669

5

4

1

NPI1

1

9

200

20,

NPI2

6

744

250

(0.5,0.3)

HHJ10

HHJ15

bopt

n,OL

58

5

6

926

242

657

95

624

166

10

1

2

2

2

2

Table 3: Normalized MSEs E(σ̂n,

for OL block bootstrap estimator σ̂n,

OL /σn − 1)

OL ≡

2

2

σ̂n,

OL (bn ) of MPLE variance σn = N Var(β̂n ), based on 1000 simulations for each block

bn . Also included, the distribution of OL block size estimates b̂NPI

n,OL using NPI method with

C1 = C2 = 0.5 (i.e., percentage of times that b̂NPI

n,OL = bn based on additional 500 simulations).

block size bn

1

2

3

4

5

6

7

MSE×102

1.654

0.939

0.896∗

1.006

1.326

1.879

2.450

b̂NPI

n,OL = bn %

9.0%

16.4%

24.8%

23.8%

16.0%

8.2%

1.8%

24