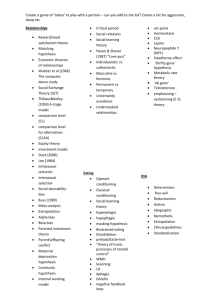


Introduction to Machine Learning

CSE474/574: Concept Learning

Varun Chandola <chandola@buffalo.edu>


Outline

1. Concept Learning
   - Example – Finding Malignant Tumors
   - Notation
   - Representing a Possible Concept - Hypothesis
   - Hypothesis Space
2. Learning Conjunctive Concepts
   - Find-S Algorithm
   - Version Spaces
   - LIST-THEN-ELIMINATE Algorithm
   - Compressing Version Space
   - Analyzing Candidate Elimination Algorithm
3. Inductive Bias


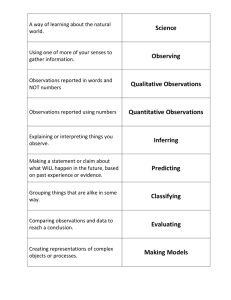

Concept Learning

Infer a boolean-valued function c : X → {true, false}

Input: Attributes for input x

Output: true if input belongs to concept, else false

Go from specific to general (Inductive Learning).


Finding Malignant Tumors from MRI Scans

Attributes

1. Shape: circular, oval
2. Size: large, small
3. Color: light, dark
4. Surface: smooth, irregular
5. Thickness: thin, thick

Concept

Malignant tumor.


Malignant vs. Benign Tumor


[Figure: MRI scans labeled Malignant, Malignant, Benign, Malignant]

A large, irregularly shaped, dark reddish blob.


Notation

X - Set of all possible instances.

What is |X|?

Example: {circular,small,dark,smooth,thin}

D - Training data set.

D = {⟨x, c(x)⟩ : x ∈ X, c(x) ∈ {0, 1}}

Typically, |D| ≪ |X|
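A minimal sketch for the |X| question, assuming the five attributes and value orderings listed earlier (the `ATTRIBUTES` dictionary is an illustrative name, not from the slides): the instance space is the Cartesian product of the attribute domains.

```python
from itertools import product

# Attribute domains of the tumor example (illustrative ordering).
ATTRIBUTES = {
    "shape": ("circular", "oval"),
    "size": ("large", "small"),
    "color": ("light", "dark"),
    "surface": ("smooth", "irregular"),
    "thickness": ("thin", "thick"),
}

# X is the Cartesian product of the attribute domains.
X = list(product(*ATTRIBUTES.values()))

print(len(X))  # 2 * 2 * 2 * 2 * 2 = 32
```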


Representing a Concept - Hypothesis

A conjunction over a subset of attributes

A malignant tumor is: circular and dark and thick

{circular,?,dark,?,thick}

Target concept c is unknown

Value of c over the training examples is known
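A sketch of how a conjunctive hypothesis might be evaluated on an instance, assuming hypotheses are Python tuples in which `"?"` means "any value" and `"∅"` means "no value"; the helper `h_of_x` is a hypothetical name used only for illustration.

```python
ANY = "?"    # the attribute may take any value
EMPTY = "∅"  # the attribute may take no value


def h_of_x(h, x):
    """Return 1 if the conjunctive hypothesis h accepts instance x, else 0."""
    for constraint, value in zip(h, x):
        if constraint == EMPTY:
            return 0  # an ∅ constraint rejects every instance
        if constraint != ANY and constraint != value:
            return 0  # a specific constraint must match exactly
    return 1


# "circular and dark and thick"
h = ("circular", ANY, "dark", ANY, "thick")
print(h_of_x(h, ("circular", "small", "dark", "smooth", "thick")))  # 1
print(h_of_x(h, ("oval", "small", "dark", "smooth", "thick")))      # 0
```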


Approximating Target Concept Through Hypothesis

Hypothesis: a potential concept

Example: {circular,?,?,?,?}

Hypothesis Space (H): Set of all hypotheses

What is |H|?

Special hypotheses:

Accept everything, {?,?,?,?,?}

Accept nothing, {∅, ∅, ∅, ∅, ∅}
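One way to explore the |H| question by brute force, assuming each attribute constraint is a specific value, `?`, or `∅`. Syntactically there are 4^5 hypotheses, but every hypothesis containing an `∅` accepts nothing, so grouping hypotheses by the set of instances they accept leaves 3^5 + 1 semantically distinct ones. The names `DOMAINS` and `accepts` are illustrative.

```python
from itertools import product

DOMAINS = [("circular", "oval"), ("large", "small"), ("light", "dark"),
           ("smooth", "irregular"), ("thin", "thick")]
ANY, EMPTY = "?", "∅"


def accepts(h, x):
    """Conjunctive match; any ∅ constraint rejects everything."""
    return all(c != EMPTY and (c == ANY or c == v) for c, v in zip(h, x))


X = list(product(*DOMAINS))

# Syntactic hypotheses: each attribute is one of its values, "?" or "∅".
H_syntactic = list(product(*[d + (ANY, EMPTY) for d in DOMAINS]))

# Semantically distinct hypotheses: group by the set of instances accepted.
extensions = {frozenset(x for x in X if accepts(h, x)) for h in H_syntactic}

print(len(H_syntactic))  # 4**5 = 1024
print(len(extensions))   # 3**5 + 1 = 244
```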


A Simple Algorithm (Find-S [2, Ch. 2])

1. Start with h = ∅
2. Use next input ⟨x, c(x)⟩
3. If c(x) = 0, goto step 2
4. h ← h ∧ x (pairwise-and)
5. If more examples: Goto step 2
6. Stop

Pairwise-and rules:

$$
a_h \wedge a_x =
\begin{cases}
a_x & \text{if } a_h = \emptyset \\
a_x & \text{if } a_h = a_x \\
? & \text{if } a_h \neq a_x \\
? & \text{if } a_h = ?
\end{cases}
$$
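The steps and pairwise-and rules can be sketched in Python as follows. This assumes instances are tuples of attribute values, labels c(x) are 0 or 1, and `∅`/`?` are the strings shown; `pairwise_and` and `find_s` are illustrative names. The three examples are a subset of the tumor data, chosen only to exercise the code.

```python
ANY, EMPTY = "?", "∅"


def pairwise_and(a_h, a_x):
    """One attribute of h ∧ x, following the pairwise-and rules."""
    if a_h == EMPTY or a_h == a_x:
        return a_x          # a_h = ∅ or a_h = a_x
    return ANY              # a_h = ? or a_h ≠ a_x


def find_s(D, n_attributes):
    """Find-S: D is a list of (x, c(x)) pairs, with c(x) in {0, 1}."""
    h = (EMPTY,) * n_attributes            # step 1: h = ∅
    for x, c_x in D:                       # steps 2 and 5: loop over examples
        if c_x == 0:                       # step 3: negatives are ignored
            continue
        h = tuple(pairwise_and(a_h, a_x)   # step 4: h <- h ∧ x
                  for a_h, a_x in zip(h, x))
    return h                               # step 6


D = [
    (("circular", "large", "light", "smooth", "thick"), 1),
    (("oval", "large", "dark", "smooth", "thin"), 0),
    (("oval", "large", "light", "irregular", "thick"), 1),
]
print(find_s(D, 5))  # ('?', 'large', 'light', '?', 'thick')
```

Since negatives are skipped and each step only replaces mismatching constraints with `?`, the hypothesis can only become more general, never more specific.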


Simple Example

Target concept

{?,large,?,?,thick}

How many positive examples can there be?

What is the minimum number of examples that need to be seen to learn the concept?

1. {circular,large,light,smooth,thick}, malignant
2. {oval,large,dark,irregular,thick}, malignant

Maximum?
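A quick check of this example, assuming noise-free data: the target accepts 2^3 = 8 instances (shape, color and surface are free), and the two listed positives disagree on every irrelevant attribute, so a single generalization step recovers the target. The `find_s` helper below is a simplified, positives-only variant written just for this sketch.

```python
from itertools import product

ANY = "?"
DOMAINS = [("circular", "oval"), ("large", "small"), ("light", "dark"),
           ("smooth", "irregular"), ("thin", "thick")]
target = (ANY, "large", ANY, ANY, "thick")


def accepts(h, x):
    return all(c == ANY or c == v for c, v in zip(h, x))


# Every instance the target labels malignant.
positives = [x for x in product(*DOMAINS) if accepts(target, x)]
print(len(positives))  # 8


def find_s(positive_examples):
    # Starting from the first positive is the same as generalizing h = ∅ once;
    # afterwards every mismatching attribute becomes "?".
    h = list(positive_examples[0])
    for x in positive_examples[1:]:
        h = [a if a == v else ANY for a, v in zip(h, x)]
    return tuple(h)


two = [("circular", "large", "light", "smooth", "thick"),
       ("oval", "large", "dark", "irregular", "thick")]
print(find_s(two) == target)  # True
```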


Partial Training Data

Target concept

{?,large,?,?,thick}

1. {circular,large,light,smooth,thick}, malignant
2. {circular,large,light,irregular,thick}, malignant
3. {oval,large,dark,smooth,thin}, benign
4. {oval,large,light,irregular,thick}, malignant
5. {circular,small,light,smooth,thick}, benign

Concept learnt:

{?,large,light,?,thick}

What mistake can this “concept” make?
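A sketch that runs Find-S on the five examples and compares the result with the target over all of X. Representation and helper names are assumptions of this sketch. Because the learnt hypothesis is more specific than the target, it can only produce false negatives: here, the four large, dark, thick instances.

```python
from itertools import product

ANY, EMPTY = "?", "∅"
DOMAINS = [("circular", "oval"), ("large", "small"), ("light", "dark"),
           ("smooth", "irregular"), ("thin", "thick")]


def accepts(h, x):
    return all(c != EMPTY and (c == ANY or c == v) for c, v in zip(h, x))


def find_s(examples):
    h = (EMPTY,) * len(DOMAINS)
    for x, label in examples:
        if label == 1:  # generalize only on positives
            h = tuple(v if a in (EMPTY, v) else ANY for a, v in zip(h, x))
    return h


D = [
    (("circular", "large", "light", "smooth", "thick"), 1),
    (("circular", "large", "light", "irregular", "thick"), 1),
    (("oval", "large", "dark", "smooth", "thin"), 0),
    (("oval", "large", "light", "irregular", "thick"), 1),
    (("circular", "small", "light", "smooth", "thick"), 0),
]
target = (ANY, "large", ANY, ANY, "thick")
learned = find_s(D)
print(learned)  # ('?', 'large', 'light', '?', 'thick')

X = list(product(*DOMAINS))
false_neg = [x for x in X if accepts(target, x) and not accepts(learned, x)]
false_pos = [x for x in X if accepts(learned, x) and not accepts(target, x)]
print(len(false_neg), len(false_pos))  # 4 0
```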


Recap of Find-S

Objective: Find maximally specific hypothesis

Admit all positive examples and nothing more

Hypothesis never becomes any more specific

Questions

Does it converge to the target concept?

Is the most specific hypothesis the best?

Robustness to errors

Choosing the best among potentially many maximally specific hypotheses


Version Spaces

1. {circular,large,light,smooth,thick}, malignant
2. {circular,large,light,irregular,thick}, malignant
3. {oval,large,dark,smooth,thin}, benign
4. {oval,large,light,irregular,thick}, malignant
5. {circular,small,light,smooth,thick}, benign

Hypothesis chosen by Find-S:

{?,large,light,?,thick}

Other possibilities that are consistent with the training data?

What is consistency?

Version space: Set of all consistent hypotheses.


List Then Eliminate

1. VS ← H
2. For each ⟨x, c(x)⟩ ∈ D: remove every hypothesis h from VS such that h(x) ≠ c(x)
3. Return VS

Issues?

How many hypotheses are removed at every instance?
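A direct, brute-force sketch of LIST-THEN-ELIMINATE on the tumor data. It assumes H is the 3^5 + 1 semantically distinct conjunctive hypotheses and records |VS| after each example, which for this data goes 244 → 32 → 16 → 14 → 6 → 3. Enumerating H explicitly like this is what stops scaling once the number of attributes grows.

```python
from itertools import product

ANY, EMPTY = "?", "∅"
DOMAINS = [("circular", "oval"), ("large", "small"), ("light", "dark"),
           ("smooth", "irregular"), ("thin", "thick")]


def accepts(h, x):
    """h(x) as 0/1 for a conjunctive hypothesis."""
    return int(all(c != EMPTY and (c == ANY or c == v) for c, v in zip(h, x)))


def list_then_eliminate(H, D):
    vs = list(H)                                       # 1. VS <- H
    for x, c_x in D:                                   # 2. for each <x, c(x)> in D
        vs = [h for h in vs if accepts(h, x) == c_x]   #    drop h with h(x) != c(x)
    return vs                                          # 3. return VS


# Assumed H: the 3**5 conjunctions over values and "?", plus the empty hypothesis.
H = list(product(*[d + (ANY,) for d in DOMAINS])) + [(EMPTY,) * len(DOMAINS)]

D = [
    (("circular", "large", "light", "smooth", "thick"), 1),
    (("circular", "large", "light", "irregular", "thick"), 1),
    (("oval", "large", "dark", "smooth", "thin"), 0),
    (("oval", "large", "light", "irregular", "thick"), 1),
    (("circular", "small", "light", "smooth", "thick"), 0),
]

# |VS| before any data and after each successive example.
sizes = [len(list_then_eliminate(H, D[:k])) for k in range(len(D) + 1)]
print(sizes)  # [244, 32, 16, 14, 6, 3]

VS = list_then_eliminate(H, D)
print(VS)
```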


Compressing Version Space

More General Than Relationship

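$$h_j \geq_g h_k \quad \text{if} \quad \forall x \in X:\; h_k(x) = 1 \Rightarrow h_j(x) = 1$$

$$h_j >_g h_k \quad \text{if} \quad (h_j \geq_g h_k) \wedge (h_k \not\geq_g h_j)$$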

In a version space, there are:

1. Maximally general hypotheses (the set G)
2. Maximally specific hypotheses (the set S)

These form the boundaries of the version space.
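For conjunctive hypotheses, the ≥g relation can be checked attribute by attribute instead of quantifying over all of X. The sketch below (function names are illustrative) implements both the definition and the attribute-wise shortcut so the two can be compared.

```python
from itertools import product

ANY, EMPTY = "?", "∅"
DOMAINS = [("circular", "oval"), ("large", "small"), ("light", "dark"),
           ("smooth", "irregular"), ("thin", "thick")]
X = list(product(*DOMAINS))


def accepts(h, x):
    return all(c != EMPTY and (c == ANY or c == v) for c, v in zip(h, x))


def geq_by_definition(hj, hk):
    """h_j >=_g h_k: every instance accepted by h_k is also accepted by h_j."""
    return all(accepts(hj, x) for x in X if accepts(hk, x))


def geq(hj, hk):
    """The same relation for conjunctions, checked attribute by attribute."""
    # An h_k containing ∅ accepts nothing, so the implication holds vacuously.
    return EMPTY in hk or all(a == ANY or a == b for a, b in zip(hj, hk))


def gt(hj, hk):
    """Strictly more general: h_j >=_g h_k and not h_k >=_g h_j."""
    return geq(hj, hk) and not geq(hk, hj)


s = (ANY, "large", "light", ANY, "thick")
g = (ANY, "large", ANY, ANY, "thick")
print(gt(g, s), gt(s, g))  # True False
```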


Example

1. {circular,large,light,smooth,thick}, malignant
2. {circular,large,light,irregular,thick}, malignant
3. {oval,large,dark,smooth,thin}, benign
4. {oval,large,light,irregular,thick}, malignant
5. {circular,small,light,smooth,thick}, benign

Hypotheses consistent with these examples: {?,large,light,?,thick}, {?,large,?,?,thick}, {?,large,light,?,?}


Example (2)


Specific: {?,large,light,?,thick}

General: {?,large,?,?,thick}, {?,large,light,?,?}


Boundaries are Enough to Capture Version Space

Version Space Representation Theorem

Every hypothesis h in the version space is contained within at least one pair of hypotheses, g and s, such that g ∈ G and s ∈ S, i.e.:

$$g \geq_g h \geq_g s$$
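A sketch that checks the theorem on the five tumor examples: compute the version space by brute force, take its minimal elements as S and its maximal elements as G, and confirm that every member lies between some g ∈ G and some s ∈ S. The representation and helper names are assumptions of this sketch.

```python
from itertools import product

ANY, EMPTY = "?", "∅"
DOMAINS = [("circular", "oval"), ("large", "small"), ("light", "dark"),
           ("smooth", "irregular"), ("thin", "thick")]


def accepts(h, x):
    return all(c != EMPTY and (c == ANY or c == v) for c, v in zip(h, x))


def geq(hj, hk):
    """h_j >=_g h_k for conjunctive hypotheses."""
    return EMPTY in hk or all(a == ANY or a == b for a, b in zip(hj, hk))


def gt(hj, hk):
    return geq(hj, hk) and not geq(hk, hj)


H = list(product(*[d + (ANY,) for d in DOMAINS])) + [(EMPTY,) * len(DOMAINS)]
D = [
    (("circular", "large", "light", "smooth", "thick"), 1),
    (("circular", "large", "light", "irregular", "thick"), 1),
    (("oval", "large", "dark", "smooth", "thin"), 0),
    (("oval", "large", "light", "irregular", "thick"), 1),
    (("circular", "small", "light", "smooth", "thick"), 0),
]
VS = [h for h in H if all(accepts(h, x) == bool(c) for x, c in D)]

# Boundaries: S holds the minimal elements of VS, G the maximal ones.
S = [h for h in VS if not any(gt(h, k) for k in VS)]
G = [h for h in VS if not any(gt(k, h) for k in VS)]

# Representation theorem: every h in VS lies between some g in G and s in S.
assert all(any(geq(g, h) and geq(h, s) for g in G for s in S) for h in VS)
print("S =", S)  # S = [('?', 'large', 'light', '?', 'thick')]
print("G =", G)  # G = [('?', 'large', 'light', '?', '?'), ('?', 'large', '?', '?', 'thick')]
```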


Candidate Elimination Algorithm

1. Initialize S0 = {∅}, G0 = {?, ?, . . . , ?}
2. For every training example, d = ⟨x, c(x)⟩:

   If c(x) = +ve:
   1. Remove from G any g for which g(x) ≠ +ve
   2. For every s ∈ S such that s(x) ≠ +ve:
      1. Remove s from S
      2. For every minimal generalization s′ of s: if s′(x) = +ve and there exists g ∈ G such that g ≥g s′, add s′ to S
   3. Remove from S all hypotheses that are more general than another hypothesis in S

   If c(x) = −ve:
   1. Remove from S any s for which s(x) ≠ −ve
   2. For every g ∈ G such that g(x) ≠ −ve:
      1. Remove g from G
      2. For every minimal specialization g′ of g: if g′(x) = −ve and there exists s ∈ S such that g′ ≥g s, add g′ to G
   3. Remove from G all hypotheses that are more specific than another hypothesis in G
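A compact Python sketch of the algorithm for conjunctive hypotheses, using the slide abbreviations for attribute values. For conjunctions, the minimal generalization of s that covers x is unique (the pairwise-and of Find-S), and the minimal specializations of g that exclude x replace one `?` with a value different from x's. These helpers and all function names are assumptions of this sketch rather than part of the slides. The usage runs the four examples of the worked trace.

```python
ANY, EMPTY = "?", "∅"

# Attribute domains (slide abbreviations): shape, size, color, surface, thickness.
DOMAINS = [("ci", "ov"), ("la", "sm"), ("li", "dk"), ("sh", "ir"), ("tn", "th")]


def accepts(h, x):
    """h(x) for a conjunctive hypothesis; any ∅ constraint rejects everything."""
    return all(c != EMPTY and (c == ANY or c == v) for c, v in zip(h, x))


def more_general_or_equal(hj, hk):
    """h_j >=_g h_k for conjunctions (an h_k containing ∅ accepts nothing)."""
    return EMPTY in hk or all(a == ANY or a == b for a, b in zip(hj, hk))


def min_generalization(s, x):
    """The unique minimal conjunctive generalization of s that accepts x."""
    return tuple(v if a in (EMPTY, v) else ANY for a, v in zip(s, x))


def min_specializations(g, x):
    """Minimal specializations of g that reject x: fix one '?' to a value != x_i."""
    out = []
    for i, (a, v) in enumerate(zip(g, x)):
        if a == ANY:
            for value in DOMAINS[i]:
                if value != v:
                    out.append(g[:i] + (value,) + g[i + 1:])
    return out


def candidate_elimination(D):
    n = len(DOMAINS)
    S, G = {(EMPTY,) * n}, {(ANY,) * n}
    for x, c_x in D:
        if c_x == 1:  # positive example
            G = {g for g in G if accepts(g, x)}
            for s in [s for s in S if not accepts(s, x)]:
                S.remove(s)
                s2 = min_generalization(s, x)          # accepts x by construction
                if any(more_general_or_equal(g, s2) for g in G):
                    S.add(s2)
            S = {s for s in S
                 if not any(t != s and more_general_or_equal(s, t) for t in S)}
        else:  # negative example
            S = {s for s in S if not accepts(s, x)}
            for g in [g for g in G if accepts(g, x)]:
                G.remove(g)
                for g2 in min_specializations(g, x):   # rejects x by construction
                    if any(more_general_or_equal(g2, s) for s in S):
                        G.add(g2)
            G = {g for g in G
                 if not any(t != g and more_general_or_equal(t, g) for t in G)}
    return S, G


trace = [
    (("ci", "la", "li", "sh", "th"), 1),
    (("ci", "la", "li", "ir", "th"), 1),
    (("ov", "sm", "li", "sh", "tn"), 0),
    (("ov", "la", "li", "ir", "th"), 1),
]
S, G = candidate_elimination(trace)
print(sorted(S))  # [('?', 'la', 'li', '?', 'th')]
print(sorted(G))  # [('?', '?', '?', '?', 'th'), ('?', 'la', '?', '?', '?')]
```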


Example

S0 = {∅}, G0 = {?,?,?,?,?}

1. ⟨{ci,la,li,sh,th}, +ve⟩: S1 = {ci,la,li,sh,th}; G unchanged
2. ⟨{ci,la,li,ir,th}, +ve⟩: S2 = {ci,la,li,?,th}; G unchanged
3. ⟨{ov,sm,li,sh,tn}, −ve⟩: the minimal specializations of {?,?,?,?,?} are {ci,?,?,?,?}, {?,la,?,?,?}, {?,?,dk,?,?}, {?,?,?,ir,?}, {?,?,?,?,th}; keeping only those more general than S2 gives G3 = {ci,?,?,?,?}, {?,la,?,?,?}, {?,?,?,?,th}
4. ⟨{ov,la,li,ir,th}, +ve⟩: S4 = {?,la,li,?,th}; {ci,?,?,?,?} rejects this positive example, so G4 = {?,la,?,?,?}, {?,?,?,?,th}


Understanding Candidate Elimination

S and G boundaries move towards each other

Will it converge to the target concept? Yes, provided that:

1. There are no errors in the training examples
2. There is sufficient training data
3. The target concept is in H

Why is it better than Find-S?


Not Sufficient Training Examples

Use boundary sets S and G to make predictions on a new instance x∗

Case 1: every hypothesis in S classifies x∗ as +ve, so every hypothesis in the version space does too

Case 2: every hypothesis in G classifies x∗ as −ve, so every hypothesis in the version space does too
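The two cases can be sketched as a prediction rule that looks only at the boundary sets. This sketch assumes a non-empty version space and uses the S and G of the partially learnt example (slide abbreviations); instances that fall into neither case are returned as `?`. The function name `predict` is illustrative.

```python
ANY = "?"


def accepts(h, x):
    return all(c == ANY or c == v for c, v in zip(h, x))


def predict(S, G, x):
    """Classify x from the boundaries alone (assumes S and G are non-empty)."""
    if all(accepts(s, x) for s in S):
        return "+ve"   # Case 1: every h in VS is >=_g some s, so all accept x
    if not any(accepts(g, x) for g in G):
        return "-ve"   # Case 2: every h in VS is <=_g some g, so all reject x
    return "?"         # hypotheses in the version space disagree


S = [(ANY, "la", "li", ANY, "th")]
G = [(ANY, "la", ANY, ANY, ANY), (ANY, ANY, ANY, ANY, "th")]

queries = [("ci", "la", "li", "sh", "th"), ("ov", "sm", "li", "ir", "tn"),
           ("ov", "la", "dk", "ir", "th"), ("ci", "la", "li", "ir", "tn")]
for x in queries:
    print(x, predict(S, G, x))  # +ve, -ve, ?, ? in turn
```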


Partially Learnt Concepts - Example

Version space (from S down to G):

S: {?,la,li,?,th}

{?,la,li,?,?}, {?,la,?,?,th}, {?,?,li,?,th}

G: {?,la,?,?,?}, {?,?,?,?,th}

New instances to classify:

1. {ci,la,li,sh,th}, ?
2. {ov,sm,li,ir,tn}, ?
3. {ov,la,dk,ir,th}, ?
4. {ci,la,li,ir,tn}, ?

Using Partial Version Spaces

Halving Algorithm

Predict using the majority of concepts in the version space

Randomized Halving Algorithm [1]

Predict using a randomly selected member of the version space
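A sketch of both strategies on the six hypotheses of the partially learnt version space, for its four query instances. How ties are broken is not specified on the slide, so this sketch simply reports them; `random.Random` is seeded only to make runs repeatable, and the function names are illustrative.

```python
import random

ANY = "?"


def accepts(h, x):
    return all(c == ANY or c == v for c, v in zip(h, x))


# The six hypotheses of the partially learnt version space (slide abbreviations).
VS = [(ANY, "la", "li", ANY, "th"), (ANY, "la", "li", ANY, ANY),
      (ANY, "la", ANY, ANY, "th"), (ANY, "la", ANY, ANY, ANY),
      (ANY, ANY, "li", ANY, "th"), (ANY, ANY, ANY, ANY, "th")]


def halving_predict(vs, x):
    """Majority vote of the version space; returns '+ve', '-ve' or 'tie'."""
    pos = sum(accepts(h, x) for h in vs)
    neg = len(vs) - pos
    if pos == neg:
        return "tie"   # tie-breaking rule is left open in this sketch
    return "+ve" if pos > neg else "-ve"


def randomized_halving_predict(vs, x, rng):
    """Predict with one hypothesis drawn uniformly at random from the version space."""
    return "+ve" if accepts(rng.choice(vs), x) else "-ve"


queries = [("ci", "la", "li", "sh", "th"), ("ov", "sm", "li", "ir", "tn"),
           ("ov", "la", "dk", "ir", "th"), ("ci", "la", "li", "ir", "tn")]
for x in queries:
    print(x, halving_predict(VS, x))  # +ve, -ve, tie, -ve in turn

rng = random.Random(0)
print(randomized_halving_predict(VS, queries[2], rng))
```

The third query splits the version space 3 to 3, and the fourth is accepted by only 2 of the 6 hypotheses.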


How many target concepts can there be?

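A target concept labels every example in X, so there are $2^{|X|}$ possible concepts (C), where, for d attributes with the i-th taking n_i values,

$$|X| = \prod_{i=1}^{d} n_i$$

The conjunctive hypothesis space H has

$$\prod_{i=1}^{d} (n_i + 1)$$

possibilities (each attribute is one of its n_i values or ?), plus the single hypothesis that accepts nothing.

Why this difference?

Hypothesis Assumption

Target concept is conjunctive.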
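Plugging the tumor example (d = 5 binary attributes) into these formulas, in a small arithmetic sketch, shows how much smaller the conjunctive hypothesis space is than the space of all concepts.

```python
from math import prod

n = [2, 2, 2, 2, 2]  # values per attribute (shape, size, color, surface, thickness)

size_X = prod(n)                     # |X| = prod_i n_i
size_C = 2 ** size_X                 # every subset of X is a possible concept
size_H = prod(k + 1 for k in n) + 1  # prod_i (n_i + 1) conjunctions, plus "accept nothing"

print(size_X, size_C, size_H)  # 32 4294967296 244
```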


Inductive Bias

[Figure: the concept space C and the hypothesis space H, with the concepts {ci,?,?,?,?} and {ci,?,?,?,?} ∨ {?,?,?,?,th} marked]

Bias Free Learning – C ≡ H

Simple tumor example: 2 attributes - size (sm/lg) and shape (ov/ci)

Target label - malignant (+ve) or benign (-ve)

|X| = 4

|C| = 16


Bias Free Learning is Futile

A learner making no assumption about the target concept cannot classify any unseen instance

Inductive Bias

Set of assumptions made by a learner to generalize from training examples.
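A sketch of why bias-free learning is futile, using the two-attribute example with C ≡ H (every subset of X is a concept). The two training examples are hypothetical; whichever consistent examples are chosen, each unseen instance is labeled +ve by exactly half of the concepts that remain, so voting cannot decide.

```python
from itertools import combinations, product

X = list(product(("sm", "lg"), ("ov", "ci")))  # size x shape, |X| = 4

# Bias-free hypothesis space: every subset of X is a concept (|C| = 16).
C = [frozenset(s) for r in range(len(X) + 1) for s in combinations(X, r)]

# Hypothetical training data: (instance, label) with 1 = malignant.
D = [(("lg", "ci"), 1), (("sm", "ov"), 0)]

# Version space: concepts that agree with every training label.
VS = [c for c in C if all((x in c) == bool(label) for x, label in D)]

seen = {x for x, _ in D}
for x in X:
    if x not in seen:
        votes = sum(x in c for c in VS)
        print(x, f"{votes} of {len(VS)} consistent concepts say +ve")  # 2 of 4 each
```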


Examples of Inductive Bias

Rote Learner – No Bias

Candidate Elimination – Stronger Bias

Find-S – Strongest Bias


References

[1] W. Maass. On-line learning with an oblivious environment and the power of randomization. In Proceedings of the Fourth Annual Workshop on Computational Learning Theory, COLT ’91, pages 167–178, San Francisco, CA, USA, 1991. Morgan Kaufmann Publishers Inc.

[2] T. M. Mitchell. Machine Learning. McGraw-Hill, Inc., New York, NY, USA, 1st edition, 1997.