Lecture 25

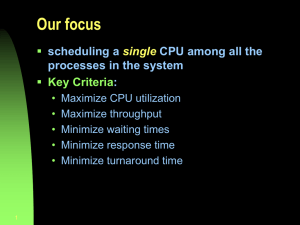

COT 4600 Operating Systems, Fall 2009
Dan C. Marinescu
Office: HEC 439 B
Office hours: Tu-Th 3:00-4:00 PM

Lecture 25

Attention: project phase 4 and HW 6 are due Tuesday, November 24.

Last time: Multi-level memories
- Memory characterization
- Multilevel memory management using virtual memory
- Adding multi-level memory management to virtual memory

Today:
- Final exam: Thursday, December 10, 4:00-6:50 PM
- Scheduling

Next time: Network properties (Chapter 7), available online from the publisher of the textbook

Scheduling

The process of allocating resources, e.g., CPU cycles, to threads/processes.

Distinguish:
- Policies
- Mechanisms to implement policies

Scheduling problems have evolved over time:
- Early on: emphasis on CPU scheduling
- Now: more interest in transaction processing and I/O optimization

Scheduling decisions are made at different levels of abstraction, and it is not always easy to mediate among them.

Example: an overloaded transaction processing system
- Incoming transactions are queued in a buffer which may fill up;
- The interrupt handler is constantly invoked as dropped requests are reissued;
- The transaction processing thread has no chance to empty the buffer;
- Solution: when the buffer is full, disable the interrupts caused by incoming transactions and allow the transaction processing thread to run.

Scheduling objectives

Performance metrics:
- CPU utilization: fraction of time the CPU does useful work over total time
- Throughput: number of jobs finished per unit of time
- Turnaround time: time spent by a job in the system
- Response time: time to get the results
- Waiting time: time waiting to start processing

All of these are random variables; we are interested in their averages.
The objectives of system managers (M) and users (U):
- Maximize CPU utilization (M)
- Maximize throughput (M)
- Minimize turnaround time (U)
- Minimize waiting time (U)
- Minimize response time (U)

CPU burst

CPU burst: the time required by the thread/process to execute.

Scheduling policies
- First-Come, First-Served (FCFS)
- Shortest Job First (SJF)
- Round Robin (RR)
- Preemptive/non-preemptive scheduling

First-Come, First-Served (FCFS)

| Thread | Burst time |
|--------|------------|
| P1 | 24 |
| P2 | 3 |
| P3 | 3 |

Processes arrive in the order: P1, P2, P3.
Gantt chart for the schedule: P1 (0-24), P2 (24-27), P3 (27-30).
Waiting time for P1 = 0; P2 = 24; P3 = 27.
Average waiting time: (0 + 24 + 27)/3 = 17.
Convoy effect: short processes wait behind a long process.

FCFS Scheduling (cont'd)

Now threads arrive in the order: P2, P3, P1.
Gantt chart: P2 (0-3), P3 (3-6), P1 (6-30).
Waiting time for P1 = 6; P2 = 0; P3 = 3.
Average waiting time: (6 + 0 + 3)/3 = 3. Much better!

Shortest-Job-First (SJF)

Use the length of the next CPU burst to schedule the thread/process with the shortest time.
SJF is optimal: it gives the minimum average waiting time for a given set of threads/processes.
Two schemes:
- Non-preemptive: the thread/process cannot be preempted until it completes its CPU burst.
- Preemptive: if a new thread/process arrives with a CPU burst length less than the remaining time of the currently executing process, preempt. This scheme is known as Shortest-Remaining-Time-First (SRTF).

Example of non-preemptive SJF

| Thread | Arrival time | Burst time |
|--------|--------------|------------|
| P1 | 0.0 | 7 |
| P2 | 2.0 | 4 |
| P3 | 4.0 | 1 |
| P4 | 5.0 | 4 |

SJF (non-preemptive) Gantt chart: P1 (0-7), P3 (7-8), P2 (8-12), P4 (12-16).
Average waiting time = (0 + 6 + 3 + 7)/4 = 4.

Example of Shortest-Remaining-Time-First (SRTF) (preemptive SJF)

| Thread | Arrival time | Burst time |
|--------|--------------|------------|
| P1 | 0.0 | 7 |
| P2 | 2.0 | 4 |
| P3 | 4.0 | 1 |
| P4 | 5.0 | 4 |

SRTF Gantt chart: P1 (0-2), P2 (2-4), P3 (4-5), P2 (5-7), P4 (7-11), P1 (11-16).
Average waiting time = (9 + 1 + 0 + 2)/4 = 3.

Round Robin (RR)

Each process gets a small unit of CPU time (time quantum), usually 10-100 milliseconds. After this time has elapsed, the thread/process is preempted and added to the end of the ready queue.
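The round-robin rule just described can be sketched in a few lines of Python. This is only an illustrative sketch, not the lecture's own code: the function name `round_robin` is hypothetical, all threads are assumed to arrive at time 0, and context-switch time is ignored. The burst times and the quantum are those of the q = 20 example in this lecture.

```python
from collections import deque

def round_robin(bursts, quantum):
    """Return the schedule as a list of (thread, start, end) slices.

    Assumptions (not from the lecture): every thread is ready at time 0
    and a context switch costs nothing.
    """
    ready = deque(bursts.items())        # ready queue of (name, remaining time)
    clock = 0
    timeline = []
    while ready:
        name, remaining = ready.popleft()
        run = min(quantum, remaining)    # run one quantum or until the burst ends
        timeline.append((name, clock, clock + run))
        clock += run
        if remaining > run:              # unfinished: back to the end of the queue
            ready.append((name, remaining - run))
    return timeline

timeline = round_robin({"P1": 53, "P2": 17, "P3": 68, "P4": 24}, quantum=20)
for name, start, end in timeline:
    print(f"{name}: {start}-{end}")
```

The printed slices can be compared directly with the Gantt chart of the q = 20 example.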
If there are n threads/processes in the ready queue and the time quantum is q, then each thread/process gets 1/n of the CPU time in chunks of at most q time units. No thread/process waits more than (n-1)q time units.

Performance:
- q large: RR behaves like FIFO (FCFS).
- q small: q must still be large with respect to the context switch time, otherwise the overhead is too high.

RR with time slice q = 20

| Thread | Burst time |
|--------|------------|
| P1 | 53 |
| P2 | 17 |
| P3 | 68 |
| P4 | 24 |

Gantt chart: P1 (0-20), P2 (20-37), P3 (37-57), P4 (57-77), P1 (77-97), P3 (97-117), P4 (117-121), P1 (121-134), P3 (134-154), P3 (154-162).
Typically, RR has a higher average turnaround time than SJF, but better response time.

[Figure: time slice (quantum) and context switch time]

[Figure: turnaround time as a function of the time quantum]

| Policy | Job | Arrival time | Work | Start time | Finish time | Wait time till start | Time in system |
|--------|-----|--------------|------|------------|-------------|----------------------|----------------|
| FCFS | A | 0 | 3 | 0 | 3 | 0 | 3 |
| FCFS | B | 1 | 5 | 3 | 3 + 5 = 8 | 3 - 1 = 2 | 8 - 1 = 7 |
| FCFS | C | 3 | 2 | 8 | 8 + 2 = 10 | 8 - 3 = 5 | 10 - 3 = 7 |
| SJF | A | 0 | 3 | 0 | 3 | 0 | 3 |
| SJF | B | 1 | 5 | 5 | 5 + 5 = 10 | 4 | 10 - 1 = 9 |
| SJF | C | 3 | 2 | 3 | 3 + 2 = 5 | 0 | 5 - 3 = 2 |
| RR | A | 0 | 3 | 0 | 6 | 0 | 6 - 0 = 6 |
| RR | B | 1 | 5 | 1 | 10 | 1 - 1 = 0 | 10 - 1 = 9 |
| RR | C | 3 | 2 | 5 | 8 | 5 - 3 = 2 | 8 - 3 = 5 |

| Scheduling policy | Average waiting time till the job started | Average time in system |
|-------------------|-------------------------------------------|------------------------|
| FCFS | 7/3 | 17/3 |
| SJF | 4/3 | 14/3 |
| RR | 2/3 | 20/3 |

Priority scheduling

Each thread/process has a priority, and the one with the highest priority (smallest integer = highest priority) is scheduled next.
- Preemptive
- Non-preemptive

SJF is a priority scheduling policy where the priority is the predicted next CPU burst time.
Problem: starvation. Low-priority threads/processes may never execute.
Solution to starvation: aging. As time progresses, increase the priority of the thread/process.
Priority may be computed dynamically.

Priority inversion

A lower-priority thread/process prevents a higher-priority one from running.
T3 has the highest priority, T1 has the lowest priority; T1 and T3 share a lock.
T1 acquires the lock, then it is suspended when T3 starts.
Eventually T3 requests the lock and is suspended, waiting for T1 to release the lock.
T2 has higher priority than T1 and runs; neither T3 nor T1 can run: T1 due to its low priority, T3 because it needs the lock held by T1.
Solution to priority inversion (priority inheritance): allow a low-priority thread holding a lock to run with the higher priority of the thread that requests the lock.

Estimating the length of the next CPU burst

Done using the length of previous CPU bursts, using exponential averaging:

$$\tau_{n+1} = \alpha\, t_n + (1 - \alpha)\, \tau_n$$

1. $t_n$ = actual length of the $n$-th CPU burst
2. $\tau_{n+1}$ = predicted value for the next CPU burst
3. $\alpha$, $0 \le \alpha \le 1$

Exponential averaging

- $\alpha = 0$: $\tau_{n+1} = \tau_n$. Recent history does not count.
- $\alpha = 1$: $\tau_{n+1} = t_n$. Only the actual last CPU burst counts.

If we expand the formula, we get:

$$\tau_{n+1} = \alpha\, t_n + (1 - \alpha)\,\alpha\, t_{n-1} + \dots + (1 - \alpha)^{j}\,\alpha\, t_{n-j} + \dots + (1 - \alpha)^{n+1}\, \tau_0$$

Since both $\alpha$ and $(1 - \alpha)$ are less than or equal to 1, each successive term has less weight than its predecessor.

[Figure: predicting the length of the next CPU burst]

Multilevel queue

The ready queue is partitioned into separate queues, each with its own scheduling algorithm:
- foreground (interactive): RR
- background (batch): FCFS

Scheduling between the queues:
- Fixed priority scheduling (i.e., serve all from foreground, then from background). Possibility of starvation.
- Time slice: each queue gets a certain amount of CPU time which it can schedule among its processes; e.g., 80% to foreground in RR and 20% to background in FCFS.

[Figure: multilevel queue scheduling]

Multilevel feedback queue

A process can move between the various queues; aging can be implemented this way.
A multilevel-feedback-queue scheduler is characterized by:
- the number of queues
- the scheduling algorithm for each queue
- the strategy for deciding when to upgrade/demote a process
- the strategy for deciding which queue a process enters when it needs service

Example of a multilevel feedback queue

Three queues:
- Q0: RR with time quantum 8 milliseconds
- Q1: RR with time quantum 16 milliseconds
- Q2: FCFS

Scheduling:
- A new job enters queue Q0, which is served FCFS. When it gains the CPU, the job receives 8 milliseconds. If it does not finish in 8 milliseconds, the job is moved to queue Q1.
- At Q1 the job is again served FCFS and receives 16 additional milliseconds. If it still does not complete, it is preempted and moved to queue Q2.
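The three-queue example above can be sketched as a small simulation. This is a simplified illustration rather than a definitive implementation: the function name `mlfq` and the job names and burst times are hypothetical, all jobs are assumed to arrive at time 0, and a lower queue is assumed to be served only when the queues above it are empty (the lecture does not state the rule used between queues).

```python
from collections import deque

def mlfq(bursts, quanta=(8, 16)):
    """Simulate Q0 (quantum 8), Q1 (quantum 16), Q2 (FCFS, run to completion).

    Returns {job: (finish_time, index_of_queue_where_it_finished)}.
    Assumptions: all jobs arrive at time 0, no context-switch cost, and
    strict priority between queues (Q0 before Q1 before Q2).
    """
    queues = [deque(bursts), deque(), deque()]   # every new job enters Q0
    remaining = dict(bursts)
    clock = 0
    finished = {}
    # With no later arrivals, Q0 never refills, so the queues can be
    # drained one level at a time.
    for level, queue in enumerate(queues):
        while queue:
            job = queue.popleft()
            if level < 2:
                run = min(quanta[level], remaining[job])  # one quantum at most
            else:
                run = remaining[job]                      # FCFS: run to the end
            clock += run
            remaining[job] -= run
            if remaining[job] == 0:
                finished[job] = (clock, level)
            else:
                queues[level + 1].append(job)             # demote unfinished job
    return finished

result = mlfq({"A": 5, "B": 20, "C": 40})   # hypothetical burst times in ms
print(result)
```

In this run the short job A finishes inside its first 8 ms quantum in Q0, B needs the extra 16 ms of Q1, and only the long job C is demoted all the way to Q2.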
[Figure: multilevel feedback queues]

Unix scheduler

The higher the number quantifying the priority, the lower the actual process priority.
Priority = (recent CPU usage)/2 + base
Recent CPU usage: how often the process has used the CPU since the last time priorities were calculated.
Does this strategy raise or lower the priority of a CPU-bound process?
Example: base = 60; recent CPU usage: P1 = 40, P2 = 18, P3 = 10. The formula gives P1 = 80, P2 = 69, P3 = 65, so P1, the heaviest CPU user, ends up with the lowest actual priority.

Comparison of scheduling algorithms

MFQ = Multi-Level Feedback Queue, SJF = Shortest Job First, SRJN = Shortest Remaining Job Next.

| | Round Robin | FCFS | MFQ | SJF | SRJN |
|---|---|---|---|---|---|
| Throughput | May be low if quantum is too small | Not emphasized | May be low if quantum is too small | High | High |
| Response time | Shortest average response time if quantum chosen correctly | May be poor | Good for I/O-bound but poor for CPU-bound processes | Good for short processes, but may be poor for longer processes | Good for short processes, but may be poor for longer processes |
| IO-bound | No distinction between CPU-bound and IO-bound | No distinction between CPU-bound and IO-bound | Gets a high priority if CPU-bound processes are present | No distinction between CPU-bound and IO-bound | No distinction between CPU-bound and IO-bound |
| Infinite postponement | Does not occur | Does not occur | May occur for CPU-bound processes | May occur for processes with long estimated running times | May occur for processes with long estimated running times |
| Overhead | Low | The lowest | Can be high: complex data structures and processing routines | Can be high: routine to find the shortest job for each reschedule | Can be high: routine to find the minimum remaining time for each reschedule |
| CPU-bound | No distinction between CPU-bound and IO-bound | No distinction between CPU-bound and IO-bound | Gets a low priority if IO-bound processes are present | No distinction between CPU-bound and IO-bound | No distinction between CPU-bound and IO-bound |

Terminology for scheduling algorithms

A scheduling problem is defined by $(\alpha, \beta, \gamma)$:
- $\alpha$: the machine environment
- $\beta$: a set of side constraints and characteristics
- $\gamma$: the optimality criterion

Machine environments:
- 1: One machine.
- P: Parallel identical machines.
- Q: Parallel machines of different speeds.
- R: Parallel unrelated machines.
- O: Open shop. m specialized machines; a job requires a number of operations, each demanding processing by a specific machine.
- F: Flow shop.

One-machine environment

- n jobs 1, 2, ..., n.
- $p_j$: amount of time required by job j.
- $r_j$: the release time of job j, the time when job j is available for processing.
- $w_j$: the weight of job j.
- $d_j$: due time of job j; the time by which job j should be completed.
- A schedule S specifies for each job j which $p_j$ units of time are used to process the job.
- $C_j^S$: the completion time of job j under schedule S.
- The makespan of S is $C_{\max}^S = \max_j C_j^S$.
- The average completion time is $\frac{1}{n} \sum_{j=1}^{n} C_j^S$.

One-machine environment (cont'd)

- Average weighted completion time: $\sum_{j=1}^{n} w_j C_j^S$
- Optimality criteria; minimize:
  - the makespan $C_{\max}^S$
  - the average completion time, i.e. $\sum_{j=1}^{n} C_j^S$ up to the constant factor $1/n$
  - the average weighted completion time $\sum_{j=1}^{n} w_j C_j^S$
- $L_j = C_j^S - d_j$: the lateness of job j.
- $L_{\max}^S = \max_{j=1,\dots,n} L_j$: the maximum lateness of any job under schedule S. Another optimality criterion: minimize the maximum lateness.

Priority rules for one-machine environment

Theorem: scheduling jobs according to SPT (shortest processing time) is optimal for $1 \,||\, \sum C_j$.
Theorem: scheduling jobs in non-decreasing order of $p_j / w_j$ is optimal for $1 \,||\, \sum w_j C_j$.

Real-time schedulers

Soft versus hard real-time systems:
- A control system of a nuclear power plant: hard deadlines
- A music system: soft deadlines

Time to extinction: the time until which it makes sense to begin the action.

Earliest deadline first (EDF)

Dynamic scheduling algorithm for real-time operating systems.
When a scheduling event occurs (a task finishes, a new task is released, etc.), the priority queue is searched for the process closest to its deadline. This process is then scheduled for execution next.
EDF is an optimal preemptive scheduling algorithm for uniprocessors, in the following sense: if a collection of independent jobs, each characterized by an arrival time, an execution requirement, and a deadline, can be scheduled (by any algorithm) such that all the jobs complete by their deadlines, then EDF will schedule this collection of jobs such that they all complete by their deadlines.

Schedulability test for Earliest Deadline First

$$U = \sum_{j=1}^{n} \frac{d_j}{p_j} \le 1$$

In this formula $d_j$ is the execution time and $p_j$ the period of process j, as in the table below.

| Process | Execution time | Period |
|---------|----------------|--------|
| P1 | 1 | 8 |
| P2 | 2 | 5 |
| P3 | 4 | 10 |

In this case U = 1/8 + 2/5 + 4/10 = 0.925 = 92.5%, which satisfies the test.
It has been proved that the problem of deciding whether it is possible to schedule a set of periodic processes is NP-hard if the periodic processes use semaphores to enforce mutual exclusion.
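The utilization test above is easy to check mechanically. The following is a minimal sketch, assuming each periodic process is given as an (execution time, period) pair; the function names `edf_utilization` and `edf_schedulable` are hypothetical, and exact fractions are used to avoid rounding issues.

```python
from fractions import Fraction

def edf_utilization(tasks):
    """Sum of execution_time / period over all periodic processes."""
    return sum(Fraction(execution, period) for execution, period in tasks)

def edf_schedulable(tasks):
    """Apply the test U <= 1 from the lecture."""
    return edf_utilization(tasks) <= 1

# (execution time, period) for P1, P2, P3 from the table in the lecture
tasks = [(1, 8), (2, 5), (4, 10)]
u = edf_utilization(tasks)
print(u, float(u), edf_schedulable(tasks))
```

For the three processes of the example the utilization is 37/40, i.e. 92.5%, so the test passes.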