A Behavior-based Methodology for Malware Detection

Student: Hsun-Yi Tsai

Advisor: Dr. Kuo-Chen Wang

2012/04/30

Copyright © 2011, MBL@CS.NCTU

Outline

• Introduction
• Problem Statement
• Sandboxes
• Behavior Rules
• Prototype
• Malicious Degree
• Evaluation
• Conclusion and Future Work
• References

Introduction

• Signature-based detection can fail
– Malware developers can modify their code to evade detection
• Malware and its variants still share the same high-level behaviors
– Malicious behaviors are similar most of the time
• Behavior-based detection
– Detects unknown malware and variants of known malware by analyzing their behaviors

Problem Statement

• Given

– Several sandboxes

– $l$ known malware samples $M = \{M_1, M_2, \ldots, M_l\}$ for training
– $m$ known malware samples $S = \{S_1, S_2, \ldots, S_m\}$ for testing
• Objective
– $n$ behaviors $B = \{B_1, B_2, \ldots, B_n\}$
– $n$ weights $W = \{W_1, W_2, \ldots, W_n\}$
– MD (malicious degree)

Sandboxes

• Online (Web-based)

– GFI Sandbox

– Norman Sandbox

– Anubis Sandbox

• Offline (PC-based)

– Avast Sandbox

– Buster Sandbox Analyzer

Behavior Rules

• Malware Host Behaviors
– Creates Mutex
– Creates Hidden File
– Starts EXE in System
– Checks for Debugger
– Starts EXE in Documents
– Windows/Run Registry Key Set
– Hooks Keyboard
– Modifies Files in System
– Deletes Original Sample
– More than 5 Processes
– Opens Physical Memory
– Deletes Files in System
– Auto Start
• Malware Network Behaviors
– Makes Network Connections
  • DNS Query
  • HTTP Connection
  • File Download
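The 13 host behaviors can be turned into the input vector used later for the malicious degree. The sketch below is only an illustration: the function name `encode_behaviors`, the ordering of the behaviors, and the sample report are assumptions, not part of the original prototype.

```python
# Host behavior names as listed on the slide; the index order is an assumption.
HOST_BEHAVIORS = [
    "Creates Mutex",
    "Creates Hidden File",
    "Starts EXE in System",
    "Checks for Debugger",
    "Starts EXE in Documents",
    "Windows/Run Registry Key Set",
    "Hooks Keyboard",
    "Modifies Files in System",
    "Deletes Original Sample",
    "More than 5 Processes",
    "Opens Physical Memory",
    "Deletes Files in System",
    "Auto Start",
]


def encode_behaviors(observed):
    """Return a 0/1 vector with one entry per host behavior (hypothetical helper)."""
    observed = set(observed)
    unknown = observed - set(HOST_BEHAVIORS)
    if unknown:
        raise ValueError(f"unknown behaviors: {sorted(unknown)}")
    return [1 if name in observed else 0 for name in HOST_BEHAVIORS]


# Made-up sandbox report for a single sample (not real data).
report = ["Creates Mutex", "Hooks Keyboard", "Auto Start"]
x = encode_behaviors(report)
print(x)
```

A binary encoding is the simplest choice here; a real pipeline would first need to map each sandbox's report format onto these behavior names.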

Behavior Rules (Cont.)

Ulrich Bayer et al. [13]

Prototype

Malicious Degree

• Malicious Degree
– Malicious behaviors: $X = \{x_i \mid 1 \le i \le 13\}$
– Weights: $W = \{w_{i,j} \mid 1 \le i \le 13,\ 1 \le j \le 10\} \cup \{w'_k \mid 1 \le k \le 10\}$
– Bias: $B = \{b_j \mid 1 \le j \le 10\} \cup \{b'\}$
– Transfer function: $f(n) = \dfrac{1}{1 + e^{-n}}$
– $MD = f\left(\sum_{j=1}^{10} w'_j \times f\left(\sum_{i=1}^{13} w_{i,j}\, x_i - b_j\right) - b'\right)$
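Read as a feed-forward network with 13 inputs, 10 hidden neurons, and one output, the MD definition can be evaluated as in the sketch below. The function name `malicious_degree` and every parameter value are placeholders chosen for illustration. They are not the trained weights from the slides.

```python
import math

N_INPUTS = 13  # behaviors x_1 .. x_13
N_HIDDEN = 10  # hidden neurons j = 1 .. 10


def f(n):
    """Sigmoid transfer function f(n) = 1 / (1 + e^(-n))."""
    return 1.0 / (1.0 + math.exp(-n))


def malicious_degree(x, w, b, w_out, b_out):
    """MD = f( sum_j w'_j * f( sum_i w[i][j] * x[i] - b[j] ) - b' )."""
    if len(x) != N_INPUTS:
        raise ValueError("expected 13 behavior inputs")
    # Hidden layer: one sigmoid neuron per j, with bias b[j] subtracted.
    hidden = [
        f(sum(w[i][j] * x[i] for i in range(N_INPUTS)) - b[j])
        for j in range(N_HIDDEN)
    ]
    # Output layer: weighted sum of hidden outputs minus the output bias b'.
    return f(sum(w_out[j] * hidden[j] for j in range(N_HIDDEN)) - b_out)


# Placeholder parameters (assumptions, not trained values).
w = [[0.1] * N_HIDDEN for _ in range(N_INPUTS)]
b = [0.0] * N_HIDDEN
w_out = [0.5] * N_HIDDEN
b_out = 2.0

x = [1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1]
md = malicious_degree(x, w, b, w_out, b_out)
print(round(md, 4))
```

Because the outer function is a sigmoid, MD always lies strictly between 0 and 1, which is what makes a single threshold on MD usable for classification.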

Weight Training Module - ANN

• An artificial neural network (ANN) is used to train the weights

Weight Training Module - ANN (Cont.)

• Neuron for ANN hidden layer

Weight Training Module - ANN (Cont.)

• Neuron for ANN output layer

Weight Training Module - ANN (Cont.)

• Delta learning process

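– Mean square error: $E = \frac{1}{2}(d - O)^2$
– $d$: expected target value
– Weight set: $W = \{w_{i,j} \mid 1 \le i \le 13,\ 1 \le j \le 10\} \cup \{w'_k \mid 1 \le k \le 10\}$
– Weight update: $W_{\text{new}} = W_{\text{old}} + \Delta W$, where $\Delta W = -\eta \, \dfrac{\partial E}{\partial W}$ is proportional to the input value $x$
– $\eta$: learning factor; $x$: input value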
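One delta-rule update for a 13-10-1 sigmoid network can be sketched as follows. This assumes that $O$ is the network output, that biases are subtracted as in the MD formula, and that the update is plain gradient descent on $E$. The helper names, the learning factor of 0.5, and the starting weights are all illustrative choices rather than values from the slides.

```python
import math


def f(n):
    """Sigmoid transfer function."""
    return 1.0 / (1.0 + math.exp(-n))


def forward(x, w, b, w_out, b_out):
    """Return (hidden outputs, network output O)."""
    hidden = [
        f(sum(w[i][j] * x[i] for i in range(len(x))) - b[j])
        for j in range(len(b))
    ]
    out = f(sum(w_out[j] * hidden[j] for j in range(len(b))) - b_out)
    return hidden, out


def delta_step(x, d, w, b, w_out, b_out, eta=0.5):
    """One gradient step on E = 0.5 * (d - O)^2.

    Updates w, b and w_out in place; returns (new b_out, E before the update).
    """
    hidden, out = forward(x, w, b, w_out, b_out)
    error = 0.5 * (d - out) ** 2
    # Error terms; the sigmoid derivative is O * (1 - O).
    delta_out = (d - out) * out * (1.0 - out)
    delta_hidden = [
        delta_out * w_out[j] * hidden[j] * (1.0 - hidden[j])
        for j in range(len(b))
    ]
    for j in range(len(b)):
        w_out[j] += eta * delta_out * hidden[j]  # W_new = W_old + eta * delta * input
        b[j] -= eta * delta_hidden[j]            # biases enter with a minus sign
        for i in range(len(x)):
            w[i][j] += eta * delta_hidden[j] * x[i]
    b_out -= eta * delta_out
    return b_out, error


# Hypothetical starting point and a single made-up training sample with target d = 1.
w = [[0.1] * 10 for _ in range(13)]
b = [0.0] * 10
w_out = [0.1] * 10
b_out = 0.0
x = [1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1]

errors = []
for _ in range(50):
    b_out, e = delta_step(x, 1.0, w, b, w_out, b_out)
    errors.append(e)
print(errors[0], errors[-1])
```

Repeating the step on the same sample should shrink the error, which is a quick sanity check that the signs of the updates are right.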

Evaluation – Initial Weights

| Behavior | Weight |
|---|---|
| Creates Mutex | 0.47428571 |
| Creates Hidden File | 0.4038462 |
| Starts EXE in System | 0.371795 |
| Checks for Debugger | 0.3397436 |
| Starts EXE in Documents | 0.2820513 |
| Windows/Run Registry Key Set | 0.26923077 |
| Hooks Keyboard | 0.19871795 |
| Modifies Files in System | 0.16025641 |
| Deletes Original Sample | 0.16025641 |
| More than 5 Processes | 0.16666667 |
| Opens Physical Memory | 0.05769231 |
| Deletes Files in System | 0.05128205 |
| Autorun | 0.24 |
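If the initial weights are taken to be the fraction of samples that exhibit each behavior, they could be computed as below. That reading is an assumption suggested by the "Frequency" weight setting in the evaluation; the slides do not state how the values were derived. The function name and the toy data are hypothetical.

```python
def frequency_weights(samples):
    """Return, per behavior, the fraction of samples in which it was observed.

    `samples` is a list of equal-length 0/1 behavior vectors.
    """
    if not samples:
        raise ValueError("need at least one sample")
    n_behaviors = len(samples[0])
    counts = [0] * n_behaviors
    for vec in samples:
        if len(vec) != n_behaviors:
            raise ValueError("all vectors must have the same length")
        for k, flag in enumerate(vec):
            counts[k] += flag
    return [c / len(samples) for c in counts]


# Toy data with three behaviors and four samples (made up for illustration).
toy_samples = [
    [1, 0, 1],
    [1, 1, 0],
    [0, 0, 0],
    [1, 0, 0],
]
weights = frequency_weights(toy_samples)
print(weights)
```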

Evaluation (Cont.)

• Find the optimal MD value, the one that brings PF and PN close to 0.

[Figure: MD scale with Benign, Ambiguous, and Malicious regions, labeled with Benign Samples and Malicious Samples]

Evaluation (Cont.)

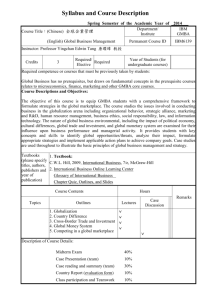

• Training data and testing data

| | Malicious | Benign | Total |
|---|---|---|---|
| Training | 74 | 80 | 154 |
| Testing | 35 | 31 | 66 |

• Threshold of MD value.

[Figure: number of samples (y-axis, 0 to 30) versus MD value (x-axis, 0.001 to 1) for benign and malicious samples; the value 0.4 is annotated on the plot]
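Choosing an MD threshold can be sketched as a sweep over candidate values. The code below assumes that PF and PN stand for the false positive and false negative rates, which the slides do not define, and that a sample is called malicious when its MD is at or above the threshold. The function names and all MD values are made up for illustration.

```python
def error_rates(benign_md, malicious_md, threshold):
    """Return (false positive rate, false negative rate) at a threshold.

    A sample is classified as malicious when its MD >= threshold.
    """
    fp = sum(1 for md in benign_md if md >= threshold)
    fn = sum(1 for md in malicious_md if md < threshold)
    return fp / len(benign_md), fn / len(malicious_md)


def best_threshold(benign_md, malicious_md, candidates):
    """Pick the candidate with the smallest FP rate + FN rate (first one wins ties)."""
    return min(
        candidates,
        key=lambda t: sum(error_rates(benign_md, malicious_md, t)),
    )


# Made-up MD values, not the measurements from the slides.
benign_md = [0.01, 0.02, 0.05, 0.06, 0.25]
malicious_md = [0.45, 0.90, 0.97, 0.98, 0.99]
candidates = [0.1, 0.2, 0.3, 0.4, 0.5]

t = best_threshold(benign_md, malicious_md, candidates)
print(t, error_rates(benign_md, malicious_md, t))
```

Minimizing the sum of the two rates is only one possible criterion; weighting false negatives more heavily would be a reasonable alternative for malware detection.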

Evaluation (Cont.)

• With Creates Hidden File, Windows/Run Registry Key Set, More than 5 Processes, and Deletes Files in System:

| Weights | Accuracy |
|---|---|
| Frequency | 98% |
| 1 | 92.4% |
| 0.5 | 91% |

Evaluation (Cont.)

• Without Creates Hidden File, Windows/Run Registry Key Set, More than 5 Processes, and Deletes Files in System:

| Weights | Accuracy |
|---|---|
| Frequency | 97% |
| 1 | 94% |
| 0.5 | 92.4% |

Conclusion and Future Work

• Conclusion

– Collected several common malware behaviors
– Constructed a Malicious Degree (MD) formula
• Future work
– Add more malware network behaviors
– Classify malware according to its typical behaviors
– Detect unknown malware

References

[1] GFI Sandbox. http://www.gfi.com/malware-analysis-tool

[2] Norman Sandbox. http://www.norman.com/security_center/security_tools

[3] Anubis Sandbox. http://anubis.iseclab.org/

[4] Avast Sandbox. http://www.avast.com/zh-cn/index

[5] Buster Sandbox Analyzer (BSA). http://bsa.isoftware.nl/

[6] Blast's Security. http://www.sacour.cn

[7] VX heaven. http://vx.netlux.org/vl.php

[8] “A malware tool chain: active collection, detection, and analysis,” NBL, National Chiao Tung University.

[9] U. Bayer, I. Habibi, D. Balzarotti, E. Kirda, and C. Kruegel, “A view on current malware behaviors,” Proceedings of the 2nd USENIX Workshop on Large-Scale Exploits and Emergent Threats: botnets, spyware, worms, and more, pp. 1-11, Apr. 22-24, 2009.

[10] U. Bayer, C. Kruegel, and E. Kirda, “TTAnalyze: a tool for analyzing malware,” Proceedings of 15th European Institute for Computer Antivirus Research, Apr. 2006.

[11] P. M. Comparetti, G. Salvaneschi, E. Kirda, C. Kolbitsch, C. Kruegel, and S. Zanero, “Identifying dormant functionality in malware programs,” Proceedings of Security and Privacy (SP), 2010 IEEE Symposium, pp. 61-76, May 16-19, 2010.

[12] M. Egele, C. Kruegel, E. Kirda, H. Yin, and D. Song, “Dynamic spyware analysis,” Proceedings of USENIX Annual Technical Conference, pp. 233-246, Jun. 2007.

[13] J. Kinder, S. Katzenbeisser, C. Schallhart, and H. Veith, “Detecting malicious code by model checking,” Proceedings of the 2nd International Conference on Intrusion and Malware Detection and Vulnerability Assessment (DIMVA’05), pp. 174-187, 2005.

References (Cont.)

[14] W. Liu, P. Ren, K. Liu, and H. X. Duan, “Behavior-based malware analysis and detection,” Proceedings of Complexity and Data Mining (IWCDM), pp. 39-42, Sep. 24-28, 2011.

[15] M. Christodorescu and S. Jha, “Static analysis of executables to detect malicious patterns,” Proceedings of the 12th conference on USENIX Security Symposium, Vol. 12, pp. 169-186, Dec. 10-12, 2006.

[16] A. Moser, C. Kruegel, and E. Kirda, “Exploring multiple execution paths for malware analysis,” Proceedings of 2007 IEEE Symposium on Security and Privacy, pp. 231-245, May 20-23, 2007.

[17] J. Rabek, R. Khazan, S. Lewandowski, and R. Cunningham, “Detection of injected, dynamically generated, and obfuscated malicious code,” Proceedings of the 2003 ACM workshop on Rapid malcode, pp. 76-82, Oct. 27-30, 2003.

[18] A. Sæbjørnsen, J. Willcock, T. Panas, D. Quinlan, and Z. Su, “Detecting code clones in binary executables,” Proceedings of the 18th international symposium on Software testing and analysis, pp. 117-128, 2009.

[19] M. Shankarapani, K. Kancherla, S. Ramamoorthy, R. Movva, and S. Mukkamala, “Kernel machines for malware classification and similarity analysis,” Proceedings of Neural Networks (IJCNN), The 2010 International Joint Conference, pp. 1-6, Jul. 18-23, 2010.

[20] C. Wang, J. Pang, R. Zhao, W. Fu, and X. Liu, “Malware detection based on suspicious behavior identification,” Proceedings of Education Technology and Computer Science, Vol. 2, pp. 198-202, Mar. 7-8, 2009.

[21] C. Willems, T. Holz, and F. Freiling, “Toward automated dynamic malware analysis using CWSandbox,” IEEE Security and Privacy, Vol. 5, No. 2, pp. 32-39, May 20-23, 2007.
