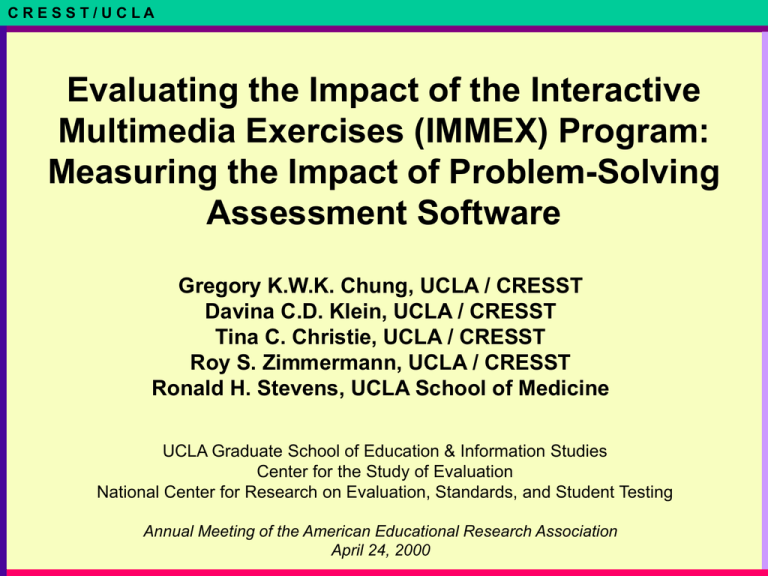

CRESST/UCLA

Evaluating the Impact of the Interactive Multimedia Exercises (IMMEX) Program: Measuring the Impact of Problem-Solving Assessment Software

Gregory K.W.K. Chung, UCLA / CRESST
Davina C.D. Klein, UCLA / CRESST
Tina C. Christie, UCLA / CRESST
Roy S. Zimmermann, UCLA / CRESST
Ronald H. Stevens, UCLA School of Medicine

UCLA Graduate School of Education & Information Studies
Center for the Study of Evaluation
National Center for Research on Evaluation, Standards, and Student Testing

Annual Meeting of the American Educational Research Association
April 24, 2000

Overview
- IMMEX overview
- Evaluation questions, design, and findings
- Focus on barriers to adoption
- Implications for the future

Implementation Context
Los Angeles Unified School District:
- 697,000 students, 41,000 teachers, 790 schools (1998)
- Average class size: 27 (1998-99)
- Limited English Proficiency (LEP): 46% of students (1998-99)
- 2,600 classrooms with Internet access (1998-99)

IMMEX Program Goal
- Improve student learning through routine classroom use of IMMEX assessment technology
- Explicitly link assessment technology with classroom practice, theories of learning, and science content
- Provide aggressive professional development, IMMEX support, and technology support

IMMEX Program: Problem-Solving Assessment Software
- Problem-solving architecture: students are presented with a problem scenario and given access to information, some of it relevant and some irrelevant to solving the problem
- Problem-solving demands are embedded in the design of the information space and across multiple problem sets (e.g., medical diagnosis)
- Performance: number of problems completed, percentage solved
- Process: the pattern of information access yields evidence that a student is using a particular problem-solving strategy (e.g., elimination, evidence vs. conjecture, cause-effect)

IMMEX Program: Theory of Action
- Quality teacher training
- Greater teacher facility with technology
- Individual teacher differences
- Deeper teacher understanding of science content
- Use of IMMEX to assess students
- Greater teacher understanding of students
- Use of IMMEX to instruct students
- Better classroom teaching
- Increased student outcomes

Evaluation Questions
- Implementation: Is the IMMEX software being implemented as intended?
- Impact: How is IMMEX affecting classrooms, teachers, and students?
- Integration: How can IMMEX best be integrated into the regular infrastructure of schooling?

Evaluation Methodology: Design
- Pre-post design
- Years 1 and 2: focus on teacher and classroom impact
- Years 3 and 4: focus on student impact
- Examine impact over time

Evaluation Methodology: Instruments
- Teacher surveys: demographics, teaching practices, attitudes, usage, perceived impact
- Teacher interviews: barriers, integration, teacher characteristics
- Student surveys: demographics, perceived impact, attitudes, strategy use

Evaluation Methodology: Data Collection and Teacher Sample
Data collection:
- Year 1: Spring 99
- Year 2: Fall 99 / Spring 00
- Year 3: Fall 00 / Spring 01
- Year 4: Fall 01 / Spring 02
Teacher sample:
- Year 1: all teachers trained on IMMEX (~240) were sampled; 45 responded to the survey and 9 were interviewed
- Year 2 (Fall 99): all confirmed 1999 IMMEX users (38) were sampled; 18 responded to the survey and 8 were interviewed

Results: Teacher Surveys
- High satisfaction with participation in the IMMEX program
- Usage: once a month was considered high; more commonly, teachers used IMMEX a few times (fewer than 7) per school year
- Implementation: IMMEX was used to assess students' problem solving and to give students practice integrating their knowledge
- Perceived impact: use of technology, exchange of ideas with colleagues, teaching effectiveness

Results: Teacher Interviews
In general, IMMEX teachers have a very strong commitment to teaching and student learning:
- Passionate about their work and committed to their students and the profession
- Engaged in a variety of school and professional activities
- Open to new teaching methods
- Strong belief in the pedagogical value of IMMEX

In general, IMMEX teachers are willing to commit the time and effort required to implement IMMEX:
- Able to deal with the complex logistics of implementation
- Highly motivated, organized self-starters

General barriers:
- Lack of computer skills
- Lack of computers
- Classroom challenges

IMMEX-specific barriers:
- User interface
- Lack of problem sets / weak link to curriculum
- Time required to implement IMMEX in the classroom
- Time required to author IMMEX problem sets

Addressing Barriers
Barrier: how addressed
- Computer-related: basic computer skills instruction, rolling labs, on-demand technical support, Web version
- Implementation: full-service model
- Problem sets: more than 100 problem sets, authoring capability, ongoing problem-set development
- Authoring and curriculum: finely tuned development workshops, stipends, documentation, curriculum guides
- Staff: experienced, dedicated, and focused, with teaching and research experience

Implications: Short-Term
- No widespread adoption by teachers:
  - too many barriers for too many teachers
  - only highly motivated teachers are likely to adopt
  - the need for a full-service model is itself evidence of how difficult adoption is
- Learn from the "A-team": high-usage teachers represent best practices
- Establish a deployment infrastructure

Implications: Long-Term
- Problem-solving instruction and assessment will remain relevant
- Computer barriers: lowered (computer access, skills)
- Time-to-implement barriers: lowered (problem-set expansion, Web access, automated scoring and reporting)
- Time-to-author barriers: uncertain (the mechanics of authoring can be reduced and problem sets expanded, but the conceptual development of problem sets remains a constant)

Contact Information
- For more information about the evaluation: Greg Chung (greg@ucla.edu), www.cse.ucla.edu
- For more information about IMMEX: Ron Stevens (immex_ron@hotmail.com), www.immex.ucla.edu

IMMEX Program
- First used for medical school examinations in 1987
- First K-12 deployment (content development, teacher training, high school use) between 1990 and 1992

IMMEX Software: Search Path Maps
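The performance and process measures described for IMMEX (number completed, percentage solved, pattern of information access) can be illustrated with a small sketch. This is a hypothetical illustration, not IMMEX code: the `Attempt` log record, the item names, the choice to compute percentage solved over completed attempts, and the `relevant_share` measure are all assumptions. It also assumes that a search path map links each information item to the next one the student opened, so the map's links can be counted as item-to-item transitions.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Attempt:
    """One student's attempt at one problem (hypothetical log record, not IMMEX's format)."""
    student: str
    items_accessed: list[str]  # information items, in the order the student opened them
    completed: bool            # the student submitted a final answer
    solved: bool               # the final answer was correct


def performance_summary(attempts: list[Attempt]) -> tuple[int, float]:
    """Return (# completed, % solved); % solved is taken over completed attempts (an assumption)."""
    completed = [a for a in attempts if a.completed]
    if not completed:
        return 0, 0.0
    n_solved = sum(1 for a in completed if a.solved)
    return len(completed), 100.0 * n_solved / len(completed)


def search_path_edges(attempt: Attempt) -> Counter:
    """Count transitions between consecutively opened items: the links a path map would draw."""
    path = attempt.items_accessed
    return Counter(zip(path, path[1:]))


def relevant_share(attempt: Attempt, relevant_items: set[str]) -> float:
    """Fraction of distinct opened items that the problem author marked as relevant (hypothetical measure)."""
    opened = set(attempt.items_accessed)
    return len(opened & relevant_items) / len(opened) if opened else 0.0


# Hypothetical medical-diagnosis-style log; item names are illustrative only.
demo_attempts = [
    Attempt("s1", ["history", "exam", "lab_test", "history"], completed=True, solved=True),
    Attempt("s2", ["xray", "lab_test"], completed=True, solved=False),
    Attempt("s3", ["history"], completed=False, solved=False),
]

if __name__ == "__main__":
    print(performance_summary(demo_attempts))  # (2, 50.0)
    print(search_path_edges(demo_attempts[0]))
    print(relevant_share(demo_attempts[0], {"history", "lab_test"}))
```

The sketch stops at raw transitions. Labeling a path with a strategy such as elimination or cause-effect would require rules defined for each problem set, which the sketch does not attempt.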