Selection of predictor variables


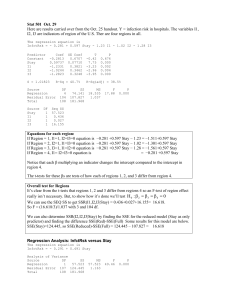

Model selection
Stepwise regression

Statement of problem
• A common problem is that there is a large set of candidate predictor variables.
• (Note: The examples herein are really not that large.)
• The goal is to choose a small subset from the larger set so that the resulting regression model is simple, yet has good predictive ability.

Example: Cement data
• Response y: heat evolved during hardening of cement, in calories per gram
• Predictor x1: % of tricalcium aluminate
• Predictor x2: % of tricalcium silicate
• Predictor x3: % of tetracalcium alumino ferrite
• Predictor x4: % of dicalcium silicate

Example: Cement data
[Figure: scatterplot matrix of y, x1, x2, x3, and x4]

Two basic methods of selecting predictors
• Stepwise regression: Enter and remove predictors, in a stepwise manner, until there is no justifiable reason to enter or remove more.
• Best subsets regression: Select the subset of predictors that does the best at meeting some well-defined objective criterion.

Stepwise regression: the idea
• Start with no predictors in the “stepwise model.”
• At each step, enter or remove a predictor based on partial F-tests (equivalently, t-tests, since each test concerns a single coefficient).
• Stop when no more predictors can be justifiably entered or removed from the stepwise model.

Stepwise regression: Preliminary steps
1. Specify an Alpha-to-Enter significance level (here αE = 0.15).
2. Specify an Alpha-to-Remove significance level (here αR = 0.15).

Stepwise regression: Step #1
1. Fit each of the one-predictor models, that is, regress y on x1, regress y on x2, …, regress y on x_{p-1}.
2. The first predictor put in the stepwise model is the predictor that has the smallest t-test P-value, provided it is below αE = 0.15.
3. If no P-value < 0.15, stop.

Stepwise regression: Step #2
1. Suppose x1 was the “best” one predictor.
2. Fit each of the two-predictor models with x1 in the model, that is, regress y on (x1, x2), regress y on (x1, x3), …, and regress y on (x1, x_{p-1}).
3. The second predictor put in the stepwise model is the predictor that has the smallest t-test P-value, provided it is below αE = 0.15.
4. If no P-value < 0.15, stop.

Stepwise regression: Step #2 (continued)
1. Suppose x2 was the “best” second predictor.
2. Step back and check the P-value for testing β1 = 0. If that P-value is no longer significant (above αR = 0.15), remove x1 from the stepwise model.

Stepwise regression: Step #3
1. Suppose both x1 and x2 made it into the two-predictor stepwise model.
2. Fit each of the three-predictor models with x1 and x2 in the model, that is, regress y on (x1, x2, x3), regress y on (x1, x2, x4), …, and regress y on (x1, x2, x_{p-1}).

Stepwise regression: Step #3 (continued)
1. The third predictor put in the stepwise model is the predictor that has the smallest t-test P-value, provided it is below αE = 0.15.
2. If no P-value < 0.15, stop.
3. Step back and check the P-values for testing β1 = 0 and β2 = 0. If either P-value is no longer significant (above αR = 0.15), remove that predictor from the stepwise model.

Stepwise regression: Stopping the procedure
• The procedure is stopped when adding an additional predictor does not yield a t-test P-value below αE = 0.15.
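The enter-and-remove procedure described above can be sketched in Python. This is a minimal illustration under stated assumptions, not Minitab's implementation: the helper names `t_pvalues` and `stepwise` are hypothetical, and the data values are the commonly published Hald cement data, typed in here as an assumption because the slides show only the fitted output, not the raw observations.

```python
import numpy as np
from scipy import stats

def t_pvalues(X, y):
    """Two-sided t-test P-values for the columns of X in an OLS fit with intercept."""
    n = len(y)
    A = np.column_stack([np.ones(n), X])           # add the intercept column
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)   # least-squares coefficients
    resid = y - A @ beta
    df = n - A.shape[1]                            # error degrees of freedom
    sigma2 = resid @ resid / df                    # mean squared error
    se = np.sqrt(np.diag(sigma2 * np.linalg.inv(A.T @ A)))
    p = 2 * stats.t.sf(np.abs(beta / se), df)
    return p[1:]                                   # drop the intercept's P-value

def stepwise(X, y, names, alpha_enter=0.15, alpha_remove=0.15, max_steps=100):
    """Stepwise selection: enter the best candidate, then re-check earlier entries."""
    selected = []                                  # column indices in the model
    for _ in range(max_steps):                     # guard against cycling
        candidates = [j for j in range(X.shape[1]) if j not in selected]
        if not candidates:
            break
        # P-value of each candidate when added to the current model.
        entry_p = {j: t_pvalues(X[:, selected + [j]], y)[-1] for j in candidates}
        best = min(entry_p, key=entry_p.get)
        if entry_p[best] >= alpha_enter:
            break                                  # nothing justifies entry: stop
        selected.append(best)
        # Step back: drop any predictor whose P-value now exceeds alpha_remove.
        while len(selected) > 1:
            p = t_pvalues(X[:, selected], y)
            worst = int(np.argmax(p))
            if p[worst] <= alpha_remove:
                break
            del selected[worst]
    return [names[j] for j in selected]

# Assumed data: the commonly published Hald cement data (13 observations).
names = ["x1", "x2", "x3", "x4"]
X = np.array([
    [7, 26, 6, 60], [1, 29, 15, 52], [11, 56, 8, 20], [11, 31, 8, 47],
    [7, 52, 6, 33], [11, 55, 9, 22], [3, 71, 17, 6], [1, 31, 22, 44],
    [2, 54, 18, 22], [21, 47, 4, 26], [1, 40, 23, 34], [11, 66, 9, 12],
    [10, 68, 8, 12],
], dtype=float)
y = np.array([78.5, 74.3, 104.3, 87.6, 95.9, 109.2, 102.7,
              72.5, 93.1, 115.9, 83.8, 113.3, 109.4])

result = stepwise(X, y, names)
print(result)
```

In the sketch a predictor is removed only when its P-value strictly exceeds `alpha_remove`, and the removal check repeats until every remaining predictor passes. The `max_steps` guard is a design choice of this sketch: with αE ≤ αR a just-entered predictor cannot be removed immediately, but a cap keeps the loop finite under other settings.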
Example: Cement data
[Figure: scatterplot matrix of y, x1, x2, x3, and x4 (repeated)]

Step #1: one-predictor models

| Model | Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|---|
| y on x1 | Constant | 81.479 | 4.927 | 16.54 | 0.000 |
| | x1 | 1.8687 | 0.5264 | 3.55 | 0.005 |
| y on x2 | Constant | 57.424 | 8.491 | 6.76 | 0.000 |
| | x2 | 0.7891 | 0.1684 | 4.69 | 0.001 |
| y on x3 | Constant | 110.203 | 7.948 | 13.87 | 0.000 |
| | x3 | -1.2558 | 0.5984 | -2.10 | 0.060 |
| y on x4 | Constant | 117.568 | 5.262 | 22.34 | 0.000 |
| | x4 | -0.7382 | 0.1546 | -4.77 | 0.001 |

Step #2: two-predictor models containing x4

| Model | Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|---|
| y on (x4, x1) | Constant | 103.097 | 2.124 | 48.54 | 0.000 |
| | x4 | -0.61395 | 0.04864 | -12.62 | 0.000 |
| | x1 | 1.4400 | 0.1384 | 10.40 | 0.000 |
| y on (x4, x2) | Constant | 94.16 | 56.63 | 1.66 | 0.127 |
| | x4 | -0.4569 | 0.6960 | -0.66 | 0.526 |
| | x2 | 0.3109 | 0.7486 | 0.42 | 0.687 |
| y on (x4, x3) | Constant | 131.282 | 3.275 | 40.09 | 0.000 |
| | x4 | -0.72460 | 0.07233 | -10.02 | 0.000 |
| | x3 | -1.1999 | 0.1890 | -6.35 | 0.000 |

Step #3: three-predictor models containing x4 and x1

| Model | Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|---|
| y on (x4, x1, x2) | Constant | 71.65 | 14.14 | 5.07 | 0.001 |
| | x4 | -0.2365 | 0.1733 | -1.37 | 0.205 |
| | x1 | 1.4519 | 0.1170 | 12.41 | 0.000 |
| | x2 | 0.4161 | 0.1856 | 2.24 | 0.052 |
| y on (x4, x1, x3) | Constant | 111.684 | 4.562 | 24.48 | 0.000 |
| | x4 | -0.64280 | 0.04454 | -14.43 | 0.000 |
| | x1 | 1.0519 | 0.2237 | 4.70 | 0.001 |
| | x3 | -0.4100 | 0.1992 | -2.06 | 0.070 |

Step #3 (continued): x2 enters, and x4 is then removed because its P-value (0.205) is above αR = 0.15

| Model | Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|---|
| y on (x1, x2) | Constant | 52.577 | 2.286 | 23.00 | 0.000 |
| | x1 | 1.4683 | 0.1213 | 12.10 | 0.000 |
| | x2 | 0.66225 | 0.04585 | 14.44 | 0.000 |

Step #4: three-predictor models containing x1 and x2

| Model | Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|---|
| y on (x1, x2, x4) | Constant | 71.65 | 14.14 | 5.07 | 0.001 |
| | x1 | 1.4519 | 0.1170 | 12.41 | 0.000 |
| | x2 | 0.4161 | 0.1856 | 2.24 | 0.052 |
| | x4 | -0.2365 | 0.1733 | -1.37 | 0.205 |
| y on (x1, x2, x3) | Constant | 48.194 | 3.913 | 12.32 | 0.000 |
| | x1 | 1.6959 | 0.2046 | 8.29 | 0.000 |
| | x2 | 0.65691 | 0.04423 | 14.85 | 0.000 |
| | x3 | 0.2500 | 0.1847 | 1.35 | 0.209 |

Neither candidate has a P-value below αE = 0.15, so the procedure stops with the model containing x1 and x2 (the y on (x1, x2) fit shown above).

Stepwise Regression: y versus x1, x2, x3, x4
Alpha-to-Enter: 0.15 Alpha-to-Remove: 0.15
Response is y on 4 predictors, with N = 13

| Step | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| Constant | 117.57 | 103.10 | 71.65 | 52.58 |
| x4 | -0.738 | -0.614 | -0.237 | |
| T-Value | -4.77 | -12.62 | -1.37 | |
| P-Value | 0.001 | 0.000 | 0.205 | |
| x1 | | 1.44 | 1.45 | 1.47 |
| T-Value | | 10.40 | 12.41 | 12.10 |
| P-Value | | 0.000 | 0.000 | 0.000 |
| x2 | | | 0.416 | 0.662 |
| T-Value | | | 2.24 | 14.44 |
| P-Value | | | 0.052 | 0.000 |
| S | 8.96 | 2.73 | 2.31 | 2.41 |
| R-Sq | 67.45 | 97.25 | 98.23 | 97.87 |
| R-Sq(adj) | 64.50 | 96.70 | 97.64 | 97.44 |
| C-p | 138.7 | 5.5 | 3.0 | 2.7 |

Caution about stepwise regression!
• Do not jump to the conclusion …
– that all the important predictor variables for predicting y have been identified, or
– that all the unimportant predictor variables have been eliminated.

Caution about stepwise regression!
• Many t-tests for βk = 0 are conducted in a stepwise regression procedure.
• The probability is high …
– that we included some unimportant predictors
– that we excluded some important predictors

Drawbacks of stepwise regression
• The final model is not guaranteed to be optimal in any specified sense.
• The procedure yields a single final model, although in practice there are often several equally good models.
• It doesn’t take into account a researcher’s knowledge about the predictors.

Example: Modeling PIQ
[Figure: scatterplot matrix of PIQ, MRI, Height, and Weight]

Stepwise Regression: PIQ versus MRI, Height, Weight
Alpha-to-Enter: 0.15 Alpha-to-Remove: 0.15
Response is PIQ on 3 predictors, with N = 38

| Step | 1 | 2 |
|---|---|---|
| Constant | 4.652 | 111.276 |
| MRI | 1.18 | 2.06 |
| T-Value | 2.45 | 3.77 |
| P-Value | 0.019 | 0.001 |
| Height | | -2.73 |
| T-Value | | -2.75 |
| P-Value | | 0.009 |
| S | 21.2 | 19.5 |
| R-Sq | 14.27 | 29.49 |
| R-Sq(adj) | 11.89 | 25.46 |
| C-p | 7.3 | 2.0 |

The regression equation is
PIQ = 111 + 2.06 MRI - 2.73 Height

| Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|
| Constant | 111.28 | 55.87 | 1.99 | 0.054 |
| MRI | 2.0606 | 0.5466 | 3.77 | 0.001 |
| Height | -2.7299 | 0.9932 | -2.75 | 0.009 |

S = 19.51 R-Sq = 29.5% R-Sq(adj) = 25.5%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 2 | 5572.7 | 2786.4 | 7.32 | 0.002 |
| Error | 35 | 13321.8 | 380.6 | | |
| Total | 37 | 18894.6 | | | |

| Source | DF | Seq SS |
|---|---|---|
| MRI | 1 | 2697.1 |
| Height | 1 | 2875.6 |

Example: Modeling BP
[Figure: scatterplot matrix of BP, Age, Weight, BSA, Duration, Pulse, and Stress]

Stepwise Regression: BP versus Age, Weight, BSA, Duration, Pulse, Stress
Alpha-to-Enter: 0.15 Alpha-to-Remove: 0.15
Response is BP on 6 predictors, with N = 20

| Step | 1 | 2 | 3 |
|---|---|---|---|
| Constant | 2.205 | -16.579 | -13.667 |
| Weight | 1.201 | 1.033 | 0.906 |
| T-Value | 12.92 | 33.15 | 18.49 |
| P-Value | 0.000 | 0.000 | 0.000 |
| Age | | 0.708 | 0.702 |
| T-Value | | 13.23 | 15.96 |
| P-Value | | 0.000 | 0.000 |
| BSA | | | 4.6 |
| T-Value | | | 3.04 |
| P-Value | | | 0.008 |
| S | 1.74 | 0.533 | 0.437 |
| R-Sq | 90.26 | 99.14 | 99.45 |
| R-Sq(adj) | 89.72 | 99.04 | 99.35 |
| C-p | 312.8 | 15.1 | 6.4 |

The regression equation is
BP = - 13.7 + 0.702 Age + 0.906 Weight + 4.63 BSA

| Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|
| Constant | -13.667 | 2.647 | -5.16 | 0.000 |
| Age | 0.70162 | 0.04396 | 15.96 | 0.000 |
| Weight | 0.90582 | 0.04899 | 18.49 | 0.000 |
| BSA | 4.627 | 1.521 | 3.04 | 0.008 |

S = 0.4370 R-Sq = 99.5% R-Sq(adj) = 99.4%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 3 | 556.94 | 185.65 | 971.93 | 0.000 |
| Error | 16 | 3.06 | 0.19 | | |
| Total | 19 | 560.00 | | | |

| Source | DF | Seq SS |
|---|---|---|
| Age | 1 | 243.27 |
| Weight | 1 | 311.91 |
| BSA | 1 | 1.77 |

Stepwise regression in Minitab
• Stat >> Regression >> Stepwise …
• Specify the response and all possible predictors.
• If desired, specify predictors that must be included in every model. (This is where the researcher’s knowledge helps!)
• Select OK. Results appear in the session window.
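As an aid to reading the session-window output, the summary statistics in the PIQ example can be recomputed from its analysis-of-variance table. The sketch below uses only the rounded sums of squares and degrees of freedom printed in that output. The variable names are illustrative, and small discrepancies in the last digit are to be expected because Minitab works from unrounded values.

```python
import math

# Values copied from the PIQ analysis-of-variance table (rounded as printed).
ss_reg, ss_err, ss_tot = 5572.7, 13321.8, 18894.6
df_reg, df_err, df_tot = 2, 35, 37

ms_reg = ss_reg / df_reg                  # regression mean square
ms_err = ss_err / df_err                  # error mean square (MSE)

f_stat = ms_reg / ms_err                  # overall F statistic
s = math.sqrt(ms_err)                     # S, the residual standard error
r_sq = ss_reg / ss_tot                    # R-Sq as a proportion
r_sq_adj = 1 - ms_err / (ss_tot / df_tot) # R-Sq(adj) as a proportion

print(f"F = {f_stat:.2f}, S = {s:.2f}")
print(f"R-Sq = {100 * r_sq:.2f}%, R-Sq(adj) = {100 * r_sq_adj:.2f}%")
```

The same four formulas apply to the BP output. There the printed F statistic differs slightly from the value recomputed this way, again because the table entries are rounded.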